Virtualization and Cloud Performance Testing

Today we talk about testing the performance of cloud services and virtualization systems, and draw some useful conclusions. But before we get to benchmarking itself, let's consider how to test properly in a virtual environment. The task has its own pitfalls and its own proven methods.

Most people who test a virtual environment for the first time do it incorrectly, so much so that VMware added a clause to its end-user license agreement prohibiting publication of such test results without VMware's consent. The reason is that VMware insists on reviewing the test methodology first.

Indeed, what is the first approach that comes to mind? We create a virtual machine, install popular benchmarking software for disk, memory, processor and video card, and compare the results with, say, a physical system or another virtual machine.

This approach, with some reservations, is legitimate for a laptop or a workstation, where there is almost always just one virtual machine and you want to get the most out of it, for example to run a game or an office application without lag.

Alas, for servers, which host many virtual machines, this technique is completely useless, because almost no conclusions can be drawn from it.

Why?

First, nobody uses a server to run a single virtual machine; there are almost always many of them. If, for example, one of them gets high performance at the expense of another (quite possible with a poorly functioning task scheduler), the platform is fundamentally bad, yet the test will not show it!

Second, a virtual machine on a server almost always has limited resources: not 90% of the server, but often only a few percent. In that case, two different virtualization platforms running one, five or ten virtual machines can show identical results. Does that mean the systems are equally good or bad? Not necessarily! Different solutions can manage memory, schedule CPU time or virtualize disk operations in different ways, and as long as the server has free resources, the difference between platforms will not be noticeable. But resources always run out, because the whole point of virtualization is to use them to the maximum. The solution that runs out of them sooner will show worse results in real life, and the method described above cannot reveal that.

Third, every hypervisor has its own “Achilles' heels”: specific load patterns under which it performs especially badly. Depending on how heavily a test exercises such patterns, the results may vary significantly. It is easy to choose or write a test that shows the “desired” results, good or bad; it is much harder to design one that produces useful and objective results.

How will we test, gentlemen?

However, virtualization on Intel architecture is almost 20 years old, and for people working in this field the topic of benchmarking is far from new. Over the years, a certain “gold standard” for such testing has emerged. Omitting some details, the approach can be formulated as follows:

• Create a system of several virtual machines running typical server applications (usually a database server, a server for some heavy Java application, a mail server, a file server, etc.)

• Obtain an aggregate score from these applications that characterizes the performance of each such system.

• As more systems of the same kind are added, sum their scores.

• Evaluate the solution by its total score on otherwise equal configurations (where the only difference is the virtualization system itself).
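The scoring steps above can be sketched roughly as follows. This is only an illustration: the function names, the normalization against reference scores, and the use of a geometric mean to combine applications are our assumptions, not the formula of any particular benchmark.

```python
from statistics import geometric_mean


def tile_score(app_scores, reference_scores):
    """Combine the per-application results of one group of VMs into a single score.

    Each application's result is divided by a reference value so that different
    units (transactions, messages, files) become comparable ratios.
    The geometric mean is an assumed way of combining them.
    """
    ratios = [app_scores[name] / reference_scores[name] for name in reference_scores]
    return geometric_mean(ratios)


def platform_score(tiles, reference_scores):
    """Sum the scores of all groups of VMs running on the same host."""
    return sum(tile_score(tile, reference_scores) for tile in tiles)
```

Two platforms would then be compared by `platform_score` on identical hardware with an identical set of groups, so that the virtualization system is the only variable.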

Such testing certainly does not answer every question. The set of reference workloads can differ greatly from what a customer actually needs, and real workloads can give very different results (recall the Achilles' heels of virtualization tools). Still, it is much better than the head-on approach we discussed at the very beginning.

At Virtuozzo, we mainly deal with cloud services and know what customers are thinking about, not just service providers (who choose a virtualization platform vendor). Customers often do not care which virtualization system is used, since all that matters to them is the performance of the virtual server they bought. How that is achieved, whether by a faster hypervisor, faster hardware, or simply by placing fewer clients on each server, makes no difference to the customer.

From the customer's point of view, the “desktop” approach is legitimate: the processor, disk and other abstract benchmark scores that such tests measure show whether a purchased server is worth its money. So the service provider needs one thing, and the consumer another.

Golden mean?

Not long ago, we started a small project with our partner company Cloud Spectator to find out whether it is possible to build a test that evaluates server virtualization from the customer's point of view. We believe we have found an approach that, if not ideal, is at least legitimate.

For reference: Cloud Spectator tests the performance of cloud servers, essentially by the "desktop" method: it runs tests such as Geekbench and fio inside a single virtual machine and collects the results over a long period (for example, a full 24 hours on a working day). You can read a similar report, in which one of the cloud services from 1&1 showed the best performance.

Our experiment, in which we tested two different virtualization platforms with a similar method, gave interesting results. First we had to bring each test server up to a "production load" (let's call this load "ballast"). Its only purpose is to load both servers equally, creating “all other things being equal” conditions.

The servers themselves were fairly typical for modern providers, not top of the range: the goal was not maximum figures but data that can be compared with each other.

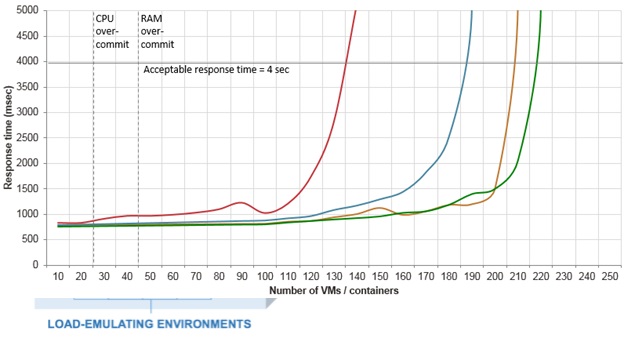

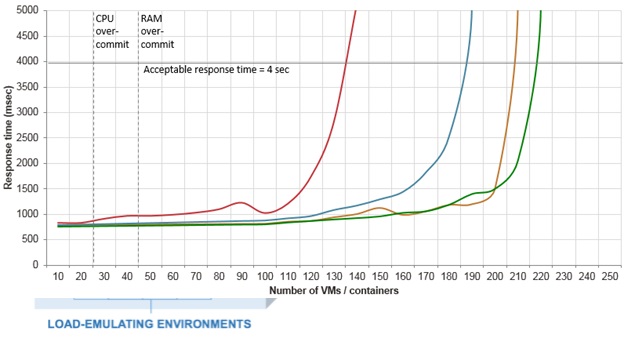

To create the same ballast load on both servers, we used another test that we had run before. It emulates a web server receiving client requests. We usually use it to measure the maximum achievable density of virtual servers per physical host: we add more and more virtual servers while measuring the response time, and stop when the 95th percentile exceeds 4 seconds, taking that as the maximum density.
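The density search described above might look roughly like this. It is a minimal sketch: `measure_response_times` is a hypothetical callback standing in for the real load generator, and the nearest-rank percentile is just one common definition, not necessarily the one the original test uses.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def max_density(measure_response_times, limit_seconds=4.0, max_servers=1000):
    """Add virtual servers one by one until the 95th percentile exceeds the limit.

    measure_response_times(n) is a hypothetical callback that runs the web
    workload against n virtual servers and returns response times in seconds.
    Returns the largest n that still stayed within the limit.
    """
    density = 0
    for n in range(1, max_servers + 1):
        if percentile(measure_response_times(n), 95) > limit_seconds:
            break
        density = n
    return density
```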

Here is an example of the graphs that this test builds for different virtualization systems:

But this time we decided to use the test differently: fix the number of virtual servers and vary the number of requests per second.

In preparation, we created the same number of such virtual servers (50) on each host and loaded them equally from several clients, gradually increasing the rate of incoming requests. When CPU utilization on both servers approached 100%, we stopped and fixed the ballast load at that level (the rationale being that most providers want to load a server to almost 100%, but without customers suffering).

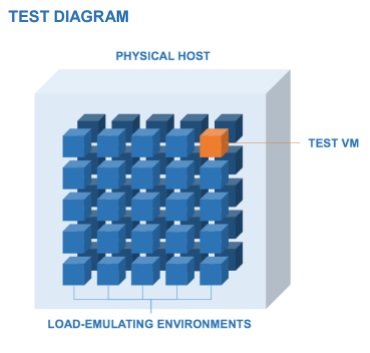

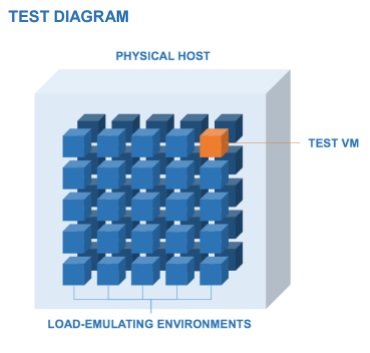
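The ballast calibration can be pictured as a simple ramp. The sketch below is an assumption-laden illustration: `apply_rate_and_read_cpu` is a hypothetical callback, and the 95% target, starting rate and step size are placeholder values, since the text only says utilization was "close to 100%".

```python
def find_ballast_rate(apply_rate_and_read_cpu, start_rps=10, step_rps=5,
                      target_cpu=0.95, max_rps=10_000):
    """Raise the request rate step by step until every host reaches the CPU target.

    apply_rate_and_read_cpu(rate) is a hypothetical callback that drives all
    hosts at `rate` requests per second and returns their CPU utilization as
    a list of fractions between 0.0 and 1.0, one per host.
    """
    rate = start_rps
    while rate <= max_rps:
        utilizations = apply_rate_and_read_cpu(rate)
        if all(cpu >= target_cpu for cpu in utilizations):
            return rate  # fix the ballast load at this level
        rate += step_rps
    raise RuntimeError("CPU target not reached within max_rps")
```

Waiting until all hosts reach the target, rather than the first one, mirrors the description that both servers had to show near-full utilization before the load was fixed.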

Next, we created a 51st server on each host and handed it over to Cloud Spectator to test the “customer's view of performance”. The test bench looked like this:

As you may have guessed, our virtualization system won (the full report is available at this link), but that is not the point of this post. The main thing is that we now have a technique that lets a cloud service provider evaluate how changing one system parameter, all other things being equal, affects the level of performance its customers receive.

Interesting Facts…

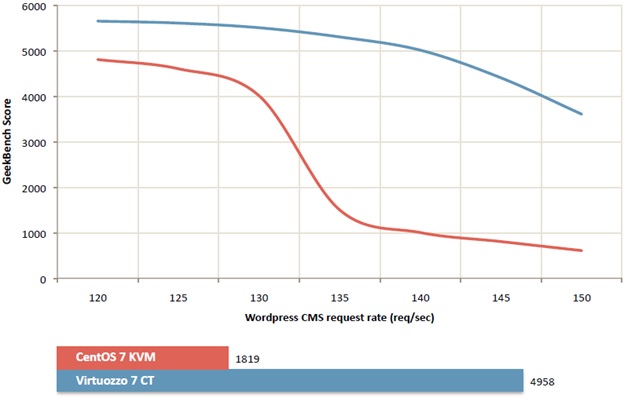

1. Performance may drop dramatically

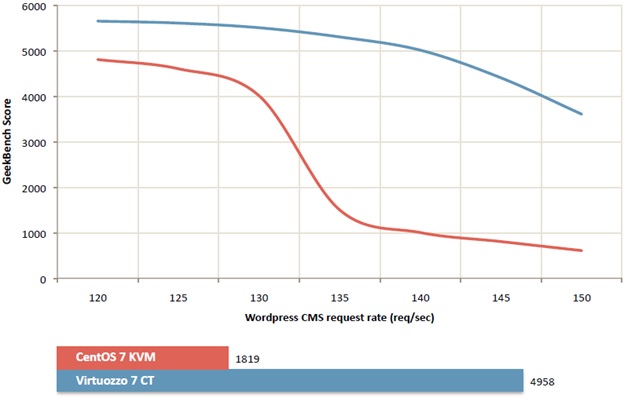

During the test we found out some interesting facts. For example, a small change in the “ballast” load can cause a severe performance collapse in the test client. And the change really is small: an increase of about 10-15% makes the test results deteriorate significantly, because there is a load limit beyond which performance collapses. Here is a graph of Geekbench performance that we recorded while raising the ballast load.

As you can see, when the ballast load rises from about 125 to about 135 requests per second, the performance of the tested environment on the platform that gave up first drops several-fold. Such a drop cannot be reproduced by raising the load on one or a few clients: the resource isolation of the virtualization layer will not let them consume all the resources. But if the load rises on many or all clients at the same time, such a drop is quite possible.

Hence the conclusion: if you use a cloud server and your website slows down at certain hours, your provider may have placed too many clients on one server, and this simply went unnoticed until now.
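To spot such a cliff in your own measurements, you can scan benchmark scores taken at increasing ballast levels. The sketch below is illustrative only: the function name and the "twofold drop" threshold are our assumptions, not part of the Cloud Spectator methodology.

```python
def find_performance_cliff(samples, drop_factor=2.0):
    """Find the first step between adjacent load levels where the score collapses.

    samples: iterable of (ballast_requests_per_second, benchmark_score) pairs.
    Returns (load_before, load_after) for the first adjacent pair where the
    score falls by at least drop_factor, or None if there is no such step.
    """
    ordered = sorted(samples)
    for (load_a, score_a), (load_b, score_b) in zip(ordered, ordered[1:]):
        # A non-positive score after a positive one also counts as a collapse.
        if score_a > 0 and (score_b <= 0 or score_a / score_b >= drop_factor):
            return load_a, load_b
    return None
```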

2. Want performance? It must be constantly measured!

This should be done both by the provider, who wants its customers not to complain, and by the customers themselves. In real life, the load on a physical server varies with the time of day, so Cloud Spectator usually tests a server continuously for 24 hours to cover the full daily cycle. For a one-off test this may be enough (unless, of course, those 24 hours fall on a weekend), but in real life the situation can change over a longer period. Your virtual server may be among the first on a physical server, and everything will “fly” until servers for other clients are created alongside it. There may be “empty” cloud servers around you that will fill up with real workloads in a month or two. Finally, performance may be sufficient only until the pre-holiday online shopping season arrives.

3. And finally: “fast and cheap” does not exist.

Equipment, network, data centers, electricity: everything costs money. "Standard" cost optimizations, such as using hardware with the best performance-to-price ratio, are applied by almost all providers, but there is not much left to squeeze out. The only way to sell services below the market price without operating at a loss is to run more clients on one server. But that always comes at the expense of performance, either now or in the near future. So if cloud service performance matters to you, price should not be the only factor in choosing a service provider.
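If you collect benchmark scores around the clock, even a very simple summary shows when your server is at its worst. A minimal sketch, assuming samples arrive as (hour of day, score) pairs; the function names are ours:

```python
from collections import defaultdict
from statistics import median


def hourly_medians(samples):
    """Group (hour_of_day, score) samples and return the median score for each hour."""
    by_hour = defaultdict(list)
    for hour, score in samples:
        by_hour[hour].append(score)
    return {hour: median(scores) for hour, scores in sorted(by_hour.items())}


def worst_hour(samples):
    """Return the hour of day with the lowest median score."""
    medians = hourly_medians(samples)
    return min(medians, key=medians.get)
```

Repeating the same summary every few weeks makes it visible when neighbors have filled up the host, which a single 24-hour run cannot show.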

Test server configuration:

CPU: 2 x Intel Xeon E5-2620

RAM: 64GB (4 x 16GB DDR4-2133 ECC REG)

Storage: LSI 9271-8i (8-port SAS2, 1GB) RAID0 over 8 x HGST 450GB 15K RPM

Source: https://habr.com/ru/post/323162/