Will voxels become the next breakthrough technology?

We talked to the awesome Atomontage developers to find out whether voxels could make a comeback and beat the pixels.

Voxel development

Branislav: In 2000-2002 I took part in European demoscene competitions. I wrote several 256-byte demos (also called intros) under the nickname Silique / Bizzare Devs (see “Njufnjuf”, “Oxlpka”, “I like ya, Tweety” and “Comatose”). Each intro rendered real-time voxel or point-cloud graphics. Both voxels and point clouds are examples of sampled geometry.

The intros did their job in just 100 processor instructions, such as ADD, MUL, STOSB, PUSH and the like. By the very nature of this kind of program, dozens of those instructions went into setup rather than into generating the graphics themselves. Even so, the remaining 50-plus instructions, essentially elementary arithmetic and memory operations, were enough to produce quite beautiful moving 3D graphics in real time. All of these 256-byte intros took first to third place. This made me realize that if such 3D graphics could be created without polygons, much more could be achieved in games and other applications using the same principle: sampled geometry instead of polygonal meshes. The key is simplicity. I realized that the then-dominant paradigm, built on a complex and fundamentally limited (non-volumetric) data representation, was already about to hit the ceiling of what it could do. In other words, it was the right time to try this “new”, simpler paradigm: volumetric sampled geometry.

Dan: While still in high school in Sweden, I started programming a side-scrolling 2D engine, which grew into the indie game “Cortex Command”. It looked like Worms or Liero, but with more real-time gameplay and RTS elements. The game also simulated the different materials of every terrain pixel in more detail. In its side-on, “ant farm”-style view, the player’s characters could dig for gold in soft ground and build protective bunkers with solid concrete and metal walls. In 2009, Cortex Command won the Technical Excellence Award and the Audience Award at the Independent Games Festival. Ever since, I have dreamed of making a fully three-dimensional version of the game, which is possible only with volumetric simulation and graphics.

About six years ago I was looking for existing voxel solutions and found Branislav’s work on his website and in a video where he talked about the inevitable transition from polygonal 3D graphics to something resembling what I had done in 2D: simulating the entire virtual world as small atomic blocks with material properties. Not only did I find his argument correct, his technology, judging by the simple but impressive videos, was the best and most convincing of those available. I started sponsoring his project through his website and talking with him, which led to many years of friendship and now to co-financing our company. It’s amazing to be part of this turning point in such an epic project, where the results of many years of research and development can finally reach people and revolutionize how 3D content is created and consumed!

Growing interest

We believe many large players have realized that polygonal technologies hit the ceiling of complexity more than a decade ago. The problem shows up in many ways: complex toolchains; tricky hacks to allow interaction and damage simulation; a complex representation of geometry (a polygonal surface model + collision models + other models for the internal structure, if present); volumetric video; hacks and huge code bases; and so on. Because of these problems, progress depends almost entirely on GPU power, and some things are simply unattainable. This is a battle you cannot win. It is also typical of large companies: they often do not even try to invest much time and resources in risky, game-changing solutions; instead, their strategy is to buy small companies that succeed.

Technology

There is a set of techniques that people usually consider voxel-based. The oldest were used in heightmap-based games, where the renderer interpreted a 2D elevation map to compute the boundary between air and ground in the scene. This is not a fully voxel approach, because no volumetric data set is used (examples: Delta Force 1, Comanche, Outcast and others).
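For readers unfamiliar with the heightmap technique, here is a minimal Python sketch of a Comanche-style column renderer. It illustrates the general idea only and is not code from any of the games named above; `render_heightmap` and all its parameters are hypothetical. For each screen column it marches a ray across the 2D height map front to back, projects the terrain height at each step, and fills only the rows that nearer samples have not already covered. No 3D volume is ever stored.

```python
import math

def render_heightmap(heights, colors, cam_x, cam_y, cam_h, angle,
                     width=64, screen_h=48, horizon=12, scale=40.0, max_dist=40):
    """Render a 2D height map into a screen_h x width frame.

    heights/colors are square 2D lists indexed [y][x]; the map wraps around.
    Returns a list of rows (top to bottom); None marks sky pixels.
    """
    size = len(heights)
    frame = [[None] * width for _ in range(screen_h)]
    for col in range(width):
        # Ray direction for this column, spread over a 90-degree field of view.
        ray = angle + (col / (width - 1) - 0.5) * (math.pi / 2)
        dx, dy = math.cos(ray), math.sin(ray)
        y_top = screen_h  # topmost row already drawn in this column (occlusion)
        for dist in range(1, max_dist):  # front to back
            mx = int(cam_x + dx * dist) % size
            my = int(cam_y + dy * dist) % size
            # Perspective projection of the terrain height at this sample.
            screen_y = int((cam_h - heights[my][mx]) / dist * scale + horizon)
            screen_y = max(screen_y, 0)
            # Farther samples only fill rows above what is already drawn.
            for row in range(screen_y, y_top):
                frame[row][col] = colors[my][mx]
            y_top = min(y_top, screen_y)
            if y_top == 0:
                break  # column is full
    return frame
```

The per-column occlusion buffer is what makes this approach cheap. It is also why such renderers cannot show overhangs or caves: each map cell stores a single height, not a volume.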

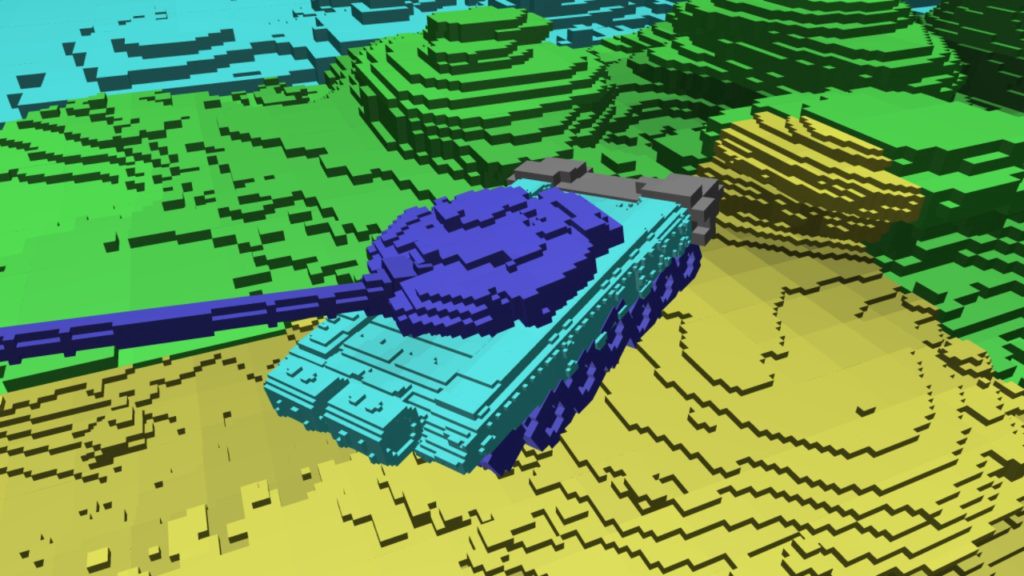

Some engines and games build the virtual world from large blocks with their own internal structure (example: Minecraft). These blocks are usually rendered with polygons, so the smallest elements are triangles and texels, not voxels. Such geometry is simply arranged in a grid of larger blocks, but strictly speaking that does not make them voxels.
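To make the distinction concrete, the following hypothetical Python sketch shows one common way a block world is turned into polygons. Each solid block emits a quad (two triangles) only for faces that border empty space. The output is a triangle mesh, which is exactly why such blocks are not voxels in the sense used here. The function names are illustrative.

```python
# The six axis-aligned face directions of a cube.
FACE_DIRS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def visible_faces(blocks):
    """Return (block, direction) pairs for faces that border empty space.

    `blocks` is a set of integer (x, y, z) coordinates of solid blocks.
    """
    faces = []
    for (x, y, z) in sorted(blocks):
        for (dx, dy, dz) in FACE_DIRS:
            if (x + dx, y + dy, z + dz) not in blocks:
                faces.append(((x, y, z), (dx, dy, dz)))
    return faces

def triangle_count(blocks):
    # Each visible face is rendered as a quad, i.e. two triangles.
    return 2 * len(visible_faces(blocks))
```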

Some games use relatively large voxels or SDF elements (signed distance fields). These still do not provide realism, but they already allow interesting gameplay (examples: Voxelstein, Voxelnauts, Staxel). There are also SDF-based projects that offer great interaction and simulation and have the potential for high realism (example: Claybook). However, we have not yet seen attempts to build a solution for simulating and rendering realistic large scenes comparable to what our technology can do.
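The SDF idea fits in a few lines of Python. A shape is a function that returns the signed distance to its surface, negative inside. Shapes combine with `min`/`max`, and a renderer "sphere traces" along a ray by stepping forward by the distance value. The scene below is a sphere with a smaller sphere carved out of it. The scene and all function names are assumptions for illustration, not code from the games mentioned.

```python
import math

def sd_sphere(p, center, radius):
    """Signed distance from point p to a sphere: negative inside, positive outside."""
    return math.dist(p, center) - radius

def sd_subtract(d_a, d_b):
    """CSG subtraction A minus B: inside A and outside B."""
    return max(d_a, -d_b)

def scene(p):
    body = sd_sphere(p, (0.0, 0.0, 0.0), 1.0)
    bite = sd_sphere(p, (0.0, 0.0, -1.0), 0.5)   # a "dug out" region
    return sd_subtract(body, bite)

def sphere_trace(origin, direction, sdf, max_steps=128, eps=1e-4, max_t=100.0):
    """March along a normalized ray; return the hit distance t, or None on a miss."""
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + d * t for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:
            return t
        t += dist  # safe step: no surface is closer than `dist`
        if t > max_t:
            break
    return None
```

A ray fired from z = -5 along +z passes through the carved bite and stops at the bite's far wall (t = 4.5), not at the original sphere surface (t = 4).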

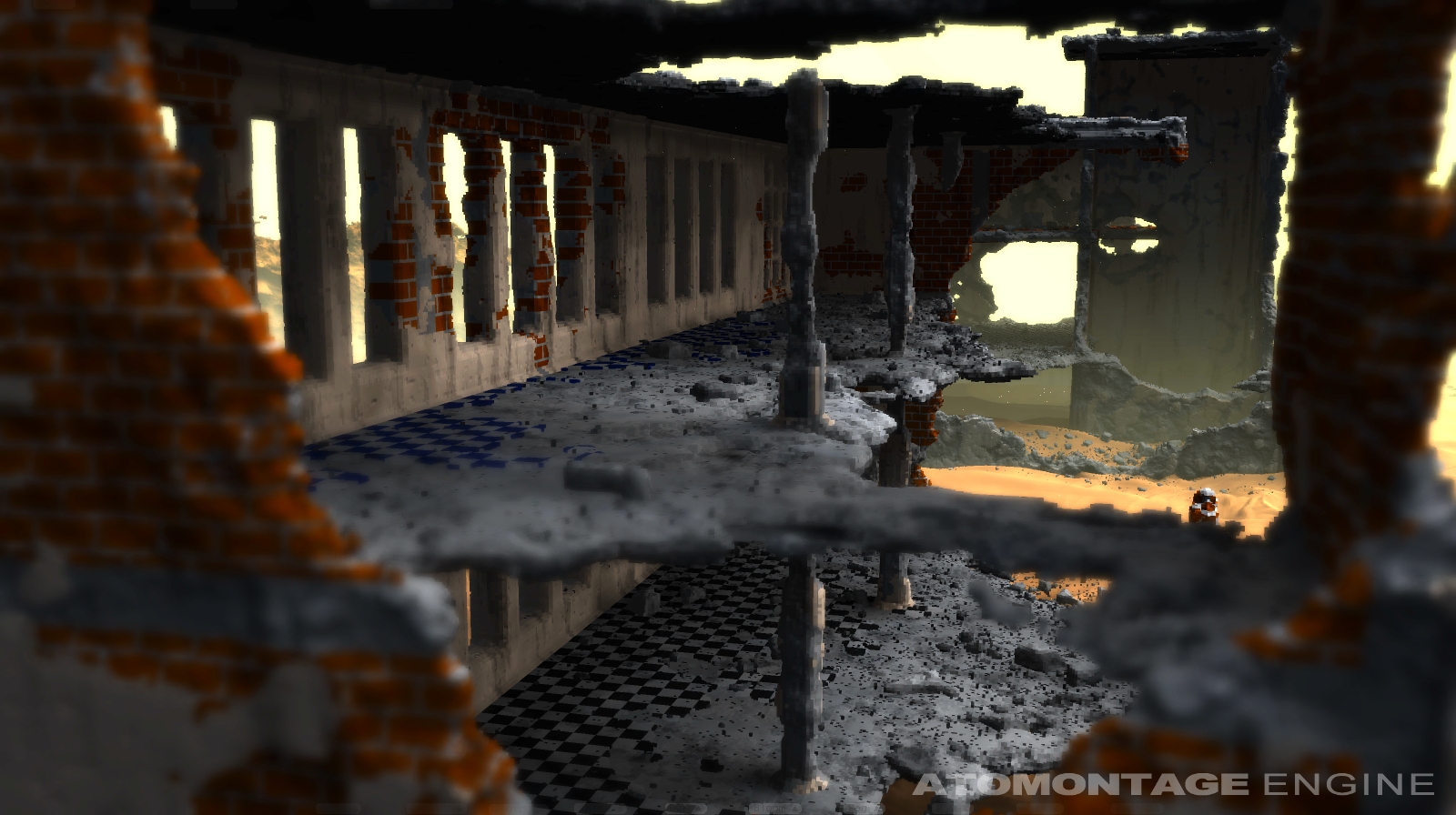

Atomontage uses voxels as the basic building blocks of scenes. Individual voxels in our technology have virtually no internal structure. This simplicity greatly helps with simulation, interaction, content generation, data compression, rendering, volumetric video encoding and more.
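To illustrate what "virtually no structure" can mean, here is a hypothetical Python sketch. It is not Atomontage's data layout, which the interview does not describe. A voxel is nothing more than a small attribute record stored at an integer grid position, and an edit such as carving a hole is a plain set operation on positions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Voxel:
    color: tuple      # (r, g, b), 0-255
    material: str     # e.g. "rock", "soil"

class VoxelGrid:
    """Sparse voxel grid: position -> attributes, nothing else."""

    def __init__(self, voxel_size):
        self.voxel_size = voxel_size   # edge length in world units
        self.cells = {}                # (i, j, k) -> Voxel

    def set(self, i, j, k, voxel):
        self.cells[(i, j, k)] = voxel

    def get(self, i, j, k):
        return self.cells.get((i, j, k))   # None means empty space

    def carve_sphere(self, center, radius):
        """Remove every voxel whose center lies inside a world-space sphere."""
        cx, cy, cz = center
        s = self.voxel_size
        doomed = [key for key in self.cells
                  if ((key[0] + 0.5) * s - cx) ** 2
                  + ((key[1] + 0.5) * s - cy) ** 2
                  + ((key[2] + 0.5) * s - cz) ** 2 <= radius ** 2]
        for key in doomed:
            del self.cells[key]
        return len(doomed)
```

Because the grid is filled throughout, the voxels exposed by a cut already carry their own material. No separate interior model has to be consulted.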

Benefits

Our voxel-based solution removes many of the difficulties people face with polygon-based technologies, including the very concept of a polygon budget and the many hacks used to work around it. Our technology is volumetric by its very nature, so it needs no additional representation to model the inside of objects.

This approach includes a powerful, integral LOD (level of detail) system that lets the technology balance performance and quality in traditionally difficult situations. Among its many advantages are fine-grained control over LOD and foveated rendering and processing, all with almost no overhead.
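The interview does not say how Atomontage builds its LOD levels. One common way to build a level-of-detail hierarchy for voxels is to merge each 2x2x2 block of fine voxels into one coarse voxel whose color is the average of its occupied children. Repeating this yields a pyramid in which each level has half the resolution of the one below. The Python sketch below shows that approach; the names are hypothetical.

```python
def downsample(cells):
    """Merge 2x2x2 children into one parent voxel with their average color.

    `cells` maps integer (i, j, k) -> (r, g, b). Returns the coarser level.
    """
    sums = {}
    for (i, j, k), (r, g, b) in cells.items():
        parent = (i // 2, j // 2, k // 2)
        acc = sums.setdefault(parent, [0, 0, 0, 0])
        acc[0] += r
        acc[1] += g
        acc[2] += b
        acc[3] += 1   # number of occupied children
    return {p: (acc[0] // acc[3], acc[1] // acc[3], acc[2] // acc[3])
            for p, acc in sums.items()}

def build_lod_pyramid(cells, max_levels=16):
    """Level 0 is the input; each further level halves the resolution.

    Assumes non-negative coordinates; stops at one voxel or `max_levels`.
    """
    levels = [cells]
    while len(levels[-1]) > 1 and len(levels) < max_levels:
        levels.append(downsample(levels[-1]))
    return levels
```

Each level is just another sampled grid. A renderer or simulator can therefore switch levels per region without changing data formats.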

Voxel geometry is free of the burden of complex structure: it is based on sampling, so it is easy to work with (a simple, universal data model for any geometry, unlike the complex polygon-based asset model). This allows faster iteration when developing powerful compression methods, interaction tools, content generators, physics simulators and so on. That does not happen with polygonal technologies: for at least a decade they have been struggling against the complexity ceiling, and their progress depends heavily on the exponential growth of GPU power.

The voxel approach is efficient because it does not waste resources or bandwidth on poorly compressible vector components or vector representations of data (polygons, point clouds, particles), that is, on values with an almost random distribution. With voxels, it is generally possible to encode only useful information (color, material information, etc.) rather than redundant data, simply by placing that information at the right position in space. Compare JPEG with some 2D vector format encoding a large, complex image. JPEG encoding is predictable and can be fine-tuned for optimal quality at a small size. The vector image, by contrast, spends most of its space on vector information instead of on the color samples themselves.
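The storage argument can be made concrete with some back-of-the-envelope arithmetic. The Python sketch below compares two layouts. In the first, a point-cloud record stores an explicit position (three 32-bit floats) plus an RGB color. In the second, a dense voxel grid implies each sample's position by its place in the grid, so it stores only the color plus one occupancy bit per cell. Both byte layouts are illustrative assumptions, and real systems of either kind compress further. The point is only where the bytes go.

```python
def point_cloud_bytes(n_points):
    # x, y, z as float32 (4 bytes each) + r, g, b (1 byte each)
    return n_points * (3 * 4 + 3)

def dense_voxel_bytes(grid_dim, n_occupied):
    # Position is implicit: one occupancy bit per cell, RGB only for occupied cells.
    occupancy_bits = grid_dim ** 3
    return (occupancy_bits + 7) // 8 + n_occupied * 3

def position_share(n_points):
    """Fraction of a point-cloud record spent on coordinates rather than color."""
    return (n_points * 12) / point_cloud_bytes(n_points)
```

Consider one million samples in a 256³ grid. The first layout needs 15,000,000 bytes and the second 5,097,152 bytes, and 80% of the point-cloud bytes are coordinates. A dense occupancy mask grows with the cube of the resolution, so at high resolutions sparse structures such as octrees are the usual choice.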

Our approach will let ordinary people give free rein to their creativity without having to learn and understand the underlying technology and its limitations. The skills we learned growing up in the real world will be enough to interact with virtual environments in a useful and realistic way.

Thanks to the LOD system, which is an integral part of the technology, and the simple spatial structure of voxel assets, it is easy to achieve large-scale, high-resolution video. Our voxel-based rendering does not degrade geometry through mesh simplification, and the performance it achieves on standard modern hardware is unmatched.

We are now at the stage where we can voxelize not just one large high-poly model but a whole series of them, and assemble a volumetric video from them. We can do the same with whole environments. Imagine a cinematic-quality scene with characters that could appear in a science-fiction or animated film. We can turn that environment into a VR film in which the user shares the space with the characters and moves the viewpoint freely (not just looking around, as in 360-degree video) in a room-sized or even larger scene. The user experiences it much like a VR game, except without interactivity. We are now looking for partners to help us make a first trial short film with high-quality graphics.
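The interview does not describe Atomontage's volumetric-video format. As a hypothetical sketch of why sampled frames suit video, consecutive voxel frames can be stored as a keyframe followed by per-frame deltas. Each delta lists only the voxels that appeared, changed or disappeared. The names below are illustrative.

```python
def encode_delta(prev, curr):
    """Describe frame `curr` relative to `prev` (both dicts: position -> color)."""
    changed = {p: c for p, c in curr.items() if prev.get(p) != c}
    removed = [p for p in prev if p not in curr]
    return changed, removed

def apply_delta(prev, delta):
    changed, removed = delta
    frame = dict(prev)
    for p in removed:
        del frame[p]
    frame.update(changed)
    return frame

def encode_sequence(frames):
    """First frame stored whole (keyframe), the rest as deltas."""
    return frames[0], [encode_delta(a, b) for a, b in zip(frames, frames[1:])]

def decode_sequence(keyframe, deltas):
    frames = [keyframe]
    for d in deltas:
        frames.append(apply_delta(frames[-1], d))
    return frames
```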

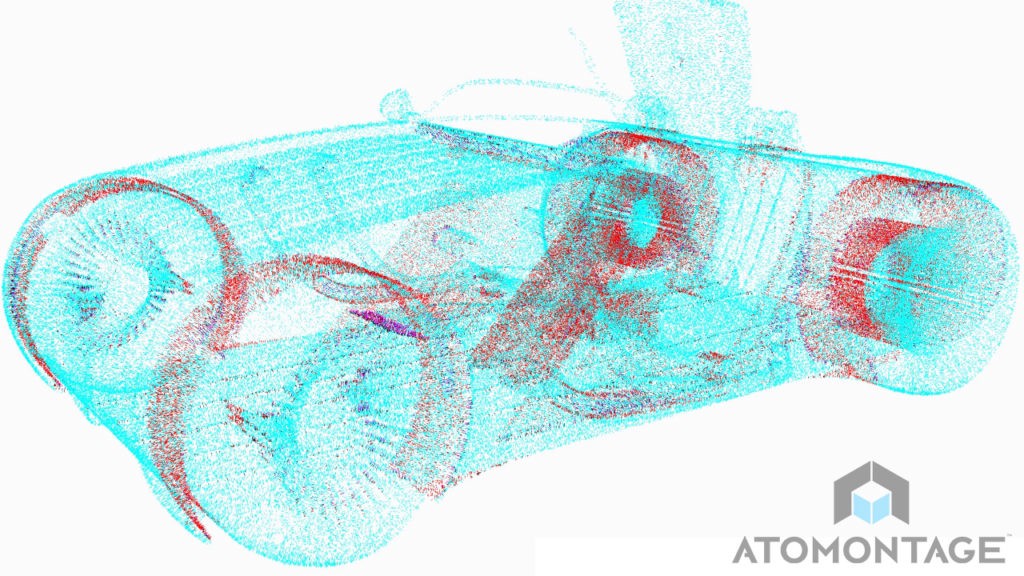

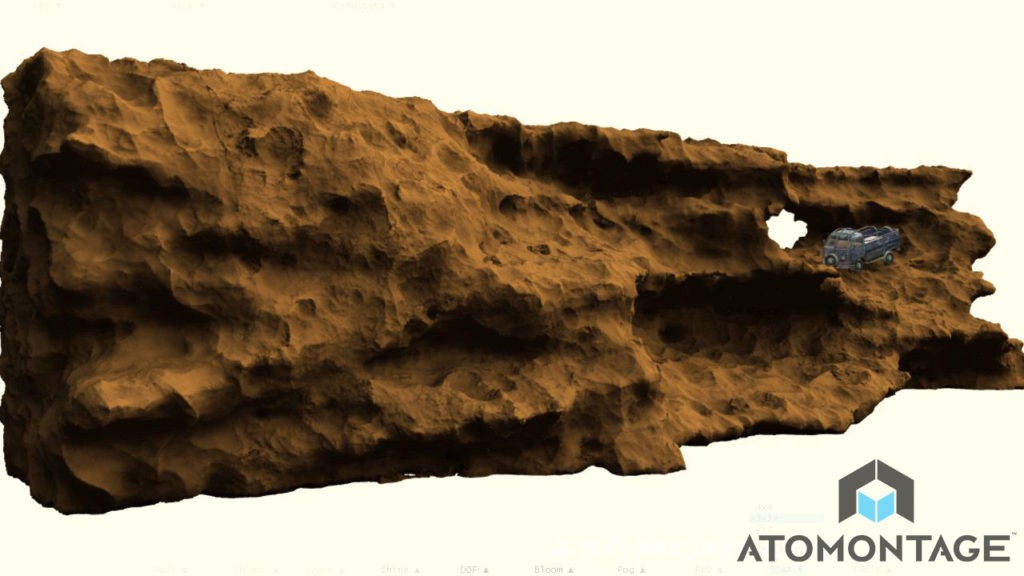

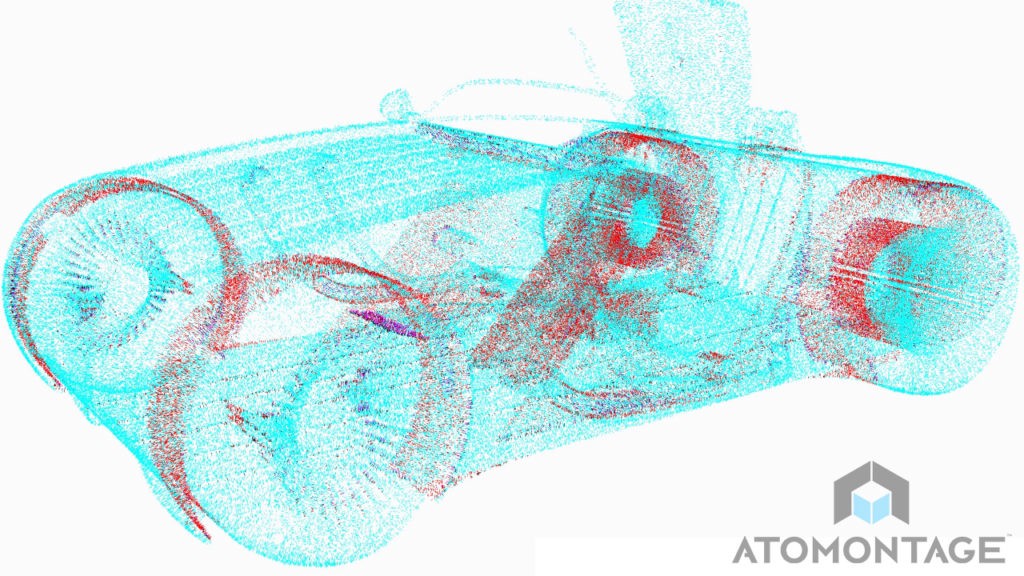

Rigging

Our technology already supports various transformations of any voxel model, producing convincing soft-body deformation. We do not yet have a character animation demo with full skinning and other features, but our technology is certainly capable of it when attached to any traditional rigging system. You can already see this in our soft-body videos: car tires compress in response to simulated forces, and this deformation is similar to what happens in character animation when the attached bones move. In the early stages, the rigging itself can even be done in existing tools before voxelization; over time we will implement it in our own tools.
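Attaching voxels to a traditional rig could work much like skinning a mesh. Each voxel center gets weights for nearby bones and is moved by the weighted blend of the bones' transforms (linear blend skinning). The Python sketch below shows that technique. It is an assumption about how such a hookup could look, not a description of Atomontage's implementation, and all names are hypothetical.

```python
def transform(bone, p):
    """Apply a bone transform (3x3 matrix m, translation t) to point p."""
    m, t = bone
    return tuple(sum(m[r][c] * p[c] for c in range(3)) + t[r] for r in range(3))

def skin_voxels(centers, weights, bones):
    """Linear blend skinning of voxel centers.

    centers: list of (x, y, z) rest positions
    weights: per voxel, a dict bone_index -> weight (weights sum to 1)
    bones:   list of (matrix, translation) pairs in the current pose
    """
    posed = []
    for p, w in zip(centers, weights):
        acc = [0.0, 0.0, 0.0]
        for bone_index, weight in w.items():
            q = transform(bones[bone_index], p)
            for axis in range(3):
                acc[axis] += weight * q[axis]
        posed.append(tuple(acc))
    return posed
```

Skinned voxels no longer sit on the grid. A renderer would therefore either draw them as splats or resample them back into a grid; this sketch leaves that choice open.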

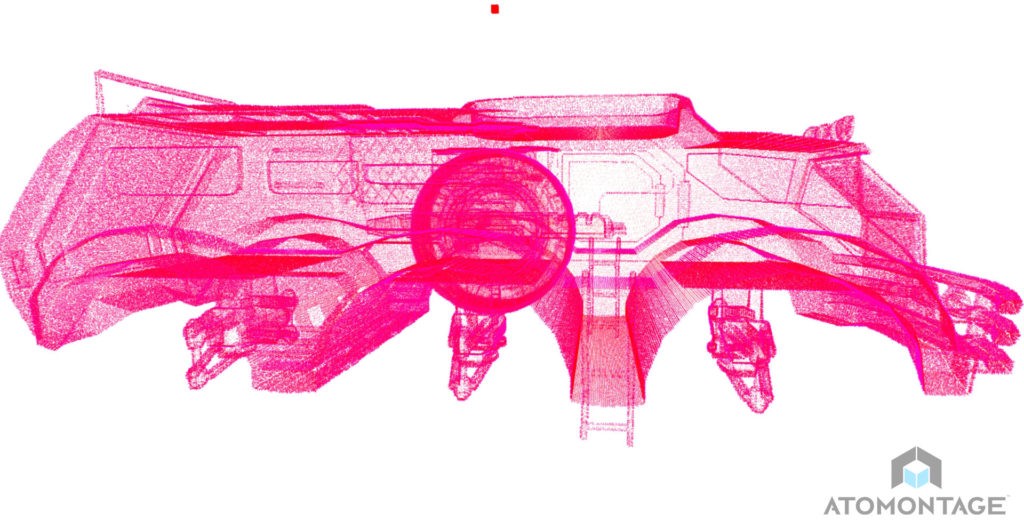

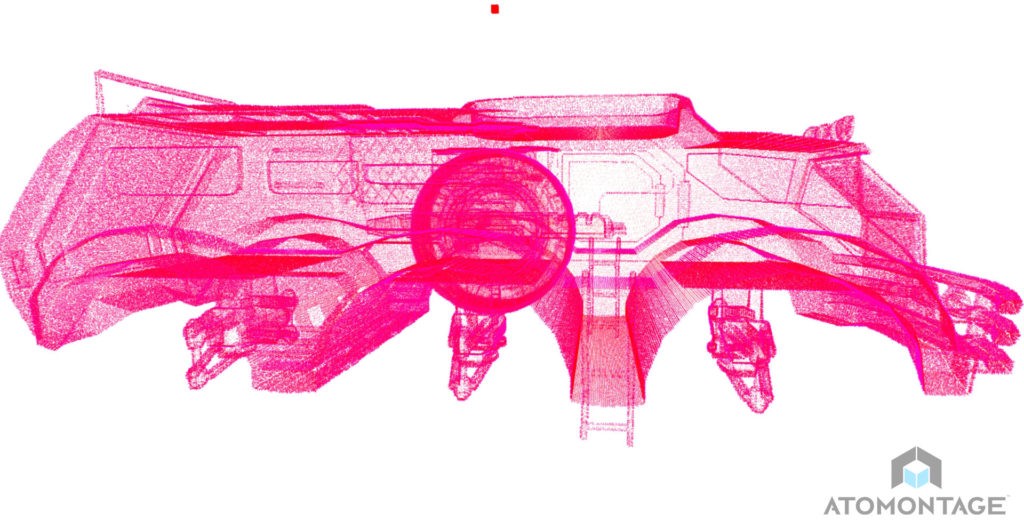

Texturing

Existing textured assets can be voxelized in various ways. We have had the best results with two of them: a ray-based voxelizer and a projection-based voxelizer. The ray-based voxelizer casts rays through a polygonal model, finds the intersections between each ray and the mesh, computes the corresponding positions on the attached texture, reads the texels and bakes them into the matching voxels of the model.
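The ray-based approach can be sketched in Python as follows. Rays are cast parallel to the z axis through the center of each voxel column and intersected with triangles using the standard Möller-Trumbore test. The triangle's texture coordinates are then interpolated from the barycentric hit position, and the nearest texel is stored in the voxel containing the hit. The sketch only colors surface voxels and uses a single ray direction and nearest-texel sampling. These simplifications and all names are assumptions of the sketch, not details from the interview.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle(orig, d, v0, v1, v2, eps=1e-9):
    """Moller-Trumbore: return (t, u, v) for a hit in front of the ray, else None."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    h = cross(d, e2)
    a = dot(e1, h)
    if abs(a) < eps:
        return None  # ray parallel to the triangle plane
    f = 1.0 / a
    s = sub(orig, v0)
    u = f * dot(s, h)
    if u < 0 or u > 1:
        return None
    q = cross(s, e1)
    v = f * dot(d, q)
    if v < 0 or u + v > 1:
        return None
    t = f * dot(e2, q)
    return (t, u, v) if t > eps else None

def voxelize_surface(triangles, texture, grid_dim, voxel_size=1.0):
    """triangles: list of ((v0, v1, v2), (uv0, uv1, uv2)); texture: rows of texels.

    Texture row 0 corresponds to v = 0 in this sketch. Grid starts at the origin.
    """
    tex_h, tex_w = len(texture), len(texture[0])
    voxels = {}
    d = (0.0, 0.0, 1.0)
    for i in range(grid_dim):
        for j in range(grid_dim):
            orig = ((i + 0.5) * voxel_size, (j + 0.5) * voxel_size, -1.0)
            for (v0, v1, v2), (uv0, uv1, uv2) in triangles:
                hit = ray_triangle(orig, d, v0, v1, v2)
                if hit is None:
                    continue
                t, u, v = hit
                w = 1.0 - u - v  # barycentric weight of v0
                tu = w * uv0[0] + u * uv1[0] + v * uv2[0]
                tv = w * uv0[1] + u * uv1[1] + v * uv2[1]
                texel = texture[min(int(tv * tex_h), tex_h - 1)][min(int(tu * tex_w), tex_w - 1)]
                z = orig[2] + t * d[2]
                voxels[(i, j, int(z / voxel_size))] = texel
    return voxels
```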

The projection-based voxelizer renders the model from several viewpoints into maps, including depth maps. The intersection of the volumes defined by the depth maps tells it which voxels need to be created. The other generated maps provide the remaining surface information (color, normal, etc.), which is also baked into the surface voxels.
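Here is a toy Python version of the intersection step, under simplifying assumptions. The model is "rendered" into orthographic front/back depth pairs along the x, y and z axes. A voxel is created only when its center lies between the front and back depth in the corresponding pixel of every view. A real implementation would use more viewpoints, perspective cameras and extra maps for color and normals. `depth_maps_of` stands in for the rendering step, and all names are hypothetical.

```python
def depth_maps_of(voxels, axes=(0, 1, 2)):
    """Stand-in for rendering: (front, back) depth along each axis, per pixel."""
    maps = {}
    for axis in axes:
        dmap = {}
        for v in voxels:
            pixel = tuple(v[a] for a in range(3) if a != axis)
            near, far = dmap.get(pixel, (float("inf"), float("-inf")))
            dmap[pixel] = (min(near, v[axis]), max(far, v[axis] + 1))
        maps[axis] = dmap
    return maps

def carve_from_depth_maps(grid_dim, depth_maps):
    """Keep voxels whose centers lie inside every view's depth interval.

    depth_maps[axis] maps the two remaining grid indices to (near, far) along
    `axis`; missing pixels mean the view saw nothing. Depths are in voxel units.
    """
    kept = set()
    for i in range(grid_dim):
        for j in range(grid_dim):
            for k in range(grid_dim):
                idx = (i, j, k)
                inside = True
                for axis, dmap in depth_maps.items():
                    pixel = tuple(idx[a] for a in range(3) if a != axis)
                    span = dmap.get(pixel)
                    center = idx[axis] + 0.5
                    if span is None or not (span[0] <= center <= span[1]):
                        inside = False
                        break
                if inside:
                    kept.add(idx)
    return kept
```

Depth maps only see outer surfaces, so a fully enclosed cavity is filled in by this step. A hollow 3x3x3 shell, for example, comes back solid.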

There are also other ways to create correctly colored surfaces: voxelizing point cloud data, or procedurally generating the content and surface properties of finished models.
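Voxelizing a colored point cloud can be as simple as snapping each point to the voxel that contains it and averaging the colors of all points that land in the same voxel. The Python sketch below assumes an axis-aligned grid with its origin at (0, 0, 0); the function name is hypothetical.

```python
import math

def voxelize_points(points, voxel_size):
    """points: iterable of ((x, y, z), (r, g, b)). Returns voxel index -> mean color."""
    sums = {}
    for (x, y, z), (r, g, b) in points:
        # floor() keeps negative coordinates in the correct cell.
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        acc = sums.setdefault(key, [0, 0, 0, 0])
        acc[0] += r
        acc[1] += g
        acc[2] += b
        acc[3] += 1
    return {k: (a[0] / a[3], a[1] / a[3], a[2] / a[3]) for k, a in sums.items()}
```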

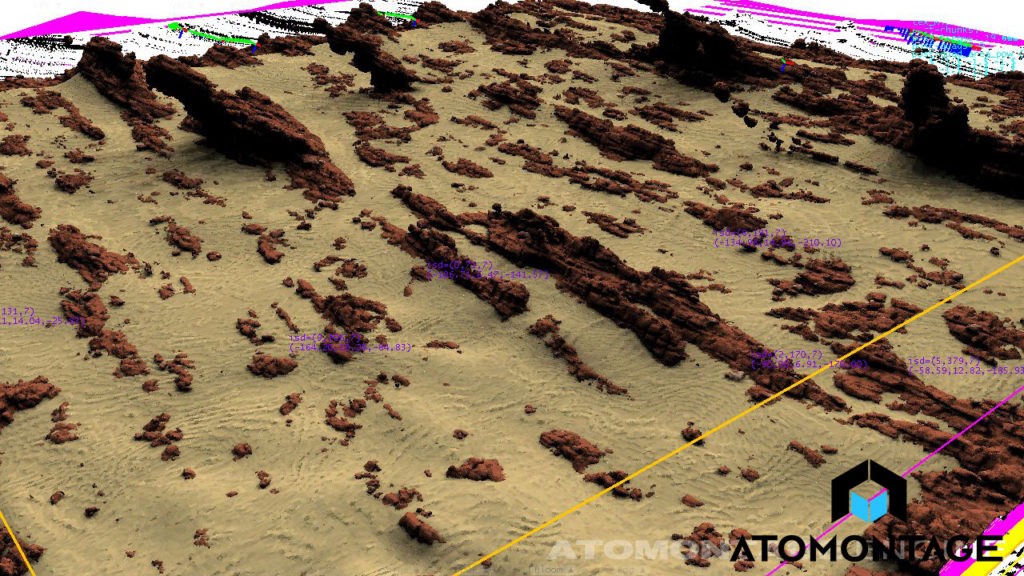

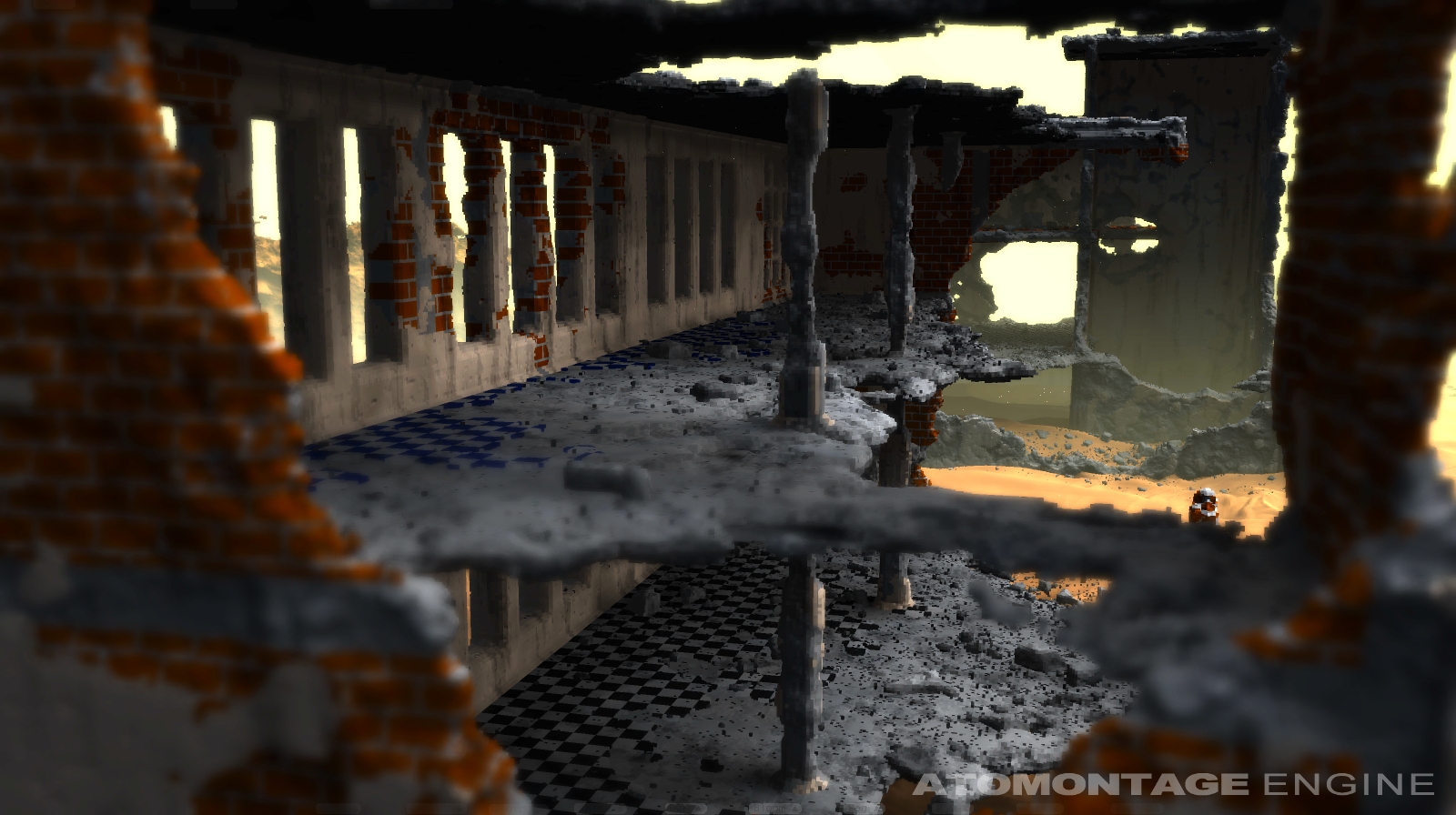

Large-scale landscapes

Generating and rendering large landscapes are two separate problems. Generating content with voxels is simple: all you need to do is add a large number of samples with useful properties (color, material information, etc.) to a uniform grid at a given spatial resolution, or at several resolutions for data with variable LOD. This is easy with voxels because there is no polygon budget. There are no textures either, so texture resolution is not a problem.
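To illustrate adding samples to a uniform grid at a given resolution, here is a hypothetical Python sketch that samples a procedural terrain function into a voxel grid. Sampling the same function again at a coarser voxel size produces another resolution, with no polygons or textures involved. The terrain formula and material thresholds are made up for the example.

```python
import math

def terrain_height(x, z):
    # Made-up rolling hills, in world units.
    return 4.0 + 2.0 * math.sin(x * 0.3) * math.cos(z * 0.2)

def material_at(x, y, z):
    """Return a material name for a world-space point, or None for air."""
    h = terrain_height(x, z)
    if y > h:
        return None
    return "grass" if y > h - 1.0 else ("soil" if y > h - 3.0 else "rock")

def sample_region(extent, voxel_size):
    """Sample a cube of side `extent` (world units) at the given voxel size."""
    n = int(extent / voxel_size)
    grid = {}
    for i in range(n):
        for j in range(n):
            for k in range(n):
                # Sample at the voxel center.
                p = ((i + 0.5) * voxel_size, (j + 0.5) * voxel_size, (k + 0.5) * voxel_size)
                m = material_at(*p)
                if m is not None:
                    grid[(i, j, k)] = m
    return grid
```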

Rendering large scenes is also fairly simple thanks to the powerful LOD system. The renderer can pick the optimal combination of LODs for small geometry segments, rendering the entire scene at the highest possible detail while keeping a high frame rate. Voxel LODs are very cheap and are well suited to keeping voxel sizes (and therefore shape errors) smaller than a pixel on screen.
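One simple way to keep voxel sizes smaller than a pixel is to pick, for each segment, the coarsest LOD level whose voxels still project to at most one pixel. Under a pinhole camera, a voxel of edge s at distance d covers roughly s · f / d pixels, where f is the focal length in pixels. The Python sketch below applies that rule. It assumes each level doubles the voxel size; that assumption and the names are illustrative, not Atomontage's actual policy.

```python
import math

def focal_length_px(screen_width_px, fov_degrees):
    """Focal length in pixels for a pinhole camera with the given horizontal FOV."""
    return (screen_width_px / 2) / math.tan(math.radians(fov_degrees) / 2)

def projected_size_px(voxel_size, distance, focal_px):
    return voxel_size * focal_px / distance

def choose_lod(base_voxel_size, distance, focal_px, max_level, max_px=1.0):
    """Coarsest level (voxel size = base * 2**level) that stays within max_px."""
    budget = max_px * distance / (base_voxel_size * focal_px)
    if budget < 1.0:
        return 0  # even full resolution exceeds max_px at this distance
    return min(int(math.floor(math.log2(budget))), max_level)
```

For 1 mm voxels seen from 10 m with a 1000-pixel focal length, level 3 (8 mm voxels) projects to 0.8 pixels, while level 4 would already exceed one pixel.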

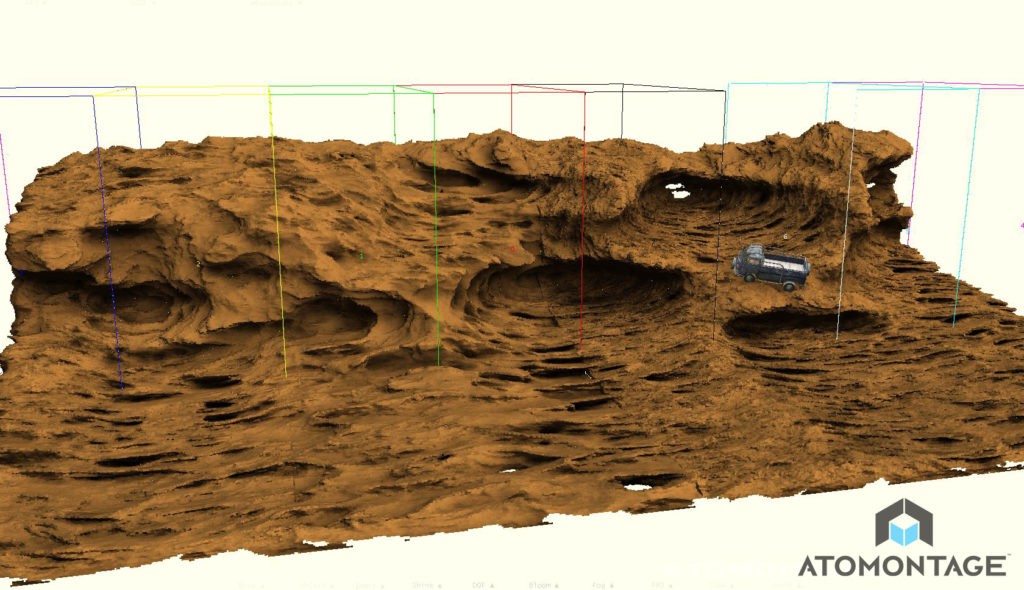

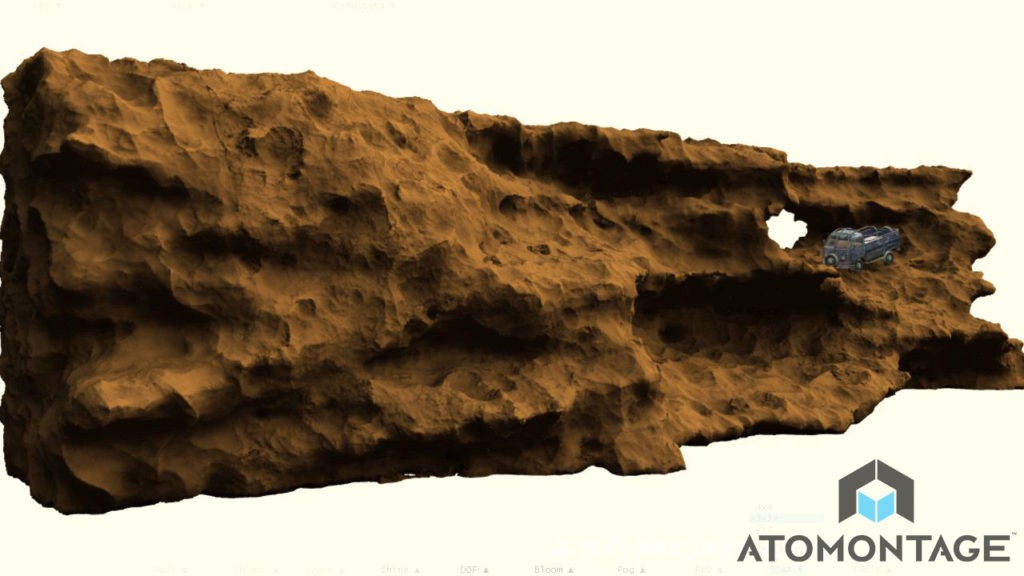

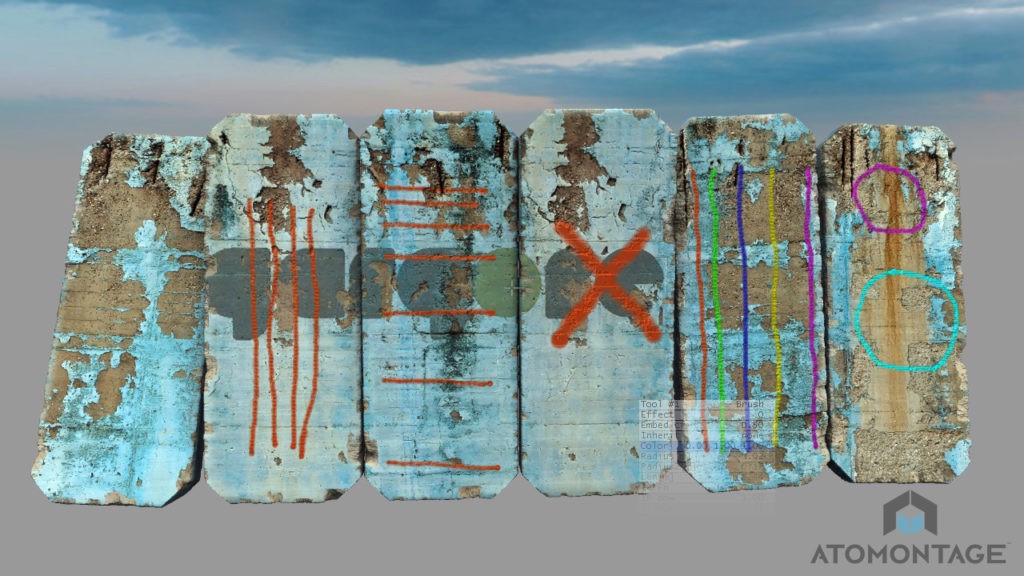

Work with scans

Our voxelizers are already powerful enough to voxelize very high-poly meshes and point clouds using the methods described above. We showed our first results with photogrammetry data in early 2013, voxelizing a 150-million-polygon mesh that we rendered and edited in real time on an average 2008 gaming laptop. After voxelization, the polygon count of the original geometry no longer matters, and editing the model is simple. You can see this in our videos: performance depends more on the number of pixels than on the polygon count of the source data. All of this is necessary to give users a comfortable workflow when cleaning up huge scanned models. Also, voxels are in some sense similar to pixels, so we foresee highly specialized AI (deep learning) being used to clean up photogrammetry data automatically.

The future of VR and streaming

A voxel representation of space is a prerequisite for creating a huge network of interactive virtual environments that will at some point start to resemble the real world. The key point is that such environments are not static: users can interact with them the way they interact with the real world. That is, these virtual environments must be fully simulated, with convincing physics. Simulating and rendering a complex, fully dynamic world requires that world to be volumetric, and all processing, synchronization and rendering of the simulated geometry must be efficient. The volumetric nature of the process rules out polygons. In addition, as mentioned above, other vector representations are not efficient enough to deliver high quality at low cost.

We expect such huge simulated environments to consist mainly of voxels, with only a small part simulated as particles for certain effects. Notably, both representations are simple and sampled, so converting between them will be trivial and computationally cheap.
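Because both representations are sampled, converting between them is little more than a change of bookkeeping. In this hypothetical Python sketch, voxels that break off become particles placed at their centers with an initial velocity. The particles are integrated for a step and then snapped back into the grid. The names and the integration scheme are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class Particle:
    pos: list        # [x, y, z] in world units
    vel: list        # [vx, vy, vz] in world units per second
    color: tuple

def voxels_to_particles(voxels, voxel_size, velocity):
    """Each voxel becomes one particle at its center."""
    return [Particle([(i + 0.5) * voxel_size, (j + 0.5) * voxel_size, (k + 0.5) * voxel_size],
                     list(velocity), color)
            for (i, j, k), color in voxels.items()]

def step(particles, dt, gravity=-9.81):
    # Semi-implicit Euler: update velocity (gravity along -y), then position.
    for p in particles:
        p.vel[1] += gravity * dt
        for axis in range(3):
            p.pos[axis] += p.vel[axis] * dt

def particles_to_voxels(particles, voxel_size):
    """Snap particles back to the grid; later particles overwrite earlier ones."""
    return {tuple(int(c // voxel_size) for c in p.pos): p.color for p in particles}
```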

These are tremendous advantages of voxels over other representations, and they make voxels the ideal way to describe such gigantic virtual environments. The LOD system is great for optimizing processing and rendering on the fly according to any combination of parameters (distance to the user, importance of the simulated process, accuracy/cost ratio, etc.). It also makes voxels ideal for foveated rendering and very efficient streaming. Voxels can also be defined with a higher dimension, which is necessary for large-scale distributed physics simulation. Such simulations cannot be performed in just three dimensions. Network latency gets in the way, a large model cannot be processed on a single PC, and a large part of the geometry cannot be transferred in one go. An LOD system for segmented, mutable voxel geometry helps a lot here. When one or a few small parts of a large voxel model change, there is no need to recompute a large mesh and its textures or to synchronize the entire model over the network. Only the affected parts matter, and they can be synchronized at whichever LOD is best suited for network transmission.
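Synchronizing only the affected parts can be sketched with a chunked world. Edits mark the chunks they touch as dirty, and a sync step sends only those chunks. Picking a per-client LOD for each chunk is left out for brevity. The chunk size, message format and all names are assumptions for illustration, not Atomontage's networking design.

```python
CHUNK = 16  # voxels per chunk edge (assumed)

class ChunkedWorld:
    def __init__(self):
        self.chunks = {}     # chunk key -> {local (i, j, k): value}
        self.dirty = set()   # chunk keys modified since the last sync

    @staticmethod
    def split(i, j, k):
        """World voxel index -> (chunk key, index inside the chunk)."""
        return ((i // CHUNK, j // CHUNK, k // CHUNK),
                (i % CHUNK, j % CHUNK, k % CHUNK))

    def set_voxel(self, i, j, k, value):
        """Set or (with value=None) clear a voxel and mark its chunk dirty."""
        key, local = self.split(i, j, k)
        chunk = self.chunks.setdefault(key, {})
        if value is None:
            chunk.pop(local, None)
        else:
            chunk[local] = value
        self.dirty.add(key)

    def collect_updates(self):
        """Return only the chunks changed since the last call, then reset."""
        updates = {key: dict(self.chunks.get(key, {})) for key in self.dirty}
        self.dirty.clear()
        return updates
```

Digging a single hole in a 32 x 32 floor then resends one 16 x 16 chunk instead of the whole floor.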

All these requirements of the huge virtual environments of the future make a paradigm shift toward volumetric sampled geometry necessary and inevitable. The properties of our voxel technology make it the best, and only, candidate for that paradigm shift in the near future.

Atomontage development team. Interview conducted by Cyril Tokarev.

Voxel development

Branislav: in 2000-2002 I participated in competitions of the European demoscene. I wrote several 256-byte demos (also called intro) under the nickname Silique / Bizzare Devs (see “Njufnjuf”, “Oxlpka”, “I like ya, Tweety” and “Comatose”). Each intro generated real-time voxels or graphics from a point cloud. Both voxels and point clouds are examples of sampled geometry.

Intros performed their task in just 100 processor instructions, such as ADD, MUL, STOSB, PUSH and the like. However, due to the very nature of this type of program, in fact dozens of instructions were used simply for proper setup, and not for generating the graphics itself. Nevertheless, these more than 50 instructions, which in essence were elementary mathematical operations or memory operations, were enough to generate quite beautiful mobile 3D graphics in real time. All these 256-byte intros won from first to third places. This made me realize that if such 3D graphics can be created without polygons, then much more can be achieved in games and other applications using the same principle: using sampled geometry instead of polygonal meshes. The solution is simplicity. I realized that the then dominant paradigm, based on complex and fundamentally limited (non-volume) data presentation, was already ready to lean against the ceiling of possibilities. That is, it is the right time to try this “new”, simpler paradigm: volumetric sampled geometry.

')

Dan: While still in high school in Sweden, I started to program a side-scrolling 2D engine, which I created as a result of an indie game called “Cortex Command”. She looked like Worms or Liero, but with more real-time gameplay and RTS elements. Also, the game used a more detailed simulation of different materials of each pixel of the relief. In the side view, similar to the “ant farm”, the player’s characters could dig gold in soft ground and build protective bunkers with solid concrete and metal walls. In 2009, Cortex Command won the Technical Excellence Award and Audience Award at the Independent Games Festival. Since that time, I dreamed of creating a fully three-dimensional version of the game, and this was possible only with the help of volumetric simulation and graphics.

About six years ago I was looking for finished voxel solutions and found Branislav’s work on his website and in a video in which he talked about the inevitable transition from polygonal 3D graphics to something that resembled what I had done in 2D: to simulate the entire virtual world as small atomic blocks with material properties. Not only did I find his statement correct - his technology, judging by the simple but impressive videos, turned out to be the best and most convincing of the existing ones. I began to sponsor his project through his website and communicate with him, which led to the beginning of many years of friendship, and now co-financing our company. It’s amazing to feel part of this turning point in such an epic project in which the results of many years of research and development can finally be passed on to people and make a revolution in the creation and consumption of 3D content!

Growing interest

We believe that many large players have realized: polygonal technologies have rested on the ceiling of complexity more than a decade ago. This problem manifests itself in many ways: in complex tulchains, in tricky hacks, allowing for interaction and simulation of damage, in a complex representation of geometry (polygonal surface model + collision models + other models for representing the internal structure, if present) volumetric videos, hacks and huge code bases, etc. Because of these problems, progress is almost entirely dependent on the power of video processors, and some aspects are generally unattainable. This is a battle in which you can not win. This is typical of the nature of large companies: often they do not even try to spend a lot of time and resources on developing risky and changing game conditions decisions; instead, their strategy is to buy small companies that succeed.

Technology

There is a set of techniques that people usually consider voxel-based. The oldest of them were used in games based on height maps, where the renderer interpreted a 2D elevation map to calculate the boundaries between the air and the ground of the scene. This is not a completely voxel approach because a volumetric data set is not used here (examples: Delta Force 1, Comanche, Outcast, and others).

Some engines and games use large blocks with their own internal structure that make up the virtual world (example: Minecraft). These blocks are usually rendered using polygons, that is, the smallest elements are triangles and texels, not voxels. Such geometry is simply ordered into a grid of larger blocks, but this, strictly speaking, does not make them voxels.

Some games use relatively large voxels, or SDF elements (signed distance fields, fields with a significant distance) that still do not provide realism, but will already allow you to create interesting gameplay (examples: Voxelstein, Voxelnauts, Staxel). There are also SDF-based projects that provide great interactions and simulations and have the potential to create high realism (example: Claybook). However, so far we have not seen attempts to develop a solution for simulating and rendering realistic large scenes similar to those our technology is capable of.

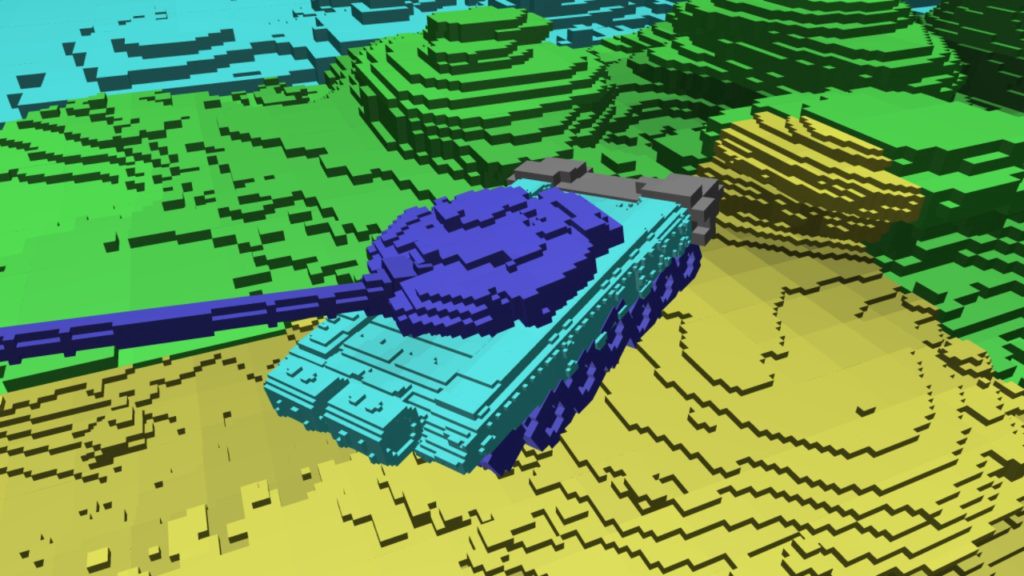

Atomontage uses voxels as basic building blocks of scenes. Individual voxels in our technology have virtually no structure. This approach provides simplicity, which greatly helps in simulations, interactions, content generation, as well as data compression, rendering, encoding of volumetric video, etc.

Benefits

Our voxel-based solution removes many of the difficulties that people face with polygon-based technologies, including the very concept of a polygon limit and the many hacks used to circumvent this polygon. Our technology is voluminous by its very nature and therefore does not require any additional representation for modeling inside objects.

In this approach, there is a powerful and integral LOD system (level of detail, level of detail), which allows technology to balance performance and quality in traditionally difficult situations. One of the many advantages is granular control over LOD, foveated rendering and processing, which require almost no unnecessary costs.

Voxel geometry relieves the burden of a complex structure: it is based on sampling, and therefore it is easy to work with it (a simple and universal data model for any geometry, unlike a complex resource-based polygon data model). This allows for faster iterations when developing powerful compression methods, interaction tools, content generators, physics simulators, etc. This does not happen in polygonal technologies, because for at least a decade they have been struggling with the ceiling of complexity, and their progress strongly depends on the exponential growth of the power of video processors.

The voxel approach is effective because it does not waste resources or bandwidth on poorly compressible components of vectors or vector representations of data (polygons, point clouds, particles), that is, values that have an almost random arrangement. In the case of voxels, it is generally possible to encode useful information (color, material information, etc.), rather than redundant data, simply by placing this information in the right place in space. You can compare this with JPEG and some 2D vector format encoding a large and complex image. JPEG encoding is predictable and can be fine tuned to optimal quality and low volume, and the vector image will spend most of the space on vector information instead of the color samples themselves.

Our approach will allow ordinary people to give free rein to their creative talent without having to learn and understand the internal technology and its limitations. The skills that we learned, growing up in the real world, will be sufficient for interacting with virtual environments in a useful and realistic way.

Thanks to the LOD system, which is an integral part of the technology, and the simple spatial structure of voxel resources, it is easy to obtain large-scale high-resolution video. Our voxel-based rendering does not reduce the quality of the geometry due to the mesh simplification, and the performance achieved on standard modern equipment is incomparable.

Now we are at the stage when we can voxelize not only one large high-poly model, but also a whole series of such resources with the assembly of a volumetric video from them. We can also do this with whole environments: Imagine a scene of cinematic quality with characters that can be used in a science fiction or animated film. We can turn the environment into a VR film, in which the user can be in the same space with the characters, freely moving the viewpoint (and not just looking around, like in a virtual video) in a scene the size of a room or even larger. The user can perceive the process as usual for VR games, with the exception of interactivity. Now we are looking for partners that would help us to make the first trial short film with high-quality graphics.

Gif

Rigging

Our technology already allows the use of various transformations that affect any voxel model, creating convincing effects of deformation of soft bodies. Although we do not yet have a demo character animation with full skinning and other functions, our technology is certainly capable of that, if you attach it to any traditional rigging system. This is already visible in our soft-body videos: tires on car wheels are compressed in response to simulated forces, and this deformation is similar to what happens in character animation when the attached bones are affected. In the early stages, the rigging itself can even be performed in some of the already existing tools before voxelization, and over time we implement it in our own tools.

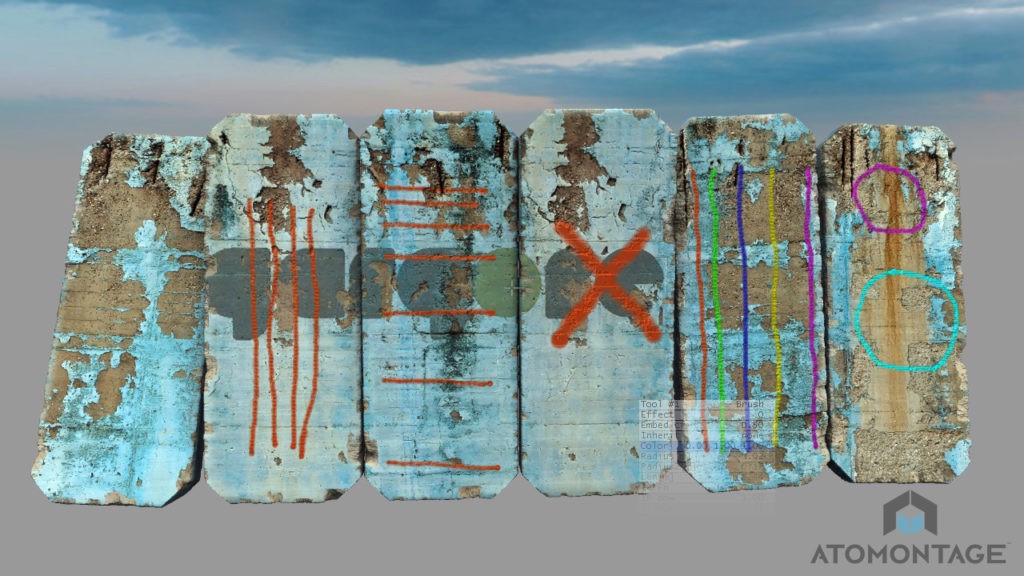

Texturing

Ready textural resources can be voxelised in various ways. We have the best results with two of them - with a ray-based voxelizer and a projection-based voxelizer. The first emits rays through a polygonal model, recognizes intersections between each of the rays and the mesh, calculates the relative positions on the attached texture, reads texels and bakes them into the corresponding voxels applied to the model.

Based on the projection, voxelizer renders the model from several viewpoints into maps, including depth maps. The intersection of volumes, defined by depth maps, provides information about voxels that need to be created. Other generated maps provide the rest of the surface information (color, normal, etc.), which is also baked into the surface voxels.

There are also other ways to create correctly painted surfaces - by voxelising the point cloud data or procedurally generating content / surface properties of the finished models.

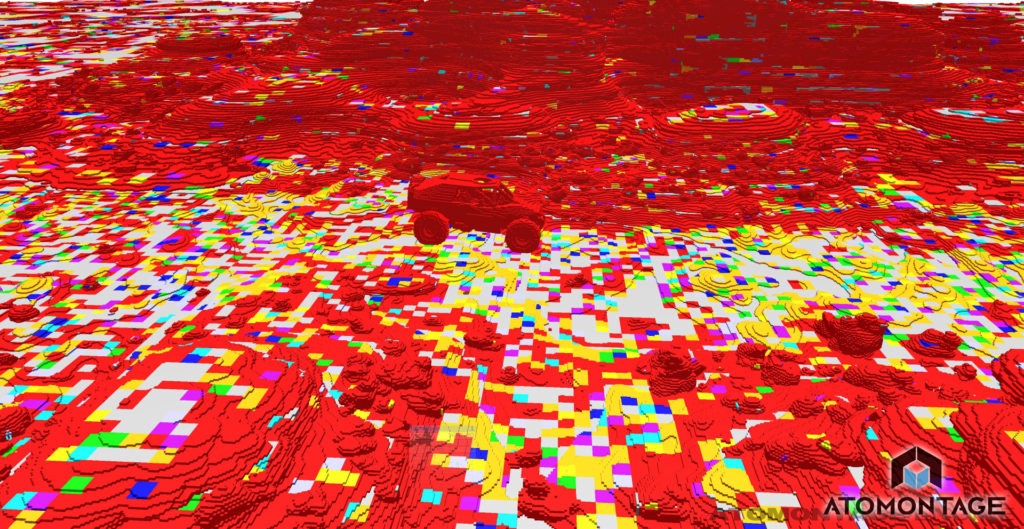

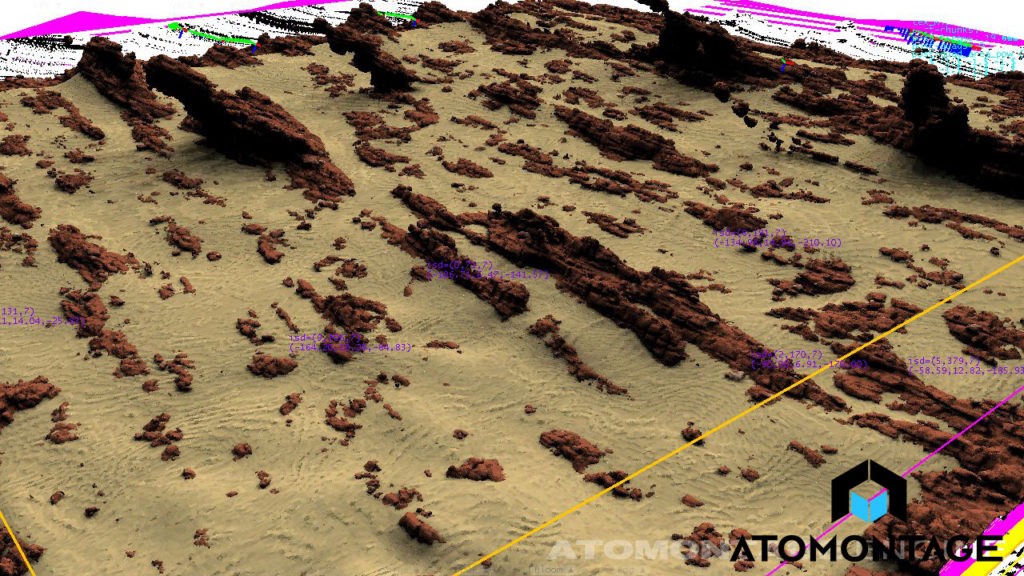

Large-scale landscapes

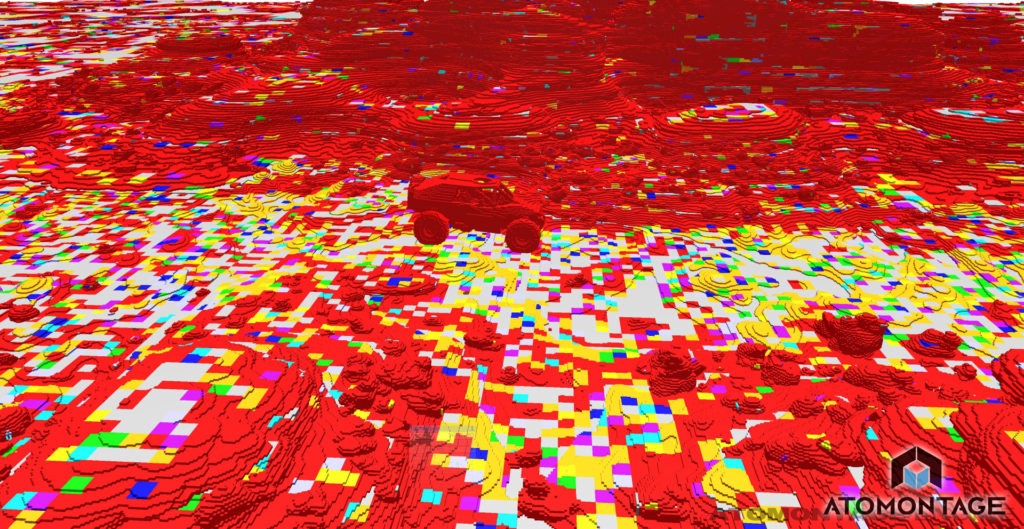

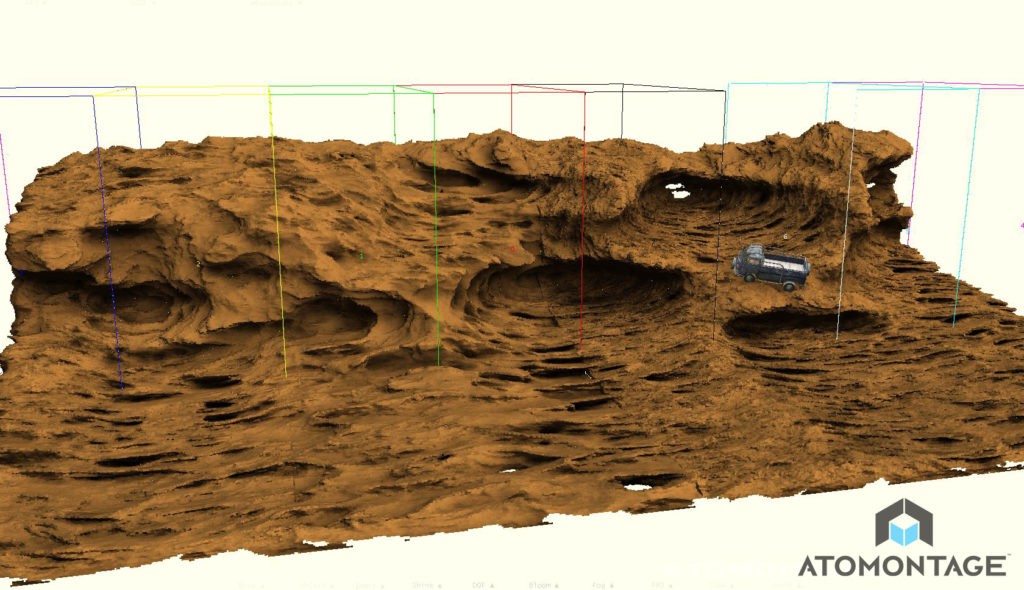

These are two separate problems. Generating content using voxels is simple because all you need to do is to add a large number of samples with some useful properties (color, material information, etc.) into a uniform grid with a given spatial resolution, or with several resolutions in the case of data with variable LOD . This is easily implemented using voxels, because we are not faced with restrictions on the number of polygons. In addition, there are no textures either, so we have no problem resolving textures.

Rendering large scenes is also quite simple thanks to the powerful LOD system. The renderer can use the most optimal LOD combination of small geometry segments to render the entire scene with the greatest possible detail, while maintaining a high FPS. LOD voxels are very inexpensive and are well suited for saving voxel sizes (and therefore form errors) smaller than the pixel size on the screen.

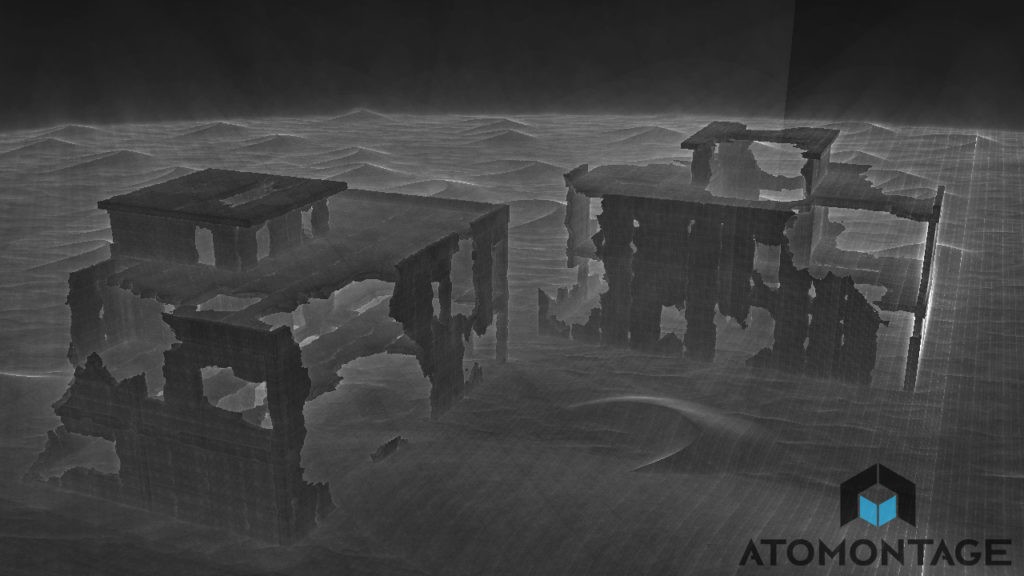

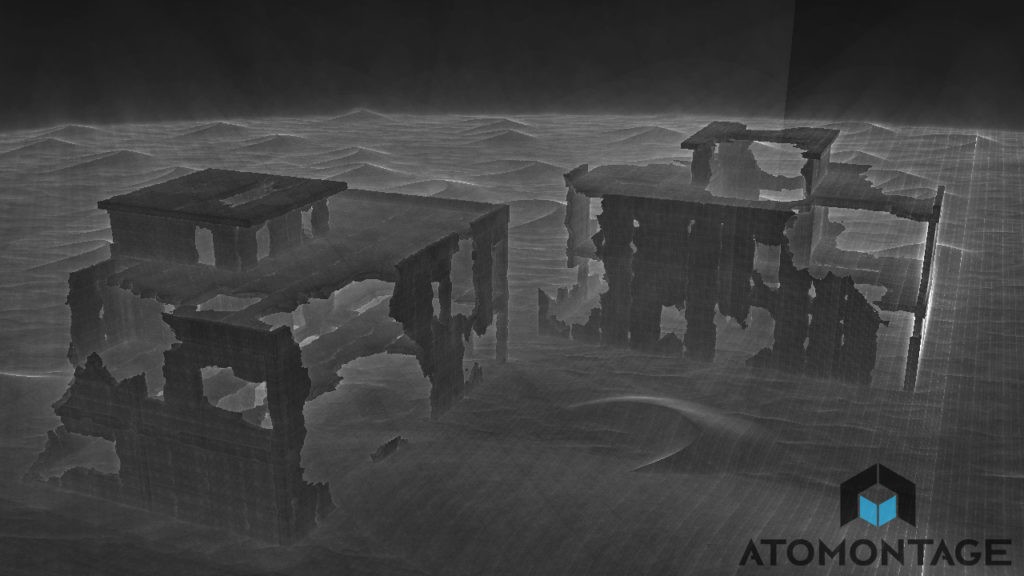

Work with scans

Our voxelizers are already powerful enough to voxelize very high poly meshes and point clouds using the methods mentioned above. We demonstrated the first results with photogrammetry data at the beginning of 2013, voxelizing a mesh of 150 million polygons, which we rendered and changed in real time on an average gaming laptop of 2008. After voxelization, the number of polygons of the original geometry becomes unimportant, and changing the model is a simple task. This can be seen in our videos; performance depends more on the number of pixels than on the number of polygons of the original data. All this is necessary to provide users with a comfortable workflow when cleaning up huge scanned models. In addition, voxels are in some sense similar to pixels, so we foresee the use of highly specialized AI (with deep training) for automatic cleanup of photogrammetry data.

The future of VR and streaming

Gif

Representing voxel space is a prerequisite for creating a huge network of interactive interactive virtual environments that at some point will begin to resemble the real world. The key point is that such environments are not static and allow users to interact with them in the usual way in the real world. That is, such virtual environments must be fully simulated and with convincing physics. The simulation and rendering of a complex, fully dynamic world requires that such worlds be volumetric, and all processing, synchronization and rendering of the simulated geometry must be effective. The volumetric nature of this process eliminates the use of polygons. In addition, as mentioned above, other vector forms of representation are not sufficiently effective in creating high quality at low cost.

We expect that such huge simulated environments will consist mainly of voxels, and only a small part will be simulated using particles to create some effects. It is remarkable that both of these representations are simple and sampled, and that the conversion from one to the other will be trivial and inexpensive in terms of computation.

Gif

These are the tremendous advantages of voxels over other presentation methods, making them the ideal solution for describing such gigantic virtual environments. The LOD system is great for optimizing processing and rendering on the fly, depending on any combination of parameters (distance to the user, importance of the simulated process, accuracy / cost ratio, etc.). Also, thanks to this, they are ideal for foveated rendering and very efficient streaming. They can also be set with a higher dimension, which is necessary to perform a large-scale distributed physical simulation. Such simulations cannot be performed in just three dimensions due to network delays and the impossibility of processing a large model on one PC and a one-time transfer of a large part of the geometry. A segmented, mutable voxel geometry LOD system will help a lot here. When changing a small part or several parts of a large voxel model, there is no need to recalculate its large mesh and textures, as well as synchronize the entire model over the network — only the affected parts that can be synchronized with the most suitable for transmission over the LOD network are important.

All these requirements for the huge virtual environments of the future lead to the necessity and inevitability of a paradigm shift towards volumetric sampled geometry. The properties of our voxel technology make it the best and only candidate for a paradigm shift in the near future.

Atomontage development team . Interview conducted by Cyril Tokarev.

Source: https://habr.com/ru/post/371751/
