Post effects in mobile games

Most graphics publications aimed at consoles and desktops describe something new, whereas on mobile platforms the focus is always on optimizing what already exists.

As for post-processing, its almost magical effect on photographs was discovered long before the first computers appeared, and the mathematical and algorithmic foundation built for digital image processing fit naturally into the programmable GPU pipeline.


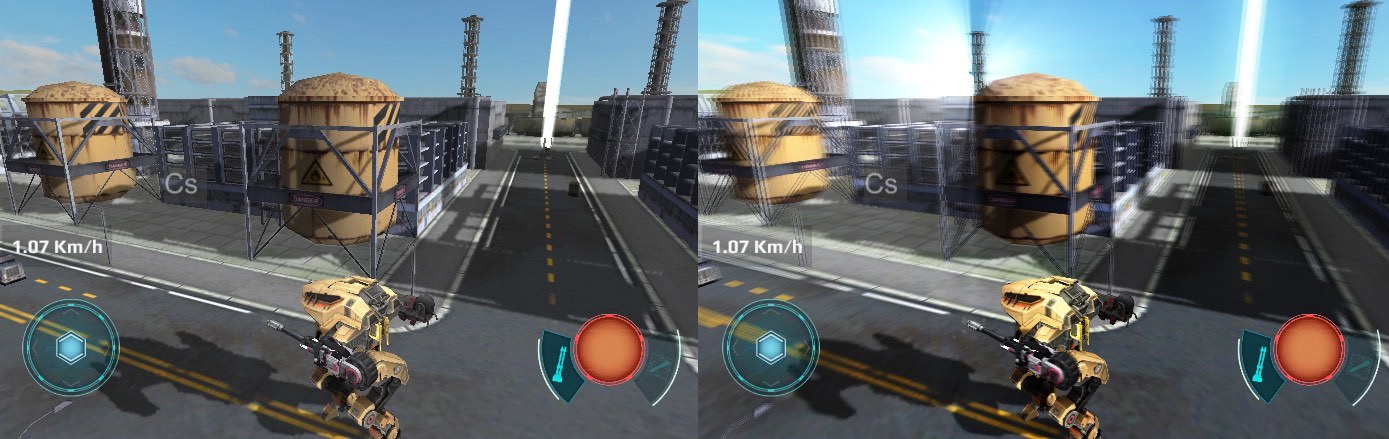

Although post effects (or rather, their clumsy use) are a frequent target of players' hatred, they are also almost the only way to “revive” and “refresh” the picture quickly and cheaply. How good this “revival” turns out, and whether it ends up as overprocessing, depends mostly on the artists.

A slightly overprocessed War Robots screenshot.

As mentioned above, this article focuses on optimization. For readers new to the topic, the GPU Gems series is an excellent introduction; the first three books are available on the NVIDIA website [1].

The examples are implemented in Unity, but the optimization methods described here apply to any development environment.

Optimal Post-Processing Architecture

There are two ways to render post effects:

- sequential: rendering is split into separate steps, and each step applies exactly one post effect to the image;

- batch: the intermediate result of each effect is rendered first, and then all post effects are applied together in a single final step.

Sequential rendering is easier to implement and more convenient to configure. It is trivially implemented as a list of post-effect objects whose rendering order is arbitrary in theory (though not in practice), and the same type of effect can even be applied several times. In practice, however, these advantages matter only in isolated cases.

Batch rendering, on the other hand, is noticeably more efficient because it reduces the total number of memory accesses. This matters most on mobile platforms, where a higher computational load also means more heat (who would have thought). Even if the device manages the required frame rate, the player is unlikely to enjoy holding a hot “brick” in their hands.
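To see where the savings come from, here is a minimal Python sketch that counts full-screen texture reads and writes for a hypothetical chain of effects. The effect names and pass counts are illustrative assumptions, not the War Robots pipeline. The model assumes each prepass reads and writes one texture, that in the sequential scheme every effect gets its own full-screen pass (reading the current image plus its intermediates), and that in the batch scheme a single composition pass reads the source once plus every intermediate.

```python
from dataclasses import dataclass, field


@dataclass
class Effect:
    """Hypothetical description of one post effect for cost accounting."""
    name: str
    # (texture reads, texture writes) of each prepass, e.g. downsample + blur
    prepasses: list = field(default_factory=list)
    # intermediate textures the effect needs when it is finally applied
    extra_inputs: int = 0


def prepass_cost(effects):
    reads = sum(r for e in effects for r, _ in e.prepasses)
    writes = sum(w for e in effects for _, w in e.prepasses)
    return reads, writes


def sequential_cost(effects):
    """Each effect is applied in its own full-screen pass:
    read the current image plus its intermediates, write a new image."""
    reads, writes = prepass_cost(effects)
    for e in effects:
        reads += 1 + e.extra_inputs
        writes += 1
    return reads, writes


def batch_cost(effects):
    """One composition (uber) pass applies everything:
    read the source once plus every intermediate, write once."""
    reads, writes = prepass_cost(effects)
    reads += 1 + sum(e.extra_inputs for e in effects)
    writes += 1
    return reads, writes


effects = [
    Effect("bloom", prepasses=[(1, 1), (1, 1)], extra_inputs=1),
    Effect("color_grading"),
    Effect("vignette"),
]
print(sequential_cost(effects))  # (6, 5)
print(batch_cost(effects))       # (4, 3)
```

In this model, the batch scheme saves one full-screen read and one write for every effect after the first.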

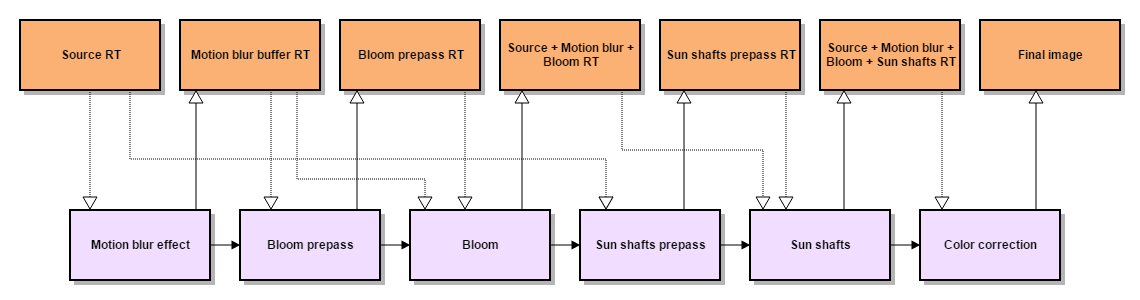

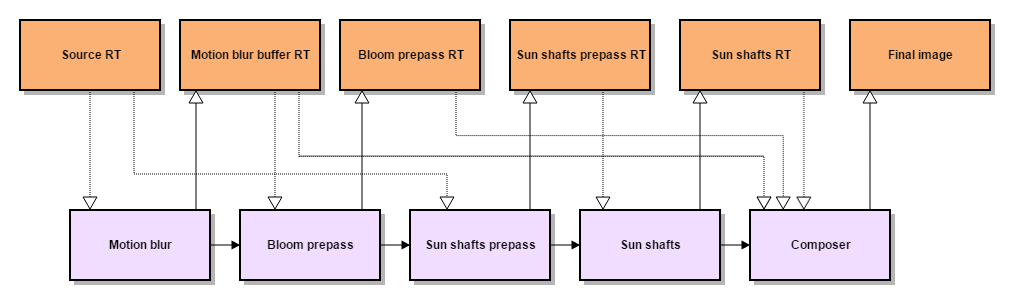

For clarity, here are the sequential and batch post-effect rendering schemes used in War Robots.

Sequential rendering: 8 reads, 6 writes.

Batch rendering: 7 reads, 5 writes.

Batch rendering for Unity is implemented in the Post Processing Stack module [2].

The order in which post effects are applied cannot be changed without modifying the code (though there is no need to), but individual post effects can be turned off. In addition, the module makes heavy use of Unity's built-in RenderTexture cache [3], so the code of a particular post effect usually contains only rendering instructions.

Each post effect requests its resources directly during rendering and releases them when it finishes. This lets subsequent post effects reuse those resources, because the cache evicts only resources that have not been requested in the last few frames.
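The reuse pattern can be sketched as a toy pool in the spirit of Unity's `RenderTexture.GetTemporary` / `RenderTexture.ReleaseTemporary`. This is a minimal Python model under stated assumptions, not Unity's implementation: textures are matched only by a hypothetical `(width, height, format)` key, and `max_idle_frames` is an assumed eviction threshold.

```python
from dataclasses import dataclass


@dataclass
class PooledTexture:
    key: tuple            # (width, height, format) used for matching
    last_used_frame: int  # frame in which it was last requested or released


class TemporaryTexturePool:
    """Toy pool: reuse released textures, evict ones idle for too long."""

    def __init__(self, max_idle_frames=2):
        self.max_idle_frames = max_idle_frames
        self.frame = 0
        self.free = []      # released textures available for reuse
        self.created = 0    # how many real allocations were made

    def get(self, width, height, fmt):
        key = (width, height, fmt)
        for idx, tex in enumerate(self.free):
            if tex.key == key:
                del self.free[idx]
                tex.last_used_frame = self.frame
                return tex
        self.created += 1
        return PooledTexture(key, self.frame)

    def release(self, tex):
        tex.last_used_frame = self.frame
        self.free.append(tex)

    def end_frame(self):
        """Advance the frame counter and drop textures idle for too long."""
        self.frame += 1
        self.free = [t for t in self.free
                     if self.frame - t.last_used_frame <= self.max_idle_frames]


pool = TemporaryTexturePool()
for _ in range(3):                      # three frames
    a = pool.get(960, 540, "RGBA32")    # effect A's temporary target
    pool.release(a)                     # released as soon as A finishes
    b = pool.get(960, 540, "RGBA32")    # effect B gets the same texture back
    pool.release(b)
    pool.end_frame()
print(pool.created)  # 1
```

Because each effect releases its texture before the next one asks, one allocation serves the whole chain; a texture that stops being requested disappears after a few frames.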

The final stage of batch rendering is a composition effect that combines the results of all previous steps and renders them with a multi-variant "uber shader". In Unity3D, such a shader can be built with the #pragma multi_compile or #pragma shader_feature preprocessor directives.

Overall we liked the Post Processing Stack, but it still needed some tinkering. We needed a scalable module that would let us add or replace post effects (including prepasses) and modify both the hardcoded pipeline that defines the rendering sequence and the composition uber shader. We also separated each effect's quality settings from its parameters on a particular stage.
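Why the uber shader is "multi-variant" becomes clear from counting: `multi_compile` compiles every combination of the keywords listed on its lines, so N independent on/off effects yield 2^N variants. `shader_feature` enumerates combinations the same way, but variants that no material uses are left out of builds. The Python sketch below only enumerates combinations; the keyword names are hypothetical.

```python
from itertools import product

# Hypothetical keyword sets, one per "#pragma multi_compile" line; "_" means
# "no keyword", as in "#pragma multi_compile _ BLOOM".
MULTI_COMPILE_LINES = [
    ["_", "BLOOM"],
    ["_", "SUN_SHAFTS"],
    ["_", "VIGNETTE"],
]


def shader_variants(lines):
    """Every combination of one keyword per line becomes a compiled variant."""
    variants = []
    for combo in product(*lines):
        variants.append(tuple(k for k in combo if k != "_"))
    return variants


variants = shader_variants(MULTI_COMPILE_LINES)
print(len(variants))  # 8
print(variants[-1])   # ('BLOOM', 'SUN_SHAFTS', 'VIGNETTE')
```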

Fillrate optimization

The main rendering method in post-processing is blitting: the specified shader is applied to every fragment of the texture used as the render target. Rendering performance therefore depends on the size of the texture and the computational cost of the shader. The simplest way to improve performance, reducing the texture size, degrades post-processing quality.

But if you know in advance that only a certain area of the texture needs to be rendered, you can optimize the process, for example, by replacing blitting with rendering a 3D model. Nothing prevents you from using viewport settings instead, but a 3D model differs from a blit in that it carries more per-vertex data, which in turn allows more advanced vertex shaders.
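A quick worked example of the resolution trade-off: a blit shades one fragment per render-target texel, so downscaling by a factor on each axis divides the fragment count (and the memory traffic) by roughly the square of that factor. The 1920x1080 target below is only an illustrative assumption.

```python
def blit_fragments(width, height, downscale=1):
    """Fragments shaded by one full-screen blit into a render target
    downscaled by an integer factor on each axis."""
    return (width // downscale) * (height // downscale)


full = blit_fragments(1920, 1080)
half = blit_fragments(1920, 1080, 2)
print(full, half, full // half)  # 2073600 518400 4
```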
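The gain from drawing geometry instead of a full-screen blit is roughly the ratio of covered pixels to screen pixels. The sketch assumes an axis-aligned screen-space rectangle clipped to the render target and ignores rasterization details such as quad overdraw; the billboard size is a hypothetical example.

```python
def covered_fragments(rect, width, height):
    """Pixels rasterized for a screen-space rectangle (x0, y0, x1, y1),
    clipped to a width x height render target."""
    x0, y0, x1, y1 = rect
    cw = max(0, min(x1, width) - max(x0, 0))
    ch = max(0, min(y1, height) - max(y0, 0))
    return cw * ch


W, H = 1920, 1080
sun_quad = (100, 100, 356, 356)  # hypothetical 256x256 billboard
ratio = covered_fragments(sun_quad, W, H) / (W * H)
print(ratio)  # roughly 3% of a full-screen blit
```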

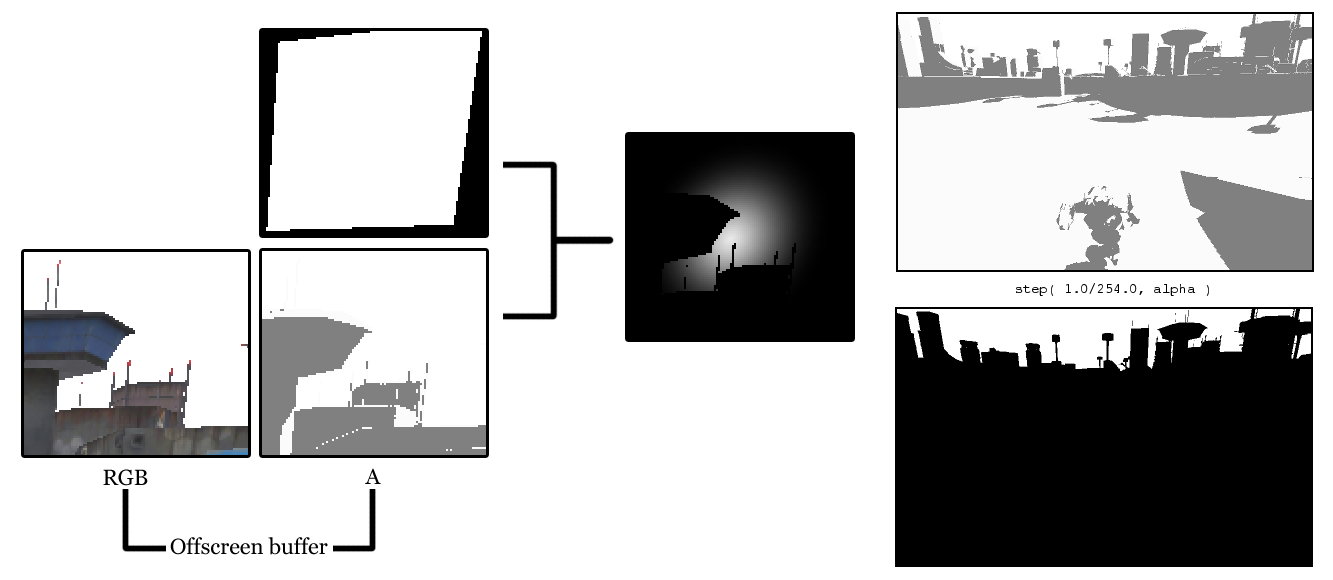

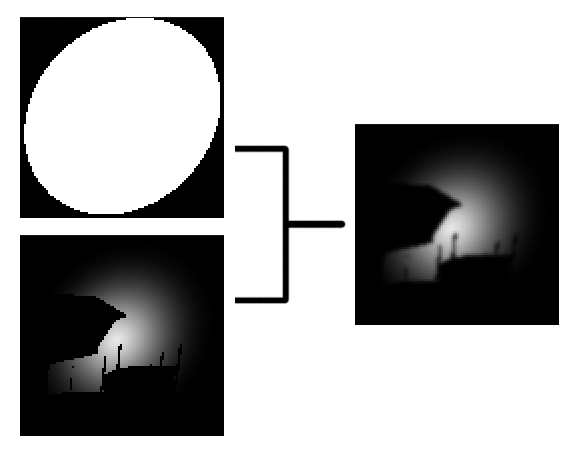

This is what we did with the sun light-scattering post effect [4]. We simplified the original prepass, replacing it with the rendering of a billboard with a "sun" texture. The billboard fragments hidden behind scene objects are masked out with a full-screen mask, which also serves as the shadow buffer (I will say more about shadow rendering later).

Right: the shadow buffer and the mask obtained from it by applying a step function. All texels whose alpha is less than 1 occlude the “sun”.
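The masking in the prepass shader reduces to HLSL's `step(edge, x)`, which returns 1 where `x >= edge` and 0 otherwise. Here is a CPU mirror of that single line, as a minimal sketch. The function names are hypothetical, and the threshold is the same `1 - 1/255` constant the shader uses.

```python
ALPHA_THRESHOLD = 1.0 - 1.0 / 255.0   # AlphaThreshold in the prepass shader


def step(edge, x):
    """HLSL step(): 1 where x >= edge, otherwise 0."""
    return 1.0 if x >= edge else 0.0


def sun_prepass(sun_texel, sun_color, shadow_alpha):
    """Sun billboard color, zeroed where the offscreen buffer's alpha
    marks the fragment as occluded."""
    mask = step(ALPHA_THRESHOLD, shadow_alpha)
    return tuple(s * c * mask for s, c in zip(sun_texel, sun_color))
```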

```hlsl
#include "UnityCG.cginc"

struct appdata
{
    float4 vertex : POSITION;
    half4 texcoord : TEXCOORD0;
};

struct v2f
{
    float4 pos : SV_POSITION;
    half4 screenPos : TEXCOORD0;
    half2 uv : TEXCOORD1;
};

sampler2D _SunTex;
sampler2D _WWROffscreenBuffer;
half4 _SunColor;

v2f vertSunShaftsPrepass(appdata v)
{
    v2f o;
    o.pos = UnityObjectToClipPos(v.vertex);
    o.screenPos = ComputeScreenPos(o.pos);
    o.uv = v.texcoord.xy;
    return o;
}

fixed4 fragSunShaftsPrepass(v2f i) : SV_Target
{
    // Texels of _WWROffscreenBuffer whose alpha == 1 do not occlude the sun
    const half AlphaThreshold = 0.99607843137; // 1 - 1.0/255.0
    fixed4 result = tex2D(_SunTex, i.uv) * _SunColor;
    half shadowSample = tex2Dproj(_WWROffscreenBuffer, UNITY_PROJ_COORD(i.screenPos)).a;
    // step() is 1 only where shadowSample >= AlphaThreshold, i.e. the sun is visible
    return result * step(AlphaThreshold, shadowSample);
}
```

The prepass texture is also blurred by rendering a 3D model.
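The offsets (0.53805 and 2.06278 texels) and weights (0.44908 and 0.05092) in the blur shader that follows look like the linear-sampling trick. Each bilinear fetch between two texels stands in for two discrete taps, so 4 fetches cover a 7-texel kernel. That reading is our interpretation, not something the article states. The Python sketch checks it on a 1D row: it splits the fetches back into texel weights (which come out close to a Gaussian with sigma of about 1) and confirms that both forms give the same result. Function names are hypothetical.

```python
O1, O2 = 0.53805, 2.06278   # tap offsets in texels (from the shader)
W1, W2 = 0.44908, 0.05092   # tap weights (from the shader)


def bilinear(row, x):
    """Linear interpolation of a 1D texture row at texel coordinate x (clamped)."""
    x = min(max(x, 0.0), len(row) - 1.0)
    i0 = int(x)
    i1 = min(i0 + 1, len(row) - 1)
    t = x - i0
    return row[i0] * (1.0 - t) + row[i1] * t


def blur4(row, x):
    """The shader's 4 bilinear fetches along one axis."""
    return ((bilinear(row, x + O1) + bilinear(row, x - O1)) * W1
            + (bilinear(row, x + O2) + bilinear(row, x - O2)) * W2)


def equivalent_kernel():
    """Split every bilinear fetch back into its two texels: 7 taps, offsets -3..3."""
    k = [0.0] * 7
    for off, w in ((O1, W1), (O2, W2)):
        lo, frac = int(off), off - int(off)
        for sign in (1, -1):
            k[3 + sign * lo] += w * (1.0 - frac)
            k[3 + sign * (lo + 1)] += w * frac
    return k


def blur7(row, x):
    """Discrete 7-tap convolution at integer texel x (no edge handling)."""
    k = equivalent_kernel()
    return sum(k[j] * row[x + j - 3] for j in range(7))


print([round(w, 4) for w in equivalent_kernel()])
# [0.0032, 0.0477, 0.2416, 0.4149, 0.2416, 0.0477, 0.0032]
```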

```hlsl
#include "UnityCG.cginc"

struct appdata
{
    float4 vertex : POSITION;
};

struct v2f
{
    float4 pos : SV_POSITION;
    half4 screenPos : TEXCOORD0;
};

sampler2D _PrePassTex;
half4 _PrePassTex_TexelSize;
half4 _BlurDirection;

v2f vertSunShaftsBlurPrepass(appdata v)
{
    v2f o;
    o.pos = UnityObjectToClipPos(v.vertex);
    o.screenPos = ComputeScreenPos(o.pos);
    return o;
}

fixed4 fragSunShaftsBlurPrepass(v2f i) : SV_Target
{
    half2 uv = i.screenPos.xy / i.screenPos.w;
    // Two symmetric pairs of taps along _BlurDirection, offsets in texels
    half2 blurOffset1 = _BlurDirection.xy * _PrePassTex_TexelSize.xy * 0.53805;
    half2 blurOffset2 = _BlurDirection.xy * _PrePassTex_TexelSize.xy * 2.06278;
    half2 uv0 = uv + blurOffset1;
    half2 uv1 = uv - blurOffset1;
    half2 uv2 = uv + blurOffset2;
    half2 uv3 = uv - blurOffset2;
    // Weights sum to 1: 2 * (0.44908 + 0.05092)
    return (tex2D(_PrePassTex, uv0) + tex2D(_PrePassTex, uv1)) * 0.44908
         + (tex2D(_PrePassTex, uv2) + tex2D(_PrePassTex, uv3)) * 0.05092;
}
```

Of course, we went all the way: the final pass is also rendered as a 3D model. Unlike the previous cases, which could be replaced by blitting into a viewport if desired, here the 3D model carries additional data (vertex color) that the effect shader uses.
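The loop in the final-pass shader that follows fetches only one texel per step (`sample2`). It synthesizes a midpoint (`sample1`) by averaging and accumulates three samples with a geometrically decaying weight. The last sample of one step becomes `sample0` of the next, so it is accumulated twice. This Python sketch mirrors only that bookkeeping. The parameter values are hypothetical.

```python
def radial_blur_accumulation(num_steps, weight, decay):
    """Mirror the radial-blur loop's bookkeeping: returns the number of
    texture fetches and the weight given to each accumulated sample."""
    fetches = 1                # sample0 is fetched before the loop
    weights = []
    illumination_decay = 1.0
    for _ in range(num_steps):
        fetches += 1           # sample2: the only fetch inside the loop
        for _ in range(3):     # sample0, interpolated sample1, sample2
            weights.append(illumination_decay * weight)
            illumination_decay *= decay
    return fetches, weights


fetches, weights = radial_blur_accumulation(num_steps=8, weight=0.5, decay=0.95)
print(fetches, len(weights))  # 9 24
```

The total weight is a geometric series, `_Weight * (1 - _Decay^(3 * _NumSteps)) / (1 - _Decay)`, which `_Exposure` then scales. So 24 weighted samples cost 9 fetches.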

```hlsl
#include "UnityCG.cginc"

struct appdata
{
    float4 vertex : POSITION;
    float4 color : COLOR;
};

struct v2f
{
    float4 pos : SV_POSITION;
    float4 color : COLOR;
    float4 screenPos : TEXCOORD0;
};

sampler2D _PrePassTex;
float4 _SunScreenPos;
int _NumSamples;
int _NumSteps;
float _Density;
float _Weight;
float _Decay;
float _Exposure;

v2f vertSunShaftsRadialBlur(appdata v)
{
    v2f o;
    o.pos = UnityObjectToClipPos(v.vertex);
    o.screenPos = ComputeScreenPos(o.pos);
    o.color = v.color; // per-vertex data used by the fragment shader
    return o;
}

float4 fragSunShaftsRadialBlur(v2f i) : SV_Target
{
    float4 color = i.color;
    float2 uv = i.screenPos.xy / i.screenPos.w;
    // Step from the fragment towards the sun's screen position
    float2 deltaTexCoords = (uv - _SunScreenPos.xy) / float(_NumSamples) * _Density;
    float illuminationDecay = 1.0;
    float4 result = 0;
    float4 sample0 = tex2D(_PrePassTex, uv);
    // Loop counter renamed so it does not shadow the input struct 'i'
    for (int s = 0; s < _NumSteps; s++)
    {
        uv -= deltaTexCoords * 2;
        float4 sample2 = tex2D(_PrePassTex, uv);
        float4 sample1 = (sample0 + sample2) * 0.5; // interpolated midpoint, no fetch
        result += sample0 * illuminationDecay * _Weight;
        illuminationDecay *= _Decay;
        result += sample1 * illuminationDecay * _Weight;
        illuminationDecay *= _Decay;
        result += sample2 * illuminationDecay * _Weight;
        illuminationDecay *= _Decay;
        sample0 = sample2;
    }
    result *= _Exposure * color;
    return result;
}
```

Dynamic shadow optimization

Expensive as post effects are, dynamic shadows are often even more demanding. This is due not only to the computational cost of the corresponding shaders, but also to the extra full-screen rendering pass needed to obtain smoothed shadows.

Shadowing for an image fragment in the Shadow Mapping technique is usually computed with a PCF filter [5]. However, PCF gives a result that needs no additional smoothing only with a very large kernel, which is unacceptable on mobile platforms. The more advanced Variance Shadow Mapping method requires support for partial-derivative instructions and bilinear filtering of floating-point textures [6].
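A minimal CPU sketch of percentage-closer filtering shows why the kernel size hurts. Each fragment performs (2r+1)^2 depth comparisons and averages the pass/fail results, so a kernel wide enough to look soft on its own quickly becomes too expensive. GPUs typically do this with hardware comparison samplers; the function and its `bias` parameter here are illustrative.

```python
def pcf(shadow_map, x, y, fragment_depth, radius, bias=0.0):
    """Percentage-closer filtering: fraction of shadow-map texels in a
    (2*radius+1)^2 window that do NOT occlude the fragment (1 = fully lit)."""
    h, w = len(shadow_map), len(shadow_map[0])
    lit = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            sx = min(max(x + dx, 0), w - 1)   # clamp to the map edges
            sy = min(max(y + dy, 0), h - 1)
            if fragment_depth - bias <= shadow_map[sy][sx]:
                lit += 1
    return lit / (2 * radius + 1) ** 2
```

With `radius=1` that is 9 comparisons per fragment, with `radius=3` already 49.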

To obtain soft shadows, the entire visible scene is rendered twice. First, only the shadows are rendered into an offscreen buffer and a smoothing filter is applied to it. Then the objects' colors are rendered to the screen, taking into account the shadow from the offscreen buffer. This doubles the load on both the CPU (culling, sorting, driver calls) and the GPU.

As one solution to this problem, we decided to get rid of the double scene render without switching to deferred lighting.

First, we render the image into an intermediate RGBA buffer (1). The alpha value is the ratio of the fragment's color brightness in shadow to its brightness without shadow (2). Then, using a command buffer, we take control at the moment opaque geometry rendering finishes and read the alpha from the buffer. Next, we smooth it (3) and modulate the color channels of the intermediate buffer with the smoothed shadows (4). After that, the Unity pipeline resumes: transparent objects and the skybox are rendered (5).

This trick slightly degrades color in shadowed areas, but careful choice of what is written to alpha kept this effect to a minimum.
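Steps (3) and (4) can be sketched on the CPU as "blur the alpha channel, then multiply RGB by it". This is a minimal illustration under two assumptions. First, alpha already holds the per-pixel shadow brightness factor (1 where lit, below 1 where shadowed). Second, a 3x3 box blur stands in for the smoothing filter, which the article does not specify. On the GPU the work would run from a command buffer hooked after opaque rendering; Unity's `CameraEvent.AfterForwardOpaque` is a natural hook, though the article does not name one.

```python
def box_blur(alpha, radius=1):
    """Clamp-to-edge box blur of a 2D list of floats (stand-in filter)."""
    h, w = len(alpha), len(alpha[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc, n = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += alpha[yy][xx]
                    n += 1
            out[y][x] = acc / n
    return out


def soft_shadow_composite(rgba):
    """Step (3): smooth the alpha channel. Step (4): modulate RGB by it.
    Assumes alpha already holds the per-pixel shadow brightness factor."""
    alpha = [[px[3] for px in row] for row in rgba]
    smooth = box_blur(alpha)
    return [[(r * s, g * s, b * s) for (r, g, b, _), s in zip(row, srow)]
            for row, srow in zip(rgba, smooth)]
```

A single hard-shadowed pixel (alpha 0.1) surrounded by lit ones ends up at (8 + 0.1) / 9 of its brightness, so the shadow edge is spread over the neighborhood instead of cutting off sharply.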
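What ends up in alpha can be checked numerically with a CPU mirror of the fragment snippet that follows. It computes the brightness of the ambient-only (fully shadowed) color relative to the lit color. The luminance weights are an assumption: Rec. 709 coefficients stand in for Unity's `Luminance()`, whose weights depend on the color space. The function names are hypothetical.

```python
# Stand-in luminance weights (Rec. 709); Unity's Luminance() may differ.
LUMA = (0.2126, 0.7152, 0.0722)


def luminance(rgb):
    return sum(c * w for c, w in zip(rgb, LUMA))


def saturate(x):
    return min(max(x, 0.0), 1.0)


def shade_with_shadow_alpha(albedo, ambient, light, diff, spec, shadow):
    """CPU mirror of the shading snippet: returns (rgb, alpha)."""
    amb_diffuse = [a * m for a, m in zip(albedo, ambient)]
    diffuse_color = [l * diff + m for l, m in zip(light, ambient)]
    specular_color = [l * spec * shadow for l in light]
    rgb = [saturate(a * d + s)
           for a, d, s in zip(albedo, diffuse_color, specular_color)]
    # max() guards against division by zero for black texels
    alpha = luminance([ad / max(c, 1.0 / 255.0) for ad, c in zip(amb_diffuse, rgb)])
    return rgb, alpha


rgb, alpha = shade_with_shadow_alpha((0.5, 0.5, 0.5), (0.25, 0.25, 0.25),
                                     (1.0, 1.0, 1.0), diff=1.0, spec=0.0, shadow=1.0)
print(rgb, alpha)  # lit color 0.625 per channel; alpha close to 0.2
```

With no direct light (`diff = 0`, `spec = 0`), the lit and shadowed colors coincide and alpha is 1, so unlit regions are left untouched.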

```hlsl
// shadow - shadow factor, 0..1
// spec   - specular lighting term
// diff   - diffuse lighting term
fixed4 c = tex2D(_MainTex, i.uv);
fixed3 ambDiffuse = c.rgb * UNITY_LIGHTMODEL_AMBIENT.rgb;
fixed3 diffuseColor = _LightColor0.rgb * diff + UNITY_LIGHTMODEL_AMBIENT.rgb;
fixed3 specularColor = _LightColor0.rgb * spec * shadow;
c.rgb = saturate(c.rgb * diffuseColor + specularColor);
// Alpha: brightness of the ambient-only (shadowed) color relative to the lit color;
// max() guards against division by zero for black texels
c.a = Luminance(ambDiffuse / max(c.rgb, 1.0 / 255.0));
```

As a result, we got a noticeable performance gain (10-15%) on mid-range devices (mostly Android), and on a number of devices heat output decreased. This technique is an interim solution until we switch to deferred lighting.

For shooting promo material we still use the higher-quality option, because degraded color rendition is undesirable there and a PC has resources to spare. To make the shadow softer in this case, we apply it with a formula that takes LDotN (the dot product of the light direction and the normal) into account, which gives a smoother transition in lit areas.

```hlsl
fixed shLDotN = lerp(clamp(shadow, 0, LDotN), LDotN * shadow, 1 - LDotN);
```

The price is a slightly washed-out shadow where blurring leaves it not completely black, but the transition into penumbra is smoother.
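Evaluating the `shLDotN` formula at a few points shows its behavior. Python's `lerp` and `clamp` below mirror the HLSL intrinsics (`lerp(a, b, t) = a + (b - a) * t`). At LDotN = 1 and LDotN = 0, the result equals the plain product `LDotN * shadow`. In between, it stays brighter, which softens the falloff into shadow. The sample values are arbitrary.

```python
def clamp(x, lo, hi):
    return min(max(x, lo), hi)


def lerp(a, b, t):
    return a + (b - a) * t


def sh_ldotn(shadow, ldotn):
    """Mirror of: lerp(clamp(shadow, 0, LDotN), LDotN * shadow, 1 - LDotN)."""
    return lerp(clamp(shadow, 0.0, ldotn), ldotn * shadow, 1.0 - ldotn)


print(sh_ldotn(0.5, 0.5), 0.5 * 0.5)  # 0.375 0.25
```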

Links

[1] GPU Gems developer.nvidia.com/gpugems/GPUGems/gpugems_pref01.html

[2] Unity3D Post Processing Stack github.com/Unity-Technologies/PostProcessing

[3] RenderTexture cache docs.unity3d.com/ScriptReference/RenderTexture.html

[4] Volumetric light scattering as Post-Process http.developer.nvidia.com/GPUGems3/gpugems3_ch13.html

[5] Percentage-close filtering http.developer.nvidia.com/GPUGems/gpugems_ch11.html

[6] Summed-Area Variance Shadow Maps http.developer.nvidia.com/GPUGems3/gpugems3_ch08.html

PS

Special thanks to Igor Polishchuk, who invented all the shadow tricks described here and also helped write this article.

Source: https://habr.com/ru/post/327442/
