Practical application of ELK. Configuring logstash

Introduction

While expanding yet another system, we faced the need to process a large number of different logs. We chose ELK as the tool. This article describes our experience setting up this stack.

We do not aim to describe all of its capabilities; we want to concentrate on solving practical problems. The reason is that, despite a fairly large amount of documentation and ready-made images, there are many pitfalls. At least, we ran into them.

We deployed the stack through docker-compose. Moreover, we had a well-written docker-compose.yml that let us bring the stack up with almost no problems. It seemed that victory was close: we would tweak it a little to fit our needs and be done.

Unfortunately, our attempt to tune the system to receive and process logs from our application did not succeed right away. So we decided to study each component separately and then return to how they connect.

So, let's start with logstash.

Environment, Deployment, Logstash Launch in Container

For deployment we use docker-compose. The experiments described here were conducted on macOS and Ubuntu 18.04.

The logstash image specified in our original docker-compose.yml is docker.elastic.co/logstash/logstash:6.3.2.

We will use it for the experiments.

To launch logstash, we wrote a separate docker-compose.yml. Of course, we could have launched the image from the command line, but we were solving a specific task in which everything is run from docker-compose.

Briefly about configuration files

According to the documentation, logstash can be run either for a single channel (pipeline), in which case it is given a *.conf file, or for several channels, in which case it is given a pipelines.yml file that, in turn, refers to a .conf file for each channel.

We went the second way. It seemed more versatile and scalable. So we created pipelines.yml and a pipelines directory, into which we put the .conf files for each channel.

Inside the container there is one more configuration file, logstash.yml. We do not touch it and use it as is.

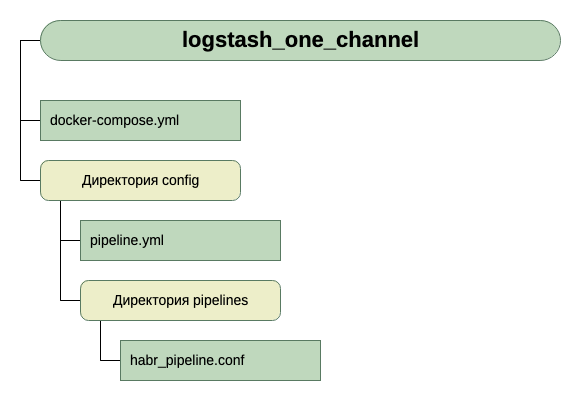

So, our directory structure:

For input, for now, we assume tcp on port 5046, and for output we will use stdout.

This is a simple configuration for the first run, because the initial task is just to get it running.

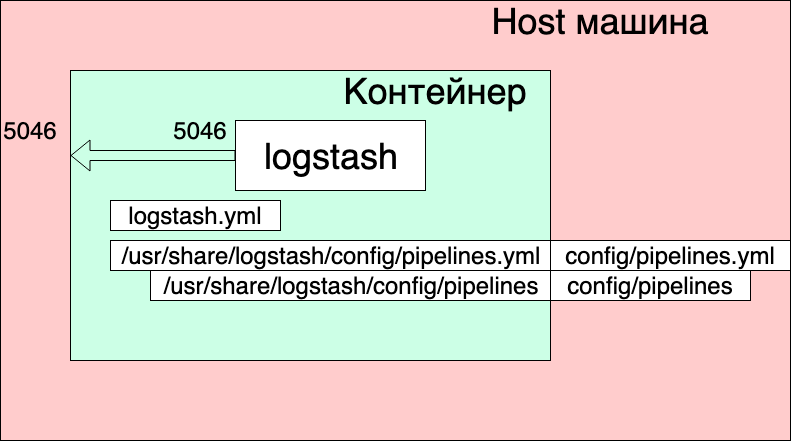

So, we have this docker-compose.yml

    version: '3'
    networks:
      elk:
    volumes:
      elasticsearch:
        driver: local
    services:
      logstash:
        container_name: logstash_one_channel
        image: docker.elastic.co/logstash/logstash:6.3.2
        networks:
          - elk
        ports:
          - 5046:5046
        volumes:
          - ./config/pipelines.yml:/usr/share/logstash/config/pipelines.yml:ro
          - ./config/pipelines:/usr/share/logstash/config/pipelines:ro

What do we see here?

- The networks and volumes were taken from the original docker-compose.yml (the one that starts the whole stack), and I think they do not significantly affect the overall picture here.

- We create one service, logstash, from the image docker.elastic.co/logstash/logstash:6.3.2 and name its container logstash_one_channel.

- We forward port 5046 into the container, to the same internal port.

- We map our channel configuration file ./config/pipelines.yml to /usr/share/logstash/config/pipelines.yml inside the container, where logstash will pick it up, and make it read-only, just in case.

- We map the directory ./config/pipelines, which holds the files with the channel settings, to /usr/share/logstash/config/pipelines and also make it read-only.

The pipelines.yml file

    - pipeline.id: HABR
      pipeline.workers: 1
      pipeline.batch.size: 1
      path.config: "./config/pipelines/habr_pipeline.conf"

It describes one channel with the HABR identifier and the path to its configuration file.

Finally, the file ./config/pipelines/habr_pipeline.conf:

    input {
      tcp {
        port => "5046"
      }
    }
    filter {
      mutate {
        add_field => [ "habra_field", "Hello Habr" ]
      }
    }
    output {
      stdout {
      }
    }

Let's not go into its description yet and just try to run it:

    docker-compose up

What do we see?

The container has started. We can check that it works:

    echo '13123123123123123123123213123213' | nc localhost 5046

And we see the response in the container console:

But at the same time, we also see:

    logstash_one_channel | [2019-04-29T11:28:59,790][ERROR][logstash.licensechecker.licensereader] Unable to retrieve license information license server {:message=>"Elasticsearch Unreachable: [http://elasticsearch:9200/][Manticore::ResolutionFailure] elasticsearch", ...
    logstash_one_channel | [2019-04-29T11:28:59,894][INFO][logstash.pipeline] Pipeline started successfully {:pipeline_id=>".monitoring-logstash", :thread=>"#<Thread:0x119abb86 run>"}
    logstash_one_channel | [2019-04-29T11:28:59,988][INFO][logstash.agent] Pipelines running {:count=>2, :running_pipelines=>[:HABR, :".monitoring-logstash"], :non_running_pipelines=>[]}
    logstash_one_channel | [2019-04-29T11:29:00,015][ERROR][logstash.inputs.metrics] X-Pack is installed on Logstash but not on Elasticsearch. Please install X-Pack on Elasticsearch to use the monitoring feature. Other features may be available.
    logstash_one_channel | [2019-04-29T11:29:00,526][INFO][logstash.agent] Successfully started Logstash API endpoint {:port=>9600}
    logstash_one_channel | [2019-04-29T11:29:04,478][INFO][logstash.outputs.elasticsearch] Elasticsearch connection is working {:healthcheck_url=>http://elasticsearch:9200/, :path=>"/"}
    logstash_one_channel | [2019-04-29T11:29:04,487][WARN][logstash.outputs.elasticsearch] Attempted to resurrect connection. {:url=>"elasticsearch:9200/", :error_type=>LogStash::Outputs::ElasticSearch::HttpClient::Pool::HostUnreachableError, :error=>"Elasticsearch Unreachable: [http://elasticsearch:9200/][Manticore::ResolutionFailure] elasticsearch"}
    logstash_one_channel | [2019-04-29T11:29:04,704][INFO][logstash.licensechecker.licensereader] Elasticsearch connection is working {:healthcheck_url=>http://elasticsearch:9200/, :path=>"/"}
    logstash_one_channel | [2019-04-29T11:29:04,710][WARN][logstash.licensechecker.licensereader] Attempted to resurrect connection to dead instance. {:url=>"elasticsearch:9200/", :error_type=>LogStash::Outputs::ElasticSearch::HttpClient::Pool::HostUnreachableError, :error=>"Elasticsearch Unreachable: [http://elasticsearch:9200/][Manticore::ResolutionFailure] elasticsearch"}

And the log keeps scrolling with messages like these.

Three kinds of messages stand out here: the report that the pipeline started successfully, the error messages, and the warnings about attempts to contact elasticsearch:9200.

This happens because the logstash.conf included in the image contains a check for elasticsearch availability. After all, logstash assumes that it works as part of the ELK stack, and we have separated it.

You can work like this, but it is inconvenient.

The solution is to disable this check through the XPACK_MONITORING_ENABLED environment variable.

Make a change to docker-compose.yml and run it again:

    version: '3'
    networks:
      elk:
    volumes:
      elasticsearch:
        driver: local
    services:
      logstash:
        container_name: logstash_one_channel
        image: docker.elastic.co/logstash/logstash:6.3.2
        networks:
          - elk
        environment:
          XPACK_MONITORING_ENABLED: "false"
        ports:
          - 5046:5046
        volumes:
          - ./config/pipelines.yml:/usr/share/logstash/config/pipelines.yml:ro
          - ./config/pipelines:/usr/share/logstash/config/pipelines:ro

Now everything is fine. The container is ready for experiments.

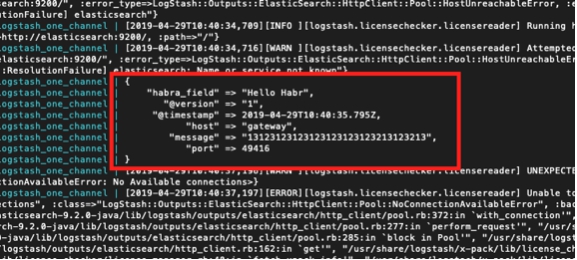
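For repeated tests, the nc one-liner used in this article can be replaced by a few lines of Python. The following is a minimal sketch, not part of the original setup: the helper name send_line is hypothetical, and it assumes the tcp input on port 5046 accepts newline-terminated text, as the nc test suggests.

```python
import socket


def send_line(text, host="localhost", port=5046, timeout=5.0):
    """Send one newline-terminated line over TCP, like `echo text | nc host port`.

    Returns the number of bytes sent.
    """
    payload = (text + "\n").encode("utf-8")
    # create_connection opens the TCP socket; the with-block closes it afterwards.
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(payload)
    return len(payload)


# Example call (needs the container from this article to be running):
# send_line("13123123123123123123123213123213")
```

Wrapping the call in a function makes it easy to send a series of different test lines from a loop when experimenting with filters.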

We can send a message again from another console:

    echo '13123123123123123123123213123213' | nc localhost 5046

And see:

    logstash_one_channel | {
    logstash_one_channel |     "message" => "13123123123123123123123213123213",
    logstash_one_channel |     "@timestamp" => 2019-04-29T11:43:44.582Z,
    logstash_one_channel |     "@version" => "1",
    logstash_one_channel |     "habra_field" => "Hello Habr",
    logstash_one_channel |     "host" => "gateway",
    logstash_one_channel |     "port" => 49418
    logstash_one_channel | }

Working within one channel

So, we are up and running. Now we can actually spend time on configuring logstash itself. For now, let's leave pipelines.yml alone and see what can be achieved within a single channel.

I must say that the general principle of working with the channel configuration file is well described in the official manual.

If you prefer to read in Russian, we used this article (but its query syntax is outdated, keep that in mind).

Let's go in order, starting with the input section. We have already seen tcp at work. What else is interesting here?

Test messages using heartbeat

There is an interesting option to generate test messages automatically.

To do this, enable the heartbeat plugin in the input section.

    input {
      heartbeat {
        message => "HeartBeat!"
      }
    }

We turn it on and start receiving, once a minute:

    logstash_one_channel | {
    logstash_one_channel |     "@timestamp" => 2019-04-29T13:52:04.567Z,
    logstash_one_channel |     "habra_field" => "Hello Habr",
    logstash_one_channel |     "message" => "HeartBeat!",
    logstash_one_channel |     "@version" => "1",
    logstash_one_channel |     "host" => "a0667e5c57ec"
    logstash_one_channel | }

If we want to receive messages more often, we add the interval parameter.

This way we will receive a message every 10 seconds.

    input {
      heartbeat {
        message => "HeartBeat!"
        interval => 10
      }
    }

Getting data from a file

We also decided to look at the file mode. If it works well with files, then perhaps no agent is needed at all, at least for local use.

According to the description, it should work like tail -f, i.e. read new lines, or, optionally, read the whole file.

So, what we want to get:

- We want to receive lines that are appended to a single log file.

- We want to receive data written to several log files, while being able to tell which file each record came from.

- We want to check that when logstash is restarted, it does not receive this data again.

- We want to check that if logstash is stopped while data keeps being written to the files, then we receive this data once it is started again.

To run the experiment, we add one more line to docker-compose.yml, mounting the directory where we put the files.

    version: '3'
    networks:
      elk:
    volumes:
      elasticsearch:
        driver: local
    services:
      logstash:
        container_name: logstash_one_channel
        image: docker.elastic.co/logstash/logstash:6.3.2
        networks:
          - elk
        environment:
          XPACK_MONITORING_ENABLED: "false"
        ports:
          - 5046:5046
        volumes:
          - ./config/pipelines.yml:/usr/share/logstash/config/pipelines.yml:ro
          - ./config/pipelines:/usr/share/logstash/config/pipelines:ro
          - ./logs:/usr/share/logstash/input

And change the input section in habr_pipeline.conf:

    input {
      file {
        path => "/usr/share/logstash/input/*.log"
      }
    }

Start it:

    docker-compose up

To create the log files and write to them, we will use the command:

    echo '1' >> logs/number1.log

    logstash_one_channel | {
    logstash_one_channel |     "host" => "ac2d4e3ef70f",
    logstash_one_channel |     "habra_field" => "Hello Habr",
    logstash_one_channel |     "@timestamp" => 2019-04-29T14:28:53.876Z,
    logstash_one_channel |     "@version" => "1",
    logstash_one_channel |     "message" => "1",
    logstash_one_channel |     "path" => "/usr/share/logstash/input/number1.log"
    logstash_one_channel | }

Yes, it works!

We also see that the path field was added automatically. So later we will be able to filter records by it.

Let's try again:

    echo '2' >> logs/number1.log

    logstash_one_channel | {
    logstash_one_channel |     "host" => "ac2d4e3ef70f",
    logstash_one_channel |     "habra_field" => "Hello Habr",
    logstash_one_channel |     "@timestamp" => 2019-04-29T14:28:59.906Z,
    logstash_one_channel |     "@version" => "1",
    logstash_one_channel |     "message" => "2",
    logstash_one_channel |     "path" => "/usr/share/logstash/input/number1.log"
    logstash_one_channel | }

And now to another file:

    echo '1' >> logs/number2.log

    logstash_one_channel | {
    logstash_one_channel |     "host" => "ac2d4e3ef70f",
    logstash_one_channel |     "habra_field" => "Hello Habr",
    logstash_one_channel |     "@timestamp" => 2019-04-29T14:29:26.061Z,
    logstash_one_channel |     "@version" => "1",
    logstash_one_channel |     "message" => "1",
    logstash_one_channel |     "path" => "/usr/share/logstash/input/number2.log"
    logstash_one_channel | }

Great! The file was picked up, path was set correctly, everything is fine.

Stop logstash and start it again. We wait. Silence. That is, we do not receive these records again.

And now the boldest experiment.

Stop logstash and execute:

    echo '3' >> logs/number2.log
    echo '4' >> logs/number1.log

Start logstash again and see:

    logstash_one_channel | {
    logstash_one_channel |     "host" => "ac2d4e3ef70f",
    logstash_one_channel |     "habra_field" => "Hello Habr",
    logstash_one_channel |     "message" => "3",
    logstash_one_channel |     "@version" => "1",
    logstash_one_channel |     "path" => "/usr/share/logstash/input/number2.log",
    logstash_one_channel |     "@timestamp" => 2019-04-29T14:48:50.589Z
    logstash_one_channel | }
    logstash_one_channel | {
    logstash_one_channel |     "host" => "ac2d4e3ef70f",
    logstash_one_channel |     "habra_field" => "Hello Habr",
    logstash_one_channel |     "message" => "4",
    logstash_one_channel |     "@version" => "1",
    logstash_one_channel |     "path" => "/usr/share/logstash/input/number1.log",
    logstash_one_channel |     "@timestamp" => 2019-04-29T14:48:50.856Z
    logstash_one_channel | }

Hooray! Everything was picked up.

But a warning is in order. If the logstash container is removed (docker stop logstash_one_channel && docker rm logstash_one_channel), nothing will be picked up. The position up to which each file had been read was stored inside the container. When started from scratch, logstash will only accept new lines.

Reading existing files

Suppose we are running logstash for the first time, but we already have logs and would like to process them.

If we run logstash with the input section used above, we will get nothing. Logstash will process only new lines.

To pull in lines from existing files, add one more line to the input section:

    input {
      file {
        start_position => "beginning"
        path => "/usr/share/logstash/input/*.log"
      }
    }

There is a nuance: this affects only new files that logstash has not seen yet. For files that were already in its field of view, it has remembered their size and will take only new entries from them.
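The behaviour observed in these experiments can be modelled with a small Python sketch. It is a simplified illustration, not how logstash is actually implemented: the function name read_new_lines, the JSON state file and the start_position argument are assumptions made for the example, and it ignores details such as files that appear while logstash is already running (which, as the experiment above shows, are read in full).

```python
import json
import os


def read_new_lines(log_path, state_path, start_position="end"):
    """Return the lines appended to log_path since the previous call.

    state_path plays the role of the saved read position: a JSON file
    mapping log paths to byte offsets (a hypothetical format).
    """
    state = {}
    if os.path.exists(state_path):
        with open(state_path) as fh:
            state = json.load(fh)

    if log_path in state:
        # Known file: resume from the remembered offset.
        offset = state[log_path]
    elif start_position == "beginning":
        # Unseen file, explicitly asked to read it from the start.
        offset = 0
    else:
        # Unseen file, default behaviour: skip what is already there.
        offset = os.path.getsize(log_path)

    with open(log_path, "rb") as fh:
        fh.seek(offset)
        data = fh.read()
        state[log_path] = fh.tell()

    # Persist the new offset so that a "restart" does not re-read old lines.
    with open(state_path, "w") as fh:
        json.dump(state, fh)
    return data.decode("utf-8").splitlines()
```

Deleting the state file in this model corresponds to removing the container: the remembered offsets are gone, and with the default setting only lines written afterwards are returned.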

Let's stop here with the input section. There are many more options, but this is enough for our further experiments.

Routing and Data Conversion

Let's try to solve the following problem. Suppose messages arrive from one channel; some of them are informational and some are error messages. They differ by a tag: some are INFO, others ERROR.

We need to separate them at the output, i.e. write informational messages to one channel and error messages to another.

To do this, we move from the input section to filter and output.

In the filter section we will parse the incoming message into a hash (key-value pairs) that we can then work with, i.e. branch on conditions. And in the output section we will select messages and send each one to its own channel.

Parsing a message using grok

To parse text lines and extract a set of fields from them, the filter section has a special plugin, grok.

I do not aim to describe it in detail here (see the official documentation for that), so I will just give a simple example.

First, we need to decide on the format of the input lines. Mine look like this:

    1 INFO message1
    2 ERROR message2

That is, the identifier comes first, then INFO or ERROR, then a word without spaces.

It is simple, but enough to understand how it works.

So, in the filter section, in the grok plugin, we need to define a pattern for parsing our lines.

It will look like this:

    filter {
      grok {
        match => { "message" => ["%{INT:message_id} %{LOGLEVEL:message_type} %{WORD:message_text}"] }
      }
    }

In essence, this is a regular expression. It uses ready-made patterns such as INT, LOGLEVEL and WORD. Their descriptions, as well as other patterns, can be found in the grok documentation.

Now, after passing through this filter, our line is turned into a hash with three fields: message_id, message_type and message_text.

They will be shown in the output section.
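To see what such a pattern does, here is a rough Python equivalent using named groups. It is only a sketch: the regular expression is a simplified stand-in for INT, LOGLEVEL and WORD (the real grok definitions are broader), and parse_line is a hypothetical helper name.

```python
import re

# Simplified stand-ins for the grok patterns used above; they are
# illustrations, not the definitions shipped with logstash.
LINE_RE = re.compile(
    r"(?P<message_id>[+-]?\d+) "        # roughly INT
    r"(?P<message_type>INFO|ERROR) "    # a small subset of LOGLEVEL
    r"(?P<message_text>\w+)"            # roughly WORD
)


def parse_line(line):
    """Return the three named fields as a dict, or None if nothing matches."""
    match = LINE_RE.search(line)
    return match.groupdict() if match else None
```

For the line "1 INFO message1" this returns a dictionary with message_id "1", message_type "INFO" and message_text "message1". All values stay strings, which, as far as I know, is also what grok does unless a type conversion is requested in the pattern.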

Routing messages in the output section with the if command

In the output section, as we remember, we were going to split the messages into two streams. The INFO ones we will print to the console, and the errors we will write to a file.

How do we separate these messages? The problem statement already suggests the solution: we have a dedicated message_type field, which can take only two values, INFO and ERROR. We will branch on it with the if operator.

    if [message_type] == "ERROR" {
      # output to a file
    } else {
      # output to stdout
    }

Working with fields and operators is described in the corresponding section of the official manual.

Now, about the output itself.

Output to the console is straightforward: stdout {}

As for output to a file, remember that we run all this in a container, so for the result file to be accessible from the outside we need to mount its directory in docker-compose.yml.

In total, the output section of our file looks like this:

    output {
      if [message_type] == "ERROR" {
        file {
          path => "/usr/share/logstash/output/test.log"
          codec => line { format => "custom format: %{message}" }
        }
      } else {
        stdout {
        }
      }
    }

Add one more volume to docker-compose.yml for the output:

    version: '3'
    networks:
      elk:
    volumes:
      elasticsearch:
        driver: local
    services:
      logstash:
        container_name: logstash_one_channel
        image: docker.elastic.co/logstash/logstash:6.3.2
        networks:
          - elk
        environment:
          XPACK_MONITORING_ENABLED: "false"
        ports:
          - 5046:5046
        volumes:
          - ./config/pipelines.yml:/usr/share/logstash/config/pipelines.yml:ro
          - ./config/pipelines:/usr/share/logstash/config/pipelines:ro
          - ./logs:/usr/share/logstash/input
          - ./output:/usr/share/logstash/output

We start it, try it, and see the split into two streams.
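The branching in the output section can be mimicked in plain Python to check expectations before sending real lines. This is an illustrative sketch only: route is a hypothetical function, and events are modelled as dictionaries carrying the fields produced by the grok filter.

```python
def route(event):
    """Decide where an event goes: ("file", text) for errors, ("stdout", event) otherwise."""
    if event.get("message_type") == "ERROR":
        # Mirrors codec => line { format => "custom format: %{message}" }:
        # the file receives the original message with a fixed prefix.
        return "file", "custom format: " + event["message"]
    # Everything else, including events without message_type, goes to the console.
    return "stdout", event
```

Note that in this sketch an event without a message_type field (for example, a line the pattern could not parse) falls into the else branch, which is presumably what the configuration above does as well.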

Source: https://habr.com/ru/post/451264/
