Making gRPC friends with a long-lived project, PHP, and the frontend

A couple of years ago our small team was quietly working away on our hosting business. Over time it turned out that each service in the system had its own one-of-a-kind API. Eventually this became a problem, and we decided to redo everything.

We will talk about how to combine the external API with the internal one, and what to do if you have a lot of PHP code but still want to take advantage of gRPC.

There is a lot of talk these days about microservices and SOA in general. Our infrastructure is no exception: we are a hosting company, and our services let us manage almost a thousand servers.

Over time, more and more services appeared in our system: we became a domain registrar, so registration went into a separate service; there were a lot of server metrics, so we wrote a service that queries ClickHouse/InfluxDB; we needed to emulate running tasks "like through crontab" for users, so we wrote a service for that too. This will probably sound familiar to many.

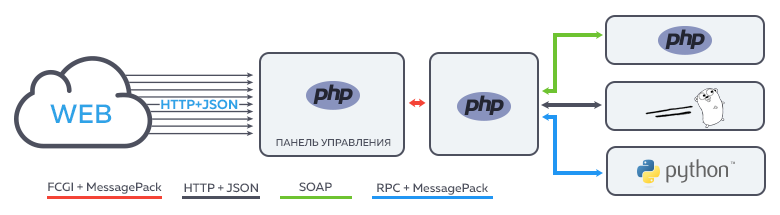

There are always plenty of incoming development tasks. The number of services grows smoothly and, seemingly, unnoticed. It is impossible to foresee every future nuance in advance, so one API was replaced by other, better ones. But the day came when it became apparent that we had bred too many protocols.

The more services and connections between them we had, the longer and harder it became to solve problems: we had to study several different APIs, write clients for them, and only then begin the real work.

Oh, and documentation is needed too. Otherwise dialogues like this take place in chat:

- Guys, how can I get user balance from billing?

- Make a call to billing/getBalance(customerId)

- How to get a list of services?

- I do not remember, look for the desired controller in

In short, we began to dream of a magical single standard and technology for building network APIs that would solve all these problems and save us time.

Defining the requirements

After some thought, we drew up a short list of requirements:

- The API description method used should be declarative.

- The result should be unambiguous and human-readable, because it has to go through code review.

- We need the ability to describe both a successful flow and errors. And this should be done explicitly for each method.

- Based on the description, you need to generate as much boring code as possible for the client and server.

After some searching, evaluation, and small experiments, we settled on gRPC. The framework is not new and has already been covered on Habr.

Out of the box, it met almost all of our requirements. In a nutshell:

- Declarative description of methods and data structures

- It is very readable and simple. The resulting .proto files are easy to code-review. The IDL syntax is close to that of popular programming languages

- Code generators are available for most popular programming languages (with one caveat, discussed below)

- gRPC is simply an RPC mechanism with no strict API organization requirements. This makes it possible to develop your own principles and guidelines based on the experience gained.

However, the ideal technology does not exist. For us, there were several stumbling blocks:

- We use PHP heavily, and it cannot act as a gRPC server;

- Our frontend still expects plain HTTP. At the time, we had to "proxy" frontend requests through a separate application that issued the right requests to the internal API. In most cases this is extra, boring work. We wanted to expose everything inside our system through one protocol, with automatic conversion to HTTP for the frontend.

Fortunately, we solved these problems quite easily. From here on I will assume that the reader is familiar with gRPC. If not, it is better to read the article mentioned above first.

What about OpenAPI? Yes, indeed: OpenAPI and the tooling provided by Swagger look enticing.

I should say right away that comparing OpenAPI and gRPC is not entirely fair. gRPC is primarily a framework that solves the technical problem of RPC interaction. It offers its own protocol, serialization method, service description language, and some tooling.

OpenAPI is primarily a specification that aims to become a single standard for describing interfaces. Although it is currently strongly oriented towards REST, there are proposals to add support for RPC. Perhaps in the future OpenAPI will become the modern equivalent of web services and WSDL.

Nevertheless, our team found several arguments in favor of using gRPC instead of OpenAPI:

- First, OpenAPI as a specification is currently focused on interfaces built on REST principles. In our system, most of the interactions between services are internal. Applying REST principles can often lead to unnecessary complication: it is not always possible to turn a method like `doSomethingVerySpecialShit` into a proper resource available at its own URL. Well, of course you can (by converting the verb-method into a noun), but it will look very alien. There are no such problems with gRPC;

- Secondly, let's be honest: few developers can design such an API properly and consistently. At interviews, people often cannot explain what the abbreviation REST stands for =) Of course, this is a weak argument: with the right expertise in the company, everything can be taught. But we decided that, for now, our time was better spent on other tasks;

- Thirdly, it is more convenient for us to share service descriptions as simple, understandable files that can be read quickly and comfortably. In our opinion, Protobuf definitely wins here: thanks to its own IDL, the description of methods and structures is close to popular programming languages. OpenAPI proposes describing them in YAML or JSON. Although these formats are also easy to read, they remain data serialization formats rather than a full-fledged IDL;

- Fourth, gRPC has unidirectional and bidirectional streams. In OpenAPI, a similar role is played (as far as we could tell) by callbacks. They look similar, but judging by the proposals, full-fledged bidirectional streams have not been implemented yet, and we do sometimes use them (for example, to transfer large blobs in parts).

To sum up, OpenAPI turned out to be too broad for us and did not always offer adequate solutions. Recall that our ultimate goal was to move to a single technical and organizational standard for RPC. Adopting REST principles would have required rethinking and refactoring many things, which is a task in a completely different weight class.

You can also read, for example, this comparison article. In general, we agree with it.

Everybody loves PHP

As I said above, we have a lot of business logic written in PHP. If we turn to the documentation, we are in for a disappointment: because of its code execution model, PHP cannot act as a gRPC server (things like ReactPHP do not count). But it works well as a client, and if you feed a proto file with the service description to the code generator, it will dutifully generate classes for all structures (requests and responses). So the problem is quite solvable.

All that we found on the topic of PHP acting as a server was a discussion in Google Groups. In that discussion, one of the participants said that they were working on proxying gRPC to FastCGI (we wanted to keep using PHP-FPM). This was exactly what we were looking for. Unfortunately, we were unable to get in touch with them, find out the status of the project, or take part in it.

So we decided to write a small proxy that could accept requests and convert them to FastCGI. Since gRPC runs on top of HTTP/2 and a method call in it is essentially a regular HTTP request, the task is not complicated.

As a result, we quickly built such a proxy in Go. All it needs to run is a small config that says where to proxy to. We have published its code for everyone.

The proxy works as follows:

- Accept the request;

- Extract the Protobuf-serialized body from it;

- Build the headers for the FastCGI request;

- Send the FastCGI request to PHP-FPM;

- Process the request in PHP and build the response;

- Receive the response, convert it to gRPC, and send it back to the caller.
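The "extract the body" and "convert it to gRPC" steps come down to gRPC's message framing: on the wire, each message is prefixed with a one-byte compression flag and a four-byte big-endian length. Below is a minimal Go sketch of those two steps, assuming uncompressed unary messages. The function names `unframe` and `frame` are illustrative and are not taken from the published proxy.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

// unframe strips the 5-byte gRPC message prefix and returns the raw Protobuf payload.
func unframe(data []byte) ([]byte, error) {
	if len(data) < 5 {
		return nil, errors.New("frame shorter than the 5-byte prefix")
	}
	if data[0] != 0 {
		return nil, errors.New("compressed messages are not handled in this sketch")
	}
	size := binary.BigEndian.Uint32(data[1:5])
	if uint64(len(data)-5) < uint64(size) {
		return nil, errors.New("frame is truncated")
	}
	return data[5 : 5+int(size)], nil
}

// frame wraps a Protobuf payload in an uncompressed gRPC message frame.
func frame(payload []byte) []byte {
	out := make([]byte, 5+len(payload))
	out[0] = 0 // compression flag: not compressed
	binary.BigEndian.PutUint32(out[1:5], uint32(len(payload)))
	copy(out[5:], payload)
	return out
}

func main() {
	framed := frame([]byte("hello"))
	body, err := unframe(framed)
	fmt.Println(len(framed), string(body), err)
}
```

Because the payload is passed through as opaque bytes, a proxy built this way never needs the .proto files themselves.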

Thus, handling a request in PHP is very simple:

- The request data arrives in the request body (the `php://input` stream);

- The requested service and its method are passed in the query parameter `r` (we use Yii2, and its router expects the route in this parameter).

```proto
syntax = 'proto3';

package api.customer;

service CustomerService {
    rpc getSomeInfo(GetSomeInfoRequest) returns (GetSomeInfoResponse) {}
}

message GetSomeInfoRequest {
    string login = 1;
}

message GetSomeInfoResponse {
    string first_name = 2;
    string second_name = 3;
}
```

When `getSomeInfo` is requested, the `r` parameter will contain `api.customer.customer-service/get-some-info`.
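For illustration, the mapping from the gRPC request path (`/package.Service/Method`) to such a route could be sketched in Go as follows. This is only an assumption about how the conversion might be done: the helper names are hypothetical, and the simple per-letter rule would split acronyms such as `HTTPServer` into single letters.

```go
package main

import (
	"fmt"
	"strings"
	"unicode"
)

// kebab converts CamelCase or camelCase to kebab-case,
// e.g. "getSomeInfo" becomes "get-some-info".
func kebab(s string) string {
	var b strings.Builder
	for i, r := range s {
		if unicode.IsUpper(r) {
			if i > 0 {
				b.WriteByte('-')
			}
			r = unicode.ToLower(r)
		}
		b.WriteRune(r)
	}
	return b.String()
}

// routeFromPath maps a gRPC path of the form "/package.Service/Method"
// to a "package.service-name/method-name" route.
func routeFromPath(path string) (string, error) {
	parts := strings.Split(strings.TrimPrefix(path, "/"), "/")
	if len(parts) != 2 || parts[0] == "" || parts[1] == "" {
		return "", fmt.Errorf("unexpected gRPC path %q", path)
	}
	service, method := parts[0], parts[1]
	pkg := ""
	if i := strings.LastIndex(service, "."); i >= 0 {
		// Keep the package prefix (including the trailing dot) untouched.
		pkg, service = service[:i+1], service[i+1:]
	}
	return pkg + kebab(service) + "/" + kebab(method), nil
}

func main() {
	route, err := routeFromPath("/api.customer.CustomerService/getSomeInfo")
	fmt.Println(route, err)
}
```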

A condensed example of request processing in the application:

```php
<?php
// Route derived from the gRPC call, in the form:
// package.service-name/method-name
$route = $_GET['r'];

// Request body, serialized with Protobuf
$body = file_get_contents("php://input");

try {
    // Fail about 50% of the time to demonstrate error handling
    if (rand(0, 1)) {
        throw new \RuntimeException("Some error happened!");
    }

    // Deserialize the request, run the business logic,
    // and build the response
    $request = new GetSomeInfoRequest;
    $request->parse($body);

    $customer = findCustomer($request->getLogin());

    $response = (new GetSomeInfoResponse)
        ->setFirstName($customer->getFirstName());

    echo $response->serialize();
} catch (\Throwable $e) {
    // Report the error to the proxy.
    // The list of status codes can be found here:
    // https://github.com/grpc/grpc-go/blob/master/codes/codes.go
    $errorCode = 13;

    header("X-Grpc-Status: ERROR");
    header("X-Grpc-Error-Code: {$errorCode}");
    header("X-Grpc-Error-Description: {$e->getMessage()}");
}
```

As you can see, everything is quite simple. You can implement this logic in any popular framework and build a convenient layer of controllers in which all serialization and deserialization happens automatically.

```php
<?php
namespace app\api\controllers\customer\actions;

use app\api\base\Action;
// Classes generated from the .proto files
use app\generated\api\customer\GetSomeInfoRequest;
use app\generated\api\customer\GetSomeInfoResponse;

class GetSomeInfoAction extends Action
{
    public function run(GetSomeInfoRequest $request): GetSomeInfoResponse
    {
        // Business logic
        $customer = findCustomer($request->getLogin());

        // Build and return the response
        return (new GetSomeInfoResponse)
            ->setFirstName($customer->getFirstName())
            ->setSecondName($customer->getSecondName());
    }
}
```

Exception handling and conversion of exceptions into the corresponding gRPC status codes is implemented at the application level and happens automatically.

All the developer needs to do is create an Action and specify the expected request and response types in the signature of the run method.
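On the proxy side, the `X-Grpc-*` headers from the error-handling example have to be turned back into a gRPC status, which gRPC carries in the `grpc-status` and `grpc-message` trailers. Here is a hedged Go sketch of that interpretation step. The function and type names are hypothetical, and the fallback rules (a missing `X-Grpc-Status` header means OK, an unparsable code becomes 2, UNKNOWN) are assumptions, not the published proxy's documented behaviour.

```go
package main

import (
	"fmt"
	"net/http"
	"strconv"
)

// grpcStatus is the code/message pair that ends up in the
// grpc-status and grpc-message trailers.
type grpcStatus struct {
	Code    int
	Message string
}

// statusFromHeaders reads the X-Grpc-* headers set by the PHP application.
// Assumption: no X-Grpc-Status header means success (code 0, OK),
// and an unparsable error code falls back to 2 (UNKNOWN).
func statusFromHeaders(h http.Header) grpcStatus {
	if h.Get("X-Grpc-Status") != "ERROR" {
		return grpcStatus{Code: 0}
	}
	code, err := strconv.Atoi(h.Get("X-Grpc-Error-Code"))
	if err != nil {
		code = 2
	}
	return grpcStatus{Code: code, Message: h.Get("X-Grpc-Error-Description")}
}

func main() {
	h := http.Header{}
	h.Set("X-Grpc-Status", "ERROR")
	h.Set("X-Grpc-Error-Code", "13")
	h.Set("X-Grpc-Error-Description", "Some error happened!")
	fmt.Printf("%+v\n", statusFromHeaders(h))
}
```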

Generating PHP code

- Official PHP support in the protobuf plugin was released not long ago. We recommend using it.

- For now, we ourselves are still using the unofficial plugin and are planning to migrate.

Benefits

- We brought PHP into the common gRPC-based messaging system;

- The proxy itself is lightweight and does not encode or decode requests;

- The proxy does not need the .proto files to do its job. You can "start it and forget it" (in our case, the proxy simply runs in an adjacent container via docker-compose; nginx is no longer needed to serve HTTP).

Disadvantages

- Despite being lightweight, the proxy is one more potential point of failure;

- You have to write a bit of code once to automate class generation and to adapt the router and controllers of your framework;

- There is no server-side support for streams. We think that, under certain restrictions, implementing them would not be difficult, but so far the need has not arisen.

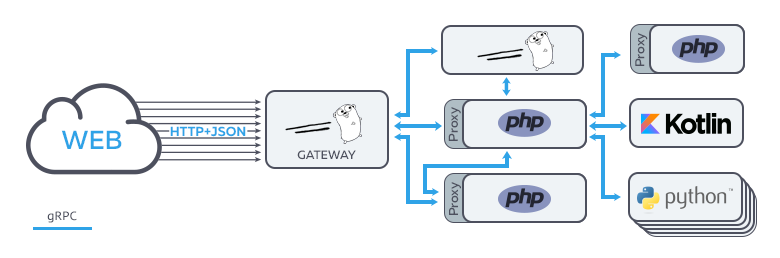

Gateway for the frontend

We had more or less solved internal messaging, but the frontend still remained. Ideally, we would like to use a single technology stack for both backend and frontend: it is easier and cheaper. So we started looking into this.

Almost immediately we found the grpc-gateway project, which generates proxies that convert gRPC/Protobuf to HTTP/JSON. It looks like a good solution for exposing the API to the frontend and to those clients who do not want to or cannot use gRPC (for example, if you need to quickly write a one-off bash script).

There is also an article about this project on Habr, so just briefly: it is a protoc plugin that takes .proto files with the service description and special meta-information about HTTP routes, and generates reverse-proxy code from them. The main file, which simply starts the generated proxy server, is then written by hand (the grpc-gateway authors describe all the steps in detail in README.md).

True, out of the box there were a few inconveniences for us:

- We wanted to add some middleware in front of the proxy (JWT authentication, logging, etc.);

- Each service keeps its API in a separate repository (so that a client can easily include it as a submodule). The process of updating .proto files and building the current version of the gateway needs to be automated as much as possible;

- On top of that, whenever a new service is brought into operation, the main file has to be changed.
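The first item in the list above can be illustrated with ordinary `net/http` middleware wrapped around the generated proxy handler. The sketch below is not our gateway's code: the handler is a stand-in for the one grpc-gateway generates, the `/v1/customers` path is made up, and the "authentication" only checks that a bearer token is present, whereas a real gateway would verify the JWT signature and claims.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"net/http/httptest"
	"strings"
)

type middleware func(http.Handler) http.Handler

// chain wraps h so that the first middleware in the list runs first.
func chain(h http.Handler, mws ...middleware) http.Handler {
	for i := len(mws) - 1; i >= 0; i-- {
		h = mws[i](h)
	}
	return h
}

// logging records the method and path of every request.
func logging(next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		log.Printf("%s %s", r.Method, r.URL.Path)
		next.ServeHTTP(w, r)
	})
}

// requireBearer rejects requests that carry no bearer token.
func requireBearer(next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if !strings.HasPrefix(r.Header.Get("Authorization"), "Bearer ") {
			http.Error(w, "unauthorized", http.StatusUnauthorized)
			return
		}
		next.ServeHTTP(w, r)
	})
}

// newGateway wraps a stand-in for the generated proxy with both middleware.
func newGateway() http.Handler {
	proxy := http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, "proxied")
	})
	return chain(proxy, logging, requireBearer)
}

// statusFor sends a GET request through h and returns the response status code.
func statusFor(h http.Handler, authorization string) int {
	req := httptest.NewRequest(http.MethodGet, "/v1/customers", nil)
	if authorization != "" {
		req.Header.Set("Authorization", authorization)
	}
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	return rec.Code
}

func main() {
	h := newGateway()
	fmt.Println(statusFor(h, ""), statusFor(h, "Bearer some-token"))
}
```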

In short, changes have to be made often and quickly, so we had to put some work into convenience.

grpc-gateway itself is a protoc plugin, written in Go, that generates a proxy. From this, the solution suggests itself: write a generator that generates a plugin that generates a proxy =) And, of course, automate building and deploying all of this in our GitLab CI.

The result is a generator of generators, which takes a simple config as input:

```yaml
- url: git.repository.com/api0/example-service        # repository with the .proto files
  ref: c4d0504f690ee66349306f66578cb15787eefe72      # commit to build from
  target: grpc-external.example.service.consul:50051  # address of the gRPC service
- ...
```

After the config is changed and a build is started, our CI runs the generator, which downloads all the required repositories listed in the config, generates the code of the main file, and wraps the proxy itself in various middleware. The output is a ready-made binary, which is then deployed to production.
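To give a feel for the "generates code for the main file" step, here is a small Go sketch that renders handler registration calls with `text/template`. The `Register<Service>HandlerFromEndpoint` naming follows what grpc-gateway generates, but everything else is an assumption for illustration: the `Alias` and `Name` fields are not part of our config, and our actual generator is not shown here.

```go
package main

import (
	"os"
	"strings"
	"text/template"
)

// service mirrors one config entry. Alias and Name are hypothetical additions:
// the sketch needs them to name the generated package and registration function.
type service struct {
	URL    string // repository with the .proto files
	Ref    string // commit to build from
	Target string // gRPC backend address
	Alias  string
	Name   string
}

const mainTmpl = `// Code generated from the gateway config. DO NOT EDIT.
func registerAll(ctx context.Context, mux *runtime.ServeMux, opts []grpc.DialOption) error {
{{- range .}}
	// {{.URL}} @ {{.Ref}}
	if err := {{.Alias}}.Register{{.Name}}HandlerFromEndpoint(ctx, mux, "{{.Target}}", opts); err != nil {
		return err
	}
{{- end}}
	return nil
}
`

// render produces the registration code for all configured services.
func render(services []service) (string, error) {
	t, err := template.New("main").Parse(mainTmpl)
	if err != nil {
		return "", err
	}
	var b strings.Builder
	if err := t.Execute(&b, services); err != nil {
		return "", err
	}
	return b.String(), nil
}

func main() {
	out, err := render([]service{{
		URL:    "git.repository.com/api0/example-service",
		Ref:    "c4d0504f690ee66349306f66578cb15787eefe72",
		Target: "grpc-external.example.service.consul:50051",
		Alias:  "example",
		Name:   "ExampleService",
	}})
	if err != nil {
		panic(err)
	}
	os.Stdout.WriteString(out)
}
```

Generating plain source text keeps the gateway itself free of reflection or runtime config parsing: adding a service changes only the config, and the compiler checks the result.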

Benefits

- We extended most of our principles for describing and working with APIs to the frontend;

- A backend developer deals with only one protocol;

- Deploying changes is quite simple: edit the config and push it. Everything is then built and updated in CI;

- All HTTP routes are written directly in the proto files, in the description of each method.

Disadvantages

- Yes, another proxy =) On the other hand, we need a place to perform authentication anyway;

- Highload enthusiasts may notice that JSON is converted to protobuf along the way, and that certainly costs some resources;

- You need to spend a couple of hours understanding how HTTP headers are proxied and how the various types are converted from protobuf to JSON ( https://developers.google.com/protocol-buffers/docs/proto3#json )

Results and conclusions

As a result of all these technical maneuvers, we were able to quickly prepare our infrastructure for the transition to a single messaging protocol. This let us simplify and speed up the exchange of information between developers, make our interfaces stricter about types, and move to a Design First approach with upfront code review at the level of inter-service interfaces.

The result looks something like this:

At the moment we have moved most of the internal messaging to gRPC and are working on a new public API. All of this happens in the background, as time permits. Still, the process has turned out to be less complicated than it seemed at first. What we gained is the ability to design our APIs quickly and consistently, share them between developers, conduct code review, and generate clients. For cases when HTTP is needed (for example, for internal web interfaces), we simply add a few annotations and a couple of lines to the grpc-gateway config and get a ready endpoint.

In the future we would also like to be able to use gRPC or a similar protocol directly on the frontend =)

That is not to say there were no snags and difficulties. Among the interesting problems specific to gRPC, we would highlight the following:

- Lack of proper documentation on client and server configuration parameters. For example, we ran into a limit on the length of a response message. It turned out that the `grpc.max_receive_message_length` parameter is responsible for it. We managed to find it only by digging through the client's source code;

- The package system and the include paths used during code generation baffled us for quite a while. We had to develop rules for integrating proto files and write scripts to generate code correctly through `protoc`. True, this only needs to be done once;

- The generators are not equally polished for every language. For example, for PHP we had to actively vote for fluent-interface support in the generated structures. There also used to be funny problems when words reserved in some languages were used in protobuf (for example, `public` or `private`). This is now almost completely fixed;

- In the current version of Protobuf, every type (except custom message types) has a default value. It works about the same as in Go: if you do not pass a value for some `uint32` field, the server will receive `0`, not `null`. This may be unusual, but on the other hand it turned out to be quite convenient.
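The last point is easy to see in plain Go, since it mirrors Go's zero values. The sketch below uses a hand-written struct rather than generated Protobuf code. The pointer field plays the role that a wrapper message such as `google.protobuf.UInt32Value` plays in a .proto file when "not sent" has to be told apart from `0`. The type and field names are made up.

```go
package main

import "fmt"

// Request mimics a decoded message: Limit is a plain scalar,
// Offset is a pointer so that "not sent" can be told apart from 0.
type Request struct {
	Limit  uint32
	Offset *uint32
}

// describe reports what the server can learn about each field.
func describe(r Request) string {
	offset := "unset"
	if r.Offset != nil {
		offset = fmt.Sprint(*r.Offset)
	}
	return fmt.Sprintf("limit=%d offset=%s", r.Limit, offset)
}

func main() {
	zero := uint32(0)
	fmt.Println(describe(Request{}))              // neither field was sent
	fmt.Println(describe(Request{Offset: &zero})) // Offset explicitly set to 0
}
```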

Besides the technical aspects described in this article, there are other things that deserve close attention when switching to a new API:

- Decide where and in what form you will store your documentation or, as in our case, the interface descriptions in .proto files;

- Work out how you will version them;

- Write guidelines on design principles. With gRPC, the freedom it gives you can backfire: your API will turn into a hodgepodge of different naming styles, structures, and other delights. To avoid this, we developed a small set of rules and ways of solving typical problems within the team.

We hope that our experience will be useful to teams working on long-lived projects and ready to deal with what is usually explained away as "it just turned out that way historically."

Useful links from the article

- Article about gRPC on Habr

- OpenAPI vs. gRPC comparison

- gRPC -> FastCGI proxy

- The grpc-gateway project and an article about it

- The guys are trying to use gRPC in the browser

- Interactive debug console


Source: https://habr.com/ru/post/348008/
