Smart nets for fishermen: how we taught smartphones to recognize fish

Only someone cut off from the world could have failed to notice the progress of computer vision over the past 10 years. Pattern recognition technology owes its rapid rise to deep learning. The achievements of machines are astonishing.

- In 2012, a deep neural network won an image classification competition by a wide margin over second place;

- Three years later, a machine classified pictures into 1000 classes with accuracy superior to a human's;

- Recognition of handwritten characters and road signs has surpassed human accuracy;

- The eyes of self-driving cars (lidars, radars and cameras) can recognize the surroundings, road markings and vehicles, as well as the gender and age of pedestrians;

- A lip-reading network trained by Google DeepMind and the University of Oxford is more accurate than a human;

- Age and gender recognition has surpassed human accuracy;

- One of the fastest-growing areas of machine vision is face recognition with an emphasis on biometrics, driven by growing demand for the technology in security. Several countries use face recognition in their outdoor video surveillance systems. Thanks to it, a criminal has even been caught.

This is only the tip of the iceberg of what computer vision algorithms have achieved. We have left out achievements in art, medicine and games, as well as impressive generative models such as the cat generator. Some sources claim that it was thanks to cats that the idea of convolutional neural networks was borrowed from nature, which created the visual cortex of the brain.

Computer vision as part of the future world

Technologies for automating tasks that require pattern recognition are in demand among governments and high-tech companies. They also help solve the problems of people who need help, such as farmers and environmentalists. Microsoft's Seeing AI application describes the world for blind people. A young developer built a vegetable sorter for his parents, who are farmers, to make their work easier.


We have been following this technology for a long time. The potential of machine learning inspires us to look for ways to apply promising technologies in our products and solve everyday tasks for ordinary people. In 2016, while working on a social network for fishermen, we decided to do our best to make our favorite hobby not only more convenient, but also more interesting.

Goal and problem statement

Modern fishing communities still live on forums built in the early 2000s. The most active threads are stories about the best fishing spots, discussions of gear, and photos of the catch. People photograph their fish with a smartphone and post the picture on the forum. Brothers in arms (in this case, in fishing rods) discuss the catch and compete over who caught the bigger pike. We did not want to break such a warm, competitive atmosphere. On the contrary, we wanted to make fishing more active, more convenient and a bit more modern, at least in this respect.

About 500 million people around the world are keen on fishing today. Members of one of the largest communities have long been taking smartphones with them on fishing trips, yet they still gather on outdated forums or in Facebook groups. Surprisingly, there are almost no modern resources and applications for fishermen. We wanted to correct this unfortunate omission with technology.

The application we have created helps fishermen not only determine the species and length of a fish, but also put the trophy on the honor roll. Every fisherman wants to see his catch in his collection. After scanning the catch, the application adds it to the profile, so that the user can show it off to friends. Most fishermen who consider this more than a hobby probably have a competitive streak. Especially for them, we also decided to add rankings. Users with a big catch will be at the top of the ranking, which motivates the rest. Stories like "I caught a fish this big" will no longer be taken on faith: the app will know what the fisherman caught and how long it was.

A person will no longer be distracted by uploading photos to a forum or by determining the species and size of the fish: machine learning will do all of that. All that remains is to enjoy your favorite pastime, catching fish.

Teaching the network to catch fish

To teach the network to recognize the length and species of fish, in addition to powerful graphics cards, we needed to:

- collect data;

- process it into a clean set of well-labeled fish pictures;

- select a suitable architecture;

- train and validate;

- fix errors and try again.

Data collection

There are practically no open datasets labeled with fish species or with bounding boxes marking the position of the fish. Some data can be found in ImageNet and from individual laboratories, but the volume, image quality and labeling leave much to be desired.

A glance at the first hundred catch photos is enough to see that fishermen all over the world like taking selfies with fish rather than high-quality photos.

Fishing photos and videos contain the data we need, but they have several significant drawbacks:

- Low quality in about 40% of the dataset:

  - The fish occupies a small part of the photo, is badly blurred, or is photographed from afar;

  - The fisherman's hands cover a significant part of the fish;

  - The fish is only partially visible: it does not fit in the frame, is cut off, or is blocked by something.

- Frames that cannot be classified:

  - There are several species in the photo, while we want to assign one class to each image;

  - Fishermen very often confuse similar species, "polluting" the data.

Deep networks are demanding in terms of data volume and less demanding in terms of quality. Even so, raw fishing pictures are not suitable.

Our methodology let us solve two problems at once: collecting the data and cleaning it. It consists of iteratively improving the quality of both the models and the data:

We manually mark the bounding boxes of fish for several different species, to avoid overfitting to a single species. For example, flounder, pike and carp. A few days of work yields a couple of thousand pictures.

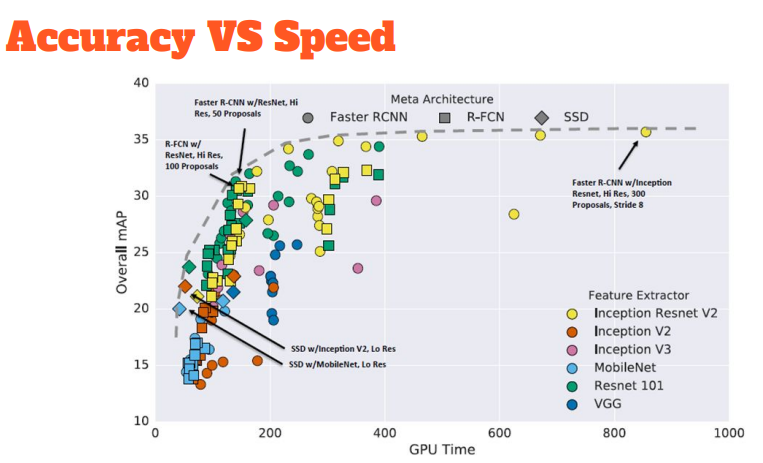

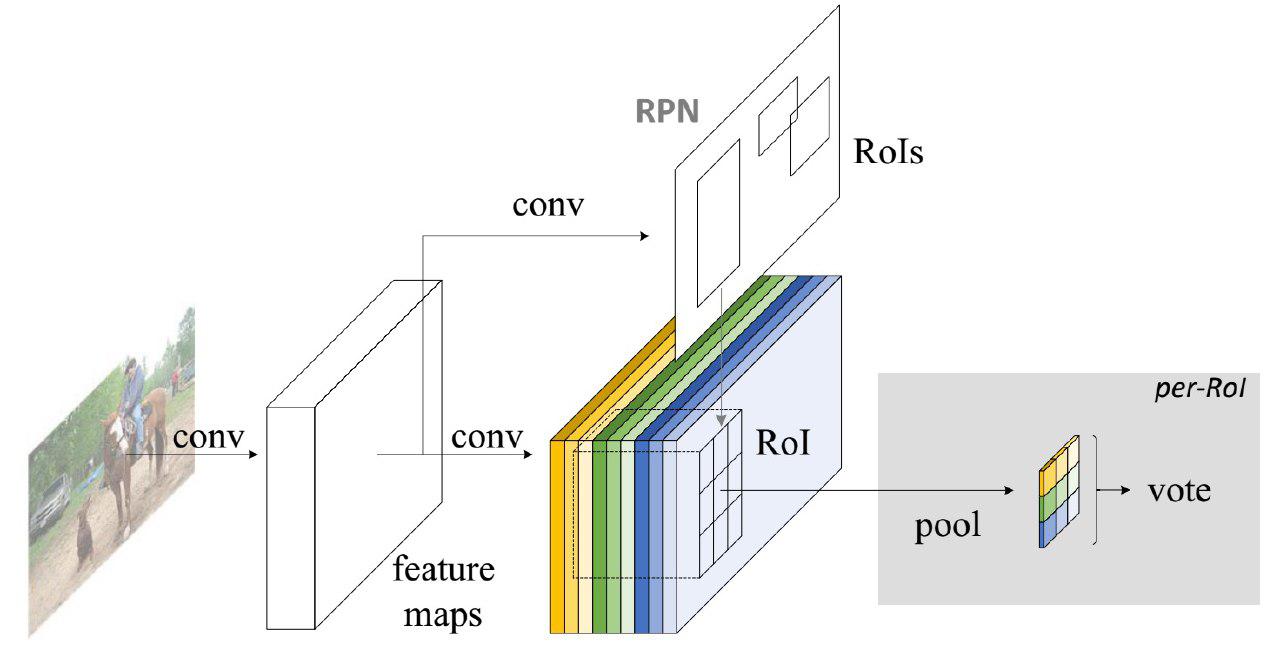

We choose an architecture for detection: fast and accurate, so that it can handle large amounts of data. R-FCN seemed suitable.

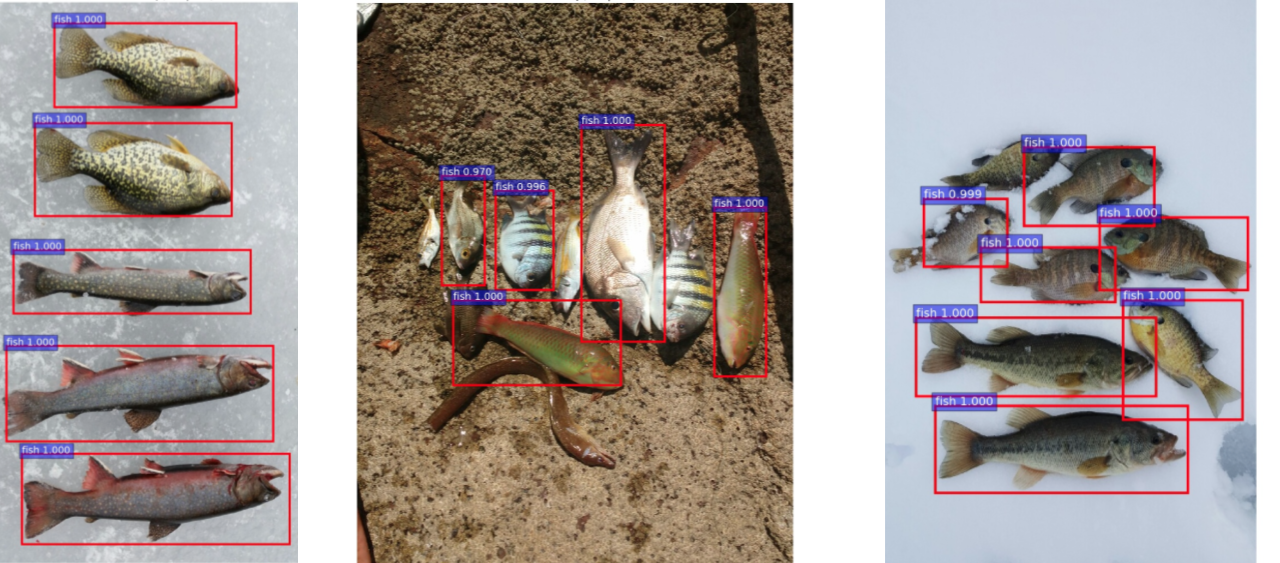

We train the network to distinguish fish from non-fish (background) and to detect the former. We build a web interface for running inference on the media of our fishermen. A fisherman can paste a link to his video into the web interface, and the video is run through the detection model frame by frame. All frames containing fish are displayed on the screen so that duplicates and errors can be removed.

This admin panel lets us collect data for the target species. Once processed by the network and verified by a person, the data is used for:

- Further training of our detection model. By selecting examples where it works well on new species, we extend the model to more species and improve its accuracy and quality;

- Cropping the fish out of the photo. By setting a high confidence threshold on the detector output as a filter for good frames, we get large, high-quality crops with a single fish. Exactly what is needed to train the classification model! About 60% of the images processed this way are of good quality.
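The crop filter described in the last item can be sketched roughly as follows. This is only an illustration under stated assumptions: the `Detection` type, the function names and the threshold values (`min_score`, `min_area_fraction`) are hypothetical and not taken from the article, and the image is modeled as a plain list of pixel rows.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class Detection:
    box: Box
    score: float  # detector confidence in [0, 1]


def pick_crop_box(
    detections: List[Detection],
    frame_size: Tuple[int, int],     # (width, height) in pixels
    min_score: float = 0.9,          # assumed value, not from the article
    min_area_fraction: float = 0.1,  # assumed value, not from the article
) -> Optional[Box]:
    """Return the box to crop, or None if the frame should be skipped."""
    width, height = frame_size
    confident = [d for d in detections if d.score >= min_score]
    # Keep only frames with exactly one confidently detected fish.
    if len(confident) != 1:
        return None
    # Clip the box to the frame so the crop never leaves the image.
    x0, y0, x1, y1 = confident[0].box
    x0, y0 = max(0, x0), max(0, y0)
    x1, y1 = min(width, x1), min(height, y1)
    if x1 <= x0 or y1 <= y0:
        return None
    # Reject fish that are too small in the frame to make a good sample.
    if (x1 - x0) * (y1 - y0) < min_area_fraction * width * height:
        return None
    return (x0, y0, x1, y1)


def crop(image: List[list], box: Box) -> List[list]:
    """Crop an image stored as a list of pixel rows."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]
```

Raising `min_score` trades data volume for cleanliness: fewer frames survive, but the ones that do are more likely to show a single, clearly visible fish.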

Training the classifier

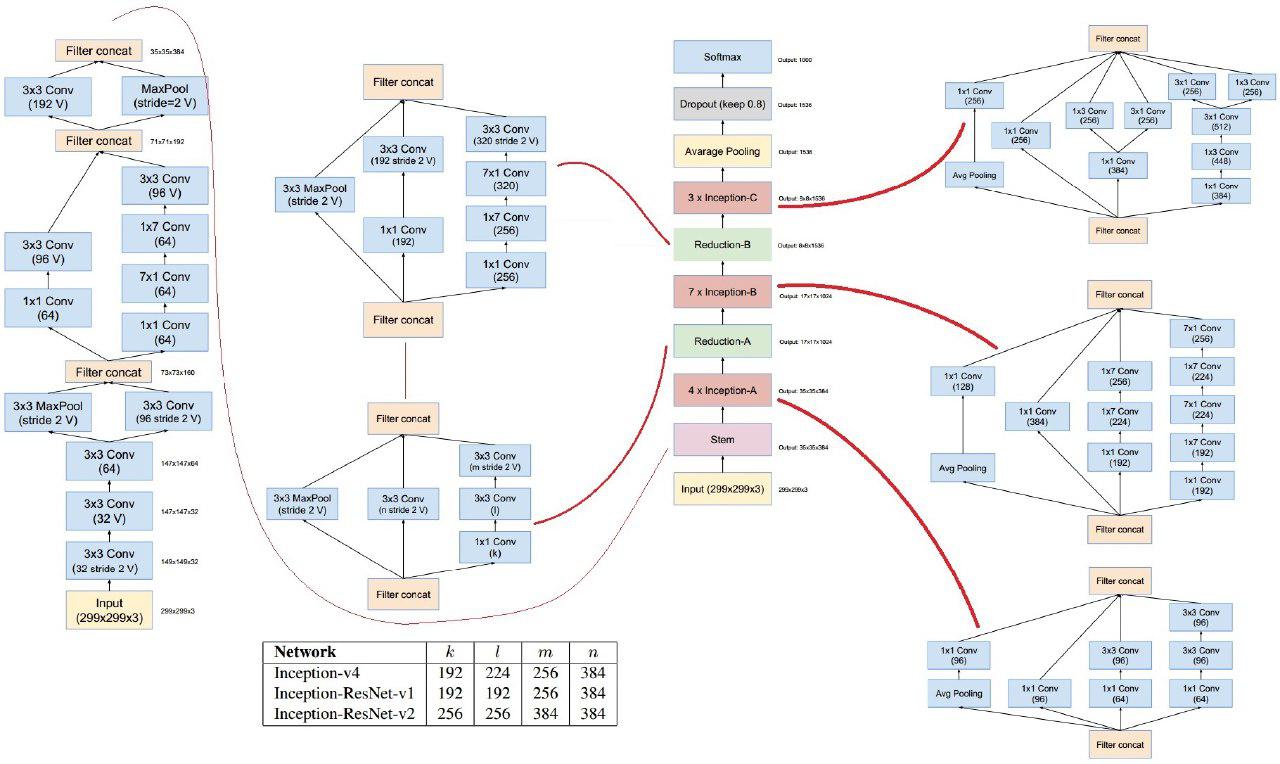

We intend to expand the number of recognizable species, so we chose the classifier architecture from among the networks that predict 1000 classes on ImageNet. The choice fell on Inception-ResNet-v2 for its trade-off between size and accuracy.

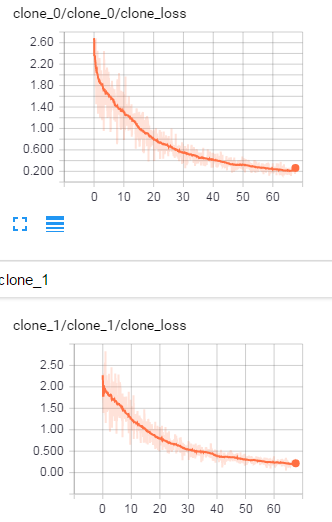

TensorFlow, EVGA GeForce GTX 1080 Ti and EVGA GeForce GTX 1080 were used for training.

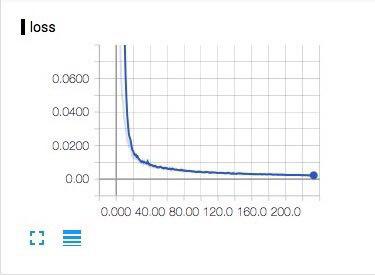

Training the full model gave higher accuracy than training only the fully connected layers on top of the ImageNet model. Most likely this is because the network learned low-level patterns, such as the texture of scales. Training took more than 80 hours on two graphics cards.

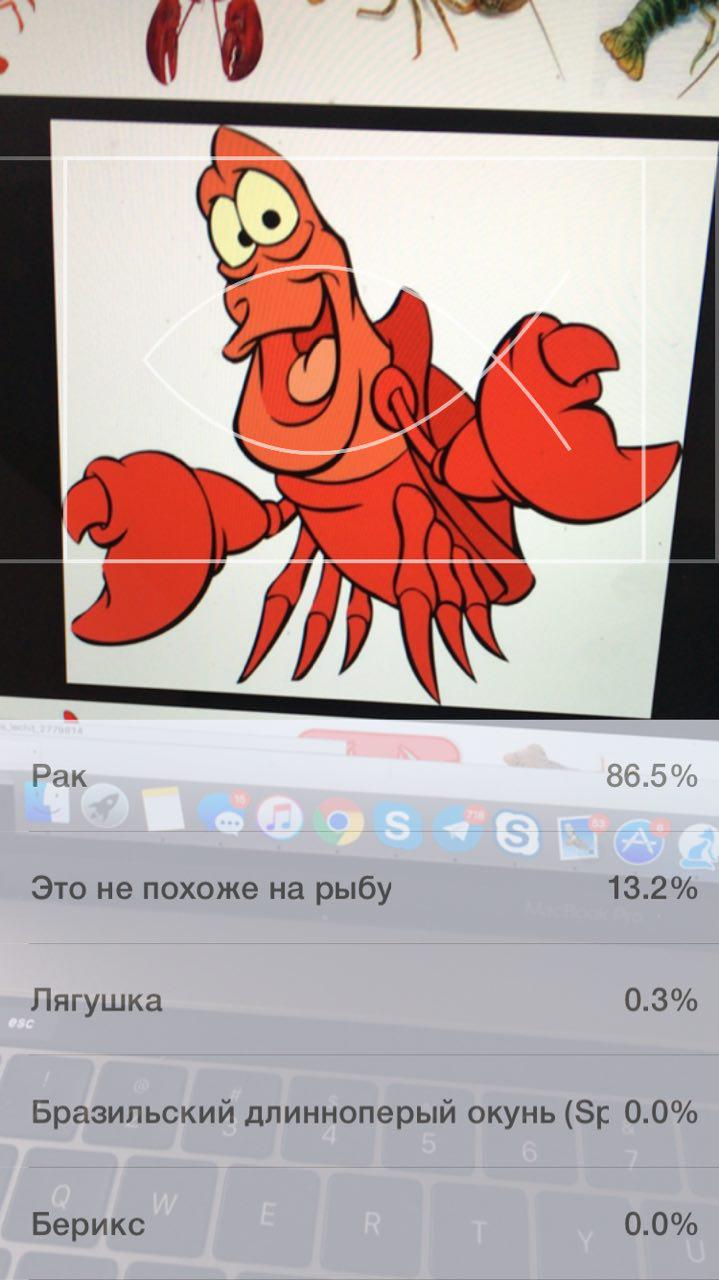

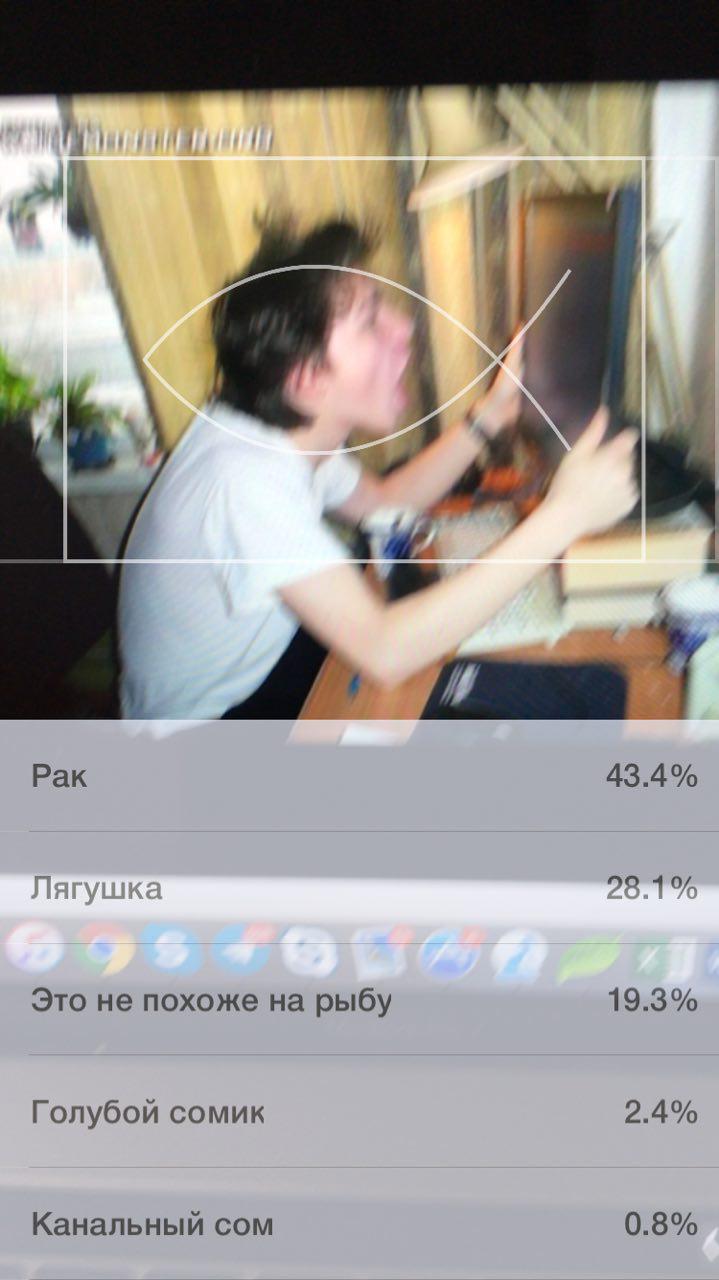

The first results were astounding!

While everyone admired the fact that we had trained the network to the level of cartoon abstraction, some suspected that the network had overfitted.

But the doubts were unfounded: the camera had captured a typical carry on mid, so everything is fine.

The resulting solution made large errors on similar species. The images show the legendary ide and chub, which only avid fishermen can tell apart.

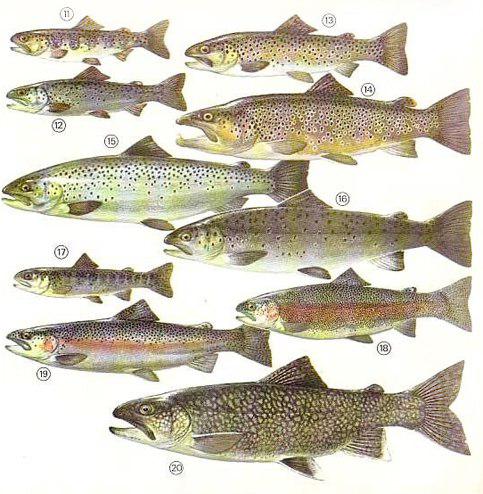

The situation is even worse with trout.

More than a dozen popular species of trout and salmon not only confuse non-experts, but are also incredibly variable throughout their lives. From the larval stage to spawning, they change color and shape dramatically depending on age, season, and even the composition of the water. Not everyone would recognize the same species of trout in photos taken several years apart. Species such as coho and chinook are hard to distinguish even from a description. Human error leaves the data mislabeled, the model needs at least 1000 photos per class to reach accuracy above 80%, and with several dozen species, having experts review such volumes by hand is resource-intensive.

Our solution was to iteratively clean the dataset of labeling errors using the model itself. We run the model on our own dataset and find all the pictures where the model's prediction disagrees with the label. Most of these turn out to be labeling errors. We remove them, retrain the model and get high metrics on the validation dataset. As a result, we reached model accuracy above 90%, even for similar species. The network can distinguish almost without error between 8 similar species of trout and salmon, and more than a dozen species of perch. However, in rare cases the network is still wrong. The reason is best shown visually:
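Returning to the cleaning loop described in this section, it can be sketched roughly as follows. This is a minimal illustration, not the authors' code: `train` stands in for whatever training routine is used, and all names and the `max_rounds` limit are hypothetical.

```python
from typing import Callable, List, Tuple

Sample = Tuple[str, str]          # (image_id, species label supplied by a user)
Predictor = Callable[[str], str]  # image_id -> species predicted by the model


def split_by_agreement(
    samples: List[Sample], predict: Predictor
) -> Tuple[List[Sample], List[Sample]]:
    """Split samples into those the model agrees with and those it disputes."""
    kept, flagged = [], []
    for image_id, label in samples:
        if predict(image_id) == label:
            kept.append((image_id, label))
        else:
            flagged.append((image_id, label))
    return kept, flagged


def iterative_clean(
    samples: List[Sample],
    train: Callable[[List[Sample]], Predictor],  # hypothetical training routine
    max_rounds: int = 3,                         # assumed limit, not from the article
) -> List[Sample]:
    """Train, drop samples the model disagrees with, and repeat."""
    for _ in range(max_rounds):
        predict = train(samples)
        kept, flagged = split_by_agreement(samples, predict)
        if not flagged:
            break  # the model agrees with every remaining label
        samples = kept
    return samples
```

A large network can memorize wrong labels it was trained on, so a common safeguard is to predict each image with a model that did not see it during training (for example, out-of-fold predictions). The article does not say whether this was done.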

Measuring the catch

Overestimating your own catch is a very important feature of protein-based neural networks. For an accurate measurement, you need to know the boundaries of the fish in the photo and have a rangefinder, such as the one in new smartphones with dual cameras.
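Why a rangefinder is needed can be seen from the pinhole camera model: the same fish covers fewer pixels the farther away it is, so pixel length alone says nothing about real size. The sketch below assumes the fish lies roughly in a plane perpendicular to the optical axis and that the focal length in pixels is known from the camera calibration. The function name and the example numbers are illustrative only.

```python
def pixels_to_metres(length_px: float, distance_m: float, focal_length_px: float) -> float:
    """Convert a length measured in the image to a real-world length.

    Pinhole model: real_length / distance = length_px / focal_length_px.
    """
    if distance_m <= 0 or focal_length_px <= 0:
        raise ValueError("distance and focal length must be positive")
    return length_px * distance_m / focal_length_px


# Hypothetical numbers: a fish spanning 1500 px, photographed from 0.8 m
# with a camera whose focal length is 3000 px.
fish_length_m = pixels_to_metres(1500, 0.8, 3000)
```

Without the distance, the same 1500 px could be a small fish held close to the lens or a large one held far away.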

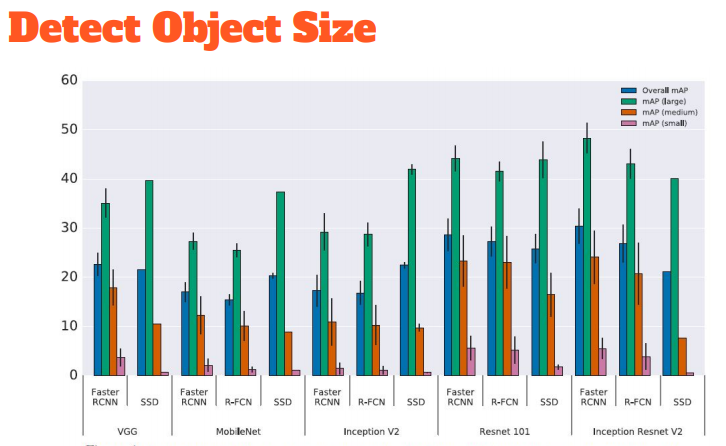

Our goal is to give fishermen an accurate, automatic measurement of the catch simply by pointing the phone at it. The application must be able to tell when a fish is in the frame, classify it and localize it. The length of the fish must be measured according to several different standards. The Faster R-CNN architecture with Inception-ResNet-v2 performs better than its counterparts, so we move classification to it from R-FCN.

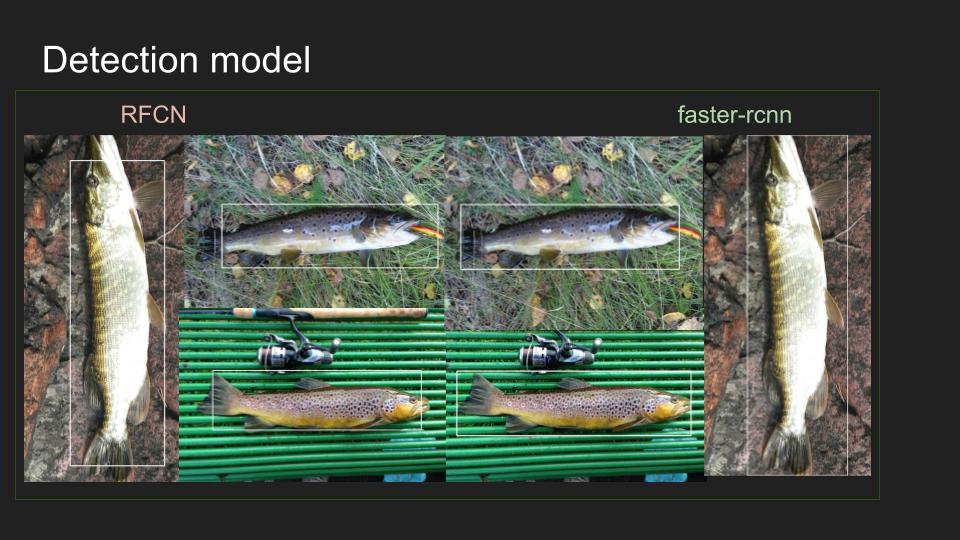

Results on similar data speak for themselves.

However, this measurement method does not suit us: bounding boxes give the correct length and width only for a fish lying horizontally or vertically. They do not work for an arbitrary orientation and cannot estimate the length of a bent fish.
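The limitation is easy to reproduce numerically. In the sketch below a fish is idealized as a straight segment of known length (an assumption made purely for illustration), and its length is then estimated as the longest side of its axis-aligned bounding box.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def straight_fish(length: float, angle_deg: float) -> List[Point]:
    """Endpoints of an idealized straight fish rotated by angle_deg."""
    angle = math.radians(angle_deg)
    return [(0.0, 0.0), (length * math.cos(angle), length * math.sin(angle))]


def longest_box_side(points: List[Point]) -> float:
    """Longest side of the axis-aligned bounding box around the points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return max(max(xs) - min(xs), max(ys) - min(ys))


for angle in (0, 30, 45, 90):
    estimate = longest_box_side(straight_fish(50.0, angle))
    print(f"angle {angle:>2} deg: box estimate {estimate:.1f} of true length 50.0")
```

At 0 and 90 degrees the box side equals the true length, but at 45 degrees it drops to 50/√2, about 35.4, an underestimate of nearly 30%.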

Measuring the catch more precisely

We label several thousand photos with key points so as to cover all the measurement methods defined by the various standards.
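One way key points can yield a length is to sum the distances between consecutive points along the body, which works regardless of rotation and follows a bend in the fish. The sketch below assumes the points are ordered from snout to tail. The article does not say which points were labeled or how they map to each measurement standard, so the function and the example coordinates are purely illustrative.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def polyline_length(points: List[Point]) -> float:
    """Sum of distances between consecutive key points (snout to tail)."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


# A bent fish: snout, mid-body and tail do not lie on one line.
bent_fish = [(0.0, 0.0), (3.0, 4.0), (6.0, 0.0)]
along_body = polyline_length(bent_fish)               # follows the bend
snout_to_tail = math.dist(bent_fish[0], bent_fish[-1])  # ignores the bend
```

Here the snout-to-tail distance is 6 while the length along the body is 10. A different standard would simply use a different subset or end point of the labeled key points.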

We train a key point regression model, which improves the accuracy of the length measurement and is small enough to fit on the phone. If we move detection to the device, we can send an already cropped region with the fish for classification, which increases reliability and takes load off the server. The phone sends a request only when it detects a fish. Unfortunately, the point regression model cannot distinguish fish from non-fish, so we also need a binary classifier for that on the device.

For key point regression, we take the “head” of the ResNet50 architecture pretrained on ImageNet and add 2 fully connected layers that regress 14 variables: the coordinates of all the points. The loss function is MAE. The model weighs about a megabyte.

Augmentation: horizontal and vertical flips, brightness, random crop (this worked well), scale. All point coordinates were normalized to [-1, 1].
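The coordinate handling described above can be sketched as follows. The exact normalization convention is an assumption (here pixel 0 maps to -1 and the full image width or height maps to 1), and all function names are hypothetical. One convenient property of the [-1, 1] range is that a flip of the image becomes a sign change of one coordinate.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def normalize_points(points: List[Point], width: float, height: float) -> List[Point]:
    """Map pixel coordinates to [-1, 1] on both axes."""
    return [(2.0 * x / width - 1.0, 2.0 * y / height - 1.0) for x, y in points]


def denormalize_points(points: List[Point], width: float, height: float) -> List[Point]:
    """Map normalized coordinates back to pixels."""
    return [((x + 1.0) * width / 2.0, (y + 1.0) * height / 2.0) for x, y in points]


def flip_horizontal(points: List[Point]) -> List[Point]:
    """Targets for a horizontally flipped image: negate x."""
    return [(-x, y) for x, y in points]


def flip_vertical(points: List[Point]) -> List[Point]:
    """Targets for a vertically flipped image: negate y."""
    return [(x, -y) for x, y in points]


def mean_absolute_error(predicted: List[Point], target: List[Point]) -> float:
    """MAE over all coordinates (14 values for 7 key points)."""
    diffs = [
        abs(p - t)
        for p_point, t_point in zip(predicted, target)
        for p, t in zip(p_point, t_point)
    ]
    return sum(diffs) / len(diffs)
```

Whenever an augmentation moves pixels (flip, crop, scale), the same transform has to be applied to the key point targets, otherwise the labels no longer match the image.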

For the binary classifier, we build our own model, similar to AlexNet. We train it on fish and non-fish images.

Everything here is standard: binary cross-entropy loss, augmentations, and accuracy as the metric (the classes are balanced).
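For reference, the loss and metric named above can be written out in a few lines. This is a plain-Python sketch of the standard definitions, not the training code; the `eps` clipping and the 0.5 decision threshold are conventional choices assumed here.

```python
import math
from typing import List


def binary_cross_entropy(probs: List[float], labels: List[int], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy; probs are predicted P(fish), labels are 0 or 1."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)


def accuracy(probs: List[float], labels: List[int], threshold: float = 0.5) -> float:
    """Fraction of samples whose thresholded prediction matches the label."""
    correct = sum((p >= threshold) == bool(y) for p, y in zip(probs, labels))
    return correct / len(labels)
```

Accuracy is a reasonable metric here only because the classes are balanced: with, say, 95% non-fish images, a model that always answers "non-fish" would score 95% while being useless.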

In the future, we plan to increase the number of recognizable species, exceed human accuracy, and move the model entirely onto the device. Our goal is not just to create a universal tool for fishermen, but to unite the whole community in a single project.

Source: https://habr.com/ru/post/340854/
