The memory model: in examples and beyond

This continues a series of topics called "the fundamental things about Java that are worth knowing, but which many do not know." Previous topic: Binary compatibility in examples and beyond.

The Java memory model (JMM) affects how every Java developer's code works. Nevertheless, quite a few people neglect this important topic and sometimes run into completely unexpected behavior in their applications, which is explained precisely by how the JMM is designed. Take, for example, a very common and incorrect implementation of the double-checked locking pattern:

    public class Keeper {
        private Data data = null;

        public Data getData() {
            if (data == null) {              // unsynchronized read
                synchronized (this) {
                    if (data == null) {
                        data = new Data();
                    }
                }
            }
            return data;
        }
    }

People who write such code try to improve performance by avoiding locking when the value has already been assigned. Unfortunately, they overlook many factors, and when those factors come into play, a zombie apocalypse can happen. Below I will go through the theory and give examples of how things can go wrong. Besides, as they said in one Indian film, “It’s not enough to know what is wrong. You need to know how to make it right.” So recipes for success can be found further on as well.


A little bit of history

The first version of the JMM appeared along with Java 1.0 in 1995. It was the first attempt to create a consistent, cross-platform memory model. Unfortunately, or fortunately, it had several serious flaws and ambiguities. One of the saddest problems was the absence of any guarantees for final fields. That is, one thread could create an object with a final field, and another thread might not see the value of that final field. Even the java.lang.String class was subject to this. In addition, this model prevented the compiler from performing many effective optimizations, and when writing multithreaded code it was difficult to be sure that it would actually work as expected.

Therefore, in 2004, Java 5 brought JSR 133, which eliminated the shortcomings of the original model. What came out of it is what we will talk about.

Atomicity

Although many people know this, I consider it necessary to recall that on some platforms some write operations may turn out to be non-atomic. That is, while a value is being written by one thread, another thread may see some intermediate state. You need not look far for an example: writes of long and double, unless they are declared volatile, are not required to be atomic and on many platforms are performed as two operations, the high and low 32 bits separately (see the standard).

Visibility

In the old JMM, each running thread had its own cache (working memory), which stored some of the state of the objects that the thread manipulated. Under certain conditions the cache was synchronized with main memory, but for a significant portion of the time the values in main memory and in the cache could diverge.

In the new memory model this concept was abandoned, because where exactly a value is stored is of no interest to anyone. The only thing that matters is under which conditions one thread sees the changes made by another thread. Besides, the hardware is already smart enough to cache things, keep them in registers, and perform other tricks.

It is important to note that, unlike in C++, values never appear out of thin air: for any variable, the value observed by a thread is either one that was assigned to it at some point or the default value.

Reordering

But this, as they say, is not all.

    public class ReorderingSample {
        boolean first = false;
        boolean second = false;

        void setValues() {
            first = true;
            second = true;
        }

        void checkValues() {
            while (!second);   // spins until the write to second is observed (if ever)
            assert first;      // may fail: the writes can be observed out of order
        }
    }

In this code, the setValues method is called from one thread and the checkValues method from another. It would seem that the code should run without problems: the second field is set to true later than the first field, so when (more precisely, if) we see that second is true, first should be true as well. No such luck.

Although you need not worry about this within a single thread, in a multithreaded environment the results of operations performed by other threads may be observed in the wrong order. So as not to make unfounded claims, I wanted to get the assertion to fire on my machine, but I failed for so long (no, I did not forget to pass the -ea switch at startup) that, in desperation, I asked the well-known performance engineers how to provoke a reordering after all. Here is what Sergey Kuksenko answered:

“On machines with TSO (which includes x86) it is quite difficult to demonstrate a breaking reordering. It can be shown on something like ARM or PowerPC. You can also recall the Alpha, the processor with the weakest ordering rules. Alpha was a nightmare for developers of compilers and operating system kernels. It is a blessing that it did die. On the net you can find plenty of stories about it.

“Classic example:

“(the example is similar to the one above - author’s comment)

“... on x86 it will always work correctly, because if you saw the store to “b”, then you will also see the store to “a”.”

And when I said that I would like to find a working demo for every aspect covered in the article, Sergey “cheered me up” by saying that this takes long and diligent reading of the manuals for the corresponding hardware. For a while I considered trying to reproduce the effect on a phone, but in the end I decided it was not that important. Besides, I do not specialize in the particulars of any specific platform.

So, let's return to our original example and see how reordering can spoil it. Let our Data class perform some not-so-trivial calculations in its constructor and, most importantly, write some values into non-final fields:

    public class Data {
        String question;
        int answer;
        int maxAllowedValue;

        public Data() {
            this.answer = 42;
            this.question = reverseEngineer(this.answer);
            this.maxAllowedValue = 9000;
        }

        // Stub standing in for the non-trivial calculation.
        private String reverseEngineer(int answer) {
            return null;
        }
    }

It turns out that the thread that first finds that data == null will perform the following actions:

- Allocate memory for a new object
- Call the Data class constructor
- Write the value 42 to the answer field of the Data class
- Write some string to the question field of the Data class
- Write the value 9000 to the maxAllowedValue field of the Data class
- Write the newly created object to the data field of the Keeper class
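To make the danger in these steps tangible, here is a minimal single-threaded sketch that replays one hypothetical order in which the writes could become visible to another thread: the reference lands in the data field before the field writes do. Nothing in it is concurrent, and the class name ReorderedPublicationSketch, the observe helper, and the placeholder question string are assumptions made for illustration only. It does not prove that any particular JVM or CPU produces this order; it only shows what a second thread would read if it did.

```java
import java.util.ArrayList;
import java.util.List;

// Single-threaded replay of one hypothetical visibility order.
// It only makes the intermediate states explicit.
class ReorderedPublicationSketch {

    static class Data {
        String question;
        int answer;
        int maxAllowedValue;
    }

    // Plays the role of Keeper.data.
    static Data data;

    // What a second thread calling getData() would read at this moment.
    static String observe() {
        if (data == null) {
            return "data == null";
        }
        return "answer=" + data.answer
                + ", question=" + data.question
                + ", maxAllowedValue=" + data.maxAllowedValue;
    }

    static List<String> replay() {
        List<String> observations = new ArrayList<>();
        data = null;
        observations.add(observe());

        Data fresh = new Data();          // memory allocated, fields hold defaults
        data = fresh;                     // reference published early (the hypothetical reordering)
        observations.add(observe());      // non-null object, default field values

        fresh.answer = 42;
        fresh.question = "some question"; // placeholder value
        observations.add(observe());

        fresh.maxAllowedValue = 9000;
        observations.add(observe());      // only now is the object complete
        return observations;
    }

    public static void main(String[] args) {
        replay().forEach(System.out::println);
    }
}
```

The second observation is the troublesome one: the outer check in Keeper.getData() would see a non-null reference, skip the lock, and hand out an object whose fields still hold default values.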

Happens-before

Definition

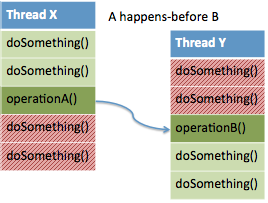

All these rules are set using the so-called happens-before relationship. It is defined as:Let there be a stream X and a stream Y (not necessarily different from the stream X ). And let there be operations A (running on thread X ) and B (running on thread Y ).In words, such a definition may not be perceived very well, therefore I will explain a little. Let's start with the simplest case, when there is only one flow, that is, X and Y are the same. Inside one thread, as we have said, there are no problems, because the operations with respect to each other, happens to be in accordance with the order in which they are specified in the source code (program order). For a multithreaded case, everything is somewhat more complicated, and here without ... the pictures cannot be understood. And here she is:

In this case, A happens-before B means that all changes made by flow X before operation A and changes that this operation has caused are visible to flow Y at the time of operation B and after the operation.

Here, on the left, the green marks are those operations that are guaranteed to be seen by the Y stream, and the red ones are those that may not be seen. On the right, red marks those operations that, while performing, may still not see the results of performing green operations, to the left, and green - those that, when performed, will see everything. It is important to note that the happens-before relation is transitive , that is, if A happens-before B and B happens-before C, then A happens-before C.
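Transitivity is easier to see in code. The following is a minimal sketch, assuming the volatile rule from the list of rules later in the article; the class name HappensBeforeSketch and its members are hypothetical. The plain write to payload comes before the volatile write to ready in program order, the volatile write happens-before the volatile read that observes it, and that read comes before the plain read of payload in program order. Chained together, the reader must see 42.

```java
// Sketch: a plain write made visible by "piggybacking" on a volatile flag.
class HappensBeforeSketch {
    static int payload;             // plain field, deliberately not volatile
    static volatile boolean ready;  // the volatile write/read pair links the two threads

    static int transfer() {
        payload = 0;
        ready = false;
        int[] seen = new int[1];

        Thread reader = new Thread(() -> {
            while (!ready) {
                // busy-wait; every iteration performs a volatile read
            }
            seen[0] = payload;      // ordered after the volatile read of ready
        });
        reader.start();

        payload = 42;               // operation A: plain write
        ready = true;               // volatile write, ordered after A

        try {
            reader.join();          // wait for the reader before inspecting its result
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(transfer());
    }
}
```

If ready were a plain boolean, neither the termination of the loop nor the value read from payload would be guaranteed.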

Operations linked by the happens-before relation

Now let's see what exactly the restrictions on reordering in the JMM are. A deep and detailed description can be found, for example, in The JSR-133 Cookbook, but I will present everything at a somewhat more superficial level and will perhaps skip some of the restrictions. Let's start with the simplest and best known: locks.

1. Releasing a monitor happens-before every subsequent acquisition of the same monitor. Please note: it is the release, not the exit from the synchronized block, so you need not worry about safety when using wait.

Let's see how this knowledge helps us correct our example. In this case everything is very simple: just remove the outer check and leave the synchronization as it is. Now the second thread is guaranteed to see all the changes, because it will acquire the monitor only after the other thread releases it. And since that thread will not release it until everything is initialized, we will see all the changes at once rather than piecemeal:

    public class Keeper {
        private Data data = null;

        public Data getData() {
            synchronized (this) {        // every call takes the lock
                if (data == null) {
                    data = new Data();
                }
            }
            return data;
        }
    }

2. A write to a volatile variable happens-before every subsequent read of the same variable.

The change we made does fix the correctness problem, but it sends the author of the original code back to where they started: locking on every call. The volatile keyword can come to the rescue. In effect, statement (2) means that when reading anything declared volatile we always get the current value. In addition, as I said earlier, for volatile fields a write is always an atomic operation (including long and double). Another important point: if you have a volatile entity that holds references to other entities (for example, an array, a List, or some other class), then only the reference to the entity itself is always “fresh”, not everything contained in it.
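The last point about references is easy to get wrong with arrays, so here is a small hedged sketch. The class VolatileReferenceSketch and its members are hypothetical names; java.util.concurrent.atomic.AtomicIntegerArray is a standard library class whose get and set give each element volatile read and write semantics. It is one possible way to get per-element freshness, not the only one.

```java
import java.util.concurrent.atomic.AtomicIntegerArray;

class VolatileReferenceSketch {
    // Only the reference is volatile; the elements are ordinary ints.
    static volatile int[] plainCells = new int[1];

    // Each element is read and written with volatile semantics.
    static final AtomicIntegerArray atomicCells = new AtomicIntegerArray(1);

    static void plainWrite(int value) {
        // Volatile read of the reference, followed by a plain write to the element:
        // nothing here guarantees that another thread will see the new element value.
        plainCells[0] = value;
    }

    // Hands a non-zero value to a reader thread through an atomic array element.
    static int handOff(int value) {
        if (value == 0) {
            throw new IllegalArgumentException("value must be non-zero");
        }
        atomicCells.set(0, 0);
        int[] seen = new int[1];

        Thread reader = new Thread(() -> {
            int v;
            while ((v = atomicCells.get(0)) == 0) {
                // busy-wait on a volatile read of the element
            }
            seen[0] = v;
        });
        reader.start();

        atomicCells.set(0, value);   // volatile write of the element

        try {
            reader.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(handOff(7));
    }
}
```

Had the reader spun on plainCells[0] instead, the loop would not be guaranteed to finish, even though the array reference itself is volatile.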

So, back to our double-checked locking. Using volatile, the situation can be fixed like this:

    public class Keeper {
        private volatile Data data = null;

        public Data getData() {
            if (data == null) {              // volatile read
                synchronized (this) {
                    if (data == null) {
                        data = new Data();   // volatile write
                    }
                }
            }
            return data;
        }
    }

Here we still have a lock, but only if data == null. We filter out the remaining cases with a volatile read. Correctness is ensured by the fact that the volatile store happens-before the volatile read, so all operations that occur in the constructor are visible to whoever reads the field value.

In addition, this relies on an interesting assumption that is worth checking: a volatile store plus read is faster than locking. However, as those same performance engineers tirelessly repeat, microbenchmarks have little to do with reality, especially if you do not know how the thing you are trying to measure works. Moreover, if you think you know how it works, you are most likely mistaken and are overlooking some important factors. I am not confident enough in the depth of my knowledge to write my own benchmarks, so there will be no such measurements here. However, there is some information on volatile performance in this presentation, starting on slide #54 (although I strongly recommend reading all of it). UPD: there is an interesting comment saying that volatile is, by design, significantly faster than synchronization.

3. Writing a value to a final field (and, if this field is a reference, to all variables reachable from it (the dereference chain)) during object construction happens-before writing this object to any variable, which occurs outside this constructor.

This also looks rather confusing, but the essence is simple: if there is an object with a final field, then this object can be used only after that final field (and everything it can refer to) has been set. Do not forget, however, that if you pass a reference to the object under construction (that is, this) out of the constructor, someone may see your object in an unfinished state.

In our example, it turns out that it is enough to make final the field that is written last, and everything will magically work without volatile and without synchronizing every time:

    public class Data {
        String question;
        int answer;
        final int maxAllowedValue;

        public Data() {
            this.answer = 42;
            this.question = reverseEngineer(this.answer);
            this.maxAllowedValue = 9000;   // the final field is written last
        }

        // Stub standing in for the non-trivial calculation.
        private String reverseEngineer(int answer) {
            return null;
        }
    }

The catch is precisely that it works by magic, and someone who does not know about your clever trick may not understand you. You may well forget about it pretty quickly yourself, too. There is, of course, the option of adding a proud comment like “neat trick here!” describing what is going on, but for some reason that does not strike me as very good practice.

UPD: This is not true. The comments explain why. UPD2: Following the discussion, Ruslan wrote an article.
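In line with the update above: as far as the final field semantics in chapter 17 of the Java Language Specification go, the guarantee covers the final fields themselves and what is reachable through them, not the non-final fields that happen to be written earlier in the constructor. A more conservative variant, sketched below, makes every field final. ImmutableData, its getters, and the placeholder body of reverseEngineer are assumptions for illustration. The sketch covers only the Data side and takes no position on whether Keeper may then drop volatile, which is the subject of the discussion the update refers to.

```java
// Sketch: every field is final and "this" does not escape the constructor.
final class ImmutableData {
    private final String question;
    private final int answer;
    private final int maxAllowedValue;

    ImmutableData() {
        this.answer = 42;
        this.question = reverseEngineer(this.answer);
        this.maxAllowedValue = 9000;
    }

    // Placeholder for the non-trivial calculation mentioned in the article.
    private static String reverseEngineer(int answer) {
        return "question for " + answer;
    }

    String getQuestion() {
        return question;
    }

    int getAnswer() {
        return answer;
    }

    int getMaxAllowedValue() {
        return maxAllowedValue;
    }

    public static void main(String[] args) {
        ImmutableData d = new ImmutableData();
        System.out.println(d.getAnswer() + " / " + d.getQuestion() + " / " + d.getMaxAllowedValue());
    }
}
```

Making reverseEngineer static is a small design choice in the sketch: it keeps the constructor from calling an instance method on a not yet fully initialized object.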

In addition, it is important to remember that fields can also be static, and that the JVM guarantees that a class is initialized only once, on first access. In that case, the same singleton (we will not call it a pattern or an anti-pattern within this article; the article is not about that at all ;)) can be written like this:

    public class Singleton {
        private Singleton() {}

        // Initialized by the JVM on first access to InstanceContainer.
        private static class InstanceContainer {
            private static final Singleton instance = new Singleton();
        }

        // Must be static: the private constructor leaves no other way to obtain an instance.
        public static Singleton getInstance() {
            return InstanceContainer.instance;
        }
    }

This, of course, is not advice on how to implement a singleton, since everyone who has read Effective Java knows that if you are…

By the way, to those who know that final fields can be changed through Reflection and are interested in how such changes become visible, I can say this: “everything seems to be fine, it is just not clear why, and it is not clear whether everything really is fine.” There are several discussions of this subject online. If someone explains in the comments how it really works, I will be extremely happy. If no one does, I will find out myself and will definitely tell you. UPD: It has been explained in the comments.

Surely there are other operations linked by the happens-before relation that I have not covered in this article, but they are much more specific; if you are interested, you can find them yourself in the standard or elsewhere and then share them with everyone in the comments.

Credits, links and stuff

First of all, I would like to thank the previously mentioned performance engineers, Alexey “TheShade” Shipilev and Sergey “Walrus” Kuksenko, for their consultations and for checking the article for clinical nonsense before publication.

I also provide a list of the sources I used when writing the article, along with some simply good links on the topic:

- The JSR-133 Cookbook for Compiler Writers by Doug Lea

- Slides from the report about the Java Memory Model by Sergey Kuksenko

- Chapter 17. Threads and Locks of Java Language Specification

- Java Memory Model in brief by Artem Danilov

- Java Concurrency in Practice

Source: https://habr.com/ru/post/133981/
