How we built our mini data center. Part 5 - experience, breaks, heat

Hello! In the previous articles (parts one, two, three, and four) we described how we built our mini data center.

In this article we will talk about the problems we had to live through during the past year of operation. We hope it helps you avoid the mistakes that we made at the very start and then had to fix on a live system. Let's go!

As you know from the previous articles, we had to run our optical line to a new uplink very quickly to get protection against DDoS attacks (the service was under massive DDoS attacks from competitors). Because we did it in such a hurry, we made a number of mistakes, and we paid for them in full.


First break

The first incident was not long in coming. In April (yes, in April) a severe storm with snowfall brought down many trees and, in our case, many of the poles that carried our optical cable.

Another problem was getting hold of optical cable, junction boxes, and splice closures and, most importantly, finding a crew to do the work: that day perhaps half of the providers in Dnipro were without connectivity, because the weather had brought down trees and poles and broken optical lines.

Residents sawed up the fallen trees to clear the road and get to work.

The fiber broke in four places at once, which was very sad.

The situation was further complicated because the installers had fixed the cable at a height our “standard” ladders could not reach, but we found a way out. I had to take a second car, the one used for hauling goods and for joyrides, and set the ladder up right inside it, resting it on the seat.

We got to work.

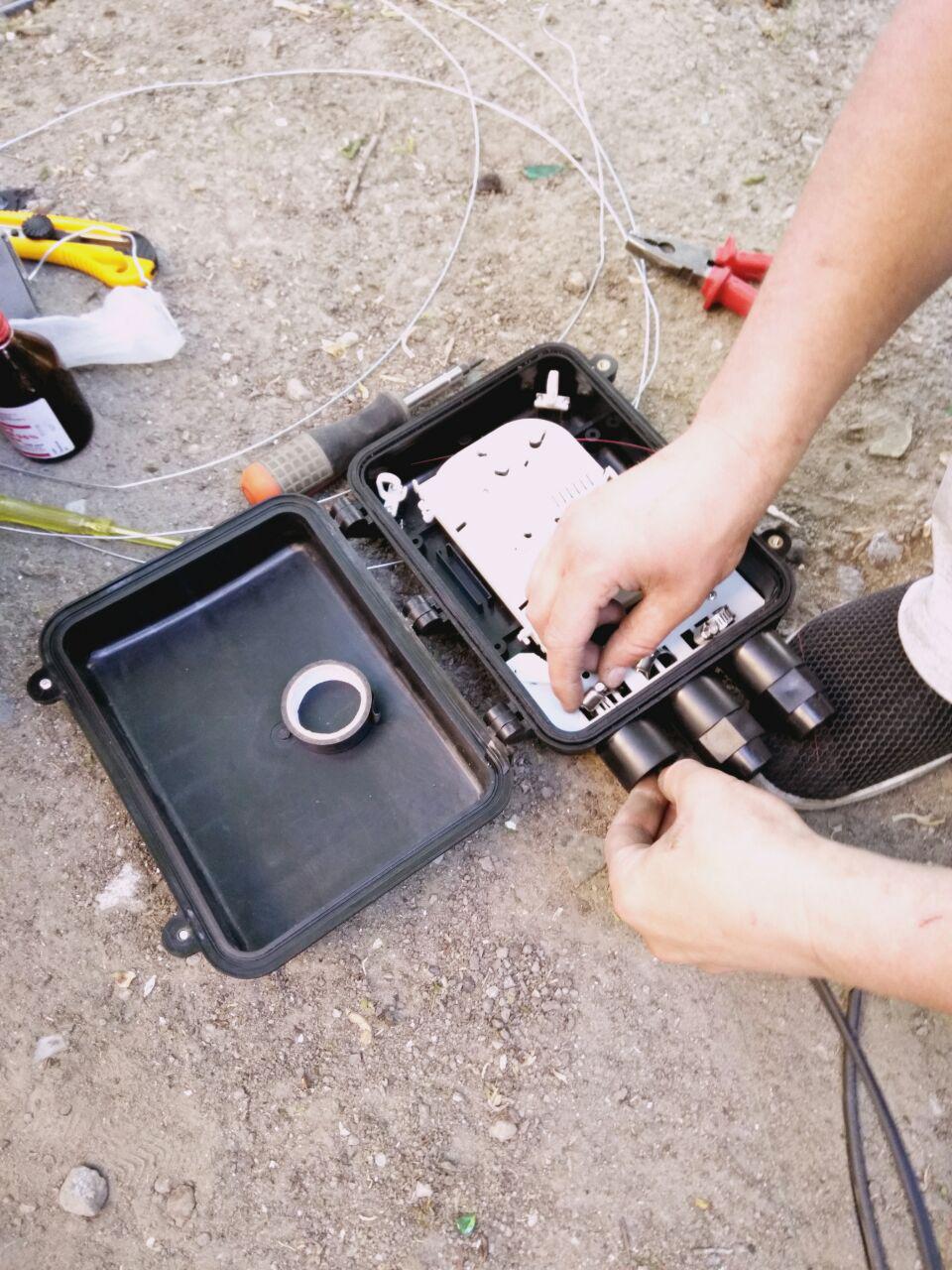

Cleaning the optical fiber.

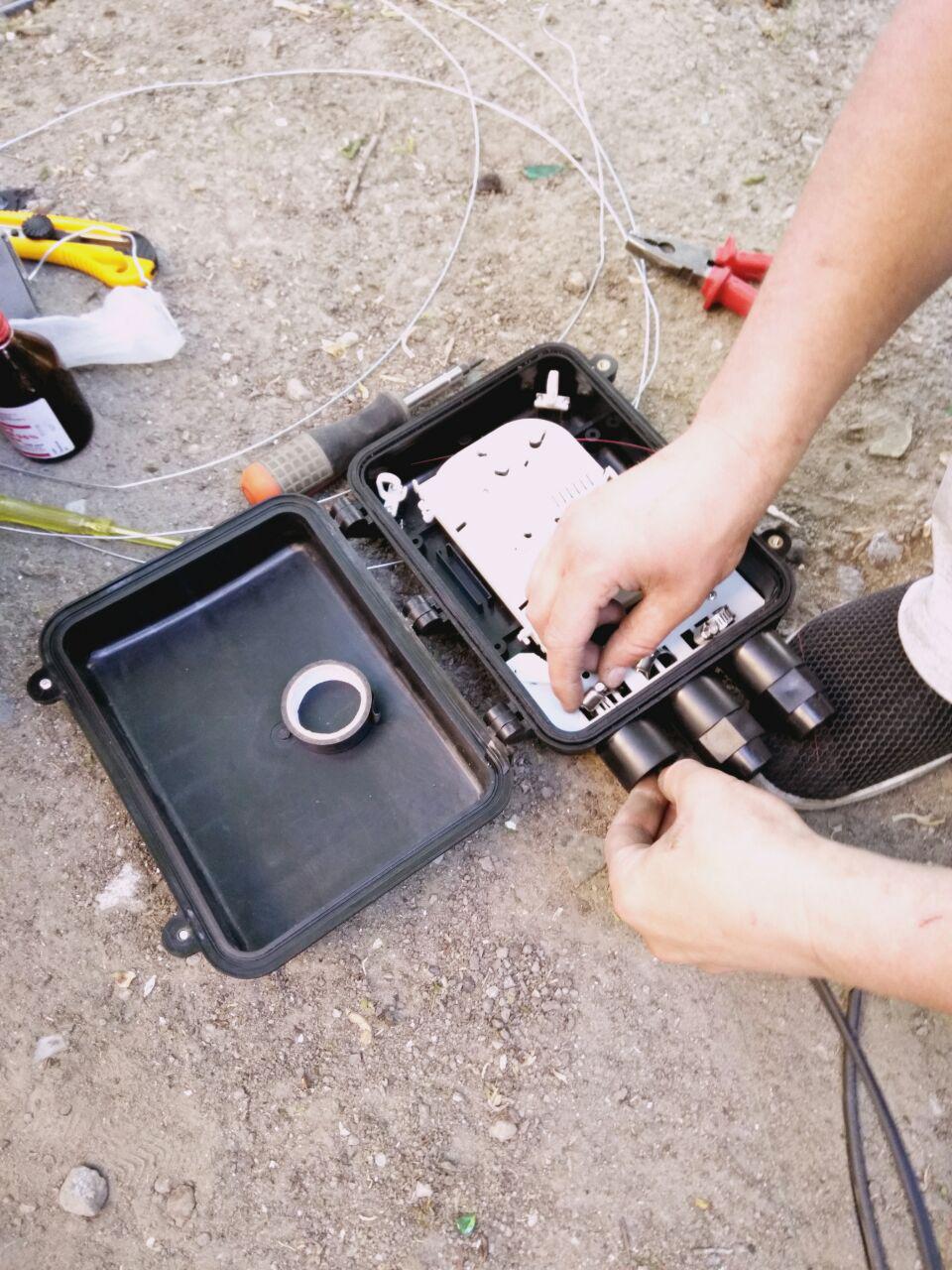

Splicing the last fiber.

It took us the whole day, from early morning almost until evening. Many thanks to the crew that helped us: they climbed icy poles, got soaked in half-meter-deep puddles, and froze in the “light” April breeze.

Second break

The second break happened a month and a half later, in the same place as the first and, as always, at the worst possible moment. This time it was caused by the municipal services, who came to remove the fallen trees and along the way broke everything the excavator bucket could reach (by accident, I hope).

This time we already knew where to go and what to bring, and we prepared thoroughly. By then our mini data center was already running on the backup uplink with full functionality, so we did not have to rush.

The repair went well, and we tried to fasten the cable as high as possible. As it turned out later, not high enough.

Third break

After a very short while the signal disappeared again, and the first thing we did was go and inspect that same stretch of road. Things were complicated by the fact that we needed to keep both optical fiber and the splice closures (“couplings”) that house the spliced fiber in stock, and with accidents this frequent our stock was running low.

The break was in exactly the same area, in two places. The first section was torn down by a heavy truck, together with the electric wires, and the second was “finished off” by a garbage truck, which wound the sagging cable around itself and ripped it off three poles.

We were thoroughly tired of this and decided to settle it for good. We called in an aerial work platform and fastened the cable so that only a falling plane could break it (God forbid, of course).

The fiber was spliced successfully and the Internet connection was restored.

Fourth break

The last break happened at the end of summer in the most inconvenient place for us: above high-voltage trolleybus wires, over a busy road. As it turned out later, electricians had been replacing fasteners and accidentally caught our cable.

We must pay tribute to the crew that repaired the break; they did it fearlessly. The height was serious even compared with our previous breaks (about 10 m).

Heat

Cable breaks and a snowy spring were not the only things we ran into this year. There was also abnormal heat, which almost paralyzed our mini data center.

In the middle of summer an abnormal heat wave began in Ukraine. The temperature in the shade rose to 45 degrees Celsius. We have a powerful, expensive ducted air conditioner which, unfortunately, had not been serviced as often as required. And so, when the heat had held at 45 degrees by day and 30 at night for a week or two, our adventures began.

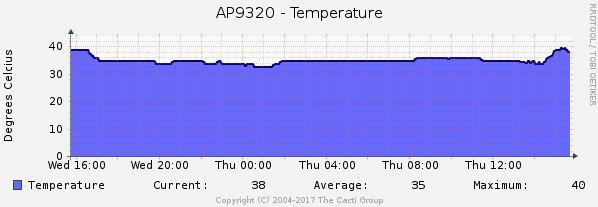

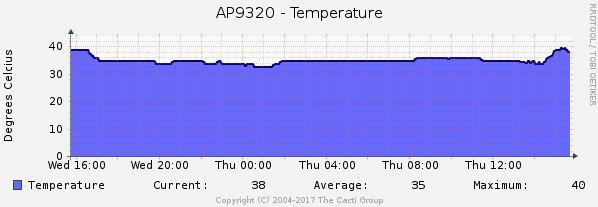

First, our data center began to “take off” because of the HP C7000 blade systems: their fans are like turbines and make a very distinctive sound. The temperature was about 40 degrees at the hottest point and about 30 at the blade systems.

First of all, of course, we serviced the air conditioner: we checked and topped up the freon and cleaned the system and the radiator. It seemed to help, but for exactly one week.

We decided to add a second, floor-standing air conditioner to help the main one. It had the opposite effect: the temperature only started to rise.

We then followed the principle of “removing the warm air” and began rebuilding the exhaust system: we bought and installed an exhaust hood, laid exhaust ducts, and so on.
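
For a rough idea of how much air has to be moved to carry heat out of a room, the standard sensible-heat relation can be used: airflow = power / (air density × specific heat of air × temperature rise). The Python sketch below is only an estimate under assumed values: the 10 kW load and the 10-degree rise are hypothetical, not measurements from our server room, and the air properties are typical figures near room temperature.

```python
AIR_DENSITY = 1.2         # kg/m^3, typical for air near room temperature
AIR_SPECIFIC_HEAT = 1005  # J/(kg*K), typical for dry air


def required_airflow_m3_per_hour(heat_load_watts, delta_t_kelvin):
    """Airflow needed to carry away heat_load_watts at a given air temperature rise."""
    if delta_t_kelvin <= 0:
        raise ValueError("temperature rise must be positive")
    m3_per_second = heat_load_watts / (
        AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_kelvin
    )
    return m3_per_second * 3600


# Hypothetical example: 10 kW of equipment, air warming by 10 degrees on the way through.
flow = required_airflow_m3_per_hour(10_000, 10)
print(f"about {flow:.0f} m^3/h")
```

Halving the allowed temperature rise doubles the airflow required, so the estimate is very sensitive to how warm the exhaust air is allowed to get.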

Testing the hood: its suction holds a sheet of A4 paper.

We even did this:

For a while this had the desired effect, and for about a month we lived relatively trouble-free, though with an elevated temperature in the server area. We decided to replace the air conditioner with a new one because we believed the old one had failed (the specialists told us so). We installed a new, more powerful air conditioner (also ducted) and spent a lot of money, but it had almost no effect.

Having tried every option we could think of and talked to a dozen contractors, we still had no adequate solution. The temperature was within the normal range but at its upper edge, which did not suit us. The servers were not overheating, but this would become a problem as soon as we added equipment.

And then one smart person (NM from the PINSPB company, hello!) suggested what seemed like an unrealistic solution. The problem was that hot air accumulated in some corners and had nowhere to go, so no matter how much cold air we supplied, it warmed up immediately. We took our colleague's advice and put together a test solution from what we had on hand: an ordinary household fan.

I honestly admit I was skeptical, and it looked silly. But after an hour of operation, the temperature in the data center dropped from 29-30 to 22-24 degrees! Of course, the household fan was a temporary measure, and two days later we installed a ventilation system that moves air through the server room on an industrial scale and helps the exhaust hood even more. But it was the fan and our colleague's advice that helped us understand the essence of the problem, which a dozen firms had not.

We hope our experience helps colleagues who are only thinking about building their own solution, or are already building it, to avoid our mistakes.

Thank you for your attention!
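
Pockets of stagnant hot air like the ones described above are easier to catch with several sensors than with a single reading. Below is a minimal Python sketch, assuming hypothetical sensor locations, readings, and thresholds (none of them come from our setup). It flags locations that are hot in absolute terms or noticeably hotter than the coldest point in the room.

```python
from statistics import mean


def find_hot_spots(readings, max_temp=27.0, max_spread=5.0):
    """Return locations that look like pockets of stagnant hot air.

    readings   -- mapping of location name to temperature in degrees Celsius
    max_temp   -- absolute limit (hypothetical value, choose your own)
    max_spread -- allowed excess over the coldest reading (hypothetical value)
    """
    coldest = min(readings.values())
    hot = {}
    for location, temp in readings.items():
        if temp > max_temp or temp - coldest > max_spread:
            hot[location] = temp
    return hot


# Hypothetical readings, for illustration only.
readings = {
    "cold aisle": 22.0,
    "blade chassis intake": 24.0,
    "rear corner": 31.0,
    "under ceiling": 29.5,
}

print(f"average: {mean(readings.values()):.1f} C")
for location, temp in sorted(find_hot_spots(readings).items()):
    print(f"hot spot: {location} at {temp:.1f} C")
```

With these made-up numbers the room average stays below the limit while two locations exceed it, which is exactly the kind of situation a single thermometer would hide.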

Source: https://habr.com/ru/post/337658/
