Three strategies for testing Terraform

I really like Terraform.

Alongside AWS CloudFormation and OpenStack Heat, it is one of the most useful tools for deploying and configuring infrastructure, and it is open source and works with almost any platform. However, there is one way of working with Terraform that worries me:

```shell
terraform plan # "Looks good; ship it!"
terraform apply
```

This may not be a problem if you are deploying to a single rack in a data center or testing in an AWS account with limited permissions. In such situations, it is hard to do much harm.

But what if the deployment runs under an all-seeing, all-powerful production account, or spans an entire data center? That seems very risky to me.

Integration and unit testing can solve this problem. You may ask: “Unit testing, as in software?” Yes, that kind of unit testing!

In this article, we will briefly cover what integration and unit testing are, look at the problems involved in testing infrastructure, and review the testing strategies used in practice. We will also touch on infrastructure deployment strategies as they relate to testing. Although the article contains a fair amount of code, readers do not need deep programming knowledge.

Introduction to software testing

Developers use unit tests to verify that individual functions work correctly, and integration tests to check that those functions work as part of the whole application. Let's illustrate the difference with an example.

Suppose I am writing a calculator in a pseudo-language, and I am now writing a function that adds two numbers. It looks like this:

```
function addTwoNumbers(integer firstNumber, integer secondNumber) {
  return (firstNumber + secondNumber);
}
```

A unit test for this function looks like this:

```
function testAddTwoNumbers() {
  firstNumber = 2;
  secondNumber = 4;
  expected = 6;
  actual = addTwoNumbers(firstNumber, secondNumber);
  if ( expected != actual ) {
    return testFailed("Expected %s but got %s", expected, actual);
  }
  return testPassed();
}
```

As the listing shows, the unit test checks that addTwoNumbers really adds two numbers. Trivial as they seem, such tests are very important, and their absence has led to the failure of many large projects!
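For comparison, here is the same unit test as runnable Ruby, the language the RSpec examples later in this article use. It is a minimal sketch without a test framework; `add_two_numbers` and `test_add_two_numbers` are illustrative names only.

```ruby
# The function under test: adds two integers.
def add_two_numbers(first_number, second_number)
  first_number + second_number
end

# The unit test calls the function in isolation with known inputs
# and compares the actual result with the expected one.
def test_add_two_numbers
  expected = 6
  actual = add_two_numbers(2, 4)
  return "FAILED: expected #{expected} but got #{actual}" if expected != actual

  "PASSED"
end

puts test_add_two_numbers # prints "PASSED"
```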

Making sure this function works as part of the whole application is much harder, and how hard depends on the complexity of the application and its dependencies. In our case, an integration test for the calculator looks fairly simple:

```
Calculator testCalculator = new Calculator();
firstNumber = 1;
secondNumber = 2;
expected = 3;
actual = testCalculator.addTwoNumbers(firstNumber, secondNumber);
# add other tests here
Test testAddTwoNumbers {
  if ( expected != actual ) {
    return testFailed("Expected %s, but got %s.", expected, actual);
  }
  return testPassed();
}
```

We create testCalculator, an instance of the Calculator class, and check that its addition function works. While the unit test exercised this function in isolation from the other components of the Calculator class, the integration test puts all the components together to make sure that changes to addTwoNumbers have not broken the rest of the application.
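A runnable Ruby sketch of the same idea follows. The `Calculator` class, its `evaluate` entry point and the expression format are hypothetical; the point is that the integration test goes through the application's public entry point, so parsing, dispatch and addition are all exercised together rather than `add_two_numbers` alone.

```ruby
# Hypothetical calculator: evaluate is the application's entry point.
class Calculator
  def add_two_numbers(first_number, second_number)
    first_number + second_number
  end

  def subtract_two_numbers(first_number, second_number)
    first_number - second_number
  end

  # Parses expressions of the form "INT OP INT", e.g. "1 + 2",
  # and dispatches to the matching arithmetic method.
  def evaluate(expression)
    left, operator, right = expression.split
    operands = [Integer(left), Integer(right)]
    case operator
    when "+" then add_two_numbers(*operands)
    when "-" then subtract_two_numbers(*operands)
    else raise ArgumentError, "unsupported operator: #{operator}"
    end
  end
end

# Integration test: exercises parsing, dispatch and addition together.
def test_calculator_addition
  test_calculator = Calculator.new
  expected = 3
  actual = test_calculator.evaluate("1 + 2")
  return "FAILED: expected #{expected}, but got #{actual}" if expected != actual

  "PASSED"
end

puts test_calculator_addition # prints "PASSED"
```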

“I deploy infrastructure, Carlos. Why do I need all this?”

Let me explain.

Have you ever written a script like this...

```
function increaseDiskSize(string smbComputerName, string driveLetter) {
  wmiQueryResult = new wmiObject("SELECT * FROM WIN32_LOGICALDISK WHERE DRIVELETTER = \"%s\"", driveLetter);
  if ( wmiQueryResult != null ) {
    # do stuff
  }
}
```

...hoping it would work properly? Did you rerun it several times on a test machine, fixing defects as you found them? Has it ever failed to work as it should once you ran it on a production server? Did you lose an evening or a weekend because of it?

If so, you already understand the problems with this approach.

```shell
terraform plan # "Looks good; ship it!"
terraform apply
```

It's the same thing!

Terraform Test Strategy #1: Visual Inspection

terraform plan is a great feature that shows you what Terraform is going to do. It was created so that practitioners could review the upcoming actions, make sure everything looks right, and then run terraform apply as the final step.

Let's say you are going to deploy a Kubernetes cluster to AWS with a VPC and an EC2 key pair. The corresponding code might look like this (worker nodes omitted):

```hcl
provider "aws" {
  region = "${var.aws_region}"
}

resource "aws_vpc" "infrastructure" {
  cidr_block = "10.1.0.0/16"
  tags = {
    Name        = "vpc.domain.internal"
    Environment = "dev"
  }
}

resource "aws_key_pair" "keypair" {
  key_name   = "${var.key_name}"
  public_key = "${var.public_key}"
}

resource "aws_instance" "kubernetes_controller" {
  ami           = "${data.aws_ami.kubernetes_instances.id}"
  instance_type = "${var.kubernetes_controller_instance_size}"
  count         = 3
}
```

With visual inspection, you run terraform plan (supplying the appropriate variables), make sure everything looks right, and run terraform apply to apply the changes.

Benefits

Development takes little time

This approach works well at a small scale or when you need to write a configuration quickly to try something new. The feedback loop is very fast: write the code, run terraform plan, check it, and if everything looks fine, run terraform apply. Over time, you learn to churn out new configurations quickly.

Gentle learning curve

Anyone can do this, regardless of their Terraform knowledge, programming experience, or system administration background: the plan contains almost all the information needed.

Disadvantages

Errors are hard to spot

Let's say you have read the paper on Borg and decided to deploy five Kubernetes master replicas instead of three. You glance at the plan and, absolutely sure everything is in order, apply it. Then it suddenly turns out that you overlooked the most important thing: three masters were deployed, not five!

In this example, the fix is simple: change the variable values in variables.tf and apply the cluster again. Things could be much worse, however, if this were a production cluster on which feature teams had already deployed their services.
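An automated check catches what a quick glance at the plan missed. This is a minimal sketch, assuming the plan has already been turned into a Ruby hash keyed by resource address; the hash layout, sample data and helper names (`count_planned`, `check_master_count`) are all hypothetical.

```ruby
# Sample plan: resource address => planned attributes (hypothetical layout).
PLANNED_RESOURCES = {
  "aws_vpc.infrastructure" => { "cidr_block" => "10.1.0.0/16" },
  "aws_instance.kubernetes_controller[0]" => {},
  "aws_instance.kubernetes_controller[1]" => {},
  "aws_instance.kubernetes_controller[2]" => {}
}.freeze

# Counts planned instances of one resource, with or without a count index.
def count_planned(plan, resource_address)
  plan.keys.count do |address|
    address == resource_address || address.start_with?("#{resource_address}[")
  end
end

# Returns an error message if the plan disagrees with the desired count, else nil.
def check_master_count(plan, desired)
  planned = count_planned(plan, "aws_instance.kubernetes_controller")
  return nil if planned == desired

  "plan creates #{planned} Kubernetes masters, but #{desired} were requested"
end

problem = check_master_count(PLANNED_RESOURCES, 5)
# A real pipeline would exit non-zero here instead of just printing.
puts(problem ? "Refusing to apply: #{problem}" : "Plan looks right")
```

Run before terraform apply, a check like this turns "I forgot to change the count" into a failed build instead of a wrongly sized cluster.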

Scaling issues

Visual inspection is fine if you don't use Terraform heavily.

Things get more complicated once you start deploying dozens or hundreds of infrastructure components, each spread over dozens or hundreds of servers. Modules illustrate the problem well. Take a look at this configuration:

```hcl
# main.tf
module "stack" {
  source                    = "git@github.com:team/terraform_modules/stack.git"
  number_of_web_servers     = 2
  number_of_db_servers      = 2
  aws_account_to_use        = "${var.aws_account_number}"
  aws_access_key            = "${var.aws_access_key}"
  aws_secret_key            = "${var.aws_secret_key}"
  aws_region_to_deploy_into = "${var.aws_region}"
  …
}
```

It looks simple enough: it says where the module lives and which input variables it takes. You decide to find out what the module actually does, open its code, and see...

```hcl
# team/terraform_modules/stack/main.tf
module "web_servers_in_stack" {
  source         = "git@github.com:team/terraform_modules/core/web.git"
  count          = "${var.number_of_web_servers}"
  type           = "${coalesce(var.web_server_type, "IIS")}"
  aws_access_key = "${var.aws_access_key}"
  aws_secret_key = "${var.aws_secret_key}"
  …
}

module "db_servers_in_stack" {
  source         = "git@github.com:team/terraform_modules/core/db.git"
  count          = "${var.number_of_db_servers}"
  type           = "${coalesce(var.db_server_type, "MSSQLServerExp2016")}"
  aws_access_key = "${var.aws_access_key}"
  aws_secret_key = "${var.aws_secret_key}"
  …
}
```

...and more modules! As you can guess, following chains of repositories or directories to find out what the current Terraform configuration actually does is tedious at best and error-prone at worst, and doing it in the middle of an outage is very costly!

Terraform Test Strategy #2: Integration Testing First


To solve the problems of the previous method, you can use tools such as serverspec, Goss, and/or InSpec. With this approach, your plans are first applied in a sandbox, and the result is verified automatically. If all tests pass, you can proceed to deploy to production; otherwise, you analyze and fix the errors.

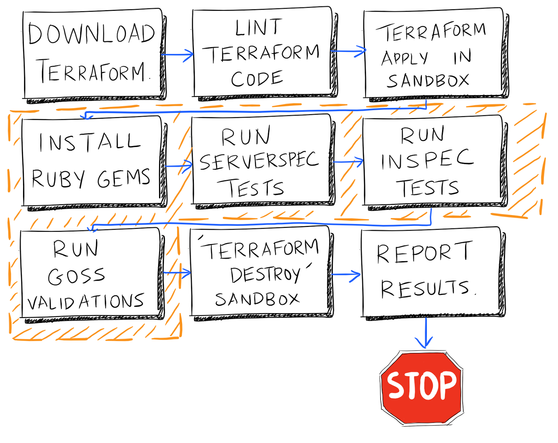

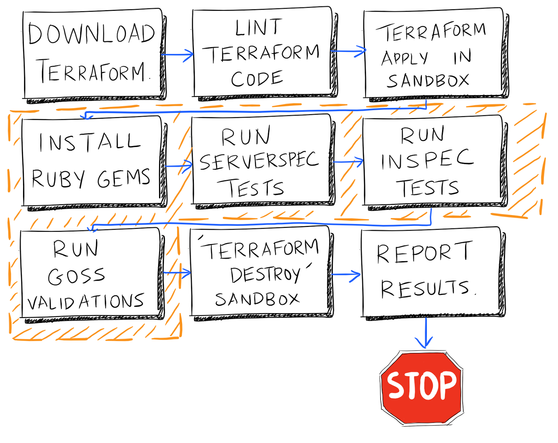

With a CI pipeline in Jenkins or Bamboo, the process might look like this:

Scheme 1. An example infrastructure deployment procedure that uses integration testing. Steps performed in the sandbox are marked in orange.
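The flow described above boils down to a few lines of control logic. This is a minimal sketch, not a CI configuration: each step is a hypothetical lambda standing in for the real command (terraform apply in the sandbox, the serverspec, Goss or InSpec checks, the production deploy, and terraform destroy), and `run_pipeline` is an illustrative name.

```ruby
# Runs the "integration tests first" flow. Each step is a callable that
# returns true on success. Production is only touched after the sandbox
# tests pass, and the sandbox is always torn down, even on errors.
def run_pipeline(steps)
  log = []
  status =
    if !steps[:apply_sandbox].call
      log << "sandbox apply failed"
      :failed
    elsif !steps[:run_integration_tests].call
      log << "integration tests failed; analyze and fix before deploying"
      :failed
    elsif !steps[:deploy_production].call
      log << "production deploy failed"
      :failed
    else
      log << "integration tests passed; deployed to production"
      :deployed
    end
  [status, log]
ensure
  steps[:destroy_sandbox].call
end

status, log = run_pipeline(
  apply_sandbox: -> { true },          # stand-in for terraform apply in the sandbox
  run_integration_tests: -> { false }, # stand-in for serverspec / Goss / InSpec
  deploy_production: -> { raise "must not deploy when tests fail" },
  destroy_sandbox: -> { true }         # stand-in for terraform destroy
)
puts status # prints "failed"
puts log
```

Putting the teardown in `ensure` keeps sandboxes from piling up when a step fails or raises, which matters for the cost concerns discussed below.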

This is a big step in the right direction, but there are pitfalls here that are worth talking about separately.

Benefits

Allows you to get rid of long-lived development environments and encourages the use of immutable infrastructure

A common practice is to keep long-lived development environments or sandboxes for testing software updates and script changes. These environments usually differ from production just enough that what “works in dev” breaks in production. This leads to “testing in production”, which can ultimately cause outages due to human error.

Well-written integration tests allow you to abandon these unsafe practices.

Each sandbox created during integration testing is an exact copy of production, because each sandbox eventually becomes production. This is a key step toward immutable infrastructure, in which any potential change to the production system goes into a hotfix or a future release, and ad hoc changes in production are both prohibited and unnecessary.

This does not mean the approach eliminates the environments that product developers use directly (integration testing of the software itself also calls the need for such environments into question, but that topic is beyond the scope of this article).

Documents the infrastructure

You no longer need to wade through numerous modules to figure out what an infrastructure deployment will do. If the tests cover 100% of the Terraform code (more on this later), they contain comprehensive information and act as a contract that the infrastructure must honor.

Allows you to tag versions and "releases" of the infrastructure

Since integration tests exercise the system as a whole, they let you tag versions of your Terraform code with git tags or similar tools. You can use these tags to roll back to a previous state (especially when combined with a blue-green deployment strategy) or to let developers test how the features they are building behave on different versions of the infrastructure.

Can be the first line of defense

Well-designed integration tests act as an early-warning system for errors in third-party code.

Let's say you set up a Jenkins or Bamboo pipeline that runs the integration tests for your Terraform infrastructure twice a day and notifies you when they fail.

A few days later, you get an alert that an integration test failed. In the build log you see a Chef error saying it could not install IIS because the installer was not found. Digging further, you find that the URL used to install IIS is outdated and needs updating.

You clone the repository that contains the cookbook, update the URL, rerun the integration tests locally, wait for them to pass, and then open a pull request asking the team that owns the repository to accept the change.

Congratulations! You have just saved your weekend by fixing the bug ahead of time instead of waiting for the release and scrambling when the bug surfaced in the middle of it.

Disadvantages

It can take a lot of time

Depending on the resources the Terraform configuration creates and the number of modules it references, a Terraform run can take a long time. This slows down the feedback loop in which an engineer makes a change, waits for the tests to finish, tracks down the cause of any failure, and starts again. After enough iterations, engineers will eventually find ways around the tests.

It can be quite expensive

Running full integration tests in a sandbox means mirroring the entire infrastructure for a short time (albeit at a smaller scale). Doing this to check every small change can add up to a considerable bill over time, especially if the mirror is large.
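To see how this adds up, here is a back-of-the-envelope estimate. Every input is hypothetical (20 instances at 10 cents per hour, one-hour runs, two runs a day); the only point is that cost scales with mirror size times run frequency. Prices are kept in integer cents to avoid floating-point rounding, and the estimate ignores storage, data transfer, and billing granularity.

```ruby
# Rough monthly cost of spinning up a sandbox mirror for every test run.
# All inputs are hypothetical; substitute your own instance count and prices.
def monthly_sandbox_cost_cents(instances:, hourly_rate_cents:, hours_per_run:, runs_per_day:, days: 30)
  instances * hourly_rate_cents * hours_per_run * runs_per_day * days
end

cents = monthly_sandbox_cost_cents(instances: 20, hourly_rate_cents: 10,
                                   hours_per_run: 1, runs_per_day: 2)
puts format("$%.2f per month", cents / 100.0) # prints "$120.00 per month"
```

Doubling either the mirror size or the number of runs doubles the bill, which is why teams often shrink the sandbox or batch small changes together.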

This matters less if you run your own servers: you can apply Terraform configurations to spare hardware and then tear them down with terraform destroy. That, however, brings back the time problem, since cleaning up and preparing for the next test run can take a while.

Code coverage is hard to measure

Unlike most programming languages and mature configuration management tools such as Chef, Terraform has no built-in way to report what percentage of the configuration the integration tests cover. Teams that go this route must watch closely that coverage stays high, and they will most likely have to write their own tooling, for example, a script that scans all module references and looks for a matching spec for each one.
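A minimal sketch of such a tool follows. It assumes a hypothetical convention where every module referenced in a .tf file should have a spec named spec/MODULE_NAME_spec.rb; the naming convention, the regular-expression scan and the helper names are all assumptions. A real tool would parse HCL properly and follow module sources into other repositories.

```ruby
require "set"

# Matches module blocks such as: module "web_servers_in_stack" {
# (a simplification; a real tool should parse HCL instead of using a regex).
MODULE_PATTERN = /^\s*module\s+"([^"]+)"/

# Collects the names of all modules referenced in the given Terraform sources.
def referenced_modules(tf_sources)
  tf_sources.flat_map { |text| text.scan(MODULE_PATTERN).flatten }.to_set
end

# Returns [coverage percent, sorted names of modules without a spec file].
def module_coverage(tf_sources, spec_files)
  modules = referenced_modules(tf_sources)
  return [100.0, []] if modules.empty?

  specced = spec_files.map { |path| File.basename(path, "_spec.rb") }.to_set
  uncovered = (modules - specced).to_a.sort
  percent = 100.0 * (modules.size - uncovered.size) / modules.size
  [percent.round(1), uncovered]
end

main_tf = <<~HCL
  module "web_servers_in_stack" {
    source = "git@github.com:team/terraform_modules/core/web.git"
  }

  module "db_servers_in_stack" {
    source = "git@github.com:team/terraform_modules/core/db.git"
  }
HCL

percent, uncovered = module_coverage([main_tf], ["spec/web_servers_in_stack_spec.rb"])
puts "#{percent}% of modules have specs; missing: #{uncovered.join(', ')}"
# prints "50.0% of modules have specs; missing: db_servers_in_stack"
```

In CI, the build could fail whenever the percentage drops below an agreed threshold.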

Tools

kitchen-terraform is currently the most popular integration testing tool for Terraform. It builds on Test Kitchen, the same Kitchen you may have used with Chef: as with any Test Kitchen setup, you describe how Terraform should behave in .kitchen.yml, and Kitchen takes care of the rest.

Goss is a simple validation and health-check tool: you define the desired state of a system, and Goss either validates the system against that definition or serves a /healthz endpoint that reports whether the system passes the checks. It is similar to serverspec, which achieves a comparable result (minus the handy web-based health endpoint) and works well with Ruby.

Terraform Test Strategy #3: Unit Tests First

An experienced developer may ask: “I usually write unit tests before integration tests. Does the same apply to Terraform?” Thank you for reading this far!

As noted earlier, integration tests check that the components of a system work together correctly. Unit tests check that each component works correctly on its own, in isolation from the others. Unit tests should be fast, easy to understand, and easy to write. Good unit tests run anywhere, with or without a network connection.

Returning to our Kubernetes cluster example, a simple unit test for the resources defined above, written with the popular Ruby testing framework RSpec, might look like this:

```ruby
# spec/infrastructure/core/kubernetes_cluster_spec.rb
# $terraform_plan, $terraform_tfvars and obtain_latest_coreos_version_and_ami!
# are expected to be set up by spec_helper.rb.
require 'spec_helper'
require 'set'

describe "KubernetesCluster" do
  before(:all) do
    @vpc_details = $terraform_plan['aws_vpc.infrastructure']
    @controllers_found = $terraform_plan['kubernetes-cluster'].select do |key,value|
      key.match /aws_instance\.kubernetes_controller/
    end
    @coreos_amis = obtain_latest_coreos_version_and_ami!
  end

  context "Controller" do
    context "Metadata" do
      it "should have retrieved EC2 details" do
        expect(@controllers_found).not_to be_nil
      end
    end

    context "Sizing" do
      it "should be defined" do
        expect($terraform_tfvars['kubernetes_controller_count']).not_to be_nil
      end

      it "should be replicated the correct number of times" do
        expected_number_of_kube_controllers =
          $terraform_tfvars['kubernetes_controller_count'].to_i
        expect(@controllers_found.count).to eq expected_number_of_kube_controllers
      end

      it "should use the same AZ across all Kubernetes controllers" do
        # We aren't testing that these controllers actually have AZs
        # (it can be empty if not defined). We're solely testing that
        # they all use the same AZ.
        azs_for_each_controller = @controllers_found.values.map do |controller_config|
          controller_config['availability_zone']
        end
        deduplicated_az_set = Set.new(azs_for_each_controller)
        expect(deduplicated_az_set.count).to eq 1
      end
    end

    it "should be fetching the latest stable release of CoreOS for region #{ENV['AWS_REGION']}" do
      expected_ami_id = @coreos_amis[ENV['AWS_REGION']]['hvm']
      @controllers_found.each_value do |this_controller_details|
        # The planned AMI must be the latest CoreOS AMI for this region.
        expect(this_controller_details['ami']).to eq expected_ami_id
      end
    end

    it "should use the instance size requested" do
      expected_instance_size = $terraform_tfvars['kubernetes_controller_instance_size']
      @controllers_found.each_value do |this_controller_details|
        expect(this_controller_details['instance_type']).to eq expected_instance_size
      end
    end

    it "should use the key provided" do
      expected_ec2_instance_key_name = $terraform_tfvars['kubernetes_controller_ec2_instance_key_name']
      @controllers_found.each_value do |this_controller_details|
        expect(this_controller_details['key_name']).to eq expected_ec2_instance_key_name
      end
    end
  end
end
```

Here are a few important points to note:

Easy to read

RSpec lets us state what a test is and what it should do with keywords that read almost like plain English. Look at this fragment:

```ruby
describe "KubernetesCluster" do
  before(:all) do
    @vpc_details = $terraform_plan['aws_vpc.infrastructure']
    @controllers_found = $terraform_plan['kubernetes-cluster'].select do |key,value|
      key.match /aws_instance\.kubernetes_controller/
    end
    @coreos_amis = obtain_latest_coreos_version_and_ami!
  end

  context "Controller" do
    context "Metadata" do
      it "should have retrieved EC2 details" do
        expect(@controllers_found).not_to be_nil
      end
    end
```

Here we describe the Kubernetes cluster: before all the tests, we run some setup statements, and then we run a series of controller-related tests that focus on the cluster's metadata. We expect the EC2 details to have been retrieved, that is, that the collection of controllers found in the plan is not nil.

They are fast

Once you have a generated Terraform plan (we will come back to this shortly), unit tests run in under a second. There are no sandboxes to query and no AWS API calls to make; we simply compare what we wrote with what Terraform generated from it.
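The specs above read the plan through a global `$terraform_plan` hash. As an assumption, this sketch builds such a hash from the JSON that `terraform show -json` prints for a saved plan file, a feature of Terraform 0.12 and later that postdates this article, keeping only each resource's planned `after` attributes. `load_plan` is a hypothetical helper, and the flat, address-keyed layout it produces differs slightly from the module-keyed lookup used in the spec above.

```ruby
require "json"

# Hypothetical helper: turns `terraform show -json` plan output into
# { resource address => planned attribute values }.
def load_plan(plan_json)
  plan = JSON.parse(plan_json)
  plan.fetch("resource_changes", []).each_with_object({}) do |resource_change, resources|
    after = resource_change.dig("change", "after")
    # Resources the plan would destroy have no "after" state; skip them.
    resources[resource_change["address"]] = after unless after.nil?
  end
end

# Trimmed-down sample in the shape of `terraform show -json` output.
SAMPLE_PLAN_JSON = <<~JSON
  {
    "resource_changes": [
      { "address": "aws_vpc.infrastructure",
        "change": { "actions": ["create"], "after": { "cidr_block": "10.1.0.0/16" } } },
      { "address": "aws_instance.kubernetes_controller[0]",
        "change": { "actions": ["create"], "after": { "instance_type": "t2.medium" } } }
    ]
  }
JSON

$terraform_plan = load_plan(SAMPLE_PLAN_JSON)
puts $terraform_plan["aws_vpc.infrastructure"]["cidr_block"] # prints 10.1.0.0/16
```

Because parsing a JSON file is all that happens at test time, the specs stay fast and need no network access.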

Easy to write

Apart from a few special cases (see Disadvantages), everything used in these tests comes from the RSpec documentation. There are no hidden methods or pitfalls here, unless, of course, you added them yourself.

So why unit-test Terraform code?

Benefits

Enables test-driven development

Test-driven development is a software development technique in which functionality is written only after a test that defines what that functionality should do. This way, every important piece of functionality gets tests that describe it.

With Terraform, this means starting with a unit test like this one...

```ruby
describe "VPC" do
  context "Networking" do
    it "should use the right CIDR" do
      expected_cidr_block = $terraform_tfvars['cidr_block']
      expect($terraform_plan['aws_vpc.vpc']['cidr_block']).to eq expected_cidr_block
    end
  end
end
```

...and only then writing main.tf: you run rake unit_test (or whatever task runs your tests), watch the test fail, and change main.tf until it passes.
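The red-green cycle can be sketched without RSpec. Here the plan is a hash keyed by resource address (an assumption, as are the helper name `check_vpc_cidr` and the CIDR values): the check fails against a plan generated before main.tf declares the VPC, and passes once it does.

```ruby
# The VPC test from above as a plain function: returns nil when it passes,
# or a failure message otherwise.
def check_vpc_cidr(plan, tfvars)
  vpc = plan["aws_vpc.vpc"]
  return "aws_vpc.vpc is not in the plan" if vpc.nil?

  expected = tfvars["cidr_block"]
  actual = vpc["cidr_block"]
  return "expected CIDR #{expected} but got #{actual}" unless actual == expected

  nil
end

tfvars = { "cidr_block" => "10.1.0.0/16" }

# Red: the test comes first, so the plan does not contain the VPC yet.
plan_before = {}
# Green: main.tf now declares aws_vpc.vpc with the CIDR from the variables.
plan_after = { "aws_vpc.vpc" => { "cidr_block" => "10.1.0.0/16" } }

puts check_vpc_cidr(plan_before, tfvars) || "passed" # prints "aws_vpc.vpc is not in the plan"
puts check_vpc_cidr(plan_after, tfvars) || "passed"  # prints "passed"
```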

- Terraform Terraform. . , Terraform- , , «».

- ( VPC CIDR AWS), -. , , , , .

Disadvantages

, - , . :

- Terraform

«» , , « », . , , , - .

TDD ,

, . , TDD, , . , ( ) .

Terraform , , Terraform . « » .

DevOps . , , Chef Terraform, . - Terraform . , Ruby. , , , , .

( )

- Terraform . .

-. . Terraform-, VPC, Kubernetes- ( ) Route53. , , . RSpec ( , , , AWS-, OpenStack-).

spec/spec_helper.rb . Terraform ( Jenkins Jenkinsfile S3 ) Terraform-. , Terraform . , , Terraform, .

- tfplan , , . , . , terraform show , , , . . . , , — tfjson Palantir, Terraform JSON. ( Terraform- , . Rakefile , Terraform.)

Pull requests are welcome!

- Terraform- Emre Erkunt . business driven development , - AWS-.

AWS- . , , .

:

- « » :

2. .

- . Terraform- , . - , , . - Git SVN, - (pull request) master .

- , serverspec kitchen-terraform. , CI- , . Terraform , , .

master «» . Terraform CI-, «» — , . DNS , ( -) ( , «» ).

, .

:

Source: https://habr.com/ru/post/335678/
