Agile when working with outsourced developers

My name is Ilya Kitanin, and I head the development team at Cofoundit, a service that helps startups find employees. Today I'll explain how to work with third-party developers without overpaying or missing deadlines, using statistics and the principles of agile methodology.

Background and preparation

Since March 2016, Cofoundit had been operating in manual mode: we selected employees and co-founders for startups ourselves and studied user needs. We gathered the requirements and began developing the service in mid-June. The prototype took two months and finalizing the release version took another month: we released a closed beta in August, launched officially at the end of September, and have kept working since.

From the very beginning, agile was the only methodology I considered. We devoted the first week to planning: we broke the work down into small tasks and planned one-week sprints. Most tasks fit into a single sprint, although at first some took two or even three.

The first sprints were frankly unsuccessful: we delayed a release by a week and began falling behind schedule. But this experience taught us to estimate time correctly and to drop excess functionality. Initially we wanted a service with symmetric search, so that both specialists and projects could view each other's profiles and start a conversation. In the end only the first half stayed in scope: a candidate can browse and select startup profiles, while a project sees only those specialists who have already shown interest in it. In effect, we launched a minimum viable product.

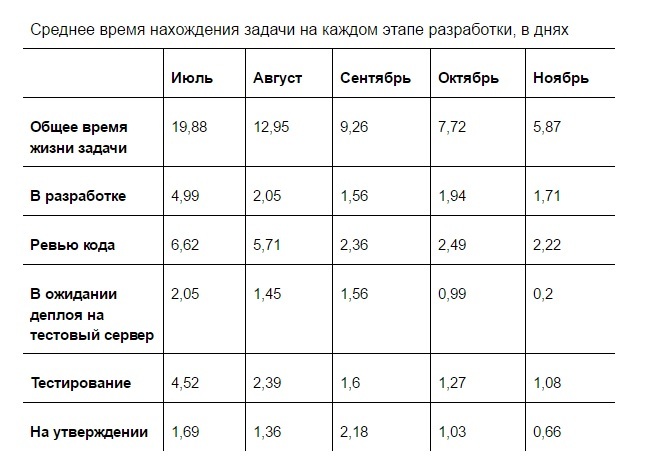

Performance management

Using Jira and the Time in Status plug-in, I constantly monitored how the team worked and how long the task life cycle took. I tracked five main stages: development (from task creation to submission for review), code review (done on the outsourcing team's side), deployment, testing, and acceptance (on the service side, that is, by me).

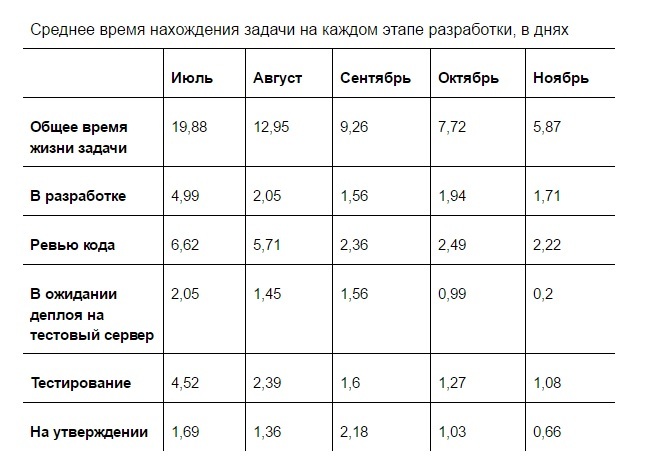

The table shows the number of days a task spent at each stage. Although only one specialist (developer, tester, or manager) worked on a task at any given time, these figures are not man-hours: a task remains in a status even when nobody is working on it. I deliberately leave out requirements gathering, waiting for release, and other stages that do not reflect the team's speed. We did not collect proper statistics in June, so that month is missing from the table.
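A table like this can be derived from a log of status changes. The sketch below is only an illustration of the idea, not the plug-in's actual method: the `TRANSITIONS` data, the status names, and both helper functions are hypothetical. It counts calendar days between consecutive status changes, which is why the result measures time in status rather than man-hours.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical status-change log: (task id, status entered, date).
# Status names mirror the five stages above; the dates are made up.
TRANSITIONS = [
    ("TASK-1", "development", "2016-07-04"),
    ("TASK-1", "review", "2016-07-08"),
    ("TASK-1", "deploy", "2016-07-11"),
    ("TASK-1", "testing", "2016-07-12"),
    ("TASK-1", "acceptance", "2016-07-15"),
    ("TASK-1", "done", "2016-07-16"),
]


def days_in_status(transitions):
    """Sum the calendar days spent in each status across all tasks."""
    by_task = defaultdict(list)
    for task, status, day in transitions:
        by_task[task].append((datetime.strptime(day, "%Y-%m-%d"), status))

    totals = defaultdict(int)
    for events in by_task.values():
        events.sort()
        # A status lasts from its own date until the next transition,
        # whether or not anyone was actually working on the task.
        for (start, status), (end, _next_status) in zip(events, events[1:]):
            totals[status] += (end - start).days
    return dict(totals)


def share_by_status(totals):
    """Convert day totals into percentages of the whole life cycle."""
    whole = sum(totals.values())
    return {status: round(100 * days / whole, 1) for status, days in totals.items()}


totals = days_in_status(TRANSITIONS)
shares = share_by_status(totals)
```

Looking at shares rather than raw days makes it easier to see which stage dominates the life cycle in a given month.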

The July results showed that tasks spent the longest in three statuses: development, testing, and review. Slow testing was the easiest to fix. Our tester worked part-time and simply could not test every task quickly. We talked to the contractor and moved him to the project full-time, which cut testing time in half.

For the main recurring scenarios we started writing automated tests in Selenium. This let our tester run regression testing faster and cut the time spent filling out the forms that have to be completed before new functionality can be tested. We then kept adding autotests and improving the metrics.

Next, I tackled delays in the development stage. With weekly iterations, I could not let a task sit in development for five days, the entire sprint: I wanted to see the functionality several times before the final release. I solved this by splitting tasks into smaller ones.

The August results showed that code review by the outsourcing team was the lagging metric. At first I kept reminding the reviewer myself to look at the code and leave comments. In November I handed this responsibility to the tester: from then on, as soon as he finished with a task, he notified the person responsible for review himself. We were able to adopt this approach fully only in November, once deployment to the test server started taking less time.

In September the weak metrics were acceptance by the service team and waiting for deployment to the test server. Acceptance sped up simply because I paid closer attention and responded to tasks faster. By that time the development process had settled into a rhythm, and keeping it running took little of my time.

In September, deployment to the test server took up more than 15% of the total time, which was extremely strange. It turned out that after review, the last person to work on the task deployed it to the test server by hand. Branches had to be merged, conflicts sometimes arose, and the developer was pulled away from current tasks. In October we hooked automatic deployment up to the repository, which cut deployment time considerably. We then fine-tuned the process and, over two months, reduced deployment time by a factor of 8 (eight!).

Overall, in five months we cut the time to complete a task almost fourfold. Previously a task took an average of 20 calendar days from being assigned to being accepted. Now that this has dropped to five calendar days, it is easier to control the development stages, estimate final deadlines, and plan the team's workload.

Timing prediction

Another important task is estimating time accurately. Under our contract with the contractor, accurate estimates were generally not in my financial interest: if a task was finished faster, we paid only for the time actually spent, and if the team missed the deadline, we paid less.

But I had other reasons to want accurate estimates. For example, implementation speed sometimes influences which functionality gets built. If one feature takes a day and another takes three, a manager will most likely choose the faster one. Yet the "one-day" feature may in practice take the same three days, and had that been known in advance, the choice might have been different.

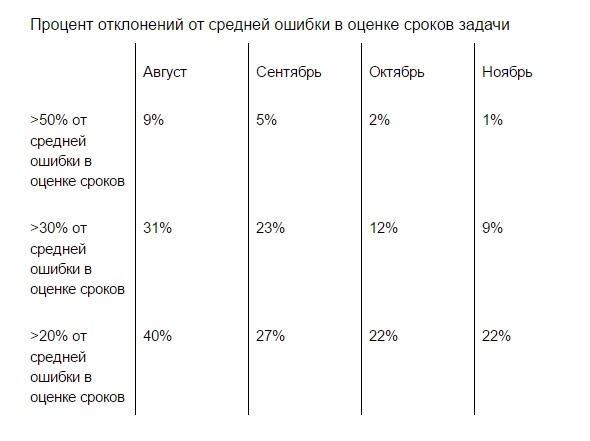

I calculated how accurately each programmer hits his own estimates. That gave me coefficients that helped me plan timelines more accurately. For example, if a programmer estimates a task at 10 hours but his estimates typically run over by about 40%, I can safely assume the task will take 14 hours. Profit.
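One way such a coefficient could be computed is sketched below. The exact formula is not given in the article, so this assumes the coefficient is the mean relative overrun across a developer's past tasks; the `HISTORY` figures and both function names are hypothetical.

```python
# Hypothetical history for one developer: (estimated hours, actual hours).
HISTORY = [(10, 14), (5, 7), (8, 12), (20, 26)]


def overrun_rate(history):
    """Mean relative overrun: (actual - estimate) / estimate, averaged over tasks."""
    rates = [(actual - estimate) / estimate for estimate, actual in history]
    return sum(rates) / len(rates)


def corrected_estimate(estimate, rate):
    """Scale a raw estimate by the developer's average overrun."""
    return estimate * (1 + rate)


rate = overrun_rate(HISTORY)            # about 0.4 for the sample data
planned = corrected_estimate(10, rate)  # about 14 hours
```

With this sample history the overrun comes out at roughly 40%, so a 10-hour estimate is planned as about 14 hours, matching the example above.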

The difficulty is that estimation errors are not systematic. A programmer may complete some tasks four times slower than estimated and others twice as fast, which greatly complicates calculations and planning.

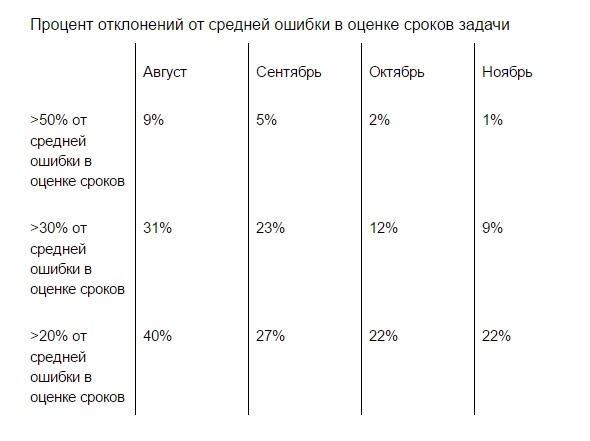

So for each developer I tracked not only the average deviation from the estimate but also how far individual tasks deviated from that average, as a percentage. If this was under 20%, I considered it a good result: an error of that size did not get in the way of planning. But there were also deviations of 30% and 50%, and those seriously interfered with work on the release.

For example, a developer estimates a task at 10 days and his average overrun is 40%, so the realistic deadline is 14 days. But if the deviation from that average is 50%, that adds another 7 days. In total the task takes 21 days instead of the promised 10 and the expected 14. It is impossible to work with delays like that, so I tried to find their causes and fix them.
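The arithmetic in this example can be written out as a short sketch. It assumes, as the numbers above imply, that the deviation from the average is applied on top of the already corrected estimate; the function names are hypothetical.

```python
def expected_days(estimate, mean_overrun):
    """Estimate corrected by the developer's average overrun."""
    return estimate * (1 + mean_overrun)


def worst_case_days(estimate, mean_overrun, spread):
    """Expected duration stretched further by the deviation from the average."""
    return expected_days(estimate, mean_overrun) * (1 + spread)


expected = expected_days(10, 0.40)        # promised 10 days, expected about 14
worst = worst_case_days(10, 0.40, 0.50)   # another 7 days on top: about 21
```

The gap between `expected` and `worst` is the part that cannot be planned around, which is why a spread under 20% was acceptable and one of 50% was not.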

In Jira I analyzed the overruns and their causes, and concluded that the largest deviations from the plan came from tasks that I rejected at acceptance and tasks that the tester sent back several times after testing.

First I dealt with the tasks returned from acceptance. The main reason for returns was that the tester had not analyzed the task well enough. During testing he improvised and sometimes did not understand how a feature was supposed to work. We agreed that the tester would write a Definition of Done (DoD) for each task: not detailed test cases, but a rough description of what he would check and how.

For my part, I reviewed these DoDs and, if the tester had misunderstood the task or planned too little testing, pointed it out right away. As a result, returns from acceptance dropped to almost zero, and estimating deadlines became easier.

As for tasks frequently returned from testing to development, it turned out that almost a third of the cases were caused by deployment errors: for example, required scripts had not been run. The feature did not work at all, which could have been discovered without the tester's help.

We agreed that once a task is deployed to the server, the programmer checks the simple scenarios himself to make sure everything works, and only then hands the task over for testing. This takes no more than five minutes, and the number of returns from testing dropped by more than 30%.

For complex tasks, we decided to hold calls with the developer, the team lead, and the tester. I walked through the documentation and explained in detail what I wanted implemented and how. If the development team spotted flaws at this stage, we updated the documentation on the spot. In these cases at least two people took part in the estimate: the developer and the team lead.

Over four months, the average planning error decreased by a factor of 1.4. The share of tasks deviating from the average error by more than 50% fell from 9% to 1%.

Collecting and analyzing statistics helped me spot weak points in time and correct mistakes in managing the team. Some of these problems could arise with in-house developers too; others are specific to outsourcing. I hope these principles of working with internal statistics will be useful to you.

Source: https://habr.com/ru/post/318032/
