MMO on WebRTC

Every programmer who has written their own MMORPG very quickly runs into one common problem. The most common network topology in client-server games is the star, where the central node is the server and the leaves are the clients.


This layout has undeniable advantages: the game state of all clients is synchronized on the server, it is easy to implement, and latency for the user is almost constant. Where there are pluses there are usually minuses too: here they are limited server bandwidth and rather high latency when the client is far from your server. How to deal with them is described in detail on the 0fps.net blog, and there is a fairly effective way to solve these problems while staying in your favorite "star": buy more servers. But what if

Do not take on building an MMORPG

As you know, every joke has a grain of joke in it: if you do not have the resources to support a game-dev team, or you are working on a game alone, it is better to reconsider your goals. When I started making the network shooter Auxilium (the header image is from it), it was clear that I had to solve the scaling problem somehow. The obvious solution, used in many network games, was to introduce game sessions, or "battles": you can simply distribute these battles across physical machines and keep up with the load. But that would no longer be a classic MMORPG with an open world. Three mistakes followed, after which development of the game stopped:

The first mistake was believing that good servers could be found at a price that was reasonable (for me). Initial performance measurements of the game on Node.js showed that one process can handle a game for 20-25 people. Beyond that, the event loop starts to stall and unrecoverable lag appears because the physics calculations on the server fall behind. For 350 rubles a month I found a VPS with 250 MB of memory, so I could not run more than two game processes (a process took ~100 MB at startup; yes, it could have been tuned and trimmed). That makes 50 players. Not very "Massive" at all.
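The arithmetic behind that 50-player ceiling can be written down as a small sketch. The function and parameter names are illustrative and not taken from the game's code, and the estimate ignores the memory used by the operating system itself.

```javascript
// Rough capacity estimate using the figures quoted above.
// Names are hypothetical; they do not come from the Auxilium code base.
function estimateCapacity({ memoryMb, memoryPerProcessMb, playersPerProcess }) {
  // Only whole processes fit into the available memory.
  const processes = Math.floor(memoryMb / memoryPerProcessMb);
  return { processes, players: processes * playersPerProcess };
}

const vps = estimateCapacity({
  memoryMb: 250,
  memoryPerProcessMb: 100,
  playersPerProcess: 25, // upper end of the measured 20-25 range
});
console.log(vps); // { processes: 2, players: 50 }
```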

The second mistake was developing the game not alone but with a team (nine women can deliver one baby in a month, right?). There is a good article about how to make an MMORPG: "We started as three, but by the end of the first year of development I was left alone." That is exactly what happened.

And the third mistake was believing that TCP could be tolerated. We took WebSockets as the basis and added binary compression of JSON on top, hoping that would be enough. As many know, TCP guarantees delivery of packets in the order in which they were sent, so if one packet is lost, the game freezes for the player until that packet is retransmitted. Worst of all, once it arrives, all the packets queued up behind it are delivered in one burst. Again, you can plan for a delay reserve (add latency artificially), but piling finely tuned crutches on top of crutches (working with TCP instead of UDP) is a sure sign of the wrong path.
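The freeze and the burst that follows can be illustrated with a toy model. This is only a sketch: the timings are made up for illustration, and the model deliberately ignores everything about TCP except in-order delivery.

```javascript
// Given the time each packet physically arrives, return the time the
// application actually receives it. With ordered delivery a packet cannot
// be handed over before all earlier packets have been delivered.
function simulateDelivery(arrivalTimes, ordered) {
  if (!ordered) return arrivalTimes.slice();
  const delivered = [];
  let previous = 0;
  for (const arrival of arrivalTimes) {
    previous = Math.max(previous, arrival);
    delivered.push(previous);
  }
  return delivered;
}

// Hypothetical numbers: five packets sent every 50 ms, 40 ms latency,
// packet #1 is lost and its retransmission arrives 300 ms late.
const sendTimes = [0, 50, 100, 150, 200];
const arrivals = sendTimes.map((t, i) => t + 40 + (i === 1 ? 300 : 0));

console.log(simulateDelivery(arrivals, true));  // [ 40, 390, 390, 390, 390 ]
console.log(simulateDelivery(arrivals, false)); // [ 40, 390, 140, 190, 240 ]
```

In the ordered case packets 2-4 are held back until 390 ms and then arrive all at once; in the unordered case only the lost packet is late.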

What we wanted and what we almost achieved (from left to right):

But enough about the past. What has changed, and where are the rakes I want to step on again?

WebRTC and SCTP

Rethinking my mistakes as a developer, I decided to look once more through the technologies that can be used to build an MMORPG in the browser. For me it is still a matter of principle to stay within the browser, since that makes it very easy to run experiments without installing anything (especially since Unreal Engine 4 is already preparing to ship in a browser-based form).

As it turned out, the world had not stood still during development, and WebRTC had become the new hot topic. Everyone started building Skype and Chatroulette killers, but what really caught my attention were the unreliable and unordered modes of the SCTP protocol it uses, which means that problem number three is solved! But not without limitations.

WebRTC requires a so-called signaling server, which is responsible for the initial connection of two browsers over SCTP by relaying connection details (including addresses obtained from STUN servers) between them. This is done to get through NAT; you can read more in the articles "How we did the service on WebRTC" and "WebRTC in the real world: STUN, TURN and signaling". My takeaway from the latter is that if you provide a WebRTC service for money, you are in hell (you need it to work from 100% of networks). Well, that is not our case.
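For reference, the unreliable and unordered mode is selected when a data channel is created. The sketch below uses the standard `createDataChannel` options `ordered` and `maxRetransmits`; the channel label "game" and the helper name `openGameChannel` are arbitrary choices for this example, and the signaling step that actually connects two browsers is left out.

```javascript
// UDP-like behavior: no ordering guarantees and no retransmissions.
const GAME_CHANNEL_OPTIONS = { ordered: false, maxRetransmits: 0 };

// `pc` is expected to be an RTCPeerConnection (available in browsers).
// The label "game" is a hypothetical name chosen for this sketch.
function openGameChannel(pc) {
  return pc.createDataChannel("game", GAME_CHANNEL_OPTIONS);
}
```

In a browser this would be called as `openGameChannel(new RTCPeerConnection())`; the channel only opens after the offer, answer and ICE candidates have been exchanged through the signaling server.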

Now the architecture of our client-server game can look like this:

As you can see, all the heavy interaction moves from the server to the clients. The approach is tempting, but it raises some rather non-trivial questions:

- Is it optimal to use a fully connected graph?

- How do we synchronize game state across the whole network?

- What do we do about dishonest players?

While the answers to the last two questions depend in part on the game model you are developing, the first question can be answered for the general case. Still, it is interesting to read about huntercoin, a project that uses a Bitcoin analog to synchronize the game, although it is not suitable for dynamic models and requires full synchronization of all transactions (if I understand correctly how Bitcoin works). I will leave the rest of the discussion of these points for the next article.

To test the complete-graph hypothesis (and to stress-test the server), we need a small demo game that can measure latency between clients. If it turns out that a direct connection is always faster than sending a message through another client, then a complete graph it is (which I doubt).
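Once latencies are measured, the hypothesis comes down to a simple check: for each pair of clients, is there a third client through which the path is shorter? A minimal sketch, assuming the measurements are collected into a symmetric matrix (the matrix layout, the function name and the sample numbers are all made up for illustration, and relay processing time is ignored):

```javascript
// latency[i][j] is the measured delay in ms between clients i and j.
// Returns, for every pair where a relay beats the direct link, the best relay.
function findFasterRelays(latency) {
  const n = latency.length;
  const result = [];
  for (let i = 0; i < n; i++) {
    for (let j = i + 1; j < n; j++) {
      let best = null;
      for (let k = 0; k < n; k++) {
        if (k === i || k === j) continue;
        const viaMs = latency[i][k] + latency[k][j];
        if (viaMs < latency[i][j] && (best === null || viaMs < best.viaMs)) {
          best = { from: i, to: j, relay: k, directMs: latency[i][j], viaMs };
        }
      }
      if (best) result.push(best);
    }
  }
  return result;
}

// Made-up measurements for three clients.
const sample = [
  [0, 120, 30],
  [120, 0, 40],
  [30, 40, 0],
];
console.log(findFasterRelays(sample));
// [ { from: 0, to: 1, relay: 2, directMs: 120, viaMs: 70 } ]
```

An empty result would support the complete graph; any entry is a counterexample.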

MMO - Mouse Multiplayer Online

Demo :

Almost a year ago the world saw a crowdsourced music video. If you missed it, now is the time to stop and watch (it is worth it). The idea is simple: the movement of your cursor is recorded while the video plays and added to the ones already collected. The result is a very entertaining video sequence made of cursors (at first it seemed to me that the synchronization happened in real time, but no). Watching this clip again gave me the idea for a demo game: we will move cursors.

For the implementation we need something that makes it easier to work with the signaling server and to create connections between clients. A good option is Peer.js, which provides a ready-made signaling server (admittedly based on restify) and even hosts a public one.
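To give a feel for what Peer.js takes off your hands, here is a minimal sketch of cursor sharing on top of it. It is not the demo's actual code: the helper name `shareCursor`, the `{ x, y }` message shape and the way remote ids are obtained are assumptions made for this example. It relies only on the Peer.js calls `peer.on("connection")`, `peer.connect()`, `conn.on()`, `conn.send()` and the `conn.peer` property.

```javascript
// `peer` is expected to be a Peer.js `Peer` instance, e.g. `new Peer()` in a
// page that has loaded the library. The { x, y } message is hypothetical.
function shareCursor(peer, onRemoteCursor) {
  const connections = [];

  function track(conn) {
    // Only send to connections that have finished opening.
    conn.on("open", () => connections.push(conn));
    conn.on("data", (msg) => onRemoteCursor(conn.peer, msg));
  }

  // Incoming connections from other players.
  peer.on("connection", track);

  return {
    // Connect to a player whose id was learned elsewhere (assumed here).
    connectTo(remoteId) {
      track(peer.connect(remoteId, { reliable: false }));
    },
    // Send our cursor position to every open connection.
    broadcast(x, y) {
      for (const conn of connections) conn.send({ x, y });
    },
  };
}
```

A page would call `shareCursor(new Peer(), draw)` and then `broadcast(event.clientX, event.clientY)` from a `mousemove` handler, where `draw` is whatever renders a remote cursor.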

All of the client-side code fits into 100 lines of JavaScript, and the server part on Node.js is even shorter: 55 lines. I see no reason to include them in the article, since they are publicly available and fairly simple to write. The code is under the MIT license, and I hope it helps beginners build their own breakable toy.

What is the result?

After collecting small statistics from the game, I

Result

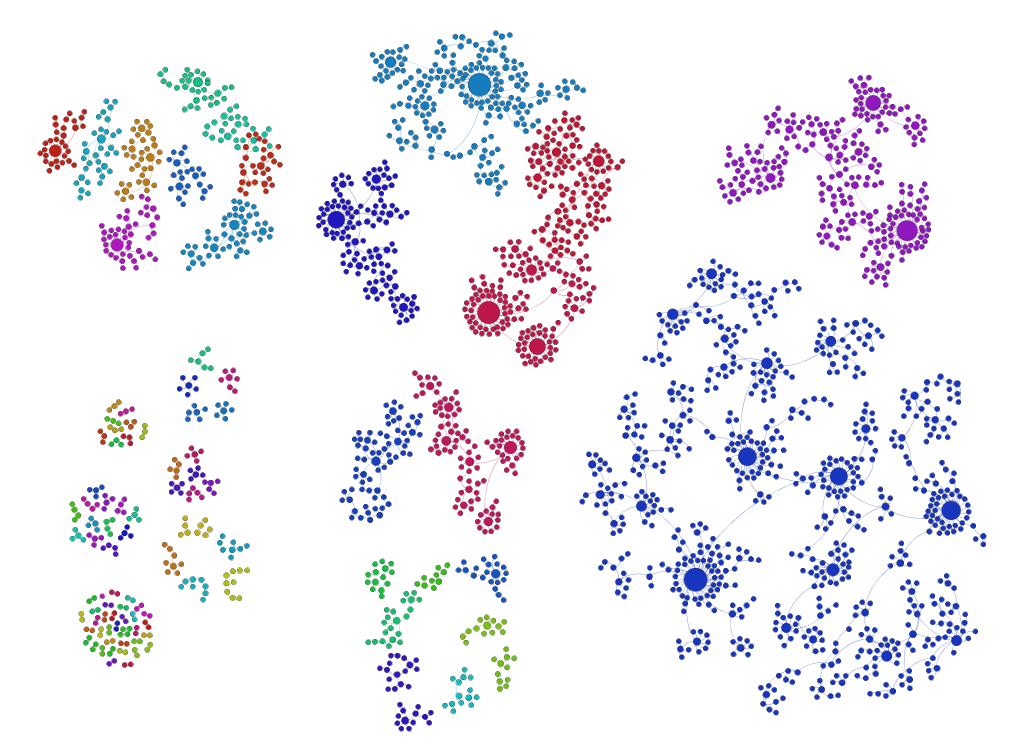

As you can see, something went wrong. We got a lot of peer-to-peer players (bottom left), as well as many connected components, some with a large number of participants. The largest component is at the bottom right, and it is obviously not a complete graph.
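The same conclusions can be drawn from the raw connection list without looking at the picture. A minimal sketch, assuming the connections are available as an array of id pairs (the input format and the sample data are hypothetical, and self-connections are not handled):

```javascript
// edges: array of [a, b] pairs of peer ids, treated as undirected links.
function connectedComponents(edges) {
  const adjacency = new Map();
  const link = (a, b) => {
    if (!adjacency.has(a)) adjacency.set(a, new Set());
    adjacency.get(a).add(b);
  };
  for (const [a, b] of edges) {
    link(a, b);
    link(b, a);
  }

  const seen = new Set();
  const components = [];
  for (const start of adjacency.keys()) {
    if (seen.has(start)) continue;
    // Depth-first walk that collects every node reachable from `start`.
    const nodes = [];
    const stack = [start];
    seen.add(start);
    while (stack.length > 0) {
      const node = stack.pop();
      nodes.push(node);
      for (const next of adjacency.get(node)) {
        if (!seen.has(next)) {
          seen.add(next);
          stack.push(next);
        }
      }
    }
    // A component is a complete graph when every node links to all the others.
    const complete = nodes.every((n) => adjacency.get(n).size === nodes.length - 1);
    components.push({ nodes, complete });
  }
  return components;
}

// Made-up data: a pair, a triangle, and a chain of three.
const sampleEdges = [
  ["a", "b"],
  ["c", "d"], ["d", "e"], ["c", "e"],
  ["f", "g"], ["g", "h"],
];
const components = connectedComponents(sampleEdges);
console.log(components.map((c) => [c.nodes.length, c.complete]));
// [ [ 2, true ], [ 3, true ], [ 3, false ] ]
```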

Why did this happen? There may be quirks in how Peer.js assigns identifiers, since it uses Math.random, although that is unlikely for 2300 nodes. Most likely this is down to how WebRTC itself works: clearly a client cannot afford an unlimited number of connections.
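How quickly the number of connections grows in a complete graph is easy to compute. The sketch below just evaluates n - 1 connections per client and n(n - 1)/2 links in total; the 2300 figure is used only as an upper bound, on the assumption (not stated above) that all nodes would be online at once.

```javascript
// Cost of a fully connected mesh of `players` clients.
function meshCost(players) {
  return {
    connectionsPerClient: players - 1,
    totalLinks: (players * (players - 1)) / 2,
  };
}

console.log(meshCost(10));   // { connectionsPerClient: 9, totalLinks: 45 }
console.log(meshCost(2300)); // { connectionsPerClient: 2299, totalLinks: 2643850 }
```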

During the experiment I noticed (maybe it was only me) that only 5-10 cursors were moving at the same time, which makes a complete graph unusable for synchronizing a large network. How to do it without broadcast is material for a separate article (if not a series of articles). Thank you all for participating!

Source: https://habr.com/ru/post/219155/
