Can I render realistic images without floating point numbers?

Introduction

“What happens if we replace floating-point numbers with rational numbers and try to render an image?”

I asked myself this question after thinking about the tweet of the researcher and computer graphics teacher Morgan McGwire. He talked about how much computer science students are surprised when they first learn that in order to store the usual floating-point numbers in modern computers, one has to make compromises. And these compromises make simple tasks difficult, for example, checking that a point belongs to a triangle. The problem, of course, is that checking for finding four points in one plane (coplanarity) with the help of a determinant or some vector multiplication (and in fact it’s the same thing) will never give a value exactly equal to zero, which is required these mathematical methods. Even if the real calculations on one plane were accurate, the same trade-offs with an accuracy of almost 1.0 would give the answer that the four points themselves are not coplanar.

This gave rise to the idea in me - if we assume that all the input data of the renderer (vertex coordinates, 3D transformations, etc.) would be specified as rational numbers, then all operations would be created, from beam creation, bypassing the accelerating structure to the intersection rays with triangles are only rational numbers? If that were the case, then we would be able to perform a coplanarity check for sure! You may be wondering why a 3D scene expressed in rational numbers should only give results in rational numbers ...

')

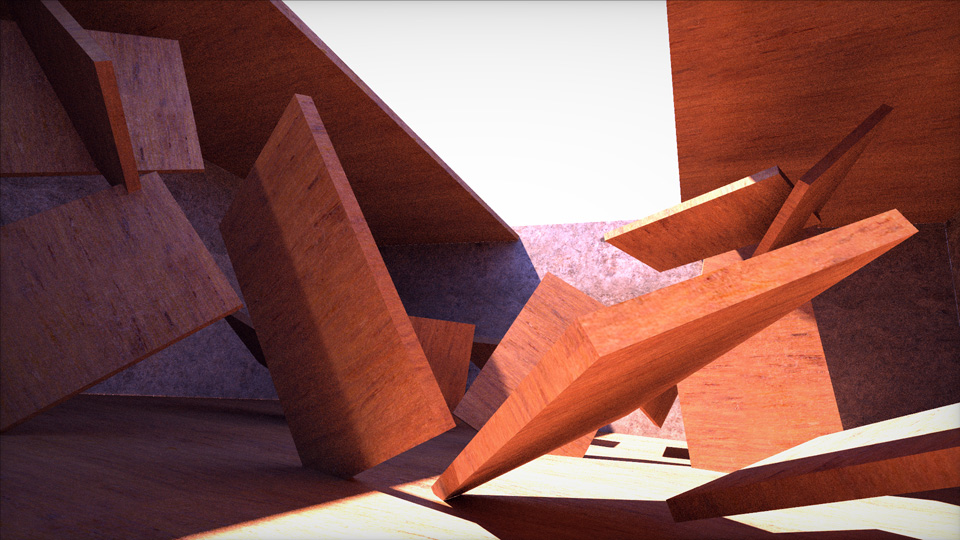

A simple scene, tracing the path in which performed a rational arithmetic. It uses a system of numbers "with a floating line fraction ", and not numbers with a floating comma .

First, a rational number is a number that can be expressed as the ratio of two integers, for example, 1/2 or 355/113. Secondly, “conventional rendering operations”, such as checking bounding box tests, checking for intersection of a ray with a triangle, ray reflections, etc., are based on vector and scalar products, as well as scalar division (this includes the transformations of coordinates and matrix inversion, quaternions, etc.), which in turn are based on four basic operations: addition, subtraction, multiplication and division. When adding, subtracting, multiplying and dividing rational numbers, we also get rational numbers. A mathematician would say that the set of rational numbers forms a field closed under four basic arithmetic operations. For us, this means that if you stick to extremely rational numbers, then you can actually move from the incoming data of the 3D scene to the fully rendered image, without leaving the world of rational numbers.

The exceptions to the rule “actions on rational numbers are rational numbers” are square roots and trigonometric / transcendental functions. As for the latter, I always say that if you had to perform trigonometric calculations in the geometric interior of your renderer, then most likely you are doing something wrong (and I showed how to fix the most standard cases ). As regards square roots, with the exception of conic sections (spheres, cylinders, etc.) and shading / DFOS / coloring, it is not necessary to normalize the rays and normals to the surfaces as often as is usually done. You certainly do not need to do this to create a beam, its passage, intersection, reflections, etc. Unfortunately, very often I see that programmers normalize values for no reason other than “well, I don’t know, I’m doing it to make sure”. In practice, in the part of the rendering where geometry tracing is performed, it is very rarely necessary to normalize the values, so I had the hope that you can trace the whole scene without leaving the world of rational numbers - this is what I would call rational rendering.

To put this into practice, I need to create a system of numbers based on rational numbers that a computer could use. Then on top of it, I could implement the usual path tracing algorithms, calculate images without losing accuracy, perform coplanarity checks that have accurate answers, and bring happiness to all students studying computer graphics.

This article is a story about two evenings investigating the realism of such an idea. I will talk about the many aspects that I have learned, about what I have invented, and about several surprises that I discovered in the process. The article is written in more or less chronological order of my work. In addition, it is written in my unusually informal and very unscientific style (which I am proud of). The image shown above is a kind of spoiler, but read the article to the end, because I will tell you about the good and the bad.

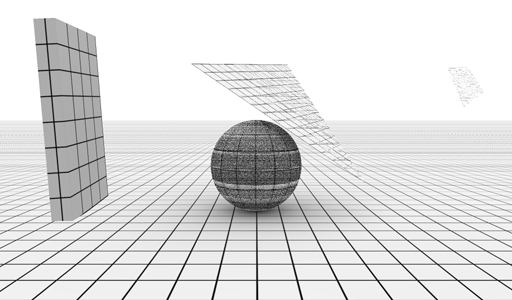

Training

The first thing I did was implement Shadertoy with a minimized tracer for an ultra- simple scene consisting of a plane, a sphere, a rectangular parallelepiped and a triangle - the building blocks of real-world renderers. Then I copied the code into the C ++ file and, after making a couple of minor changes, I compiled it using my piLibs framework. So I got to compare the traced image rendered on the CPU using ordinary IEEE754 floating-point numbers. I also removed all ray normalization from the trace code, because, as mentioned above, none of them are really needed. I recall that normalization requires a square root, and rational numbers are not saved when it is used (the square root of a rational number is not a rational number). A little later, we will see that, of course, you can still use square roots, I just wanted to make the code as mathematically pure as possible to see how far I can go with accurate arithmetic of rational numbers without rounding.

The last preparatory step - I took all the vec3, mat4x4 and other basic classes of algebra / mathematics, and then changed them so that they use “rational” instead of “float”. Since my rational structure overloads all standard operators (add, sub, mul, div, sign changes, comparisons, etc.), the replacement took place without problems. I quickly implemented the remaining normal operations (abs, sign, mod, fract, floor, sqrt, etc.), which theoretically were enough to produce beautiful, rational renders.

Test 1 - a naive solution

But let's see what this first implementation was. At first I always try the simplest, and then I look at the results. And the simplest way to implement rational values was to use two integers. As can be understood from the title of the section, this will not be my final decision, but for the first attempt it was a reasonable decision. So, each number x should have been represented as the numerator N and the denominator D , forming the value N / D. The value of x is approximated by the best possible N / D pair (within a given bit depth), which is closest to the true value of x . I decided that both numbers must be positive, and the number sign must be stored in a separate bit in order to simplify the work and get rid of ambiguities, although this is not very important. At this stage, both numerators and denominators were unsigned. But even with the separation of the sign N / D had a lot of redundancy: for example, 1/4 and 7/28 denote the same number, but have completely different bit representations. We will touch on this later, but for now let's not focus and see what the four basic arithmetic operations look like in this rational form.

First, note that subtracting a - b is simply the addition of a and the value opposite to b , i.e., a + ( -b ), where -b can be calculated by simply replacing the b sign. Similarly, the division of a / b is the same as the multiplication of a and the inverse of b . Or, in other words, a / b = a · (1 / b ), where (1 / b ) can be calculated by simply changing the places of the numerator b n and the denominator b d of the number b . So, here's the first interesting property of rational arithmetic - division and multiplication have the same costs, so unlike the usual floating-point rendering, in which divisions are usually avoided, postponed or hidden under slower requests for receiving textures, you don’t need to be afraid of .

Let us proceed to addition with multiplication: we know that it is trivial to calculate the opposite and inverse values, therefore we get:

The preservation of the sign during multiplication is trivial, it is just xor, because two positive values give a positive result, like two negative values. Preserving the sign for addition is a more complicated process and for a quick solution I implemented it through three branches (addition is trivial, if the signs for a and b coincide, but when they do not match, then you need to choose a smaller number and subtract it from the larger one - in the article I don’t I will describe such small details in more detail, but I’ll just post the source code somewhere).

Also I’ll skip the implementation of fract () and floor (); If you decide to try to implement them yourself, you will see their simplicity and beauty. Attention should also be paid to comparison operators. By taking care of the signs and accepting that a and b are positive, we get

It is important to note here that even for comparison, we need a couple of multiplication operations, which can lead to a transition to the next word size and will be important slightly lower.

Finally, we will look at the square roots in a separate section, knowing that for the most part we don’t need them (with the exception of the scope from this first test).

This was enough to launch the first render and trace the test scene (plane + sphere + triangle + rectangular parallelepiped) to see what happens. I generously used 65-bit rational numbers for this first test, which in fact represents a large amount of data (comparable to the “double” data type): 32 bits are taken up by the numerator, 32 bits are the denominator, and one more bit is the sign. The first is an image obtained with this naive approach, the second is an image made using floating-point numbers (reference):

"Naive" 65-bit rational numbers

Floating point reference

The result was rather bad, the box and the triangle did not even appear on the render, and the sphere and the floor plane were too noisy. The problem, of course, was that every time my rational numbers performed any basic arithmetic operation at any of the algorithmic rendering stages, the numerator and denominator uncontrollably became more and more, because integer multiplication was used. Think about the following: if the units of measurement of our original world were meters, and we would have tied the original geometry (vertices and the camera) to millimeter accuracy, then only the original data occupied 16 bits volume for a rather small scene. At the same time, with standard HD screen resolution and 4X anti-aliasing, rational directions of the beam direction would easily require 12 bits. That is, at the first interaction of the beam and geometry, the simplest arithmetic operation using both input data sets would turn the result into numbers of 28-bit length — close enough to the 32-bit constraint that I asked myself in this first implementation. And this is before we have completed the very first vector or scalar product. By the time the dot-product was completed, the renderer would have already needed hundreds of bits long rational numbers to represent numbers. Of course, this is the worst case, but the average case would be close to that. Taking into account that I singled out the total 32-bit capacity as the numerator and denominator, it is easy to understand how quickly the values go beyond the boundaries in this test - it is not surprising that with the exception of the floor plane and part of the sphere, almost nothing is visible.

Test 2 - reduction by the greatest common factor.

Then I improved the system by using the property that I briefly mentioned above - different rational numbers can denote the same value. Indeed, 6/12 is the same as 1/2, but it uses far more bits than the last. Therefore, the idea was as follows: if after each basic arithmetic operation (or after it) I would remove all common dividers from the numerator and denominators, and bring the fraction to its simplest form, then perhaps I will be able to keep everything under control and continue operations longer with accurate arithmetic without loss of accuracy. Perhaps you will be able to do this long enough to get clean rendered images? I will make a small digression to show another example: 588/910 can be simplified to 42/65, because 14 is a divider and 588, and 910. But for storing 42/65, obviously, you need less bits than 588/910. Finding the largest possible number that simultaneously divides two other numbers can be done using the greatest common divider algorithm (Great Common Divisor, GCD), which you can find effective implementations anywhere (I personally copied it straight from Wikipedia and sped up a bit, performing the scanning step bits using internal x64 operations). So, armed with the NOD algorithm, my “rational” class should constantly simplify fractions generated during the rendering process. This could be done in two ways:

The first is to convert the intermediate result of the addition and multiplication operators to the next type of bit data (in my current naive solution, it is uin64_t), search for GCD in this more voluminous data type, and then reduce the result to the original bit length (32). The second way is to analyze how a n , a d , b n and b d are combined with each other in both arithmetic operators and to extract common divisors from them before performing multiplication. The second approach, in principle, eliminated the need to use large bit lengths. Knowing that I might have to use them anyway, I decided to choose the first method, because it is easier to implement and he allowed me to speed up my work (the evening flies by very quickly). Having done all this, let's see which render I can create now:

65-bit rational numbers with an abbreviation for GCD

Floating point reference

Much better! So far away from the ideal, of course, but it looks promising. I made a box and a triangle appear, and the sphere now seems much more voluminous. However, a funny artifact appeared in the upper right corner, and rational numbers for many pixels still go beyond the boundaries, which leads to a lot of points in the image. However, it is worth noting that for some (many) pixels I began to get accurate and perfect results! That is, the tracer found mathematically exact intersections of points and distances, which was the primary reason for trying to use rational numbers.

Before proceeding to the next step in the process of proving the applicability of rational numbers, I want to briefly stop and share my findings regarding the GCD and the reduction of rational numbers.

The first discovery is associated with the bit volume of rational numbers. Even though I still cannot render beautiful images and this is more important than worrying about optimizing data volumes, and although this early implementation still used a large number of bits (1 + 32 + 32), I was already thinking about the previously mentioned waste bits in the form of excess fractions. In particular, after adding a stage with GCD, combinations of bits like 2/4 are no longer applicable, because they are automatically reduced to 1/2 before writing to any register or variable. That is, in a sense, of all 2 64 combinations of bits that could be the numerator and denominator, many remained untapped. And so it is impossible to spend bits in vain. Or can it? How much space do I actually lose? I made a small digression to explore this question.

Retreat - about mutually simple numbers

The illustrations below show the use of bits for rational numbers in 5/5 bits and 7/7 bits. The horizontal and vertical axes of the graphs represent the values of the numerator and denominator of all possible rational numbers that have numerators and denominators of up to 5 bits (31) and 7 bits (127). Black pixels are unused combinations, and white pixels are used fractions. For example, the entire diagonal is black, with the exception of the 1/1 pixel, because all fractions of the form n / n are reduced to 1/1.

Using bits for 5/5 rational

Using bits for 7/7 rational

If you count the pixels, as I did, then you can quickly understand that the proportion of useful pixels with an increase in the number of bits tends to 60.8%. A small online study showed me that this ratio turns out to be exactly 6 / π 2 , because it is also the probability of being mutually simple (not having common divisors) for two random numbers. You may ask, where did pi come from? It turns out that “six on pi” in the square ”is a value equal to one divided by the Riemann zeta function calculated at the point 2, 1 / (2). This should not surprise us, because the Riemann zeta function often comes up in problems involving simple and coprime numbers.

Anyway, it seems that in my rational representation I wasted about 40% of the bit combinations. And although this seems like a large number, I decided to look at it as if it were actually less than a bit ... so I could not be particularly upset. Taking this into account, I decided to move on, using other, completely different approaches, instead of trying to locally optimize this single problem. However, I briefly learned about the Stern-Brocot and Calkin-Wilf trees, which could allow me to fully use all the available bits, but the interval of values obtained with their help turns out to be very small, so I quickly abandoned this idea and moved on. I think at this point I must express my appreciation to Wikipedia as a constant source of my knowledge.

Let's return to the analysis of what we have received: I can render images with distortions, but I depend very much on the distribution of prime numbers in the calculations. It depends on these prime numbers whether the GCD algorithm can simplify the expression — as soon as any simple or multiple number falls into any number of the renderer (vectors, scalars, matrices), it “pollutes” all the numbers following it in further arithmetic manipulations, and remains in them forever. Therefore, gradually everything is guaranteed to begin to grow, it is only a matter of time. Besides the fact that it is inevitable, it is also necessary, because it is precisely mutually simple divisors that carry information about the meaning of a number. But at the same time, large prime numbers break everything very quickly. So there is a conflict.

The last thing to notice is that I still used twice as many bits as a standard floating point number, so there are no real advantages here. Of course, I tried to use 16/16-bit rational numbers, which would be a more honest comparison with the true requirements for floating-point arithmetic, but with an accuracy of 16/16, the system I wrote with numerator + denominator + NOD created completely unintelligible images.

Test 3 - normalization of rational numbers

So, at this moment I needed something really significant. It seemed that in order to prevent numbers from going out of line, one had to start cutting numbers and losing accuracy. My whole experiment began with the idea of researching accurate rendering, but by this time I felt ready to give up this idea and continue to explore other areas, if only for fun, and see what it will lead me to (the initial idea that stimulates the research process - this is exactly the idea to start the process, and after it you often end up in a completely unexpected place. Or, as John Cleese once said, bad ideas can lead to good ideas, the process of creativity is not necessarily always edovatelnostyu or logically true progression of steps).

Anyway, I decided to check what would happen to the renderers if I somehow protected the numerator and denominator from overflow. The simplest thing would be to shift both the numerator and the denominator, if necessary, by a sufficient number of bits to the right, until they again find themselves in the bit space allocated to them. In essence, this means an integer division of both the numerator and the denominator by one quantity, which means that the value of the number remains approximately unchanged. And here I deviated from the original goal of the experiment.

In my first implementation, I looked at the number of bits needed for the numerator and denominator, took the maximum for both, and shifted both by that number of bits (performing rounding to the nearest whole number). When this was implemented in addition and multiplication operators, everything began to look quite acceptable:

65-bit rational numbers with reduction on GCD and normalization

Floating point reference

Since everything looked pretty good, at this stage I started to solve the problem of a large amount of bits used in the current implementation. I tried using 32/32 (65 bits) 16/16 instead (33 bits), and the images were surprisingly good! I still saw that there were small holes in some edges of the sphere, and there were small tears in the figure of the texture of the triangle. But this is not bad for values close enough to floating point numbers. It gave me the energy to learn new ideas.

Test 4 - floating fraction

At this stage, I decided to distract myself and stop looking for excuses - if I want to find something interesting for rendering in rational numbers, then they should take 32 bits and no more. It is better to find a good idea or stop, and finish at that (this was at the beginning of the second evening of the experiments).

At first, I thought that it was worth adhering to the ideas of the GCD and normalization, but at the same time, it was smarter to approach the storage and use of bits. The first thing that occurred to me was that even though the numerator and denominator may become large, this often does not happen. Or at least it does not happen at the same time. Therefore, when the numerator is small, you can allow the denominator to be large, and vice versa. Unused bits of one of the two integer values can be used to represent larger values. Then I realized that similarly to this, a floating-point number is essentially a fixed-point format, where the “fixed” comma is made variable. I can take my rational numbers and also make the bit position of the fraction fraction variable. That is, not to set a fraction hard as 16/16, but to allow the same 32-bit variable to sometimes be 16/16, and sometimes 5/27 or 13/19, if necessary.

It was worth checking out. Anyway, a few lines of packing / unpacking code in internal setters and getters can be written quickly. The most logical scheme for me was 1 | 5 | 26, that is:

1 bit: sign

5 bits: slash position position (B)

26 bits: combined numerator and denominator data; the numerator is the top 26-B bits, the denominator is the bottom B bits,

where the fraction bar (B) determines the size of the denominator. For example, the number 7/3 will be written as

7/3 = 0 00010 000000000000000000000111 11,

where a 0 indicates a positive value, a 2 slash denotes a denominator (number 3), to represent which 2 bits are needed, and the rest of the bits go to the numerator.

Those readers who have worked with the IEEE754 standard may find this observation familiar: the binary representation of the denominator always starts with “1”, because the number of a fraction line always truncates it to the shortest presentation. That is, the first bit of the denominator is not necessary to store. In this case, the number “3” can be represented only by the binary value “1” and the value of the slash feature “1”:

7/3 = 0 00001 00000000000000000000111 1

This trick not only saved me one precious bit, but also has one excellent side effect: when the value of the fraction line is zero, this naturally means at the same time that the denominator is 1 and that no space is needed to store it. This means that my rational representation of numbers suddenly turned out to be fully compatible with ordinary integer representation and arithmetic, until the values of the numbers rise above 2 26, that is, to a sufficiently large threshold. What a wonderful surprise! That is, theoretically, I can use exactly the same data type, “rational”, to perform standard rendering and shading operations, but also to perform all the logic and tasks of the command flow in the path tracer — I no longer need to use two data types, as it happens in most renderers ("int" and "float") and perform conversions to one and the other side! However, time was pressing me, so I did not change all the indexes of the cycles from “int” to “rational”. The evening was coming to an end, but I still had to check out a lot of things to improve the quality of renders.

Having created the implementation, I was able to verify it:

32-bit rational numbers with a floating slash (1 | 5 | 26)

32-bit floating- point benchmark.

Oh, oh, not bad! I still have artifacts in the realm, which for the time being I will consider as the fault of my poor realization of the square root, but the parallelepiped and the triangle have become really clean. The number of accurately resolved image pixels also increased. I think that due to the fact that before the overflow, more numbers appear in the denominator or numerator, I increased the likelihood that the GCD will find common dividers and perform the reduction. That is, the floating fraction feature not only increased the interval of represented numbers and postponed the moment of normalization (with loss of accuracy) caused by overflow, but also took the next step in improving quality due to the increased likelihood of reductions.

At this stage, I was ready to conduct a more serious test (but still experimental, until the system is ready for operation). I implemented a path tracer with a minimal set of functions (not necessarily physically accurate or even taking into account physics) and created a scene with several rectangular parallelepipeds and two light sources, the reference implementation of which on the GPU is here: https://www.shadertoy.com/view/Xd2fzR .

I again converted the scene into a C ++ framework, again deleted a few unnecessary ray normalizations and started rendering. Here is what I got:

32-bit rational floating feature fractions

32-bit floating- point reference

Wow, this is really good! Although clearly visible leakage of light in the corners, where the edges of the floor and ceiling connect. Look at them in an approximation:

They may be caused by a problem in my implementation of the intersection of the ray and the rectangular parallelepiped, which is only expressed in rational numbers; I would not be surprised. Or maybe I rested on the boundaries of what rational numbers are capable of. Anyway, I'm quite pleased. In addition, I have other changes and experiments that I wanted to test in the remaining short time:

Some other experiments

64 bit exact arithmetic

The idea of exact arithmetic cannot be realized neither in naive 64-bit rational numbers, nor in 32-bit (1 | 5 | 26) rational numbers with a floating fraction line. Will 64-bit floating point numbers work?

I quickly implemented rational numbers 1 | 6 | 57 (although I had to study the new x64 internal mechanisms for the bit shift). These 57 bits of the numerator / denominator allowed to trace a much longer interval of distances. I actually managed to trace the scene with several triangles with all the exactarithmetic (not mentioned above scene with rectangular parallelepipeds and global illumination, but only a few triangles in front of the camera). And I was waiting for success! However, the coplanarity test, which I implemented to verify the correctness, required several scalar and vector product operations, which made the numbers begin to renormalize themselves. Therefore, even though I knew that the render was accurate, I could not “prove” this experimentally. What irony. In general, this means that 64 bits was enough for several triangles, but more complex scenes will still fall apart. However, it made me think about another question: is there any algorithm that can be used to test coplanarity, based not on absolute values, but on modular arithmetic? Maybe,In modular arithmetic, rational numbers should not “explode” in size? I did not have time to investigate all this, and I am not an expert in number theory.

Square roots

On the last (second) evening of research, I decided to briefly dwell on this topic and study the new information. I wanted to implement the best square root function possible for rational numbers. My current naive solution ( bad ) took the integer square root of the numerator (with the corresponding rounding), and then did the same with the denominator. Since the square root of a fraction is a fraction of the square roots of the numerator and denominator, in general, this approach returns decent results, not too different from the best answer. But he certainly does not return the best rational approximation of the square root of a rational number. Instead of one approach, it performs two.

I tried the following: in the end, here we are looking for two integers xand y , such that

Then we can rewrite this expression as finding the solution of the (non-trivial) following Diophantine equation (“Diophantine” means that we are only interested in integer solutions):

After a search on Wikipedia, I discovered that this particular equation is the so-called “Pell's modified equation” (Modified Pell's equation). There are algorithms that find the smallest x and y values to solve this equation. Unfortunately, my attention quickly shifted to another curious diophantine mathematics, and I did not begin to implement any of these algorithms.

More effective reduction

In the last minutes of the evening, I thought about exploring the idea of using multiple members that are combined in complex geometric operators, for example, in a vector product. Suppose the first component of a vector product was

assuming that sy = a / b, tz = c / d, ty = e / f, sz = g / h

This meant that now I can try to find common divisors, for example, between a and d, or e and h and use them for pre-cuts.

I had another idea: if at some stage the rendering speed becomes a problem, then you can completely disable the GCD search steps and apply only normalization. A quick check showed that in this case, the rendered images still remain acceptable and work well at a much higher speed. However, we, of course, get a smaller number of arithmetically accurate results.

As a compromise, you can refuse to implement the GCD procedure or scheme, and instead use something mathematically simple, hard-coded and effective in the code that determines the divisibility only by 2, 3 and 5. Even though we will not find an exhaustive number of dividers, In practice, this would lead to finding a large number of cuts. Consider - divisibility by 2 is three times more often than divisibility by 7, and 20 times more often than divisibility by 41!

Conclusion

After this experience, I began to believe that the existence of a representation of numbers based on rational numbers is quite possible, similar to what I call a “floating fraction”. A representation that is compatible with integers and that can perform many operations in exact arithmetic for many problems (provided that the input data is presented in a rational form). The 64-bit version (1 | 6 | 57) has a lot of potential, although the 32-bit version (1 | 5 | 26) already creates interesting renderings.

If it was not an experiment for two evenings, but something professional created in a studio or company, then in the future the following steps could be taken:

* Get a histogram of the number of exactly and not exactly traced pixels (in other words, the frequency of normalization)

* Try to implement hard-coded reduction on dividers 2, 3 and 5 and measure the percentage of lost exact pixels

* Show pixel difference between floating-point rendering and floating-line rendering

* Find creative ways to use unused values of the floating-line fraction character, for example , to designate Inf and NaN

* Implement NaN, Inf, underflow, overflow detection.

Overall, it was a fascinating study. In the process, I discovered several surprises, came up with one small invention and learned a lot about Pell's equation, square roots, GCD, x86_64 internal mechanisms, Riemann's zeta function, and some other aspects. I am very pleased with this!

Source: https://habr.com/ru/post/448558/

All Articles