Watchdog is watching you (hosting monitoring)

Attention! This article is for web programmers: it contains the source code and technical details.

Did you have such a thing - that a simple question plunged you into a stupor and deep thoughts? It happens to me every time when customers or friends ask me:

Did you have such a thing - that a simple question plunged you into a stupor and deep thoughts? It happens to me every time when customers or friends ask me:

And there is nothing to answer, because all (all!) Our measurements are not in favor of hosting companies, I will not even bring anyone specifically - you can check it yourself by following the recommendations in this article.

')

It would seem, where the dog is buried? After all, for us hosting is one of the most favorite corns, which are often attacked, because we are SEO-optimizers. We work - to bring our sites and clients to the tops, and poor and unstable hosting scares away the scanning robots of Yandex, Google and their ilk. However, hosting often falls during the day, especially during peak loads around 18:00 due to the influx of onlookers on the Internet in the evening.

Here is the simplest thing that happens - the site works fine during the day, while the support of the hoster is alert. And at night, sometimes regularly "lies" in a knockdown. For example, hoster scripts make backups and everything is overloaded. Customers sleep, buyers sleep, the site is asleep. Everyone is happy, except for search spiders.

The first thing we did was buy our expensive server and took it to the Caravan (thanks to the guys for the excellent quality of the collocation). But our server is not rubber, and we don’t provide hosting as a service. Therefore, let all our and we can not.

In order to somehow control the situation - I wrote a hosting stability monitor a couple of years ago. Now, when we already have a lot of other competitive advantages - we are ready to lay out the source code and work algorithm for Habra sociality, which is what this post is about.

The script is simple to ugliness, and the source is raw and uncombed, despite the help of our hax- friend Grox 'a. Please do not kick much for this.

So, archive with sorts (PHP + MySQL), 11 kb zip . Everything is open, without obfuscators.

1. We unpack the archive, download everything to our server, for example, my website / watchdog /

2. Create a database for the dog (preferably a separate one), and execute the sql.txt sql file (or upload it to PHPMyAdmin)

3. Register in the dbconnect.php file access to the database

4. Register in grox_config_users.php yourself as a user (login, password)

5. Go to my site / watchdog / and add the first - reference * site

6. We set kroons to pull the nip.php script once every 5 or 20 minutes, for example, * / 10 * * * * / usr / bin / wget -q "your site.ru / watchdog / nip.php "

7. Now we can add other sites to monitor

8. A week later, we collect the first cream.

9. ?????

10. PROFIT

Naturally, this way you can effectively monitor only other servers, but not your own.

* Reference (calibration) site is someone else's site with knowingly high-quality hosting (for example, they have many servers in different data centers). The one we use will not be specifically mentioned, otherwise we will inadvertently ask :) It is needed in order to eliminate the error due to the brakes of our checking server itself. How it works? We read further.

bones - "bones", the result of biting sites. The most important log file

bones_back - where without backups. Here we transfer everything that is older than three months.

samples - synchronization of bites, which allows to compare delays with the calibration site.

watch_dogs is a list of sites that watchdogs “bite” to check.

Each entry to check hosting has a unique number. Sites are polled randomly. This guarantees the work of the script in the conditions of hanging up a single site. If the “calibration” site fails at the same time as the one being checked, we conclude that the non-verifiable website was buggy, but our server itself, and we reject this sample. Comparison of samples is done in the sample_id field in the bones database.

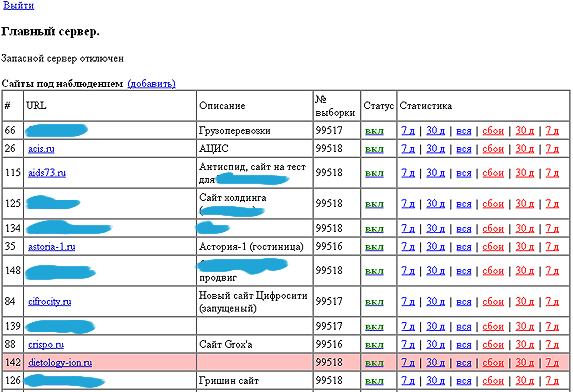

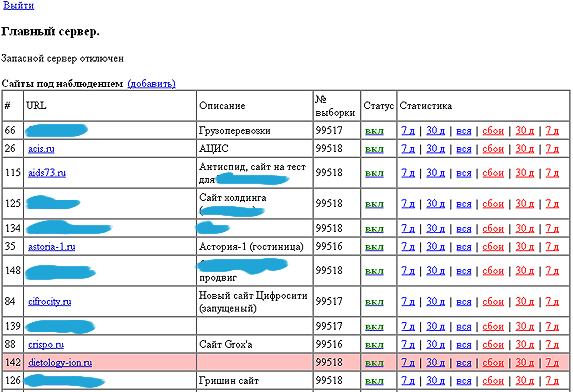

Here is the list of "dogs"

Here is an example of failures in the last 7 days.

The statistics are good enough, the morning delays are just the start of the backup.

1. Do not swear at register_globals, this script is for local access (not outside)

2. The user interface would be better

3. There is not enough information to put together a sample of the site and the termination site.

4. You can add tracking of the key phrase on the page that guarantees the normal operation of the database of the site being checked.

5. Failure notifications

6. A more “correct” script for clearing tags from the meaningful part of the site

7. Buttons for total suspension of the watchdog script in cases when you need to urgently reduce the load on the main server

There are similar services, even checking email and ftp availability, but often they are on Western servers, and are quite complex. We have successfully integrated our own script into the client’s office, and monthly internal reports, and in general we are satisfied with its simplicity and simplicity.

We give the script to everyone, if write a worthy sequel, we ask them to also share with everyone. It is possible through us (sending the source code to support@webprojects.ru).

If it will be useful to you - remember about us, do not remove the mention of the authors from the captions in the comments and footer.

PS The monitoring module is called a watch dog in honor of the remarkable quality of some AMR controllers. Initially, it was an interrupt with an internal counter, which monotonously decreases the value of the special register. As soon as the value reaches zero, a controller reload is initiated, and the program starts from scratch. In the main and reliable part of the program, the control register is reset to high values. If suddenly the software of your washing machine or TV hangs due to some kind of error, the watchdog will reboot itself.

Have you ever had a simple question throw you into a stupor and deep thought? It happens to me every time customers or friends ask:

- Andrei, what hosting would you recommend for our site?

And I have no answer, because all (all!) of our measurements speak against the hosting companies. I won't name anyone specifically: you can check for yourself by following the recommendations in this article.


So what's the catch? For us, hosting is one of our sorest spots, and it gets stepped on often, because we do SEO. Our job is to get our own and our clients' sites to the top of search results, and poor, unstable hosting scares away the crawlers of Yandex, Google and the like. Yet hosting often goes down during the day, especially at peak load around 18:00, when the evening crowd floods the Internet.

The simplest scenario looks like this: the site works fine during the day, while the hoster's support staff are alert. At night, though, it sometimes goes down, and sometimes regularly. For example, the hoster's scripts run backups and everything gets overloaded. Customers are asleep, buyers are asleep, the site is asleep. Everyone is happy except the search spiders.

The first thing we did was buy our own expensive server and put it in the Caravan data center (thanks to the team for their excellent colocation). But our server isn't made of rubber, and we don't sell hosting as a service, so we can't take everyone in.

To keep the situation under at least some control, I wrote a hosting stability monitor a couple of years ago. Now that we have plenty of other competitive advantages, we are ready to publish its source code and algorithm for the Habr community, which is what this post is about.

To the point

The script is simple to the point of ugliness, and the source is raw and unpolished, despite the help of our hacker friend Grox. Please don't kick us too hard for it.

So, here is the archive with the sources (PHP + MySQL), an 11 KB zip. Everything is open, with no obfuscation.

Quick start

1. Unpack the archive and upload everything to your server, for example to yoursite.ru/watchdog/

2. Create a database for the dog (preferably a separate one) and run the SQL file sql.txt (or import it via phpMyAdmin)

3. Enter the database credentials in dbconnect.php

4. Add yourself as a user (login, password) in grox_config_users.php

5. Open yoursite.ru/watchdog/ and add the first site, the reference* one

6. Set up cron to call the nip.php script every 5 to 20 minutes, for example: */10 * * * * /usr/bin/wget -q -O /dev/null "yoursite.ru/watchdog/nip.php"

7. Now you can add other sites to monitor

8. A week later, reap the first results.

9. ?????

10. PROFIT

Naturally, this approach can only monitor other servers effectively, not the one the script itself runs on.

* The reference (calibration) site is someone else's site whose hosting is known to be high quality (for example, one running on many servers in different data centers). We won't name the one we use, or its owners might ask us to stop :) It is needed to filter out errors caused by slowdowns of our own checking server. How does that work? Read on.
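In step 6, cron calls nip.php every few minutes. The PHP itself is not reproduced here. What follows is a minimal Python sketch, built on our own assumptions, of what a single "bite" could record: whether the page answered and how long it took. The names bite, fetch_url and Bite are hypothetical and do not come from the archive. The fetcher and the clock are injectable so the function can be tried without network access.

```python
import time
import urllib.request
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Bite:
    url: str
    ok: bool
    elapsed: float              # seconds spent on the request
    error: Optional[str] = None


def fetch_url(url: str, timeout: float) -> bytes:
    """Default fetcher: a plain HTTP GET with a timeout (standard library only)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def bite(url: str, timeout: float = 10.0,
         fetch: Callable[[str, float], bytes] = fetch_url,
         clock: Callable[[], float] = time.monotonic) -> Bite:
    """Request one page and record whether it answered and how long it took."""
    start = clock()
    try:
        body = fetch(url, timeout)
        ok = len(body) > 0      # assumption: an empty page counts as a failure
        return Bite(url, ok, clock() - start, None if ok else "empty body")
    except Exception as exc:    # timeouts, DNS errors, HTTP 4xx/5xx, ...
        return Bite(url, False, clock() - start, type(exc).__name__)
```

In real use you would simply call bite("http://example.com/") and store the result. The timeout matters: without it, one hanging host could stall the whole cron run.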

Database structure

bones: the "bones", i.e. the results of biting the sites. This is the most important log table.

bones_back: where would we be without backups? Records older than three months are moved here.

samples: synchronizes the bites, which makes it possible to compare delays against the calibration site.

watch_dogs: the list of sites the watchdogs "bite" to check them.
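For orientation, here is a guess at how the four tables might fit together. It is written as a small Python sketch against SQLite's in-memory database rather than MySQL. Only the table names and the sample_id field come from the article. Every other column (url, is_reference, ok, elapsed, bitten_at, started) is our assumption, not the actual contents of sql.txt. The hypothetical archive_old_bones helper shows one way to move rows older than three months (approximated as 90 days) into bones_back.

```python
import sqlite3
import time

SCHEMA = """
CREATE TABLE watch_dogs (                       -- sites to bite
    id           INTEGER PRIMARY KEY,
    url          TEXT NOT NULL,
    is_reference INTEGER NOT NULL DEFAULT 0     -- 1 for the calibration site
);
CREATE TABLE samples (                          -- one row per polling round
    id      INTEGER PRIMARY KEY,
    started INTEGER NOT NULL                    -- Unix time of the round
);
CREATE TABLE bones (                            -- result of each bite
    id        INTEGER PRIMARY KEY,
    dog_id    INTEGER NOT NULL REFERENCES watch_dogs(id),
    sample_id INTEGER NOT NULL REFERENCES samples(id),
    ok        INTEGER NOT NULL,
    elapsed   REAL,
    bitten_at INTEGER NOT NULL
);
-- archive with the same columns as bones
CREATE TABLE bones_back AS SELECT * FROM bones WHERE 0;
"""

THREE_MONTHS = 90 * 24 * 3600   # "older than three months", approximated as 90 days


def archive_old_bones(conn: sqlite3.Connection, now=None) -> int:
    """Copy old bites into bones_back, delete them from bones, return how many moved."""
    now = int(time.time()) if now is None else now
    cutoff = now - THREE_MONTHS
    with conn:  # one transaction: copy and delete succeed or fail together
        conn.execute("INSERT INTO bones_back SELECT * FROM bones WHERE bitten_at < ?",
                     (cutoff,))
        cur = conn.execute("DELETE FROM bones WHERE bitten_at < ?", (cutoff,))
    return cur.rowcount
```

Running the copy and the delete in one transaction means that a crash between the two statements cannot lose rows or archive them twice.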

How does it work?

Each hosting check entry has a unique number. Sites are polled in random order, so that a single hanging site cannot consistently hold up the checks of the same other sites, and the script keeps working. If the calibration site fails at the same time as the site being checked, we conclude that it was not the checked site that glitched but our own server, and we discard that sample. Samples are matched via the sample_id field in the bones table.
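The rejection rule just described can be sketched in a few lines of Python. This is our reconstruction, not the code from the archive, and BoneRow, polling_order and count_real_failures are hypothetical names. We group bites by sample_id. A failure of a checked site counts only if the reference site answered in the same sample. If the reference failed or is missing from the sample, the whole sample is dropped (our assumption for the missing case).

```python
import random
from collections import defaultdict
from typing import Iterable, NamedTuple


class BoneRow(NamedTuple):
    sample_id: int
    site: str
    ok: bool


def polling_order(sites, rng=random):
    """Return the sites shuffled, so a hanging site does not always delay the same neighbours."""
    order = list(sites)
    rng.shuffle(order)
    return order


def count_real_failures(rows: Iterable[BoneRow], reference: str) -> dict:
    """Count failures per checked site, ignoring samples where the reference site failed."""
    by_sample = defaultdict(dict)
    for r in rows:
        by_sample[r.sample_id][r.site] = r.ok
    failures = defaultdict(int)
    for sample in by_sample.values():
        if not sample.get(reference, False):
            continue  # reference down or absent: blame our own server, drop the sample
        for site, ok in sample.items():
            if site != reference and not ok:
                failures[site] += 1
    return dict(failures)
```

For example, if sample 2 shows both the reference and every checked site down, that round contributes nothing to any site's failure count.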

Here is the list of "dogs"

Here is an example of failures in the last 7 days.

The statistics look quite good; the morning delays are simply the hoster's backups kicking in.
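A morning cluster like this is the kind of pattern a simple hour-of-day histogram brings out. Below is a minimal sketch that assumes the timestamps of failed bites have already been read from bones as Python datetime objects. The function name failures_by_hour is ours.

```python
from collections import Counter
from datetime import datetime, timedelta


def failures_by_hour(failure_times, now: datetime, days: int = 7) -> Counter:
    """Histogram of failures by hour of day (0-23) over the last `days` days."""
    since = now - timedelta(days=days)
    return Counter(t.hour for t in failure_times if since <= t <= now)
```

Calling most_common(1) on the result gives the worst hour. If that hour recurs every night, it is a strong hint that a scheduled job such as a backup is to blame rather than random overload.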

What could be improved or added?

1. Don't swear at register_globals: this script is meant for local access only (not exposed to the outside)

2. The user interface could be better

3. A combined view that puts the data of a checked site and of the reference site into a single sample

4. Tracking of a key phrase on the page, to confirm that the database of the checked site is working properly

5. Failure notifications

6. A more "correct" routine for stripping tags to get the meaningful part of the page

7. A button to suspend the watchdog script completely when you urgently need to reduce the load on the main server
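Items 4 and 6 fit together. First strip the markup, then look for a phrase that only appears when the site's database is answering (say, a product name that is loaded from the DB). This is a minimal standard-library sketch; page_text and has_key_phrase are hypothetical names. Python's html.parser is tolerant but simple, so treat this as a rough text extractor, not a browser-grade one.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text content, skipping whatever is inside <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def page_text(html: str) -> str:
    """Visible text with whitespace collapsed (chunks between tags are joined by spaces)."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.parts).split())


def has_key_phrase(html: str, phrase: str) -> bool:
    """True if the phrase occurs in the page text, ignoring case and extra whitespace."""
    needle = " ".join(phrase.split()).casefold()
    return needle in page_text(html).casefold()
```

Joining text chunks with spaces keeps words from separate block elements apart. As a side effect, a word split by an inline tag (Cat<b>alog</b>) comes out as two words, so choose a key phrase that is not broken up by markup.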

There are similar services, some of which even check email and FTP availability, but they often run on Western servers and are quite complex. We have successfully integrated our own script into our client dashboard and our monthly internal reports, and overall we are happy with its simplicity.

We give the script to everyone. If someone writes a worthy sequel, we ask them to share it with everyone too. You can do that through us by sending the source code to support@webprojects.ru.

If you find it useful, remember us: please don't remove the mention of the authors from the code comments and the footer.

PS: The monitoring module is called a watchdog in honor of a remarkable feature of some AVR microcontrollers. Essentially, it is an interrupt with an internal counter that steadily decrements a special register. As soon as the value reaches zero, the controller is reset and the program starts from scratch. The main, reliably running part of the program keeps reloading that register with a high value. So if the firmware of your washing machine or TV hangs because of some bug, the watchdog will reboot it.
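The mechanism described in the PS can be modelled in a few lines. This is a toy Python sketch of the idea (a countdown that healthy code keeps reloading), not real microcontroller code, and the class and method names are ours. On real hardware a timer peripheral does the counting, and the "kick" is a dedicated instruction or register write.

```python
class WatchdogTimer:
    """Software model of a hardware watchdog: a counter that counts down on every tick."""

    def __init__(self, timeout_ticks: int):
        self.timeout = timeout_ticks
        self.counter = timeout_ticks
        self.resets = 0

    def kick(self):
        """Called by the healthy main loop: reload the counter with its high value."""
        self.counter = self.timeout

    def tick(self):
        """Called by the timer interrupt: count down, and 'reset the controller' at zero."""
        self.counter -= 1
        if self.counter <= 0:
            self.resets += 1
            self.counter = self.timeout  # the program starts over from scratch
```

As long as the main loop calls kick() more often than every timeout ticks, resets stays at zero. Once the loop hangs and stops kicking, the counter runs out and a reset follows.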

Source: https://habr.com/ru/post/97634/
