Hyper-V Architecture

This article looks at what Hyper-V is “from the inside” and how its architecture, rather than its marketing material, differs from VMware ESX. The series has three parts: this first part covers the hypervisor architecture itself, and the other two cover how Hyper-V works with storage devices and with the network.


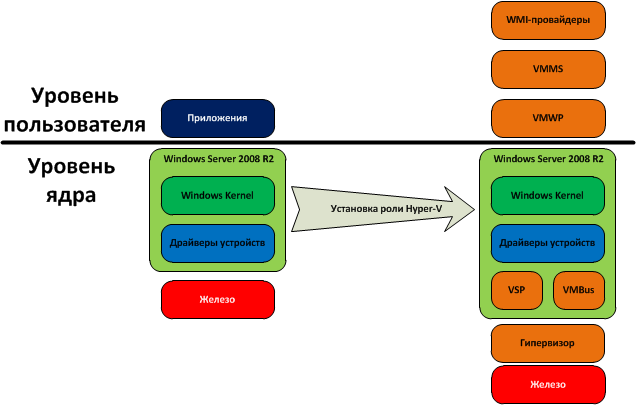

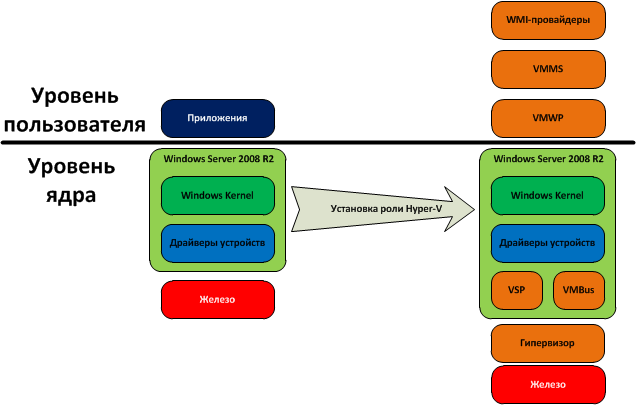


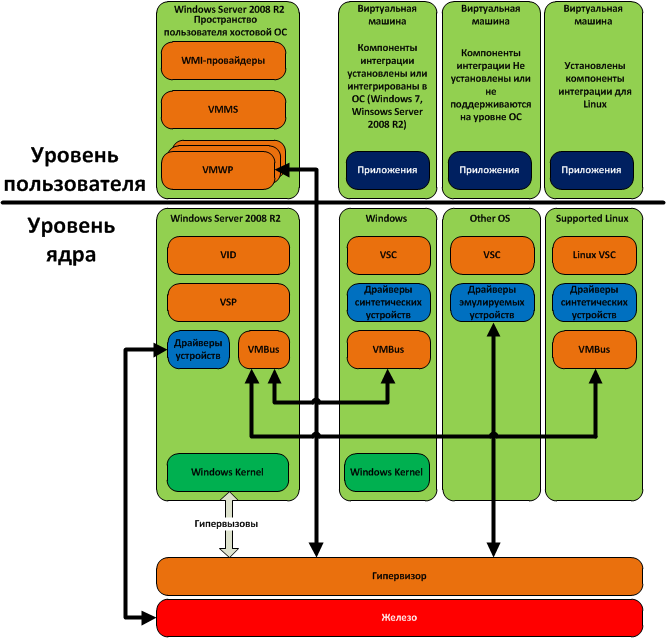

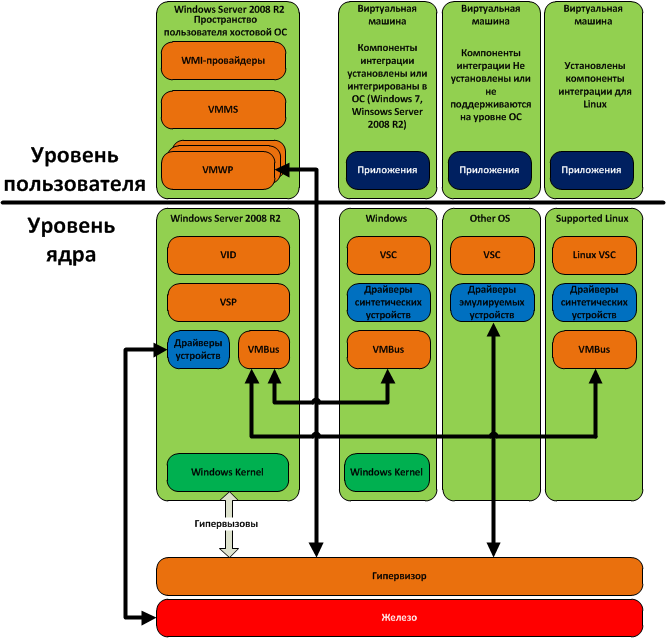



As we already know, Hyper-V is Microsoft's server virtualization platform, the successor to Virtual Server. Unlike Virtual Server, Hyper-V is not a standalone product but a component of Windows Server 2008. Let's see what happens to Windows Server 2008 after the Hyper-V role is installed.

As we can see, installing the Hyper-V role changes the system architecture dramatically: before, the OS worked with memory, the processor and the hardware directly; afterwards, the hypervisor controls the allocation of memory and processor time. Several new components also appear, which are discussed below.

After Hyper-V is installed, the system creates isolated environments in which guest OSes run, called partitions (not to be confused with hard disk partitions). From that point on, the host OS itself also runs in an isolated partition, called the parent partition. Partitions in which guest OSes run are called child partitions. The difference between the two is that only the parent partition can access the server hardware directly. All other partitions are completely isolated both from each other and from the server hardware. All interaction with hardware goes through drivers running inside the host OS, that is, through the parent partition. Each virtual machine has a set of virtual devices (network adapter, video adapter, disk controller, etc.) that communicate with the parent partition over the virtual machine bus (VMBus). In the parent partition, calls to the virtual devices are then passed on to the hardware drivers.

This is the fundamental difference between Hyper-V and VMware ESX: in ESX, device drivers are built into the hypervisor itself. Whether that is good or bad cannot be said unequivocally. On the one hand, it is certainly good: the ESX hypervisor can work on its own, without a host OS, and therefore has much lower memory and disk space requirements. On the other hand, the list of supported hardware is very limited, and it is impossible to install new drivers in ESX. So with VMware ESX, when a new driver appears that supports some special hardware feature, you may have to wait for a new version of the hypervisor. The opposite is also possible: replacing hardware with a newer model may require moving to a new version of the hypervisor. With Hyper-V, it is enough to install the appropriate drivers in the host OS.
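The partition layout described above can be sketched as a toy model. This is only an illustration of the request path, not a Hyper-V API: the `Partition` and `Host` classes and the `hardware_path` method are hypothetical names invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Partition:
    """Toy stand-in for a Hyper-V partition (hypothetical, not a real API)."""
    name: str
    is_parent: bool = False


@dataclass
class Host:
    """Hypothetical host with one parent partition and any number of children."""
    parent: Partition
    children: list = field(default_factory=list)

    def hardware_path(self, partition: Partition) -> list:
        """Return the chain of components a device request passes through."""
        if partition.is_parent:
            # Only the parent partition reaches hardware through its own drivers.
            return [partition.name, "physical device driver", "hardware"]
        # Child partitions never touch hardware: the request crosses VMBus
        # to the parent partition first.
        return [partition.name, "VMBus", self.parent.name,
                "physical device driver", "hardware"]


host = Host(parent=Partition("parent", is_parent=True))
guest = Partition("child-1")
host.children.append(guest)
print(" -> ".join(host.hardware_path(guest)))
```

The point of the model is that a new hardware driver only changes what sits behind "physical device driver" in the parent partition; nothing in the child partition or in the hypervisor has to change.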

Now consider a Hyper-V environment in which three virtual machines run alongside the host OS. The first runs Windows with integration components installed (Windows 7 and Windows Server 2008 R2 already include them). The second runs an OS that does not support integration components (or they are simply not installed). The third runs Linux, for which integration components exist (currently only SLES and RHEL are supported).

The following components run in user space in the parent partition:

- WMI providers - allow you to manage virtual machines both locally and remotely.

- Virtual Machine Management Service (VMMS) - manages the virtual machines themselves.

- Virtual Machine Worker Processes (VMWP) - the processes in which all actions of virtual machines are carried out: access to virtual processors, devices, etc.

The following run in kernel space in the parent partition:

- Virtualization Infrastructure Driver (VID) - manages partitions, as well as the processors and memory of virtual machines.

- Virtualization Service Provider (VSP) - provides the specific functions of virtual devices (the so-called "synthetic devices") over VMBus, when integration components are present on the guest OS side.

- Virtual machine bus (VMBus) - exchanges information between virtual devices inside child partitions and the parent partition.

- Device drivers - as mentioned above, only the parent partition has direct access to hardware devices, and therefore all drivers run inside it.

- Windows kernel - the host OS kernel itself.

Now consider the virtual machines themselves. As already mentioned, each virtual machine runs in its own isolated environment, called a partition. Inside each virtual machine there is a virtualization service client (VSC), which presents the virtual device interfaces to the guest OS and communicates with the parent partition over VMBus. If integration components are installed in the guest OS (all versions of Windows starting with XP and Server 2003, as well as some Linux distributions: SLES and RHEL), so-called synthetic devices can be used, that is, virtual devices with Hyper-V-specific functionality, for example a virtual network adapter with VMQ support or a virtual SCSI controller. All of these specific functions are provided by a virtualization service provider (VSP) running in the parent partition. The VSP also translates requests that arrive from virtual machines over VMBus and forwards them to the physical device drivers.

If the guest OS does not support integration components, or they have not been installed, emulated devices are used (Legacy Network Adapter, virtual IDE controller, etc.). In this case VMBus is not used, and the guest OS addresses the hypervisor directly through the drivers of the emulated devices. The hypervisor redirects these requests to device drivers in the parent partition through the virtual machine worker process (VMWP), which runs in user space rather than kernel space. This hurts performance considerably, so using emulated devices is strongly discouraged. They should be used only when the guest OS does not support integration components. If such OSes (*BSD, unsupported Linux distributions, etc.) are used heavily in your organization, consider other virtualization platforms, for example VMware ESX.
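The two I/O paths described above can be put side by side in a minimal sketch. It only restates the text as data: the function name `io_path` and the component labels are hypothetical, and nothing here calls a real Hyper-V interface.

```python
def io_path(integration_components: bool) -> list:
    """Return the components a guest I/O request passes through (toy model)."""
    if integration_components:
        # Synthetic device: the VSC in the guest talks to the VSP in the
        # parent partition over VMBus, staying in kernel space.
        return ["guest VSC", "VMBus", "VSP (parent, kernel space)",
                "physical device driver"]
    # Emulated device: VMBus is bypassed; the hypervisor hands the request
    # to the VM's worker process in user space of the parent partition.
    return ["guest emulated-device driver", "hypervisor",
            "VMWP (parent, user space)", "physical device driver"]


for installed in (True, False):
    label = "synthetic" if installed else "emulated"
    print(f"{label}: " + " -> ".join(io_path(installed)))
```

The detour through a user-space process on the emulated path is the part the text blames for the performance loss.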

Virtual machines have no other way to interact with each other, with the host OS or with the hardware. This was done for security reasons: virtual machines can communicate with the host OS or with each other only over network interfaces, and there is no device passthrough, neither USB nor PCI. This is again a double-edged sword: it improves security, but it prevents the guest OS from using specific devices such as HASP keys or modems. So if you need such devices in a guest OS (for example, it runs 1C software that requires a hardware HASP key to start), you have to resort to tricks such as forwarding USB over a TCP/IP network. This is usually done with third-party software.

Memory and processor time are allocated by the hypervisor itself. Unlike VMware ESX, the Hyper-V hypervisor contains no third-party device drivers or components, which keeps the hypervisor small and improves its reliability and security. Guest OSes, as already mentioned, have no direct access to physical processors and interrupts. The hypervisor acts as the scheduler, allocating processor time to each partition (including the parent) according to its settings; it receives interrupt requests from partitions and delivers them to the physical processors; and it maps the memory address spaces of partitions to physical addresses. The host OS manages partitions through the so-called hypercall interface. The management interface to the hypervisor is provided by WMI providers through the Virtualization Infrastructure Driver (VID).
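To make "allocating processor time according to the settings" concrete, here is a minimal sketch of proportional sharing. It assumes a single relative-weight setting per partition and full contention for the CPU; the function `cpu_shares` and the partition names are hypothetical, and the real scheduler is considerably more involved.

```python
def cpu_shares(weights: dict) -> dict:
    """Split CPU time between partitions in proportion to their weights.

    Assumption: every partition is competing for the CPU at once, so the
    weights alone decide the split.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one partition needs a positive weight")
    return {name: weight / total for name, weight in weights.items()}


# The parent partition is scheduled like any other partition.
shares = cpu_shares({"parent": 100, "vm-a": 100, "vm-b": 200})
for name, share in shares.items():
    print(f"{name}: {share:.0%}")
```

In this model the parent partition gets no special treatment from the scheduler, which matches the remark above that the hypervisor allocates time to every partition, the parent included.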

That concludes the first part. In the next article I will discuss how Hyper-V works with storage devices (hard drives, external storage systems, etc.).

Source: https://habr.com/ru/post/96822/
