Data storage in the Windows Azure cloud

Introduction

Among the many options for storing data, one new and interesting technology worth studying is cloud storage. At the moment only a handful of providers offer this kind of service: Amazon Web Services, Sun Cloud, and Windows Azure. Each of them provides its own data access interfaces; in this article I would like to focus on Windows Azure.

This cloud computing platform provides three types of storage services:

- The Blob service stores text and binary data in specially organized blob containers.

- The Queue service stores an unlimited number of messages (according to the documentation, each message can be at most 8 KB).

- The Table service stores structured data in tables, which are accessed via a REST API.

The last of these services, Table Storage, may at first glance look like an analogue of relational table storage; however, its concept differs in a number of important ways, which I will try to explain in the course of the article.

The storage contains tables (Tables). In Table Storage, a table is a collection of entities (Entities), which are similar to rows (tuples) in relational storage. An entity, in turn, is a set of properties (Properties), each of which is a pair of a name and a typed value; properties are similar to the fields (columns) of a relational table. Note that a table in Table Storage does not define the structure of the entities it stores: entities with different sets of properties can be stored in the same table.
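To make the idea of a schema-less table concrete, here is a minimal in-memory sketch in C#. It is not the Azure SDK: the EntityModel and SchemaLessTable classes are hypothetical and only model an entity as a PartitionKey, a RowKey, and a bag of named, typed property values, so that entities with different property sets can live in the same table.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory model, not the Azure SDK: an entity is a pair of keys
// plus a bag of named, typed property values.
public sealed class EntityModel
{
    public EntityModel(string partitionKey, string rowKey)
    {
        PartitionKey = partitionKey;
        RowKey = rowKey;
    }

    public string PartitionKey { get; }
    public string RowKey { get; }

    // User-defined properties; names are compared case-sensitively (ordinal).
    public Dictionary<string, object> Properties { get; } =
        new Dictionary<string, object>(StringComparer.Ordinal);
}

// A "table" that imposes no schema: any entity can be added, whatever its properties.
public sealed class SchemaLessTable
{
    private readonly List<EntityModel> _entities = new List<EntityModel>();

    public IReadOnlyList<EntityModel> Entities => _entities;

    public void Add(EntityModel entity) => _entities.Add(entity);

    // Union of the property names used by all entities in the table.
    public SortedSet<string> DistinctPropertyNames()
    {
        var names = new SortedSet<string>(StringComparer.Ordinal);
        foreach (EntityModel entity in _entities)
        {
            names.UnionWith(entity.Properties.Keys);
        }
        return names;
    }
}
```

For example, a product with Name and Trademark properties and a category with Id and Name properties can both be added to one SchemaLessTable; DistinctPropertyNames() then returns Id, Name, and Trademark.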

Let us look in detail at the rules for naming tables and forming entities:

- Table names can contain only alphanumeric characters.

- A table name cannot begin with a digit.

- Table names are case sensitive.

- Table names must be from 3 to 63 characters long.

- Property names are case sensitive and cannot be longer than 255 characters.

- An entity can contain at most 253 user-defined properties.

- The total size of an entity's data must not exceed 1 MB.

- Each entity must contain the three system properties described below.
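The naming rules above are easy to check on the client before a request is sent. The following C# sketch is a hypothetical helper (TableStorageRules is not part of any SDK) that encodes only the limits from this list: table names of 3 to 63 alphanumeric characters that do not start with a digit, property names of at most 255 characters, and at most 253 user-defined properties. It does not attempt to estimate the 1 MB entity size.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical client-side helper encoding the rules listed above; the numbers
// come from the article's list, not from an SDK constant.
public static class TableStorageRules
{
    public const int MinTableNameLength = 3;
    public const int MaxTableNameLength = 63;
    public const int MaxPropertyNameLength = 255;
    public const int MaxUserProperties = 253;

    // ASCII letters and digits only, must not start with a digit, 3..63 characters.
    public static bool IsValidTableName(string name)
    {
        if (name == null || name.Length < MinTableNameLength || name.Length > MaxTableNameLength)
            return false;
        if (IsAsciiDigit(name[0]))
            return false;
        return name.All(c => IsAsciiLetter(c) || IsAsciiDigit(c));
    }

    // Returns a description of every violated property rule (empty list if none).
    // Empty names and names longer than 255 characters are reported.
    public static List<string> CheckPropertyNames(IReadOnlyCollection<string> propertyNames)
    {
        var errors = new List<string>();
        if (propertyNames.Count > MaxUserProperties)
            errors.Add($"{propertyNames.Count} user-defined properties (limit {MaxUserProperties})");
        foreach (string name in propertyNames)
        {
            if (string.IsNullOrEmpty(name) || name.Length > MaxPropertyNameLength)
                errors.Add($"property name of length {name?.Length ?? 0} (limit {MaxPropertyNameLength})");
        }
        return errors;
    }

    private static bool IsAsciiLetter(char c) => (c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z');

    private static bool IsAsciiDigit(char c) => c >= '0' && c <= '9';
}
```

With these rules, "Product" and "ProductKind" are valid table names, while "1Product", "ab", and "Product_Kind" are not.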

System properties of entities

To support load balancing, a table can be divided into partitions (Partitions). To specify unambiguously which partition an entity belongs to, every entity must contain the PartitionKey property, which identifies the table partition where the entity is stored.

Another required property of every stored entity is RowKey, which identifies a specific entity within a partition. Together, PartitionKey and RowKey form the entity's primary key (Primary Key), and both must be specified in insert, delete, and update operations.

The last, and least important for the developer, is the Timestamp property: it is maintained by the service for its internal needs and stores the time of the entity's last modification.
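The role of the primary key pair can be illustrated with an in-memory sketch. KeyedTable below is hypothetical and does not talk to the service: it keys entities by the (PartitionKey, RowKey) tuple, so the same RowKey may appear in two partitions while a repeated pair is rejected (the real REST API reports such a duplicate insert as a 409 Conflict error), and it records a last-modified time per entity in the spirit of Timestamp.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory illustration, not the real service: entities are keyed
// by the (PartitionKey, RowKey) pair, and each entry keeps a last-modified time
// in the spirit of the Timestamp system property.
public sealed class KeyedTable
{
    private readonly Dictionary<(string, string), DateTime> _timestamps =
        new Dictionary<(string, string), DateTime>();

    public int Count => _timestamps.Count;

    // Fails if the same (PartitionKey, RowKey) pair is already present.
    public void Insert(string partitionKey, string rowKey)
    {
        var key = (partitionKey, rowKey);
        if (_timestamps.ContainsKey(key))
            throw new InvalidOperationException($"Entity ({partitionKey}, {rowKey}) already exists.");
        _timestamps[key] = DateTime.UtcNow;
    }

    public bool Contains(string partitionKey, string rowKey) =>
        _timestamps.ContainsKey((partitionKey, rowKey));

    // Time of the last modification (UTC), analogous to Timestamp.
    public DateTime GetTimestamp(string partitionKey, string rowKey) =>
        _timestamps[(partitionKey, rowKey)];
}
```

Inserting RowKey "42" into both "ProductPartition" and "ProductKindPartition" succeeds, while a second insert of ("ProductPartition", "42") throws.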

As noted above, data in Table Storage is accessed through a REST API. However, Microsoft provides a special data access library, ADO.NET Data Services (renamed WCF Data Services in .NET Framework 4.0), which gives the developer classes for working with data schemas, entities, and their properties. Its use is shown below on the example of a client for the Windows Azure data services.

Creating a test data model

Armed with this technical background, we can now create a demonstration data model for the Azure Table Service. But first we need to prepare an environment for building and testing client applications: download and install the Visual Studio add-in and the Windows Azure SDK from http://www.microsoft.com/downloads/details.aspx?FamilyID=5664019e-6860-4c33-9843-4eb40b297ab6&displaylang=en

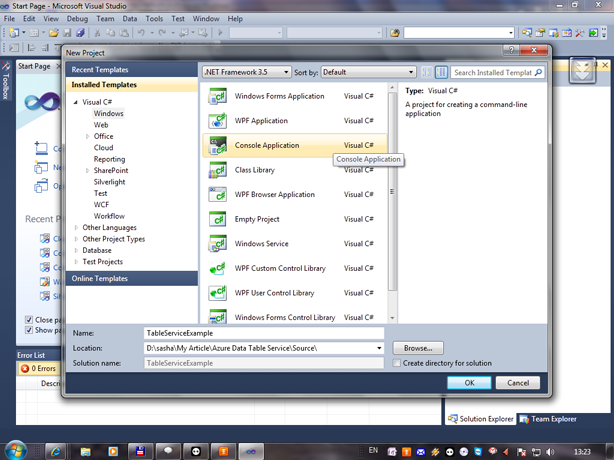

Then we can move on to the programming itself. In Visual Studio 2010, create a simple console application called TableServiceExample:

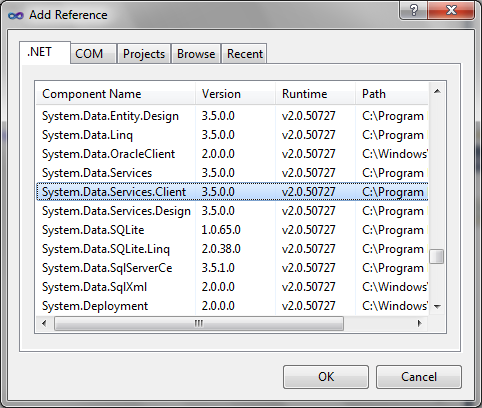

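Then add references to the System.Data.Services.Client and Microsoft.WindowsAzure.StorageClient assemblies (the code below also uses ConfigurationManager, which lives in the System.Configuration assembly).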

Now let us state what we are going to implement: a storage for two entities:

- A product, with the fields Name, Brand, Price, and a reference to the product category.

- A product category, with the fields category ID and Name.

To map the data to application objects, we create the corresponding classes, deriving them from TableServiceEntity. A few decisions apply to these objects: 1) all product entities are stored in one partition (PartitionKey) called "ProductPartition", and all category entities in the partition "ProductKindPartition"; 2) the RowKey of each entity is the string representation of a Guid generated when the object is created.

Source code of the Product class:

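```csharp
using System;
using Microsoft.WindowsAzure.StorageClient;

public class Product : TableServiceEntity
{
    // All products are stored in a single partition
    public const string PartitionName = "ProductPartition";

    // Public parameterless constructor, used when entities are materialized from query results
    public Product()
    {
    }

    private Product(string partitionKey, string rowKey)
        : base(partitionKey, rowKey)
    {
    }

    // Creates a product whose RowKey is a new Guid
    public static Product Create()
    {
        string rowKey = Guid.NewGuid().ToString();
        return new Product(PartitionName, rowKey);
    }

    public string Name { get; set; }

    public string Trademark { get; set; }

    // Id of the product category (ProductKind.Id)
    public int Kind { get; set; }
}
```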

And the source code of the ProductKind (product category) class:

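```csharp
using System;
using Microsoft.WindowsAzure.StorageClient;

public class ProductKind : TableServiceEntity
{
    // All categories are stored in a single partition
    public const string PartitionName = "ProductKindPartition";

    // Public parameterless constructor, used when entities are materialized from query results
    public ProductKind()
    {
    }

    private ProductKind(string partitionKey, string rowKey)
        : base(partitionKey, rowKey)
    {
    }

    // Creates a category whose RowKey is a new Guid
    public static ProductKind Create()
    {
        string rowKey = Guid.NewGuid().ToString();
        return new ProductKind(PartitionName, rowKey);
    }

    public int Id { get; set; }

    public string Name { get; set; }
}
```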


To create the tables for storing these entities, we need to define a data context that meets the following criteria:

1) The context class must derive from TableServiceContext.

2) For each table, it defines a property of type IQueryable<DataItemType>, where DataItemType is the type of the entities stored in that table.

Let's look at the source code of our data context:

```csharp
using System.Linq;
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.StorageClient;

public class TestServiceDataContext : TableServiceContext
{
    public TestServiceDataContext(string baseAddress, StorageCredentials credentials)
        : base(baseAddress, credentials)
    {
    }

    public const string ProductTableName = "Product";

    public const string ProductKindTableName = "ProductKind";

    // One IQueryable<T> property per table
    public IQueryable<Product> Product
    {
        get { return CreateQuery<Product>(ProductTableName); }
    }

    public IQueryable<ProductKind> ProductKind
    {
        get { return CreateQuery<ProductKind>(ProductKindTableName); }
    }
}
```

Before deploying our data schema to the Table service, we need to get acquainted with how Windows Azure storage services authenticate requests.

Setting up access to Table Services

Every request to the Azure Table service must be authenticated; in practice, this means that each REST API request carries a specially constructed signature. We will not go into the details here (if you are interested, read the corresponding section of the SDK documentation), because all the low-level work is done by the ADO.NET Data Services client library for Azure Storage; we only need to supply the correct credentials: the account name (Account) and the access key (Shared Key).

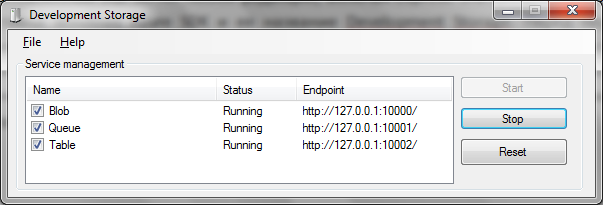
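For readers curious about what the client library does for us, here is a sketch of Shared Key signing in C#. It assumes the Table service string-to-sign layout from the storage REST documentation (HTTP verb, Content-MD5, Content-Type, Date, and the canonicalized resource, separated by newlines), signed with HMAC-SHA256 using the Base64-decoded account key. The SharedKeySigner class is hypothetical, and the exact canonicalized resource (especially for the emulator's path-style addresses) should be checked against the documentation before relying on it.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper sketching Shared Key signing for the Table service.
// Assumed string-to-sign: VERB \n Content-MD5 \n Content-Type \n Date \n CanonicalizedResource.
public static class SharedKeySigner
{
    public static string BuildStringToSign(string verb, string contentMd5, string contentType,
                                           string date, string canonicalizedResource)
    {
        return string.Join("\n", verb, contentMd5, contentType, date, canonicalizedResource);
    }

    // Returns the Authorization header value: "SharedKey <account>:<Base64 HMAC-SHA256>".
    public static string CreateAuthorizationHeader(string accountName, string base64Key, string stringToSign)
    {
        byte[] key = Convert.FromBase64String(base64Key);
        using (var hmac = new HMACSHA256(key))
        {
            byte[] hash = hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign));
            return "SharedKey " + accountName + ":" + Convert.ToBase64String(hash);
        }
    }
}
```

The resulting value goes into the Authorization header, and the date that was signed must also be sent with the request in the Date or x-ms-date header.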

Before adding anything to the program, we need to start the local storage emulator on the computer. Note right away that it requires an installed SQL Server of any edition, including Express. The emulator, called Development Storage, ships with the Windows Azure SDK. On first launch, it creates and initializes a database that it later uses to store data. If everything goes well, the Development Storage icon appears in the notification area; clicking it shows which services are running and their local addresses.

The emulator uses well-known, pre-generated credentials:

- Account name: devstoreaccount1

- Account key: Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==

These values will be used in the application. First, add an App.config configuration file and put the following lines in its appSettings section:

```xml
<configuration>
  <appSettings>
    <add key="AccountName" value="devstoreaccount1" />
    <add key="AccountSharedKey" value="Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==" />
    <add key="TableStorageEndpoint" value="http://127.0.0.1:10002/devstoreaccount1" />
  </appSettings>
</configuration>
```

Now, to keep the code organized, let's create a TableStoreManager class with the following fields:

- _credentials of type StorageCredentialsAccountAndKey: the credentials for accessing Table Storage with the Shared Key authentication scheme.

- _tableStorage of type CloudTableClient: the client for accessing Table Storage.

- _context of type TestServiceDataContext: the class created in the previous section, which describes all the tables.

These fields are initialized in the Initialize method of TableStoreManager as follows:

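```csharp
using System.Configuration;
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.StorageClient;

public class TableStoreManager
{
    private StorageCredentialsAccountAndKey _credentials;
    private CloudTableClient _tableStorage;
    private TestServiceDataContext _context;

    public void Initialize()
    {
        // Read the connection settings from App.config
        string accountKey = ConfigurationManager.AppSettings["AccountSharedKey"];
        string tableBaseUri = ConfigurationManager.AppSettings["TableStorageEndpoint"];
        string accountName = ConfigurationManager.AppSettings["AccountName"];

        _credentials = new StorageCredentialsAccountAndKey(accountName, accountKey);
        _tableStorage = new CloudTableClient(tableBaseUri, _credentials);
        _context = new TestServiceDataContext(tableBaseUri, _credentials);
    }
}
```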

Now that all the access components are initialized, we need to check whether the tables exist and, if necessary, create them in the storage. We will do this in a separate TryCreateShema method:

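```csharp
private void TryCreateShema()
{
    // Names of the tables that already exist in the storage
    var existingTables = _tableStorage.ListTables().ToList();

    // Create the schema if either of our tables is missing
    bool schemaMissing =
        !existingTables.Contains(TestServiceDataContext.ProductTableName)
        || !existingTables.Contains(TestServiceDataContext.ProductKindTableName);

    if (schemaMissing)
    {
        // Creates a table for each IQueryable<T> property of the context
        CloudTableClient.CreateTablesFromModel(typeof(TestServiceDataContext),
            ConfigurationManager.AppSettings["TableStorageEndpoint"],
            _credentials);
    }
}
```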

Here we first list the existing tables and compare their names with the ones in our schema; if the tables are missing, the program creates them from the model defined in the TestServiceDataContext class. Finally, add a call to this method at the end of the Initialize method defined earlier.

Fetching data from storage

Azure Table Storage offers a fairly rich query API: for example, you can request a certain number of items or only the first one, and filter on specific properties. The current implementation has some limits, however: a single response contains at most 1,000 entities, and a query may run for at most five seconds. When a query hits either limit, the service returns a partial result together with a continuation token for fetching the rest, and a request for more than 1,000 items at once (Take with a larger value) is rejected with an error. As for writing queries, ADO.NET Data Services takes the chore out of using the storage REST API and lets you write queries with LINQ syntax. Unfortunately, the current implementation does not support all LINQ capabilities; only a few operators can be used:

- From - fully supported

- Where - fully supported

- Take - supported with a maximum limit of 1000 items

- First, FirstOrDefault - fully supported.

No other operators are supported. To compose queries, use the TestServiceDataContext.CreateQuery<T> method, where T is the type of the returned entity.
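The Table service returns at most 1,000 entities per response; when more data remains, the response carries a continuation token (the x-ms-continuation-NextPartitionKey and x-ms-continuation-NextRowKey headers) that must be sent with the next request. The loop below is a library-independent C# sketch of that pattern: fetchPage is a hypothetical delegate standing in for a single request, and the token is modeled as an opaque string. In the StorageClient library, wrapping a query with AsTableServiceQuery() is intended to follow continuation tokens for you; check the SDK documentation for the version you use.

```csharp
using System;
using System.Collections.Generic;

// Library-independent sketch of continuation-token paging. 'fetchPage' is a
// hypothetical stand-in for one request: it receives the token from the previous
// response (null on the first call) and returns one page of items plus the next
// token, or null when there is nothing left.
public static class ContinuationPaging
{
    // The Table service returns at most this many entities per response.
    public const int MaxPageSize = 1000;

    public static List<T> FetchAll<T>(Func<string, (IReadOnlyList<T> Items, string NextToken)> fetchPage)
    {
        var all = new List<T>();
        string token = null;
        do
        {
            (IReadOnlyList<T> items, string next) = fetchPage(token);
            all.AddRange(items);
            token = next;
        }
        while (token != null);
        return all;
    }
}
```

With a real service, fetchPage would issue the query and read the continuation headers; the loop itself stays the same. For 2,500 entities it makes three requests (1,000 + 1,000 + 500).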

Let's write a method that returns all available products (the 1,000-item limit is not handled yet):

```csharp
public List<Product> GetAllProducts()
{
    // Continuation tokens are not followed, so at most 1,000 products are returned
    var products = from product in _context.CreateQuery<Product>(TestServiceDataContext.ProductTableName)
                   select product;
    return products.ToList();
}
```

Next, let's write methods that return all available categories and a single category by its identifier:

```csharp
public List<ProductKind> GetProductKinds()
{
    var productKinds = from productKind in
                           _context.CreateQuery<ProductKind>(TestServiceDataContext.ProductKindTableName)
                       select productKind;
    return productKinds.ToList();
}

public ProductKind GetProductKind(int id)
{
    // The filter on Id is sent to the service as part of the query
    var productKinds = from productKind in
                           _context.CreateQuery<ProductKind>(TestServiceDataContext.ProductKindTableName)
                       where productKind.Id == id
                       select productKind;

    List<ProductKind> productKindList = productKinds.ToList();

    // Return the first match, or null if the category was not found
    ProductKind result = null;
    if (productKindList.Count > 0)
    {
        result = productKindList[0];
    }
    return result;
}
```

Now we would like to see the service at work, but even if we run it and everything works correctly, we will see nothing, because the storage is still empty. So, for a first demonstration, we also need to implement adding entities.

Add data to the cloud

Adding new entities with ADO.NET Data Services is quite simple; you only need to make sure that each added entity has a PartitionKey/RowKey combination that is unique within the table. To insert an entity, call the AddObject method of our TestServiceDataContext context, passing the name of the target table as the first parameter and the object to add as the second. Then call two methods: DataServiceContext.SaveChanges, to send the new data to the cloud storage, and DataServiceContext.Detach, to remove the context's reference to the object. So in the TableStoreManager class we create two Insert methods, for the Product and ProductKind entities respectively:

```csharp
public void Insert(Product product)
{
    _context.AddObject(TestServiceDataContext.ProductTableName, product);
    _context.SaveChanges();
    _context.Detach(product);
}

public void Insert(ProductKind productKind)
{
    _context.AddObject(TestServiceDataContext.ProductKindTableName, productKind);
    _context.SaveChanges();
    _context.Detach(productKind);
}
```

First test of the service

After this long introduction to the architecture of Azure Table Storage, we can finally see the service in action. To do so, write the following lines in the Main method of our console program:

```csharp
try
{
    TableStoreManager manager = new TableStoreManager();
    manager.Initialize();

    // Add a product category
    ProductKind productKind = ProductKind.Create();
    productKind.Name = "";
    productKind.Id = 1;
    manager.Insert(productKind);

    // Add a product that refers to the category by its Id
    Product product = Product.Create();
    product.Name = "";
    product.Kind = productKind.Id;
    product.Trademark = "Candy";
    manager.Insert(product);
}
catch (Exception ex)
{
    Console.WriteLine(ex.Message);
}
```

This program first initializes the Table Services structure, creating the necessary tables, and then adds one entry each to the Product and ProductKind tables. Before testing, remember to start the local "cloud", Development Storage, and then run the program. If everything is written correctly, the program works for a few seconds and exits without a single error. Now let's make sure the data is really stored in the local storage; to do so, change the Main method:

```csharp
try
{
    TableStoreManager manager = new TableStoreManager();
    manager.Initialize();

    var products = manager.GetAllProducts();
    foreach (Product product in products)
    {
        Console.WriteLine("Product: {0} {1}", product.Name, product.Trademark);

        // Look up the product's category by the stored Id
        ProductKind productKind = manager.GetProductKind(product.Kind);
        if (productKind != null)
        {
            Console.WriteLine("    Category: {0}", productKind.Name);
        }
    }
}
catch (Exception ex)
{
    Console.WriteLine(ex.Message);
}
```

Run the program again, not forgetting Development Storage; if everything is correct, the console shows that all the saved data has been read back and is ready for processing.

Entity update

Updating entities in Table Storage is quite simple: each time, you must specify the RowKey and PartitionKey values of the object being changed. To handle concurrent access to entities, a mandatory ETag value is used (the If-Match header in the REST API). The ETag identifies the version of an entity and changes whenever the entity is modified: if the ETag sent with a request does not match the one in storage, the storage refuses to change the data and returns an error, signaling that the entity must be read again. You can, however, force an unconditional update by setting the ETag to "*".
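The ETag rules above amount to optimistic concurrency. The following in-memory C# sketch (ETagStore is hypothetical and does not call the service; its ETags are simple version strings, not the service's format) shows the three cases: a write with the current ETag succeeds and yields a new one, a write with a stale ETag is rejected, and "*" forces an unconditional write.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory sketch of ETag-based optimistic concurrency; it does not
// call the service, and its ETags are simple version strings ("v1", "v2", ...),
// not the format the service actually uses.
public sealed class ETagStore
{
    private readonly Dictionary<string, (string Value, string ETag)> _items =
        new Dictionary<string, (string Value, string ETag)>();
    private int _version;

    // Stores a new entity and returns its first ETag.
    public string Insert(string key, string value)
    {
        if (_items.ContainsKey(key))
            throw new InvalidOperationException($"Entity '{key}' already exists.");
        return Write(key, value);
    }

    public (string Value, string ETag) Get(string key) => _items[key];

    // Mirrors an update carrying an If-Match header: "*" skips the check.
    public string Update(string key, string value, string ifMatch)
    {
        if (!_items.TryGetValue(key, out var current))
            throw new KeyNotFoundException($"Entity '{key}' not found.");
        if (ifMatch != "*" && ifMatch != current.ETag)
            throw new InvalidOperationException("ETag mismatch: re-read the entity and try again.");
        return Write(key, value);
    }

    // Every successful write produces a new ETag.
    private string Write(string key, string value)
    {
        _version++;
        string etag = "v" + _version;
        _items[key] = (value, etag);
        return etag;
    }
}
```

Passing "*" to AttachTo, as the Update and Delete methods in this article do, corresponds to the last case: the write wins regardless of concurrent changes.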

To update an object through ADO.NET Data Services, first attach it to the context with the TableServiceContext.AttachTo method, then pass the same object to the TableServiceContext.UpdateObject method, and finally send the changes to the server by calling TableServiceContext.SaveChanges:

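```csharp
public void Update(Product product)
{
    // Make sure the object is not already tracked by the context
    _context.Detach(product);

    // Attach with ETag "*" for an unconditional update
    _context.AttachTo(TestServiceDataContext.ProductTableName, product, "*");
    _context.UpdateObject(product);
    _context.SaveChanges();
}
```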

Before updating, we must make sure that the object is no longer tracked by ADO.NET Data Services; for this we simply call the TableServiceContext.Detach method first.

Now we can write a small test to make sure everything works:

```csharp
TableStoreManager manager = new TableStoreManager();
manager.Initialize();

// Modify every product and save the changes
var products = manager.GetAllProducts();
foreach (Product product in products)
{
    product.Name += " 2";
    product.Trademark = "";
    manager.Update(product);
}

// Read the products back to check the result
products = manager.GetAllProducts();
foreach (Product product in products)
{
    Console.WriteLine(product.Name);
}
```

Running this code shows that the data is changed successfully, so testing of the update procedure can be considered complete.

Deleting entities

Like updating, deleting an object requires the RowKey and PartitionKey properties and an ETag, which behaves as described in the previous section. A deleted entity is first only marked as deleted and becomes inaccessible to clients; it is physically removed later, during periodic garbage collection. The code for deleting our Product entity with ADO.NET Data Services is shown below:

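```csharp
public void Delete(Product product)
{
    // Make sure the object is not already tracked by the context
    _context.Detach(product);

    // Attach with ETag "*" for an unconditional delete
    _context.AttachTo(TestServiceDataContext.ProductTableName, product, "*");
    _context.DeleteObject(product);
    _context.SaveChanges();
}
```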
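The delete semantics described above (an ETag check, then a logical delete that hides the entity, then physical removal by a later garbage collection) can be modeled in memory. SoftDeleteTable below is a hypothetical C# illustration of that description, not the service's implementation.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory model of the delete behavior described in the text,
// not the service's implementation: Delete checks the ETag and only marks the
// row; CollectGarbage later removes marked rows physically.
public sealed class SoftDeleteTable
{
    private sealed class Row
    {
        public string ETag;
        public bool Deleted;
    }

    private readonly Dictionary<string, Row> _rows = new Dictionary<string, Row>();

    // Number of rows physically present, including ones marked as deleted.
    public int PhysicalCount => _rows.Count;

    public void Put(string key, string etag) => _rows[key] = new Row { ETag = etag };

    // Clients see a row only if it exists and is not marked as deleted.
    public bool IsVisible(string key) => _rows.TryGetValue(key, out Row row) && !row.Deleted;

    public void Delete(string key, string ifMatch)
    {
        if (!IsVisible(key))
            throw new KeyNotFoundException($"Entity '{key}' not found.");
        Row row = _rows[key];
        if (ifMatch != "*" && ifMatch != row.ETag)
            throw new InvalidOperationException("ETag mismatch.");
        row.Deleted = true; // logical delete: hidden from clients, still stored
    }

    // Physically removes rows marked as deleted and returns how many were removed.
    public int CollectGarbage()
    {
        var marked = new List<string>();
        foreach (KeyValuePair<string, Row> pair in _rows)
        {
            if (pair.Value.Deleted)
                marked.Add(pair.Key);
        }
        foreach (string key in marked)
            _rows.Remove(key);
        return marked.Count;
    }
}
```

Between Delete and CollectGarbage, a deleted row is invisible to IsVisible but still counted by PhysicalCount.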

To check that it works correctly, you can write the following lines in the Main method:

```csharp
TableStoreManager manager = new TableStoreManager();
manager.Initialize();

var products = manager.GetAllProducts();
foreach (Product product in products)
{
    Console.WriteLine("Product: {0} {1}", product.Name, product.Trademark);
    manager.Delete(product);
}

// After deletion, the query should return nothing
products = manager.GetAllProducts();
if (products.Count == 0)
{
    Console.WriteLine("All products have been deleted.");
}
```

Conclusion

This article showed a general approach to accessing one type of cloud service. The ADO.NET Data Services library has a number of interesting nuances that deserve an article of their own, so I tried not to overload the code with additional checks and kept it as simple as possible.

Unfortunately, the full version of the Azure platform is currently not available to Russian customers, so the example cannot be tested in the commercial cloud. However, since spring 2009 I have held an invitation to the CTP, which allows me to create a limited number of services in the real cloud in order to get acquainted with Azure. I tested this approach about a year ago by creating a cloud service that has been running non-stop for almost a year (it was launched in June 2009), which speaks to the reliability of cloud computing compared with conventional approaches.

PS

Sources can be downloaded here.

Source: https://habr.com/ru/post/91270/
