PLINQ: data distribution between workflows

Let there be some sequence of elements that we want to process using a PLINQ query. At the same time, there are a number of physical CPU cores that are ready to execute worker threads. How to distribute elements of the input collection between threads?

Imagine that the input collection remains monolithic, and workflows one by one begin to select elements. So the sample will be reduced to the following actions:

Obviously, this will give a large overhead on setting / unlocking. Especially they will be noticeable in the case of fast processing of the item by the workflow. You can get rid of this by splitting the original collection into parts.

How to divide the input sequence of elements so as to parallelize the processing as much as possible, using the capabilities of the equipment most efficiently? In general, only the number of worker threads that will process a LINQ request is known exactly. We can not predict in advance the processing time of each item. In addition, the length of the collection is unknown (of course, the number of elements can be pre-calculated, but this action is also potentially long and resource-intensive). That is, it does not turn out “fair” to distribute the elements between the execution threads. The optimal solution would be not to divide the entire original sequence at once, but to output data in the form of portions of different sizes. Parallel Extensions does just that, and works like this:

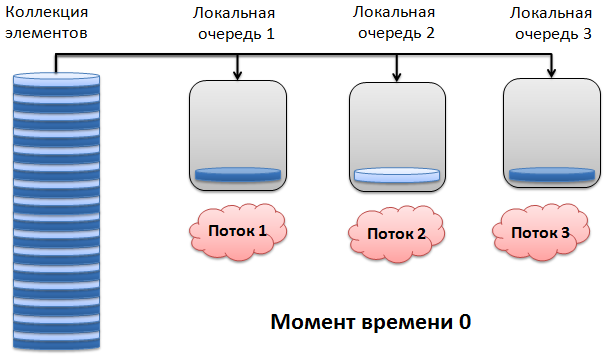

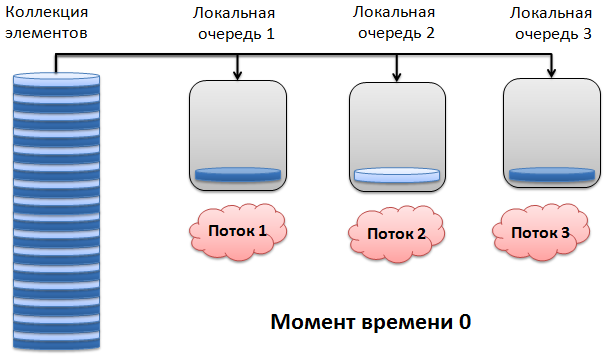

Here's what it looks like. At time 0, each worker thread gets 1 item from the collection:

')

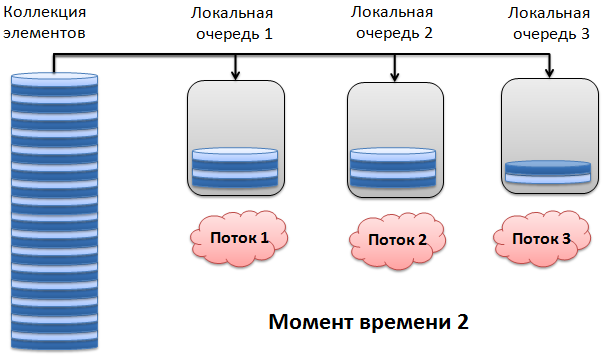

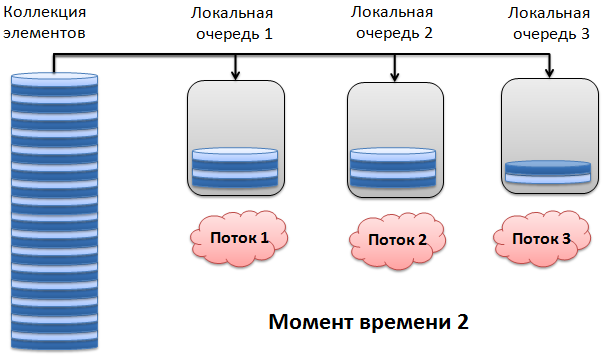

In the next moment, 1 workflows 1 and 2 will finish processing their items and select two items from the input collection. However, thread 3 is still processing the first selected item:

It takes some more time, threads 1 and 2 finish processing the elements obtained in the previous step and now receive 4 elements each. Stream 3 also completes processing and requests 2 new ones:

Well, and so on. Here, the load balance is observed: if one workflow processes an element for too long, the remaining threads will take more and more from the collection, thus increasing the overall efficiency. The thread's queue grows until its size reaches 512 bytes. That is, the maximum number of elements, for example, of the Int32 type, is 128.

Of course, the considered method is not universal, and in some cases will not be the best. You can offer a number of additions to the solution used. For example, measure the average processing time of each element, and change the size of the part, taking into account this parameter. But the developers of Parallel Extensions decided to focus on the approach described above. It is well established in most scenarios, and therefore was implemented.

However, there is the possibility of implementing its own principle of distribution of the elements of the original collection. There is a set of examples of applications using Paralell Extensions, among which there is an implementation of a different way of splitting the input collection.

Imagine that the input collection remains monolithic, and workflows one by one begin to select elements. So the sample will be reduced to the following actions:

- Lock installation

- Item selection

- Remove lock

Obviously, this will give a large overhead on setting / unlocking. Especially they will be noticeable in the case of fast processing of the item by the workflow. You can get rid of this by splitting the original collection into parts.

How to divide the input sequence of elements so as to parallelize the processing as much as possible, using the capabilities of the equipment most efficiently? In general, only the number of worker threads that will process a LINQ request is known exactly. We can not predict in advance the processing time of each item. In addition, the length of the collection is unknown (of course, the number of elements can be pre-calculated, but this action is also potentially long and resource-intensive). That is, it does not turn out “fair” to distribute the elements between the execution threads. The optimal solution would be not to divide the entire original sequence at once, but to output data in the form of portions of different sizes. Parallel Extensions does just that, and works like this:

- each workflow has its own elements for processing;

- the initial size of any queue is one, i.e. one item per stream is selected from the source collection;

- queue size grows: when you re-access the collection, it will be doubled. The queue length for each thread is calculated separately, and rises to a certain value, after which the growth stops;

Here's what it looks like. At time 0, each worker thread gets 1 item from the collection:

')

In the next moment, 1 workflows 1 and 2 will finish processing their items and select two items from the input collection. However, thread 3 is still processing the first selected item:

It takes some more time, threads 1 and 2 finish processing the elements obtained in the previous step and now receive 4 elements each. Stream 3 also completes processing and requests 2 new ones:

Well, and so on. Here, the load balance is observed: if one workflow processes an element for too long, the remaining threads will take more and more from the collection, thus increasing the overall efficiency. The thread's queue grows until its size reaches 512 bytes. That is, the maximum number of elements, for example, of the Int32 type, is 128.

Of course, the considered method is not universal, and in some cases will not be the best. You can offer a number of additions to the solution used. For example, measure the average processing time of each element, and change the size of the part, taking into account this parameter. But the developers of Parallel Extensions decided to focus on the approach described above. It is well established in most scenarios, and therefore was implemented.

However, there is the possibility of implementing its own principle of distribution of the elements of the original collection. There is a set of examples of applications using Paralell Extensions, among which there is an implementation of a different way of splitting the input collection.

Source: https://habr.com/ru/post/91082/

All Articles