MongoDB vs MySQL (vs Cassandra): And now a little more correct answer

Actually, today the topic was " Comparing the performance of MongoDB and MySQL using a simple example ", in which it was stated that MongoDB exceeds the performance of MySQL at times. Heh, when they write this, I immediately check and doubt. I got into the source code of the original test (thanks for the publication). And as it turned out, the author of the original topic made a mistake in three characters and in fact not everything is as follows:

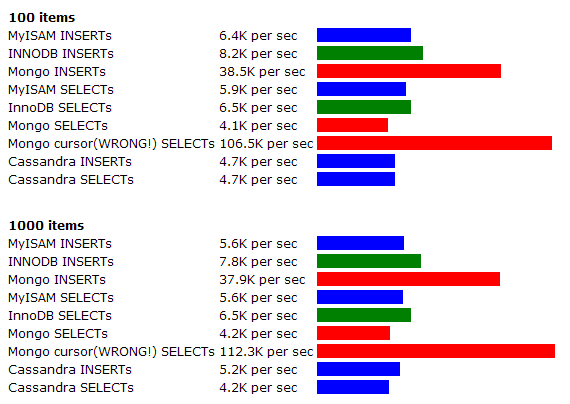

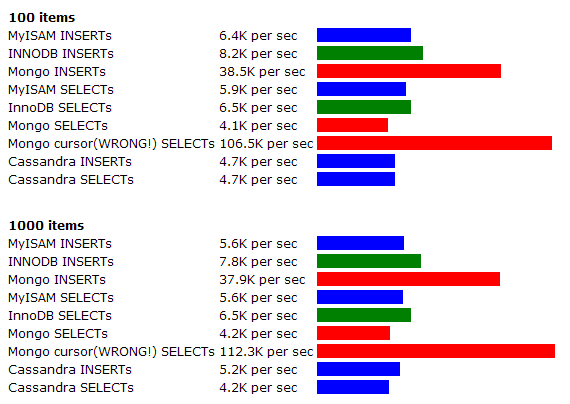

On the graph - the number of operations per second, (more - better), the logarithmic scale.

The last line is what the author of the original topic tested (incorrect, not critical, we all make mistakes and learn).

And now more about the error ...

')

So, the error of the original was that he did a SELECT like this:

which returned a Cursor (!), but not the object itself. That is, access to the database does not occur (or at least data reading does not occur). But what was necessary:

If the author would check that the stored value (INSERT) is equal to the pulled out value (SELECT) - there would be no such error.

In my test, I added assert, checking that what was saved is the same as we read. And he added a comparison with InnoDB (in the comments, many argued that it could be much better). InnoDB default settings.

The test itself: essentially both as “key-value storage” (save by primary key + value, select by primary key, read value). Yes, spherical, yes, in a vacuum.

Yes, inside the test there are calls assert and str. Of course, they otzhirat part of the performance, but for both tests - the same number. And we just need to compare the performance.

More results: Core2duo / WinXP SP3 .

More than 10,000 records tested - the ratio is maintained. And like neither Mongo nor MyISAM are blown away in speed.

Source:

yoihj.ru/habr/mongo_vs_mysql.py

I'm not saying that 100% is right (maybe I was wrong in what), so check me out too.

PS Yes, my sample was consistent, but if I switch it to a random one (to get an element with a random number each time), the situation changes, but not dramatically, the disposition of forces is still the same. You can make sure by replacing

In the comments, fallen tested the same code all under Linux + InnoDB_Plugin. The ratio of forces is about the same, but smoother:

Linux + InnoDB_Plugin

"Core i7 920, 2GB RAM, Fedora 12 x64, mysql 5.1.44 + InnoDB 1.0.6 compiled icc, mongodb 1.2.4 x64, sata disk is normal."

And for the sake of interest , Cassandra + pycassa (under win32) has been added - I will say straight away - I have no experience with it and a lot of incomprehensible (.remove () does not delete the records, but only clears them, they themselves remain ... + eventual consistency - it is difficult to test oooooooo! ) - complete jumping with a tambourine in the dark, so consider it just an entertaining test .

Yoi Haji

view from Habra

- In the original: MongoDB is faster MySQL writes 1.5 times (YES, though I have 3 times)

- In the original: MongoDB is faster MySQL reads 10 times (NO, in fact, MongoDB is about equal plus or minus 10-30%)

- InnoDB vs MyISAM - plus or minus (not originally tested)

On the graph - the number of operations per second, (more - better), the logarithmic scale.

The last line is what the author of the original topic tested (incorrect, not critical, we all make mistakes and learn).

And now more about the error ...

')

So, the error of the original was that he did a SELECT like this:

test.find({'_id':random.randrange(1, 999999)})which returned a Cursor (!), but not the object itself. That is, access to the database does not occur (or at least data reading does not occur). But what was necessary:

test.find({'_id':random.randrange(1, 999999)}) [0]If the author would check that the stored value (INSERT) is equal to the pulled out value (SELECT) - there would be no such error.

In my test, I added assert, checking that what was saved is the same as we read. And he added a comparison with InnoDB (in the comments, many argued that it could be much better). InnoDB default settings.

The test itself: essentially both as “key-value storage” (save by primary key + value, select by primary key, read value). Yes, spherical, yes, in a vacuum.

Yes, inside the test there are calls assert and str. Of course, they otzhirat part of the performance, but for both tests - the same number. And we just need to compare the performance.

More results: Core2duo / WinXP SP3 .

More than 10,000 records tested - the ratio is maintained. And like neither Mongo nor MyISAM are blown away in speed.

Source:

yoihj.ru/habr/mongo_vs_mysql.py

I'm not saying that 100% is right (maybe I was wrong in what), so check me out too.

PS Yes, my sample was consistent, but if I switch it to a random one (to get an element with a random number each time), the situation changes, but not dramatically, the disposition of forces is still the same. You can make sure by replacing

i1 = str(i+1) with i1 = str(random.randint(1,cnt-1)+1) in SELECTs.In the comments, fallen tested the same code all under Linux + InnoDB_Plugin. The ratio of forces is about the same, but smoother:

Linux + InnoDB_Plugin

"Core i7 920, 2GB RAM, Fedora 12 x64, mysql 5.1.44 + InnoDB 1.0.6 compiled icc, mongodb 1.2.4 x64, sata disk is normal."

Findings:

- Writing MongoDB is faster if used as key-value storage;

- Reading is about the same;

- Both systems are quite decent, nobody is outdated, nobody has killed anyone, there is no obvious loser .

vs Cassandra

And for the sake of interest , Cassandra + pycassa (under win32) has been added - I will say straight away - I have no experience with it and a lot of incomprehensible (.remove () does not delete the records, but only clears them, they themselves remain ... + eventual consistency - it is difficult to test oooooooo! ) - complete jumping with a tambourine in the dark, so consider it just an entertaining test .

import pycassa

client = pycassa.connect ()

cf = pycassa.ColumnFamily (client, 'Keyspace1', 'Standard1')

# CASSANDRA INSERT

start_time = time.clock ()

for i in xrange (cnt):

i1 = str (i + 1)

cf.insert (i1, {'value': i1})

report ('Cassandra INSERTs')

list (cf.get_range ())

# CASSANDRA SELECT

start_time = time.clock ()

for i in xrange (cnt):

i1 = str (i + 1)

obj = cf.get (i1) ['value']

assert (obj == i1)

report ('Cassandra SELECTs') Yoi Haji

view from Habra

Source: https://habr.com/ru/post/87620/

All Articles