How the GIL works in Python

Why can parallelizing your program make it run twice as slow?

Why does Ctrl-C stop working after you create a thread?


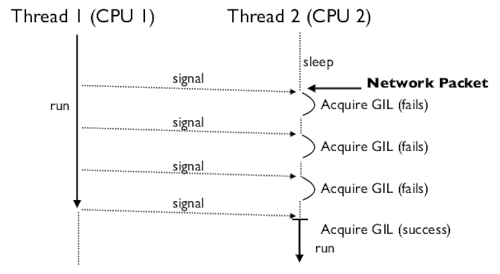

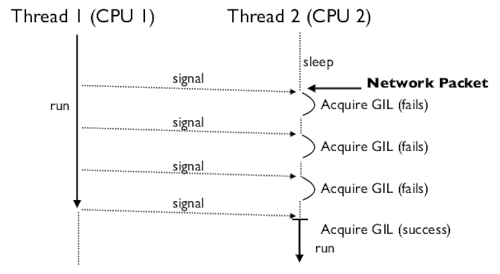

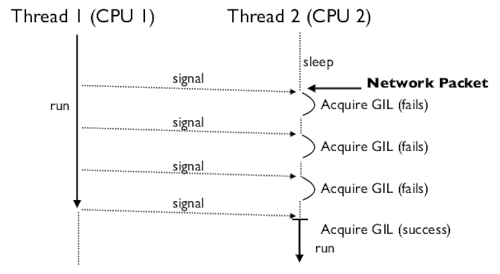

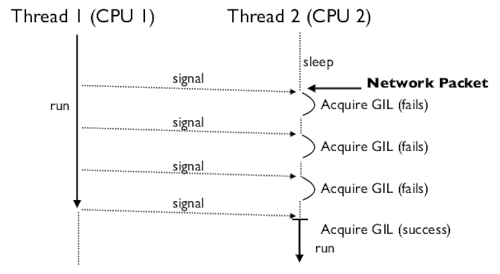



Here is a translation of David Beazley's article “Inside the Python GIL”. It covers some of the subtleties of threading and signal handling in Python.

Introduction

As you know, Python uses a Global Interpreter Lock (GIL), which imposes certain restrictions on threads: namely, you cannot use multiple processors at the same time. This is a well-worn topic of Python holy wars, along with tail-call optimization, lambda, whitespace, and so on.

Disclaimer

I am not deeply bothered by the GIL in Python. For parallel computing on multiple CPUs, I prefer message passing and inter-process communication to threads anyway. However, I am interested in the unexpected behavior of the GIL on multi-core processors.


Performance test

Consider a trivial CPU-bound function (that is, a function whose execution speed depends mainly on processor performance):

```python
def count(n):
    # Busy loop: pure CPU work, no I/O
    while n > 0:
        n -= 1
```

First, run it twice in a row:

```python
count(100000000)
count(100000000)
```

Now run it in parallel in two threads:

```python
from threading import Thread

t1 = Thread(target=count, args=(100000000,))
t1.start()
t2 = Thread(target=count, args=(100000000,))
t2.start()
t1.join()
t2.join()
```

The following results were obtained on a dual-core MacBook:

- sequential run: 24.6 s

- parallel run in two threads: 45.5 s (almost 2 times slower!)

- parallel run with one of the two cores disabled: 38.0 s
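To reproduce this experiment, the snippets above can be wrapped in a small timing harness. The sketch below assumes Python 3 (it uses time.perf_counter()); the helper names time_sequential and time_threaded are illustrative, not from the original. Expect different numbers on a modern interpreter: CPython 3.2 and later ship a reworked GIL, so the figures above will not be reproduced exactly.

```python
import time
from threading import Thread


def count(n):
    # CPU-bound loop: no I/O, so the thread never blocks voluntarily
    while n > 0:
        n -= 1


def time_sequential(n):
    """Run count(n) twice, one after the other; return elapsed seconds."""
    start = time.perf_counter()
    count(n)
    count(n)
    return time.perf_counter() - start


def time_threaded(n):
    """Run count(n) in two threads at once; return elapsed seconds."""
    start = time.perf_counter()
    t1 = Thread(target=count, args=(n,))
    t2 = Thread(target=count, args=(n,))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    return time.perf_counter() - start
```

For example, compare time_sequential(10_000_000) with time_threaded(10_000_000); an n smaller than the article's 100,000,000 keeps each run short.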

I do not like inexplicable magic. As part of a project I started in May, I dug into the GIL implementation to understand why I got these results. I went through every layer, from Python scripts all the way down to the source code of the pthreads library (yes, maybe I should get out more often). So let's take it step by step.

More about threads

Python threads are real threads (POSIX threads or Windows threads), fully managed by the OS. Let's look at how threads execute inside the Python interpreter process (which is written in C). When a thread is created, it simply runs the run() method of the Thread object, or whatever function was given:

```python
import threading
import time


class CountdownThread(threading.Thread):
    def __init__(self, count):
        super().__init__()
        self.count = count

    def run(self):
        # Print a countdown, pausing 5 seconds between steps
        while self.count > 0:
            print("Counting down", self.count)
            self.count -= 1
            time.sleep(5)
```

In fact, much more happens. Python creates a small data structure (PyThreadState) that holds the current stack frame of Python code, the current recursion depth, the thread ID, and some per-thread exception information. The structure is less than 100 bytes. Then a new thread (pthread) is started, in which C code calls PyEval_CallObject, which runs the Python callable that was specified.
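A quick way to see that Python threads really are OS threads is to ask each one for its kernel-level thread ID. This sketch assumes Python 3.8+ for threading.get_native_id(); native_ids() is a hypothetical helper, and the barrier only keeps all threads alive at the same time so the OS cannot reuse an ID between them.

```python
import threading


def report(ids, barrier):
    # get_native_id() returns the OS kernel's ID for the calling thread
    ids.append(threading.get_native_id())
    # Stay alive until every thread has recorded its ID
    barrier.wait()


def native_ids(n_threads=3):
    """Start n_threads Python threads and collect their OS-level thread IDs."""
    ids = []
    barrier = threading.Barrier(n_threads)
    threads = [
        threading.Thread(target=report, args=(ids, barrier))
        for _ in range(n_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return ids


if __name__ == "__main__":
    print("main thread:", threading.get_native_id())
    print("workers:", native_ids())
```

Each worker reports a different ID, and none matches the main thread's.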

The interpreter keeps a pointer to the currently active thread in a global variable. Everything the interpreter does depends on this variable:

```c
/* Python/pystate.c */
...
PyThreadState *_PyThreadState_Current = NULL;
```

The infamous GIL

Here is the catch: only one Python thread can execute at any given time. The Global Interpreter Lock (GIL) strictly controls thread execution. The GIL guarantees each thread exclusive access to interpreter variables (and ensures that calls into C extensions work correctly).

The principle is simple. Threads hold the GIL while they are running, but they release it when they block on I/O. Whenever a thread is forced to wait, other threads that are ready to run get their chance to start.

For CPU-bound threads that never perform I/O, the interpreter runs what is called “the periodic check”.

By default, it happens every 100 ticks, but this interval can be changed with sys.setcheckinterval(). The check interval is a global counter that is completely independent of the order in which threads are switched.
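sys.setcheckinterval() belongs to the tick-based GIL described here. In CPython 3.2 and later the tick counter was replaced by a time-based switch interval (5 ms by default); sys.setcheckinterval() was deprecated and then removed in Python 3.9. A hedged sketch of the modern counterpart, with with_switch_interval() as a hypothetical helper:

```python
import sys


def with_switch_interval(seconds, func, *args):
    """Run func(*args) with a temporary GIL switch interval, then restore it."""
    old = sys.getswitchinterval()
    sys.setswitchinterval(seconds)  # how often a running thread is asked to drop the GIL
    try:
        return func(*args)
    finally:
        sys.setswitchinterval(old)  # always restore the previous setting
```

Note that the switch interval is a request, not a guarantee: the OS still decides which thread actually runs next.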

When the periodic check runs in the main thread, any pending signal handlers are executed. Then the GIL is released and immediately reacquired. At this point a switch between several CPU-bound threads can happen (the brief release of the GIL gives other threads a chance to run).

```c
/* Python/ceval.c */
...
if (--_Py_Ticker < 0) {
    ...
    _Py_Ticker = _Py_CheckInterval;
    ...
    if (things_to_do) {
        if (Py_MakePendingCalls() < 0) {
            ...
        }
    }
    if (interpreter_lock) {
        /* Release the GIL: other threads may run now */
        ...
        PyThread_release_lock(interpreter_lock);
        /* Reacquire the GIL (blocking) */
        PyThread_acquire_lock(interpreter_lock, 1);
        ...
    }
}
```

Ticks roughly correspond to interpreter instructions; they are not based on time. In fact, a single long operation can block everything:

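```python
>>> nums = xrange(100000000)
>>> -1 in nums  # 1 tick (6.6 seconds)
False
>>>
```

A tick cannot be interrupted, so in this case Ctrl-C will not stop the program. (This is Python 2; in Python 3, -1 in range(...) returns immediately.)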
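The tick-and-check mechanism can be modeled in a few lines of Python. This is a toy model, not CPython's code: ToyInterpreter and its methods are hypothetical, each "instruction" costs exactly one tick, and pending_calls stands in for queued signal handlers. The counter logic mirrors the ceval.c excerpt above: the check fires when the decremented ticker drops below zero.

```python
import threading


class ToyInterpreter:
    """Hypothetical model of the pre-3.2 eval loop's periodic check."""

    def __init__(self, check_interval=100):  # 100 mirrors the old default
        self.check_interval = check_interval
        self.ticker = check_interval
        self.gil = threading.Lock()
        self.pending_calls = []  # stands in for queued signal handlers
        self.checks = 0

    def execute(self, instructions):
        """'Execute' instructions one tick each, holding the GIL throughout."""
        self.gil.acquire()
        try:
            for _ in range(instructions):
                self.ticker -= 1
                if self.ticker < 0:  # like: if (--_Py_Ticker < 0)
                    self.periodic_check()
        finally:
            self.gil.release()

    def periodic_check(self):
        self.ticker = self.check_interval
        self.checks += 1
        # Run pending calls first (where CPython runs signal handlers)
        while self.pending_calls:
            self.pending_calls.pop(0)()
        # Release and immediately reacquire the GIL: a window for other threads
        self.gil.release()
        self.gil.acquire()
```

With the default interval, the check fires after every 101 decrements, so execute(1000) performs 9 checks.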

Signals

Let's talk about Ctrl-C. A very common problem is that a program with multiple threads cannot be stopped with a keyboard interrupt. This is very annoying (you have to use kill -9 from a separate window). (Translator's note: I managed to kill such programs with Ctrl+F4 in the terminal window.) So why doesn't Ctrl-C work?

When a signal arrives, the interpreter runs the “check” after every tick until the main thread gets to run. Since signal handlers can only run in the main thread, the interpreter keeps releasing and reacquiring the GIL until the main thread runs.
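The main-thread rule is visible from Python code: CPython only allows signal.signal() to be called from the main thread (elsewhere it raises ValueError), and Python-level handlers always execute in the main thread. A minimal sketch, with install_from_worker() as a hypothetical helper:

```python
import signal
import threading


def install_from_worker():
    """Try to install a SIGINT handler from a non-main thread; return the error."""
    result = {}

    def worker():
        try:
            signal.signal(signal.SIGINT, signal.default_int_handler)
        except ValueError as exc:
            # Rejected: handlers may only be installed from the main thread
            result["error"] = exc

    t = threading.Thread(target=worker)
    t.start()
    t.join()
    return result.get("error")


if __name__ == "__main__":
    print(repr(install_from_worker()))
```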

Thread Scheduler

Python has no way to decide which thread should run next: no priorities, no preemption, no round-robin, and so on. That job is left entirely to the operating system. This is one reason signals behave so strangely: the interpreter cannot control which thread runs, so it simply switches threads as often as possible, hoping that the main thread will get to run.

Ctrl-C often does not work in multithreaded programs because the main thread is usually blocked in an uninterruptible thread join or lock acquisition. While it is blocked, it never runs, so it cannot execute the signal handler.

As an added bonus, the interpreter is left in a mode where it tries to switch threads after every tick. So not only can you not interrupt the program, it also runs more slowly.
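A common workaround is to keep the main thread out of an unbounded join(): join with a timeout in a loop, so the main thread wakes up regularly and can process KeyboardInterrupt. The sketch assumes a worker that polls a threading.Event to know when to stop; run_interruptibly() and its max_wait parameter are hypothetical, max_wait existing only so the example terminates on its own.

```python
import threading
import time


def worker(stop_event):
    # Long-running job that periodically checks whether it should stop
    while not stop_event.is_set():
        time.sleep(0.01)


def run_interruptibly(poll=0.1, max_wait=None):
    """Join a worker in short slices so the main thread stays responsive."""
    stop = threading.Event()
    # daemon=True lets the process exit even if the worker is still running
    t = threading.Thread(target=worker, args=(stop,), daemon=True)
    t.start()
    start = time.monotonic()
    try:
        while t.is_alive():
            # join() with a timeout returns periodically, giving the main
            # thread a chance to run and handle KeyboardInterrupt
            t.join(poll)
            if max_wait is not None and time.monotonic() - start > max_wait:
                stop.set()
    except KeyboardInterrupt:
        # Ctrl-C reached the main thread: ask the worker to stop, then re-raise
        stop.set()
        t.join()
        raise
    return t.is_alive()
```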

GIL implementation

The GIL is not an ordinary mutex. It is either an unnamed POSIX semaphore or a pthreads condition variable. Interpreter locking is based on signaling (in the condition-variable sense, not Unix signals):

- To acquire the GIL, check whether it is free. If it is not, wait for the next signal.

- To release the GIL, free it and send a signal.
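The two rules above can be sketched as a flag protected by a mutex and a condition variable. ToyGIL is a hypothetical illustration of the scheme, not CPython's implementation. Note the while loop in acquire(): a woken thread re-checks the flag and must go back to waiting if another thread grabbed the GIL first; failed_wakeups counts how often that happens.

```python
import threading


class ToyGIL:
    """Hypothetical GIL: a 'locked' flag guarded by a mutex, plus a
    condition variable used to signal waiting threads."""

    def __init__(self):
        self.mutex = threading.Lock()
        self.cond = threading.Condition(self.mutex)
        self.locked = False
        self.failed_wakeups = 0

    def acquire(self):
        with self.mutex:
            # Check whether the GIL is free; if not, wait for the next signal
            while self.locked:
                self.cond.wait()
                if self.locked:
                    # Woken up, but another thread took the GIL first
                    self.failed_wakeups += 1
            self.locked = True

    def release(self):
        with self.mutex:
            # Free the GIL and signal one waiting thread
            self.locked = False
            self.cond.notify()
```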

Thread switching involves more subtleties than programmers usually expect.

The delay between sending a signal and the waiting thread actually starting can be quite substantial. It depends on the operating system, which also takes thread priorities into account: I/O-bound tasks get higher priority than CPU-bound ones. If a signal is sent to a low-priority thread while the processor is busy with more important tasks, that thread will not run for a long time.

As a result, the GIL sends out far too many signals.

Every 100 ticks, the interpreter locks a mutex and signals a condition variable or semaphore on which another thread is waiting all the time.

Let's measure the number of system calls:

- sequential execution: 736 (Unix), 117 (Mac)

- two threads: 1149 (Unix), 3.3 million (Mac)

- two threads on a dual-core system: 1149 (Unix), 9.5 million (Mac)

On a multi-core system, CPU-bound threads are scheduled simultaneously (on different cores), which results in a battle for the GIL. A waiting thread may make hundreds of failed attempts to acquire it.

We see a battle between two conflicting goals. Python just wants to run no more than one thread at a time, while the operating system (“Ooh, lots of cores!”) generously schedules threads, trying to get the most out of every core.

Even a single CPU-bound thread causes problems: it increases the response time of an I/O-bound thread.

The final example is a bizarre form of priority inversion. A CPU-bound thread (low priority) blocks an I/O-bound one (high priority). This happens only on multi-core processors, because the I/O thread cannot wake up fast enough to grab the GIL before the CPU-bound one does.
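The effect on I/O-bound code can be observed, roughly, by measuring how much longer than requested a short time.sleep() takes while a CPU-bound thread is spinning. Here time.sleep() stands in for an I/O wait (it releases the GIL and must reacquire it on wake-up); sleep_latency() and spin() are hypothetical helpers, and the numbers depend heavily on the OS and the CPython version.

```python
import threading
import time


def spin(stop_event):
    # CPU-bound busy loop that runs until asked to stop
    n = 0
    while not stop_event.is_set():
        n += 1


def sleep_latency(samples=20, delay=0.001, with_cpu_thread=False):
    """Average oversleep (seconds) of time.sleep(delay), optionally while a
    CPU-bound thread competes for the GIL."""
    stop = threading.Event()
    spinner = None
    if with_cpu_thread:
        spinner = threading.Thread(target=spin, args=(stop,))
        spinner.start()
    try:
        total = 0.0
        for _ in range(samples):
            start = time.perf_counter()
            time.sleep(delay)  # releases the GIL while "waiting for I/O"
            total += time.perf_counter() - start - delay
        return total / samples
    finally:
        stop.set()
        if spinner is not None:
            spinner.join()
```

Comparing sleep_latency() with sleep_latency(with_cpu_thread=True) on the same machine gives a rough sense of the extra wake-up delay.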

Conclusion

The GIL implementation has barely changed in the last 10 years: the corresponding code in Python 1.5.2 looks almost the same as in Python 3.0. I do not know whether the GIL's behavior has been studied well (especially on multi-core processors). It would be more useful to remove the GIL altogether than to change it. Still, it seems to me that this subject needs further study. If the GIL is here to stay, its behavior is worth fixing.

How can this problem be solved? I have a few vague ideas, but they are all “complicated”. Python would need its own thread scheduler (or at least a mechanism for interacting with the OS scheduler). But that requires non-trivial cooperation between the interpreter, the OS scheduler, the thread library and, worst of all, C extension modules.

Is it worth it? Fixing the GIL's behavior would make thread execution (even with the GIL) more predictable and less resource-hungry. It might improve performance and reduce application response time. I hope this can be done without completely rewriting the interpreter.

Translator's afterword

The original was written as a presentation, so I reordered the material a little to make the article easier to read. I also left out the interpreter traces; if you are interested, see the original.

Habr readers, please suggest interesting English-language articles on Python that would be worth translating. I have a couple of articles in mind, but I would like more options.

Source: https://habr.com/ru/post/84629/
