ACM ICPC 2010 Unofficial Broadcast: How It Went

A post based on the ACM ICPC 2010 finals held last Friday, about how to throw together, literally on your knee, a mirror of a page that is dying under load, bolt on a chat for discussing it, and not buckle under the load yourself :) The post will be more interesting to web programmers than to competitive programmers.

Some statistics, nginx configs, useful tricks, and a number of rakes that should be well known to experienced people, but that many still step on regularly...


Background

A couple of days ago, one of the main events of the year in competitive programming took place; it has already been written about on Habr.

In the narrow circle of fans of these contests (several thousand people around the world) there is a tradition: during the finals, gather around monitors for 5 hours and follow the “live” results table. The interest is akin to that with which normal people watch the football World Cup :) (which Russia, alas, did not qualify for, unlike the ICPC finals).

Unfortunately, the official page with the coveted table goes down every year under the Habr, TopCoder and other effects, and is not very readable anyway, so for the 4th time I made an unofficial mirror of it, with

Brief statistics

In total over the 5 hours:

- 6000 unique IPs (2000 in the peak 10-minute interval)

- 1M requests to static files

- 500K long-poll requests
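
As a rough sanity check, the totals above translate into modest average request rates. The sketch below only does the arithmetic; it assumes the load was spread evenly over the 5 hours, which understates the peak.

```python
# Average request rates implied by the totals above.
# Assumption: load spread evenly over the contest, so the real peak was higher.
CONTEST_SECONDS = 5 * 60 * 60      # 5 hours
STATIC_REQUESTS = 1_000_000        # requests to static files
LONG_POLL_REQUESTS = 500_000       # long-poll requests


def average_rate(total_requests: int, seconds: int = CONTEST_SECONDS) -> float:
    """Return the mean number of requests per second."""
    return total_requests / seconds


static_rps = average_rate(STATIC_REQUESTS)
long_poll_rps = average_rate(LONG_POLL_REQUESTS)
print(f"static: {static_rps:.1f} req/s, long-poll: {long_poll_rps:.1f} req/s")
```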

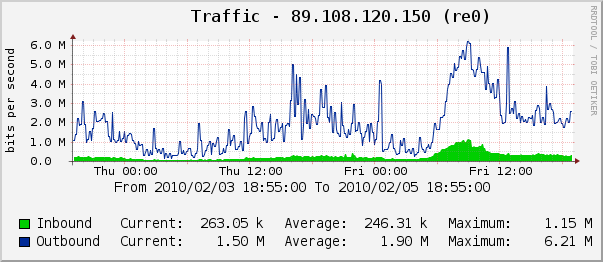

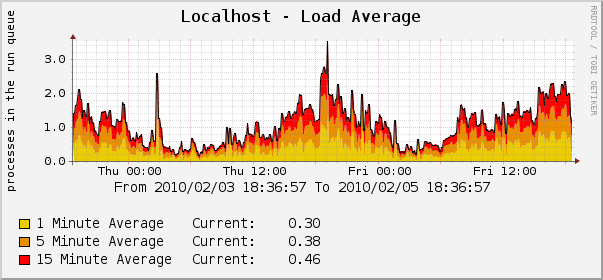

Server load at peak time (9 am Moscow time, the start of the last hour of the contest, when the table is “frozen”): 1.5K connections to nginx, 50 MB of memory used by it, less than 1% CPU load, and 7 Mbit/s of outgoing traffic. In other words, a VDS in close to the minimum configuration, or even a laptop on your lap, would have been enough, as long as the channel can handle it.

A few graphs from cacti (data for 2 days, so that you can compare with the server load in "peacetime"):

Configuration

In previous years the whole “front end” was a simple page that pulled updates for its main part, the table, via ajax every 15-30 seconds (the period was configurable by the user). A plain Apache + PHP setup with minimal caching handled the entire load with ease: the official table was downloaded no more than once every 10 seconds, parsed into JSON and then served to everyone who asked.
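
The “no more than once every 10 seconds” rule is a plain time-based cache in front of the upstream page. The original was PHP; below is a minimal Python sketch of the same idea. The class name `ThrottledSource` and the injectable `clock` are assumptions made for illustration, not the author's code.

```python
import time
from typing import Callable, Optional


class ThrottledSource:
    """Call `fetch` at most once per `min_interval` seconds; otherwise reuse the last result."""

    def __init__(self, fetch: Callable[[], str], min_interval: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._min_interval = min_interval
        self._clock = clock                      # injectable so the logic can be tested
        self._cached: Optional[str] = None
        self._fetched_at: Optional[float] = None

    def get(self) -> Optional[str]:
        now = self._clock()
        stale = (self._fetched_at is None
                 or now - self._fetched_at >= self._min_interval)
        if stale:
            self._cached = self._fetch()         # the only place the upstream is hit
            self._fetched_at = now
        return self._cached
```

However many clients call `get()`, the official page sees at most one request per interval.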

This year I wanted to try something new: having read plenty of articles about long-polling, comet and the like, I decided to build something with them. Besides, I had long wanted to bolt on a chat...

Recall that the essence of long-polling is that client requests are left “hanging” on the server until new data arrives, so clients receive a response as soon as that data appears. To implement this, I used nginx_http_push_module.

Why it, and not realplexor, cometd or a home-grown daemon? Because it does exactly what I wanted, namely:

- full control over the data format: updating the data is a simple POST request to the server, and its entire body is sent to the waiting clients exactly as is

- no additional libraries are needed: the client makes the same ajax request as before, it just takes longer to complete :)

- support for an analogue of gzip_static (compression happens in advance rather than when the response is sent to the client), thanks to the first item
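
To make the long-polling idea concrete, here is a minimal in-process sketch in Python. It is not how nginx_http_push_module is implemented; the class `LongPollChannel` and its methods are hypothetical and only illustrate the “hang until newer data exists” behaviour with one stored message per channel.

```python
import threading
from typing import Optional, Tuple


class LongPollChannel:
    """Holds only the latest message; readers block until a newer version exists."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._version = 0
        self._message: Optional[bytes] = None

    def publish(self, message: bytes) -> int:
        """Store a new message and wake every waiting reader."""
        with self._cond:
            self._version += 1
            self._message = message
            self._cond.notify_all()
            return self._version

    def wait_for_update(self, seen_version: int,
                        timeout: Optional[float] = None
                        ) -> Optional[Tuple[int, Optional[bytes]]]:
        """Return (version, message) newer than seen_version, or None on timeout."""
        with self._cond:
            ready = self._cond.wait_for(
                lambda: self._version > seen_version, timeout)
            if not ready:
                return None
            return self._version, self._message
```

A reader that already holds version N calls `wait_for_update(N)` and sleeps; the publisher's single `publish()` call answers all sleeping readers at once.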

To be on the safe side, the client could still work in the old mode, using regular timer-driven ajax requests; the new mode was enabled by the user by choosing “real-time” in the settings.

Thanks to the flexibility of nginx_http_push_module, switching modes meant changing only the polling URL and the timers; the data arrived in exactly the same form.
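
The mode switch can be sketched as a single decision function. The real client was JavaScript; this Python version is only an illustration, and the names `PollPlan` and `plan_poll`, as well as the 15-second default, are assumptions. The two URL shapes are the ones used in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PollPlan:
    url: str
    delay_seconds: float   # pause before issuing the next request


def plan_poll(filename: str, realtime: bool, timer_seconds: float = 15.0) -> PollPlan:
    """Pick the URL and the pause between requests for the chosen mode."""
    if realtime:
        # Long-poll: the server holds the request, so re-issue it immediately.
        return PollPlan(url=f"/fairy/{filename}", delay_seconds=0.0)
    # Timer mode: fetch the static file, then wait for the user-chosen period.
    return PollPlan(url=f"/{filename}", delay_seconds=timer_seconds)
```

The response handling stays identical in both modes, which is why the fallback costs almost nothing to keep.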

System components:

- home page: a shell with all the client code, ideally loaded once

- dynamic data in the form of two files, standings.js (the table) and chat.js (the last 30 chat messages); the client pulls them either on a timer or via long-poll requests

- a daemon written in PHP, running continuously and independently of Apache, which downloads and parses the official table every 3 seconds and saves it to standings.js

- a PHP script that accepts chat messages (also via ajax) and regenerates chat.js

- nginx_http_push_module, to which the two scripts above also pushed updates (the gzip-compressed contents of the corresponding files), and to which clients in “real-time” mode were connected.
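
The publishing step performed by the two scripts can be sketched as follows. The originals were PHP; this Python version only builds the request, and the function name `build_publish_request`, the `base_url` parameter and the `Content-Type` value are assumptions. The `/fairy/post/<name>` path is the publisher URL used in this post.

```python
import gzip
import urllib.request


def build_publish_request(base_url: str, channel: str,
                          payload: bytes) -> urllib.request.Request:
    """Build a POST whose body is the gzip-compressed file contents."""
    body = gzip.compress(payload)          # compressed once, served to everyone as is
    return urllib.request.Request(
        url=f"{base_url}/fairy/post/{channel}",
        data=body,
        method="POST",
        headers={"Content-Type": "text/plain"},   # assumed; not stated in the post
    )
```

Sending it is then a single `urllib.request.urlopen(request)` call, made right after the file on disk has been updated.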

The nginx configuration that implements the above logic:

    location ~ ^/fairy/post/([a-z0-9._-]+)$ {
        access_log /var/log/nginx-fairy.log main;
        push_publisher;
        set $push_channel_id $1; # so that channels are named after the file
        push_message_timeout 2h; # keep messages longer
        push_min_message_recipients 0;
        push_message_buffer_length 1; # no message queue logic is needed, the latest message is enough
    }

    location ~ ^/fairy/([a-z0-9._-]+)$ {
        access_log /var/log/nginx-fairy.log main;
        push_subscriber;
        push_authorized_channels_only on; # forbid reading from non-existent channels
        push_subscriber_concurrency broadcast; # channels are shared, not per-user
        set $push_channel_id $1;
        default_type text/plain;
        add_header Content-Encoding gzip; # emulate gzip_static
        expires epoch; # see below about caching
    }

(why /fairy? because the name “comet” annoys me :))

As a result, the “channels” emulated ordinary js files: /fairy/standings.js served the same content as /standings.js (the static file), but “hung” until the next update, and updates were published via POST /fairy/post/standings.js. A simple and clear URL scheme.

Some thoughts on good and evil

1. gzip is good

The official table (50K of HTML, refreshed via meta refresh every 30 seconds) had no gzip at all, and as a result its bandwidth ran out.

(to the organizers' credit, they later set up a mirror for it where gzip did appear)

2. gzip_static is good

Why compress all those megabits of outgoing traffic on the fly and spend CPU on it, when you can create a .gz file next to each updated file and tell nginx to serve it if the client supports it?
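
A minimal sketch of the precompression step, assuming the updater is free to write a sibling file; the function name `write_gzip_sibling` is hypothetical. Copying the modification time follows the recommendation in the nginx gzip_static documentation. For brevity the .gz file is written in place, so the rename approach from item 3 below still applies to it.

```python
import gzip
import os


def write_gzip_sibling(path: str) -> str:
    """Write path + '.gz' next to path and return the new file's name."""
    gz_path = path + ".gz"
    with open(path, "rb") as src:
        data = src.read()
    with open(gz_path, "wb") as dst:
        # Maximum compression is affordable: it runs once per update, not per request.
        dst.write(gzip.compress(data, compresslevel=9))
    # Give both files the same timestamps, as the nginx docs recommend.
    st = os.stat(path)
    os.utime(gz_path, ns=(st.st_atime_ns, st.st_mtime_ns))
    return gz_path
```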

3. updating a file by overwriting it (instead of writing a new file next to it + rename) is evil

If someone downloads it at that moment (and someone WILL), trouble begins.

The official table was updated exactly this way, which is why users sometimes saw a “torn” table. If my script had merely passed it along in that state, it would not have mattered much, but it also computed the difference between the new and previous versions of the table and posted information about new “pluses” to the chat. As a result, the chat was periodically flooded with a wave of false positives, which shocked unprepared viewers :)

(I managed to patch it on the fly by adding extra checks)
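
The “write next to it + rename” approach can be sketched like this. The helper name `atomic_write` and the 0644 permission are assumptions; the key point is that the temporary file lives in the same directory, so the final rename never exposes a half-written file to readers.

```python
import os
import tempfile


def atomic_write(path: str, data: bytes) -> None:
    """Replace `path` with `data` so that readers see either the old or the new file."""
    directory = os.path.dirname(os.path.abspath(path))
    # Same directory as the target, so the rename stays on one filesystem.
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".tmp-")
    try:
        with os.fdopen(fd, "wb") as tmp:
            tmp.write(data)
            tmp.flush()
            os.fsync(tmp.fileno())
        # mkstemp creates the file as 0600; a web server usually needs read access.
        os.chmod(tmp_path, 0o644)
        os.replace(tmp_path, path)     # atomic on POSIX within one filesystem
    except BaseException:
        # Remove the temporary file if anything failed before the rename.
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise
```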

4. load time optimization is good

All the client code weighs less than 15K gzipped. For this reason I did not use my beloved jQuery or other frameworks: I do not like it when the framework weighs twice as much as everything else combined :)

Also, to avoid “loading...” placeholders on the first visit, the data files were included in the page via <script src="..."> and loaded without waiting for onload / document ready.

As a result, the fully assembled table plus the latest chat messages loaded from scratch in 0.2–0.3 seconds for me, including the ping to the server (according to Firebug), which was faster than Google's home page :)

5. properly configured client caching is good

To this day I very often see crutches such as appending something like &random=74925 to the query...

Meanwhile, it is enough to set the Expires header to a time in the past so that the browser always makes a request (in nginx this takes a single line, expires epoch), and to teach the client script to handle a 304 response.

You also need to make sure that ajax requests send a correct If-Modified-Since; nginx_http_push_module, for one, relies on it heavily.

I set it to the value of the Last-Modified header from the last response received from the same URL (normally the browser does this itself, but not if the user has caching turned off).
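
The client-side bookkeeping described above can be sketched in Python as follows. The real client was JavaScript; `ConditionalPoller`, `urllib_fetch` and the injectable `fetch` parameter are hypothetical names used only for illustration.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Optional, Tuple

Response = Tuple[int, Dict[str, str], bytes]   # (status, headers, body)


def urllib_fetch(url: str, headers: Dict[str, str]) -> Response:
    """Default transport; urllib raises HTTPError on 304, so translate it."""
    request = urllib.request.Request(url, headers=headers)
    try:
        with urllib.request.urlopen(request) as resp:
            return resp.status, dict(resp.headers.items()), resp.read()
    except urllib.error.HTTPError as err:
        if err.code == 304:
            return 304, dict(err.headers.items()), b""
        raise


class ConditionalPoller:
    """Remembers Last-Modified and replays it as If-Modified-Since."""

    def __init__(self, url: str,
                 fetch: Callable[[str, Dict[str, str]], Response] = urllib_fetch) -> None:
        self._url = url
        self._fetch = fetch
        self._last_modified: Optional[str] = None

    def poll(self) -> Optional[bytes]:
        """Return the new body, or None if the server answered 304."""
        headers: Dict[str, str] = {}
        if self._last_modified is not None:
            headers["If-Modified-Since"] = self._last_modified
        status, resp_headers, body = self._fetch(self._url, headers)
        if status == 304:
            return None                      # nothing changed, keep the old data
        lowered = {k.lower(): v for k, v in resp_headers.items()}
        if "last-modified" in lowered:
            self._last_modified = lowered["last-modified"]
        return body
```

The same object works for both the static file and the long-poll URL, since both are driven by the same two headers.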

6. the lack of OpenID is evil

Chat participants could sign with their TopCoder handles (so that their names were highlighted in the corresponding color), and I had no way to verify their authenticity without a dedicated registration (which is not feasible for a script written in one night). Still, most participants behaved surprisingly decently, though the most unruly characters had to be treated with an IP ban :)

Unfortunately, because of them I had to turn the chat off at the end, when I finally decided to get some sleep: I simply did not dare leave everything unmoderated.

7. dropping IE6 support is good

Having discovered shortly before the start that the chat did not work in everyone's “favorite” browser (apparently because its XMLHttpRequest is slightly incompatible in the details), I hung up a sign with a clear conscience, along the lines of “sorry, some things may not work in IE6, please upgrade already”, and turned to things more useful for the other users.

I think this is the right way to go, since the main function, displaying the results table, kept working even there.

That seems to have come out rather long. But I hope my experience will be useful to someone.

And yes, PPNH :)

Source: https://habr.com/ru/post/83385/
