The computer of your dreams. Part 3: Hidden Horizons

Part 1 | Part 2

Both are required reading - this material is their direct continuation.

The previous part of the article examined the key components of a modern PC, but drew no final conclusion. Yes, we looked at the most important characteristics and their impact on performance. Knowing this, you can look over the available range and find the right device. But higher performance always costs more, and nobody likes paying extra ...

Are there ways to bridge the gap between the lower and higher models of hardware, or simply to increase system performance without paying extra for it? There definitely are =)

- Hot Finnish guys have pushed a Radeon to 1 GHz!

- So now nobody can catch it?

Overclocking is the operation of computer components outside their rated modes in order to increase their speed. It is a very popular pastime among hardware enthusiasts, and at the same time the source of a huge number of disputes and holy wars =)

Very often, when I recommend new hardware to someone with further overclocking in mind, I hear in response: "No, I won't overclock anything!" Convincing people is very difficult, sometimes almost impossible.

To understand this attitude, you first need to figure out what overclocking actually is in practice.

There are two main directions:

Extreme overclocking

This pursuit is about squeezing every last bit of potential out of the hardware by any means. The ultimate goal is to set records for maximum frequency or for the maximum number of "parrots" (points) in some synthetic benchmark. All possible means are used to get there: extreme cooling (multistage freon units, dry ice, liquid nitrogen, liquid helium), modification of components, and so on. Hardware selection for such a system is quite strict, down to hand-picked individual samples, and the overclocker needs a fairly large theoretical base and solid practical experience. Extreme overclocking is quite risky: killing a piece of hardware through inexperience is easy in such conditions. And the main thing - this pursuit has no practical use; it is aimed exclusively at setting records, demonstrating the extreme capabilities of hardware and simply having fun.

Home overclocking

Home overclocking is quite another matter. Its ultimate goal is to increase the performance of the hardware you have while counting on (relatively) long-term trouble-free operation. Selecting hardware for a system "meant for overclocking" comes down to buying more "forgiving" and better-suited components. That said, nothing stops you from overclocking whatever comes to hand ;) The overclocker needs to know only the basic principles and methods of overclocking, plus some peculiarities of the specific hardware. With properly chosen components the risk is minimal - thanks to various protection mechanisms, a modern system is rather hard to kill even on purpose. And most importantly, unlike extreme overclocking, home overclocking has a clear practical benefit - a free performance boost =)

All the stereotypes about overclocking, as well as the dislike for it, arise because people associate the word "overclocking" exclusively with the first option - extreme overclocking. Or with unfortunate experience from the old days, when even home overclocking was a rather complicated procedure and extreme overclocking was not even talked about. Today, home overclocking is nothing special. It is what this article is about.

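What? Where? When?

Before turning to the overclocking of individual components, a few words on the technical side are unavoidable. What allows this phenomenon to exist at all?

The answer lies in how semiconductor products are manufactured, specifically in the stages of quality control and rejection of unusable chips.

You have probably heard of quality control more than once. In essence, it is a series of tests that determine whether the finished chips comply with the required characteristics.

The following is important here:

1. A multi-level test system is applied. First, chips are tested against the maximum parameters possible for their revision: frequency, correct functioning of all blocks, and so on. If a chip fails, a softer test is applied to select chips that will later be labeled as a lower model in the line.

2. Quality control is done in batches. Of course, each chip is tested (at least the manufacturers say so), but given the production volumes the factory still has to work with batches. If even one chip in a batch fails the top-level test, that is enough to send the ENTIRE batch down to the next level. And the better tuned the manufacturing process, the fewer "failing" chips per batch ;)

3. Further tuning of the manufacturing process can lead to only a tiny percentage of the dies failing the top-level tests. Roughly speaking, nearly all of them could be labeled and sold as the top model. For obvious reasons this is not done, and chips that passed the "top" tests are still labeled as lower models.

These three statements lead to a logical conclusion: lower chip models obtained by rejection can, with fairly high probability, run at the parameters of the higher models.

We now turn to more practical matters and consider overclocking using the main system components discussed in the previous part as examples.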

I will not describe a detailed overclocking procedure here, only the general principles. The main thing is to understand the essence of the process and the benefits it can bring.

As you know, the CPU core frequency is obtained by multiplying the (real, not effective) frequency of the system bus or base clock generator by the CPU multiplier. For example, the Intel Pentium DC E5200 runs at 2500 MHz with an FSB of 200 MHz and a multiplier of 12.5.

To change the final frequency, you therefore need to raise either the bus frequency or the multiplier.
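The relation above can be sketched in a few lines of Python. The function name and the 266 MHz bus setting are illustrative assumptions; only the E5200 figures (200 MHz, x12.5) come from the text.

```python
def core_frequency_mhz(bus_mhz: float, multiplier: float) -> float:
    """Core clock = real (not effective) bus clock x CPU multiplier."""
    return bus_mhz * multiplier

# Pentium DC E5200 at stock settings, as given in the text.
stock = core_frequency_mhz(200, 12.5)

# Hypothetical overclock: same multiplier, bus raised to 266 MHz.
raised_bus = core_frequency_mhz(266, 12.5)

print(stock, raised_bus)
```

With a locked multiplier, the bus frequency is the only knob left, which is why the rest of this part talks mostly about raising the bus.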

Speaking of the multiplier. In the old days, a multiplier unlocked upward was almost the only way to overclock a CPU. The reason lay in the motherboards - they were not built for running hardware outside its rated conditions. Now the picture is completely different - even the cheapest boards let you change the basic parameters and can hold a more or less good overclock, not to mention mid-range devices, let alone special overclocking models. So for home overclocking, an unlocked multiplier is unlikely to give any special advantage. One could recall the FSB Strap problem (the chipset switching its operating mode, with a sharp drop in performance, once a certain FSB frequency is reached) on Intel P945 and P965 - but that is a thing of the past.

The classic way to overclock processors and RAM is to change their operating parameters in BIOS Setup.

The frequency / multiplier is raised in steps (thanks to the good overclocking potential of modern CPUs, the first steps are usually large and become smaller as the frequency rises). First, you find the maximum setting at which the computer can still boot the operating system. Then the load-testing phase begins. The most common and effective CPU / memory stress tests are Linpack, S&M, OCCT and the Everest Stress Test. Depending on whether they pass, the frequency / multiplier is lowered until the system is stable.
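The procedure can be sketched as a simple search. This is only an illustration of the logic, not a tool: in reality every "check" is a manual reboot or a long stress-test run, and the two predicate functions, the step sizes and the simulated limits below are all made-up assumptions.

```python
from typing import Callable, Sequence

def find_stable_bus_mhz(
    stock_mhz: int,
    boots: Callable[[int], bool],
    passes_stress_test: Callable[[int], bool],
    steps: Sequence[int] = (20, 5),
) -> int:
    """Raise the bus in shrinking steps while the OS still boots,
    then back off until the stress test passes."""
    bus = stock_mhz
    for step in steps:                  # large steps first, smaller ones later
        while boots(bus + step):
            bus += step
    while bus > stock_mhz and not passes_stress_test(bus):
        bus -= steps[-1]                # lower the setting until it is stable
    return max(bus, stock_mhz)

# Simulated hardware (hypothetical limits): boots up to 300 MHz,
# but survives the stress test only up to 285 MHz.
result = find_stable_bus_mhz(200, lambda mhz: mhz <= 300, lambda mhz: mhz <= 285)
print(result)
```

The gap between the "boots" limit and the "stable" limit is the reason the stress-test phase cannot be skipped.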

An auxiliary tool is the core voltage: raising it can significantly improve the result. The safe range of increase is 5-15% of the nominal value; a higher voltage can lead to degradation of the die or even its failure. Another unpleasant point is that on some motherboards, setting any voltage other than Auto disables the power-saving technologies (which are useful not only for saving energy but also slightly prolong the processor's life). However, this is rare nowadays.

The alternative is overclocking directly from within Windows using special utilities (for example, SetFSB). It is not recommended (although sometimes there is no other way).

While overclocking the CPU, it is recommended to run the RAM in the gentlest mode - at settings close to the factory ones. That is, of course, if the overclocking potential of the specific memory modules is not known in advance (by the way, finding it out first is the right approach).

Now about the frequency potential of CPUs. It depends directly on the specific core and its stepping, and for modern processors it is quite high - if in the old days 30% was considered a very good gain, lately hardware enthusiasts turn up their noses at CPUs unable to run at one and a half times the nominal frequency.

AMD K8 and AMD K10 have modest capabilities by today's standards - their maximum frequencies only slightly exceed 3 GHz, which makes overclocking the top models pointless. This, however, does not stop you from pushing the lower CPUs to the level of the top ones.

On average, AMD K10.5 overclocks to 3.2-3.6 GHz, in some cases crossing the 4 GHz threshold.

Intel Conroe reaches frequencies from 3 to 4 GHz. A good result for the latest steppings is 3.2-3.6 GHz.

Intel Wolfdale easily copes with 3.5-4 GHz, and many samples reach 4.2-4.5 GHz.

At the same time, because of their design, quad-core processors based on Conroe and Wolfdale overclock worse than their dual-core counterparts. The Q8xxx line is especially frustrating, often unable to reach 3.5 GHz.

Intel Nehalem in all its variations easily copes with 3.8-4 GHz, in some cases 4.2-4.5 GHz.

Note that all these numbers were obtained on completely ordinary systems with completely ordinary motherboards and ordinary (albeit good) air cooling. No liquid nitrogen and no hardware modifications. Moreover, the lower bounds of these ranges are often reachable even on stock boxed coolers and without raising the core voltage.

In general, there is plenty of room to work with.

It remains to deal with the benefits. Comparing the nominal frequencies of modern processors with their potential, we can conclude that overclocking makes sense in any case, and it is foolish to ignore it even if you start with a powerful processor.

But this material is still devoted to the problem of choosing a computer, so we’ll put the question a little differently: is it worth saving on a processor to free up financial resources for other components of the system?

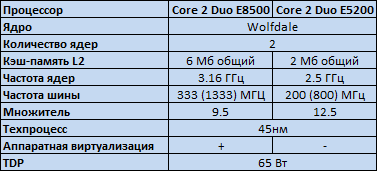

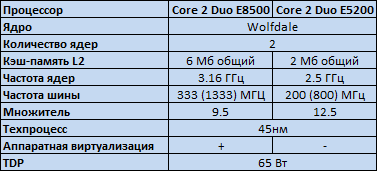

As an example, consider the Core 2 Duo E8500, a typical choice for performance systems on the S775 platform, and the Pentium DC E5200, a representative of the lower line whose performance is still not cut down too much.

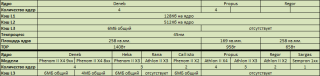

Both processors are built on the same Wolfdale core. Their key differences:

So, the E8500 has a 26% higher frequency and a 6 MB cache in its favor. Let's go back to the previous article and see what this difference gives in practice.

The maximum gain from the higher frequency is linear, i.e. the same 26%. The maximum gain from increasing the cache to 6 MB is 14%.

The task: raise E5200 performance to the E8500 level by overclocking.

A simple calculation (2500 * 1.26 * 1.14) shows that about 3.6 GHz is enough for this. That is a normal figure for Wolfdale. Even allowing for the fact that not all applications scale linearly with frequency, in theory everything looks wonderful. Practice, however, tends to disagree with theory.
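The same estimate, spelled out as a small Python sketch. It assumes, as the text does, that both gains are best-case figures and that performance scales linearly with frequency; the function name is illustrative.

```python
def required_frequency_mhz(base_mhz: float, *gains: float) -> float:
    """Frequency needed to cover several multiplicative performance gains,
    assuming performance scales linearly with clock speed."""
    target = base_mhz
    for gain in gains:
        target *= gain
    return target

# E5200 stock clock, +26% for the E8500's frequency, +14% for its larger cache.
target_mhz = required_frequency_mhz(2500, 1.26, 1.14)
overclock_percent = (target_mhz / 2500 - 1) * 100

print(round(target_mhz), round(overclock_percent))
```

So the target is roughly 3.6 GHz, i.e. an overclock of about 44% over stock.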

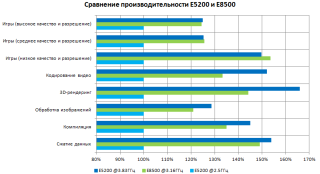

Let's return to the test results. Fortunately, an article turned out to be at hand whose test participants include exactly the devices we need - the E8500 at stock frequency and the Pentium DC E5200 overclocked to 3.83 GHz (+53%).

Overall, everything is clear without explanation. The E5200 reached the performance of its older brother, and in some places delivered an even more impressive result.

Now look at the prices. As the source I will use the price list of Nix. The company is old and reputable, with good prices, a rich assortment and a convenient price list.

201 dollars against 69. The difference is almost threefold.

But the older model still has one more advantage: as we remember, the E5200 lacks support for Intel VT hardware virtualization.

First it is important to understand: do you really need it? And what is it for anyway?

Hardware virtualization is useful (in terms of performance) when several virtual machines must run continuously.

Hardware virtualization is necessary if you need to run a 64-bit guest OS on Intel processors - because of how the EM64T instruction set is implemented, this cannot be done without it. AMD processors have no such problem.

If the cases listed above do not concern you and you plan to use a virtual OS only occasionally - to check a suspicious file for viruses, for instance - hardware virtualization support is not needed at all.

Suppose you did find an urgent need for Intel VT.

Let's return to the price list.

Slightly higher frequency, about a $10 difference in price, and ... support for Intel VT. Like the Core 2 Duo E8xxx (with the exception of the E8190), the Pentium DC E6xxx line (not to be confused with the Core 2 Duo E6xxx - older models on the Conroe core) supports full Intel VT. A closer look at the specs also reveals the option in some revisions of the E5300 and E5400.

I will not give a similar example for AMD processors - anyone can open the price list, compare the specifications, do the elementary calculations and draw a conclusion. But I cannot fail to mention the competing camp - after all, its recent products can please the overclocker with something more interesting than a mere frequency increase.

The roots of unlocking grow from the same place as ordinary frequency overclocking - the quality control process. It is simply not profitable for the manufacturer to keep a large zoo of different cores, so the factory produces only a few kinds of dies and disables the "extra" blocks of low-end models at the microcode level. Screening follows the same principle as with frequency, so a disabled block is not necessarily a faulty one.

The problem is that, unlike frequency, we cannot simply turn these blocks back on - we have to look for loopholes and undocumented features. And it is AMD that has already become famous for several such incidents with its CPUs.

Back in the days of the AMD K7 processors (Athlon, Athlon XP, Duron), enthusiasts often unlocked the multiplier, enabled the disabled half of the L2 cache and turned the Athlon XP into an Athlon MP (which supports dual-processor systems). To do this, certain contacts on the processor package had to be bridged. Having found out, AMD began putting spokes in the wheels, but enthusiasts still found ways to get freebies.

After that, little was heard about unlocking. In the K8 era, isolated cases occasionally flashed in the news, but it was all very doubtful and never became widespread.

The same held for the K10 generation and could have continued for the most modern K10.5, but the calm was broken.

It all started when AMD released the new SB750 and SB710 south bridges, one of whose features was Advanced Clock Calibration (ACC), designed to improve processor stability during overclocking.

Because of an error in the implementation of this function, a very pleasant side effect appeared. It was first discovered on the Phenom II X3 720 Black Edition (a special overclocking version with an unlocked multiplier) and consisted in enabling the fourth core, which was initially inaccessible. Considerable excitement followed. At first this ability was attributed to the Black Edition series only, but later it turned out that AMD processors in general are affected, and not only the modern K10.5 but also the previous K10 generation.

In general, you can unlock all the features of the core on which the processor is built.

For example, for K10.5 processors, the picture is as follows:

In some cases you can unlock additional cache, in others additional cores, and in some - everything at once.

Phenom II X2 and Athlon II X3 on the Rana core look very interesting in this respect. The probability of getting a full-fledged Phenom II X4 out of them is quite high, although unsuccessful samples do occur. If you want to play it safe, the Phenom II X3 is a more reliable option - almost all of them can be unlocked.

And again, this is a chance to get not merely the same performance but the actual higher-end processor for a much lower price.

All these arguments do not give a definitive answer to the question "which processor to choose", but certain conclusions can be drawn. In the case of the Core 2 Duo, for example, at equal overclocks the difference between the higher and lower processors comes down to the amount of cache, which matters less than their initial differences.

The processor is no longer the component that always comes first. Trying to speed up the system by investing as much as possible in it does not always bring the expected results. Choosing the processor wisely and then overclocking it can save a serious amount, which can be spent on strengthening other components or simply kept for other purposes.

RAM can be overclocked in two directions - raising the frequency and lowering the timings.

In general, the previous article concluded that, with today's memory characteristics, both parameters have little effect on overall system performance.

However, memory overclocking may be necessary even when it was not planned in principle.

The problem is that the RAM frequency is not set by a separate clock generator but is tied to the frequency of the system bus or base clock generator through a divider. The best option, of course, is a 1:1 divider, but in practice this mode is not always possible. For example, Core 2 Duo E8xxx processors have a real FSB frequency of 333 MHz, while the most common DDR2 modules are rated at 800 MHz effective (400 MHz real). A divider other than 1:1 is needed even without overclocking. The set of its values is fixed and depends on the motherboard - on budget products it is very limited (especially downward). So, when overclocking the processor via the bus, sooner or later we reach a point where, even with the lowest available divider, the RAM frequency begins to exceed its default. And as we keep raising the bus frequency, we inevitably overclock the memory too.
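A rough sketch of how the divider ties memory speed to the bus. The function name and the 5:6 ratio are illustrative assumptions (the actual set of ratios depends on the motherboard); the doubling reflects that DDR memory transfers data twice per clock.

```python
from fractions import Fraction

def memory_effective_mhz(fsb_mhz: int, fsb_to_dram: Fraction) -> float:
    """Effective DDR speed for a given real FSB clock and FSB:DRAM ratio."""
    real_dram_mhz = fsb_mhz / fsb_to_dram   # DRAM clock = FSB clock / ratio
    return float(real_dram_mhz * 2)         # DDR: two transfers per clock

# Stock E8xxx bus with a hypothetical 5:6 ratio: memory runs near DDR2-800.
print(memory_effective_mhz(333, Fraction(5, 6)))

# With 1:1 as the lowest ratio, the memory hits its rating at a 400 MHz bus...
print(memory_effective_mhz(400, Fraction(1, 1)))

# ...and every further bus increase overclocks the memory as well.
print(memory_effective_mhz(450, Fraction(1, 1)))
```

This is why the memory's own limits start to matter once the bus goes beyond what the lowest divider can compensate for.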

Naturally, memory also has its own frequency ceiling. It depends on the memory chips used (detailed information can be found on specialized resources) and is found experimentally.

If the ceiling is reached, you can try to raise it. This is done by loosening (raising) the timings or by raising the voltage on the memory modules. Naturally, all this again helps only up to a point. Every memory module has its own limit beyond which nothing will help raise the frequency. And do not forget that the voltage cannot be raised indefinitely either, only by 5-15% of nominal (for example, for DDR2 modules with a native voltage of 1.8 V, going above 2.1 V is not recommended).
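The DDR2 figure follows directly from the 15% rule of thumb. A minimal sketch, with an illustrative function name and the 15% ceiling taken from the text as a default:

```python
def max_recommended_voltage(nominal_v: float, max_increase: float = 0.15) -> float:
    """Upper bound of the 'safe' range: nominal voltage plus at most 15%."""
    return nominal_v * (1 + max_increase)

# DDR2 with a nominal 1.8 V.
ddr2_limit = max_recommended_voltage(1.8)
print(round(ddr2_limit, 2))
```

The result, about 2.07 V, is what the text rounds to 2.1 V. The same rule applies to the CPU core voltage discussed earlier; only the nominal value differs.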

If the dividers let you set a frequency not much higher than the standard one (or not higher at all), you can try playing with the timings. Most modules allow them to be lowered by 1-2 steps with a slight voltage increase. Of course, the performance gain will not be great - 5% at best - but then you do not have to pay extra for it ;)

In general, modern RAM overclocks very well. Buying special overclocking modules usually improves the odds of success. The main thing is not to overdo it, because such modules are sometimes several times more expensive than standard ones, and the conclusions about how memory affects system performance were drawn in the previous article.

Another important point when overclocking memory: failures in the RAM subsystem can lead to read/write errors and therefore to data loss on storage drives. I have personally run into this twice - overclocking the memory destroyed the partition table on the system HDD. So for the duration of the exercise it is better to disconnect hard drives holding important data, and to keep at hand a boot disc / USB flash drive with a partition manager and a recovery utility.

With the video card, the situation is rather interesting. Unlike with CPUs, the end products are released not by the GPU manufacturers themselves but by partner companies, the so-called subvendors. Of course, there is a reference design (and reference specifications), but nobody is strictly obliged to follow it.

Subvendors have a wide field of activity. They can release a completely unique product, keeping only the GPU and the general logic of the design from the original. Moreover, any change can go either way - toward improvement or, on the contrary, toward simplification.

Therefore, when buying a video card you need to choose the final model carefully - despite seemingly similar names, the cards can differ a great deal.

What to choose - reference or non-reference - is up to each buyer and generally depends on the specific case. Some products are good from the start and need no improvement, while others are not entirely successful. The problem is especially acute for the cooling systems of mid-priced products - they do their direct job, but as a rule make a lot of noise. And if you overclock, their capacity may not be enough. Examples of such "bad" designs are the reference GeForce 8800GT / 9800GT and Radeon HD4850 / 4830. Examples of products that are good from the start are the GeForce 9800GTX and Radeon HD5700.

In addition to changing the design of the video card itself, subvendors love to release products with non-standard frequencies (and other parameters). There are two approaches to this.

The first is simple. The GPU frequency is raised by 50 MHz, the memory by 100, ten or twenty bucks are added to the price and the device goes on the shelf. For the subvendor the situation is generally win-win - almost every video card can withstand such minor frequency changes, i.e. the risk is minimal. The performance gain is not serious, but among a certain audience such cards sell "with a bang".

The other approach is more honest. First, it rarely goes without a custom PCB and cooling system. The frequencies are raised more seriously. Sometimes chips with the best frequency potential are even specially selected. Such a product can be noticeably more expensive than the stock version, but the differences are more noticeable too.

The situation may also be reversed. Many manufacturers do not miss the chance to profit from fans of all things eco-green by releasing devices with ECO or Green suffixes in the name. They differ from the reference versions by lower power consumption, which, as you might guess, is achieved by lowering the operating frequencies.

Sometimes a video card gets slower memory than the reference design, or a cut-down memory bus. The price is then lower, but these changes are not always reflected in the name. Interestingly, not only "second-tier vendors" have been caught doing this, but also eminent companies such as ASUS (the Magic video cards).

Overclocking a video card consists in raising the frequencies of the GPU and the video memory.

The most common way is with special tools. You can use both the manufacturers' own software and alternative universal solutions (RivaTuner, ATI Tray Tools). Personally I prefer the latter, although sometimes the vendor utilities are better and more convenient.

Another technique is flashing the changed parameters directly into the video card BIOS. This is usually done after the highest stable frequencies have been found. The goal is clear - "flash and forget" =)

Again, you can raise the frequency ceiling by raising the voltage. This is harder. Some video cards let you control core and memory voltage with the same vendor utilities. On some, the desired value can be flashed into the BIOS. There are exotic products that switch the voltage by moving jumpers on the card. And a very significant part of the video card zoo consists of devices where voltage control requires some knowledge of electronics and the ability to handle a soldering iron.

Stability testing of video cards includes two main parts:

Test for artifacts and overheating. The most effective here are the "furry donuts" - FurMark and OCCT 3. ATITool's "furry cube" does not heat the hardware as much, but detects image artifacts faster. As an alternative (or better, an addition), multiple runs of the main 3DMark game tests or simply demanding games can be recommended;

Test for freezes. The first tests of 3DMark 05/06, as well as some games, reveal them quite well. From personal experience: NFS ProStreet hung on a card that had successfully survived 15 minutes under the donut and 10 passes of the 3DMark 06 game tests.

As for unlocking, here again the freebies live in the AMD camp. The phenomenon is infrequent, but successful cases occasionally flash in the news, and modified BIOSes that re-enable disabled blocks appear on the network. One recent example is the unlocking of all 800 shader units on some HD4830 cards, which effectively turned them into the full-fledged, more expensive HD4850.

In general, a video card is not something worth saving on when it comes to games. Overclocking and other manipulations will not bring lower models up to the level of the top ones, but they should not be ignored either. Overclocking improves system performance, and that applies to all video cards. Mid-range devices made by cutting down higher models overclock well by definition, while lower cards have a low TDP, which also helps overclocking. I will not give examples and graphs - fortunately, there are plenty of relevant reviews on the network.

And a small note about multi-GPU systems. Multi-chip video cards have a noticeably higher TDP than single-chip ones, which makes them much harder to cool. Installing several video cards close together raises the temperature of the surrounding space. In both cases, overclocking involves additional difficulties and the cards show worse results than in single configurations.

When components are overclocked (especially with raised voltage), their power consumption and heat output increase. This has important consequences:

1. More efficient cooling is required for overclocked components;

2. An overclocked system requires a more powerful power supply.

It is neglect of these two consequences that causes most problems during overclocking.

3. An overclocked system is less economical in terms of power consumption.

And this calls into question the wisdom of overclocking laptops.

A separate part of this material will be devoted to a detailed analysis of power and cooling. For now, it is enough to remember and absorb these notes.

Also, hardware failure caused by overclocking is not covered by warranty. Although in clumsy hands anything can break ;)

Knowing some hardware subtleties opens new, previously hidden horizons to the user.

Overclocking is a great way to improve system performance without losing anything, and ignoring such an opportunity is real blasphemy.

With this, the problem of choosing the key components can be considered solved. Of course, not all questions were answered, but some clarity has been brought to the situation. If something is completely unclear, I am ready to answer in the comments ;)

The next part of the material will be devoted to another very important component of the system - the motherboard.

P.S. I apologize for the two-week delay - I just could not get the article into shape. Either I was busy, or the writing simply would not come. I finished it only today. I seem to have more free time now, so the next parts should come faster =)

P.P.S. Once again I remind you that this series of articles is aimed not at hardware gurus, techno-maniacs and extreme overclockers, but at ordinary people who are close to IT yet poorly versed specifically in hardware.

PPPS

By reading are required - the material is their direct continuation.

In the previous part of the article, key components of a modern PC were considered, but no final conclusion was made. Yes, we looked at the most important characteristics and their impact on performance. Knowing this, you can take a look at the proposed range and find the right device. But for higher performance, in any case, you will have to pay more, and you don’t want to do it like that ...

Are there ways to overcome the differences between the younger and older models of hardware, or just to increase system performance without paying extra for it? Definitely there =)

Part 3. Hidden horizons

- Hot Finnish guys dispersed the Radeon to 1 GHz!

- Now can not catch up?

Overclocking is the operation of computer components in abnormal modes of operation in order to increase their speed. Occupation, very popular among zhelezyachnikov, and at the same time and the source of

Very often, when I advise someone to new hardware with a reference to further overclocking, in response, I hear “no, I won’t do anything!”. It is very difficult to convince people, sometimes it is almost impossible.

In order to understand this attitude, you first need to figure out what exactly is overclocking in practice.

In total there are two main areas:

Extreme overclocking

This lesson is aimed at squeezing all its potential out of iron by any means. The ultimate goal is to set records for the maximum frequency or the maximum number of “parrots” in any synthetic benchmark. To achieve the result, all possible means are used: extreme cooling (multistage freon, dry ice, liquid nitrogen, liquid helium), modification of components, etc. The selection of iron for such a system is quite tough - up to individual instances, and from the overclocker you need to possess a fairly large theoretical base and solid practical experience. Extreme overclocking is quite risky, to kill any piece of iron because of inexperience in such conditions is quite easy. Well and the main thing - this occupation has no practical use, it is aimed exclusively at setting records, demonstrating the extreme possibilities of iron and just getting a fan.

')

Household acceleration

It is quite another thing - household overclocking, the ultimate goal of which is to increase the productivity of existing iron with the expectation of long-term (relatively) promising work. The process of selecting iron for the system “under overclocking” is reduced to the purchase of more “loyal” and optimal components. However, no one bothers to overclock everything that comes to hand;) From the overclocker, knowledge of only the basic principles and methods of overclocking is required, as well as some features of specific hardware. The risk with proper selection of components is reduced to a minimum - as a consequence of the presence of various defense mechanisms, it is rather difficult to kill a modern system, even if it wants to be done on purpose. And most importantly, in contrast to extreme overclocking, home overclocking has a clear practical benefit - a free performance boost =)

All stereotypes associated with overclocking, as well as dislike for this activity, arise because of the association of the word “overclocking” in people exclusively with the first option - extreme overclocking. Or, with the unfortunate experience of bearded times, when even domestic overclocking was a rather complicated procedure, but they did not stutter on the extreme. At the present time, household overclocking is nothing special. I will talk about him in this article.

What? Where? When?

Before moving on to overclocking individual components, a few words about the technical side are in order. What makes overclocking possible at all?

The answer lies in how semiconductor products are manufactured, specifically in the quality control stage where unusable chips are rejected.

You have probably heard of quality control more than once. In essence, it is a series of tests that determine whether the chips meet the required characteristics.

The following points matter here:

1. Testing is multi-level. First, chips are tested against the maximum parameters for their revision - frequency, correct operation of all blocks, and so on. If a chip fails, a softer test is applied to select chips that will later be labeled as a lower model in the line.

2. Quality control is done in batches. Of course, every chip is tested (at least the manufacturers say so), but given the production volumes, the factory still has to operate on batches. If even one chip from a batch fails the top-level test, that is enough for the ENTIRE batch to go down to the next level. And the better tuned the manufacturing process is, the fewer "failing" chips there are in a batch ;)

3. Further tuning of the manufacturing process can lead to only a tiny percentage of dies failing the top-level tests. Roughly speaking, all of them could be labeled and sold as the top model. For obvious reasons this is not done, and chips that have passed the "top" tests are still labeled as lower models.

From these three statements a logical conclusion follows: lower models obtained through this rejection process can, with fairly high probability, run at the parameters of the higher models.
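To get a feel for point 2, here is a minimal sketch of the batch rule. It rests on assumptions that are mine, not the manufacturers': every chip passes the top-level test independently with the same probability, and one failure demotes the whole batch. The function name and the example numbers (99% pass rate, batches of 100) are purely illustrative.

```python
def hidden_top_grade_share(pass_rate: float, batch_size: int) -> float:
    """Share of chips in demoted batches that would pass the top-level test.

    Simplified model (an assumption, not real factory data): each chip
    passes independently with probability `pass_rate`, and a single
    failure sends the whole batch down one level.
    """
    batch_passes = pass_rate ** batch_size   # every chip in the batch passed
    demoted = 1.0 - batch_passes             # share of batches sent down
    if demoted == 0.0:
        return 0.0                           # nothing was demoted at all
    # Good chips overall minus good chips that stayed in passing batches.
    return (pass_rate - batch_passes) / demoted


share = hidden_top_grade_share(pass_rate=0.99, batch_size=100)
print(f"{share:.1%} of the chips sold as the lower model could pass the top test")
```

Under these made-up numbers almost every chip in a demoted batch is a perfectly good "top" chip, which is exactly the effect overclocking exploits.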

Now let's turn to more down-to-earth matters and look at overclocking using the main system components discussed in the previous part as examples.

I will not describe a detailed overclocking procedure here, only the general principles. The main thing is to understand the essence of the process and the benefits it can bring.

CPU

As you know, the CPU core frequency is obtained by multiplying the frequency (real, not effective) of the system bus, or of the base clock generator, by the CPU multiplier. For example, the Intel Pentium DC E5200 runs at 2500 MHz with a 200 MHz FSB and a multiplier of 12.5.

To change the final frequency you therefore have to raise either the bus frequency or the multiplier.
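The relationship is simple enough to write down as a tiny sketch. Only the E5200 stock values (200 MHz, x12.5) come from the text; the 266 MHz bus and the x13 multiplier are hypothetical settings chosen for illustration.

```python
def core_frequency_mhz(bus_mhz: float, multiplier: float) -> float:
    """Core clock = real (not effective) bus clock times the CPU multiplier."""
    return bus_mhz * multiplier


stock = core_frequency_mhz(200, 12.5)           # Pentium DC E5200 at stock
via_bus = core_frequency_mhz(266, 12.5)         # hypothetical: raise the bus only
via_multiplier = core_frequency_mhz(200, 13.0)  # hypothetical: raise the multiplier only

print(stock, via_bus, via_multiplier)
```

Note how much further a bus change goes than a single multiplier step, which is part of why the bus is the usual lever.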

About the multiplier. In ancient times, a multiplier unlocked upward was almost the only way to overclock a CPU. The reason lay in the motherboards, which were not designed to run hardware outside its rated conditions. Now the picture is completely different: even the cheapest boards let you change the basic parameters and can hold a more or less decent overclock, not to mention mid-range boards and especially dedicated overclocking models. So for everyday overclocking, an unlocked multiplier is unlikely to give any real advantage. One could recall the FSB Strap problem (the chipset switching its operating mode, with a sharp drop in performance, once a certain FSB frequency is reached) on Intel P945 and P965, but that is all in the past.

The classic way to overclock processors and RAM is to change their operating parameters in BIOS Setup.

The frequency or multiplier is raised in steps (since modern CPUs have good overclocking headroom, the first steps are usually large and get smaller as the frequency grows). First you find the highest setting at which the computer can still boot the operating system. Then the stress-testing phase begins. The most common and effective CPU and memory stress tests are Linpack, S&M, OCCT and the Everest Stress Test. Depending on whether they pass or fail, the frequency or multiplier is lowered until the system is stable.
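The procedure above can be sketched as a two-phase search. This is only an illustration of the logic, not a tool: in reality "boots" means rebooting with new BIOS settings and "passes the stress test" means an hour of Linpack or OCCT, so both are passed in here as hypothetical callbacks, and the step sizes and the 400 MHz ceiling are assumptions.

```python
from typing import Callable, Sequence


def find_stable_bus(stock_mhz: int,
                    boots: Callable[[int], bool],
                    passes_stress_test: Callable[[int], bool],
                    steps: Sequence[int] = (20, 10, 5),
                    limit_mhz: int = 400) -> int:
    """Return the highest bus frequency found to be stable.

    `boots` and `passes_stress_test` are hypothetical stand-ins for a manual
    reboot and a manual stress-test run at the given frequency.
    """
    freq = stock_mhz
    # Phase 1: climb while the OS still boots, large steps first.
    for step in steps:
        while freq + step <= limit_mhz and boots(freq + step):
            freq += step
    # Phase 2: back off in the smallest step until the stress test passes.
    while freq > stock_mhz and not passes_stress_test(freq):
        freq -= steps[-1]
    return freq


# Made-up chip: boots up to 310 MHz, but is only stable up to 290 MHz.
result = find_stable_bus(200, lambda f: f <= 310, lambda f: f <= 290)
print(result)  # 290
```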

An auxiliary tool is the core voltage; raising it can improve the result significantly. The safe range for an increase is 5-15% above nominal; a higher voltage can degrade the die or even kill it. Another annoyance is that on some motherboards, setting any voltage other than Auto disables the power-saving technologies (which are useful not only for saving power but also slightly extend the processor's life). This is rare nowadays, though.

The alternative is overclocking from within Windows using special utilities (for example, SetFSB). This is not recommended (although sometimes there is no other way).

While overclocking the CPU, it is best to run the RAM in the gentlest mode, at settings close to the factory ones. That is, of course, if the overclocking potential of your specific memory modules is not known in advance (finding it out first would, by the way, be the right approach).

Now about the CPU's frequency potential. It depends directly on the specific core and its stepping, and for modern processors it is quite high: in the old days a 30% gain was considered very good, whereas lately hardware enthusiasts turn up their noses at CPUs that cannot run at one and a half times their nominal frequency.

AMD K8 and AMD K10 have modest capabilities by today's standards - their maximum frequencies only slightly exceed 3 GHz, which makes overclocking the top models pointless. That does not, however, prevent pushing the lower models to the level of the top ones.

AMD K10.5 overclocks on average to 3.2-3.6 GHz, in some cases crossing the 4 GHz mark.

Intel Conroe reaches frequencies from 3 to 4 GHz. A good result for the latest steppings is 3.2-3.6 GHz.

Intel Wolfdale easily handles 3.5-4 GHz, and many samples reach 4.2-4.5 GHz.

At the same time, because of their design, the quad-core processors based on Conroe and Wolfdale overclock worse than their dual-core counterparts. The Q8xxx line is especially disappointing, often unable to reach 3.5 GHz.

Intel Nehalem in all its variations easily handles 3.8-4 GHz, in some cases 4.2-4.5 GHz.

Note that all of these numbers were obtained on completely ordinary systems with completely ordinary motherboards and ordinary (albeit good) air coolers. No liquid nitrogen and no hardware modifications. Moreover, the lower ends of these ranges are often reachable even on stock boxed coolers and without raising the core voltage.

In short, there is plenty of room to work with.

It remains to sort out the benefits. Comparing the nominal frequencies of modern processors with their potential, we can conclude that overclocking makes sense in any case, and ignoring it is foolish even if you buy a powerful processor to begin with.

But this material is still about choosing a computer, so let's put the question a little differently: is it worth saving on the processor to free up money for other components of the system?

As an example, take the Core 2 Duo E8500, a typical choice for performance-oriented systems on the LGA775 platform, and the Pentium DC E5200, a representative of the lower line that is still not too heavily cut down.

Both processors are built on the same Wolfdale core. The key differences: the E8500 has a 26% higher frequency and a 6 MB cache in its favor. Let's return to the previous article and see what this difference gives in practice.

The maximum gain from the higher frequency is linear, i.e. the same 26%. The maximum gain from increasing the cache to 6 MB is 14%.

The task: bring the E5200 up to E8500 performance by overclocking.

A simple calculation (2500 * 1.26 * 1.14) shows that about 3.6 GHz is enough. That is a normal figure for Wolfdale. Even allowing for the fact that not all applications scale linearly with frequency, in theory everything already looks wonderful. Practice, however, tends to disagree with theory.
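For those who want to redo the arithmetic with their own numbers, here is the same calculation as a small sketch. The two gain factors are the upper-bound figures quoted above; treating them as multiplicative, and the function name itself, are simplifying assumptions.

```python
E5200_STOCK_MHZ = 2500
FREQUENCY_GAIN = 1.26  # E8500 clock advantage, from the text
CACHE_GAIN = 1.14      # maximum gain from the 6 MB cache, from the text


def target_frequency_mhz(base_mhz: float, *gains: float) -> float:
    """Frequency needed to offset every listed gain, assuming linear scaling."""
    target = base_mhz
    for gain in gains:
        target *= gain
    return target


target = target_frequency_mhz(E5200_STOCK_MHZ, FREQUENCY_GAIN, CACHE_GAIN)
overclock_percent = (target / E5200_STOCK_MHZ - 1) * 100

print(f"target: {target:.0f} MHz, i.e. +{overclock_percent:.0f}% over stock")
```

This is a worst-case estimate: it assumes every application gets the full benefit of both the frequency and the cache.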

Let's return to the test results. Fortunately, the article at hand included exactly the devices we need among its participants: the E8500 at stock frequency and the Pentium DC E5200 overclocked to 3.83 GHz (+53%).

In general, everything is clear without explanation. The E5200 reached the performance of its older brother, and in places delivered an even more impressive result.

Now look at the prices. As a source I will use the Nix price list. The company is old and reputable, with good prices, a rich assortment and a convenient price list.

Intel Core 2 Duo E8500, 3.16 GHz / 6 MB / 1333 MHz, LGA775 - $201

Intel Pentium Dual-Core E5200, 2.5 GHz / 2 MB / 800 MHz, LGA775 - $69

$201 against $69. The difference is almost threefold.

But the older model has one more advantage: as we remember, the E5200 lacks support for Intel VT hardware virtualization.

The first thing to figure out is whether you really need it. And what is it for in the first place?

Hardware virtualization is useful (in terms of performance) when several virtual machines have to run continuously.

Hardware virtualization is necessary if you need to run a 64-bit guest OS on Intel processors - because of how the EM64T instruction set is implemented, this cannot be done without it. AMD processors have no such problem.

If none of the above applies to you and you plan to use a virtual machine only occasionally - to check a suspicious file for viruses, for instance - hardware virtualization support is not needed at all.

Suppose you did find that you really need Intel VT.

Let's return to the price list.

Intel Pentium E6300, 2.8 GHz / 2 MB / 1066 MHz, LGA775 - $81

A slightly higher frequency, about $10 more, and... support for Intel VT. Like the Core 2 Duo E8xxx (with the exception of the E8190), the Pentium DC E6xxx line (not to be confused with the Core 2 Duo E6xxx, older models on the Conroe core) fully supports Intel VT. A closer look at the specs reveals the same option in some revisions of the E5300 and E5400.

I will not give a similar example for AMD processors - anyone can open the price list, compare the specs, do the elementary calculations and draw a conclusion. But I cannot fail to mention the competing camp, because its recent products can please an overclocker with something more interesting than a mere frequency increase.

Unlocking

Unlocking grows from the same root as ordinary frequency overclocking: the quality control process. It is simply not profitable for the manufacturer to maintain a large zoo of different dies, so the factory produces only a few die variants and disables the "extra" blocks of low-end models at the microcode level. Binning follows the same principle as with frequency, so a disabled block is not necessarily a non-working one.

The problem is that, unlike with frequency, we cannot simply switch everything back on - we have to look for loopholes and undocumented features. And it is AMD that has become famous for several such incidents with its CPUs.

Back in the days of the AMD K7 processors (Athlon, Athlon XP, Duron), enthusiasts often unlocked the multiplier, re-enabled the disabled half of the L2 cache, and turned an Athlon XP into an Athlon MP (which supports dual-processor systems). To do this, certain contacts on the processor package had to be bridged. Having learned of this, AMD started putting spokes in the wheels, but enthusiasts still found ways to get their freebies.

After that, nothing much was heard about unlocking. During the reign of K8, isolated cases occasionally flashed through the news, but it was all very doubtful and never became widespread.

The same was true of the K10 generation, and it could have stayed that way for the most modern K10.5, but the peace was disturbed.

It all started when AMD released the new SB750 and SB710 south bridges, one of whose features was Advanced Clock Calibration (ACC), designed to improve processor stability during overclocking.

Due to an error in the implementation, this function turned out to have a very pleasant side effect. It was first discovered on the Phenom II X3 720 Black Edition (a special overclocking version with an unlocked multiplier) and consisted of enabling the fourth core, which was originally unavailable. Quite a stir followed. At first this was attributed to the Black Edition series, but it later turned out that AMD processors in general are susceptible - not only the modern K10.5 but also the previous K10 generation.

In general, you can unlock every feature of the core the processor is built on.

For example, for K10.5 processors, the picture is as follows:

On some models you can unlock additional cache, on others additional cores, and on others both at once.

The Phenom II X2 and the Athlon II X3 on the Rana core look very interesting in this respect. The chance of getting a full-fledged Phenom II X4 out of them is quite high, although unsuccessful samples do occur. If you want to play it safe, the Phenom II X3 is a more reliable option - these unlock almost without exception.

AMD Phenom II X4 945, 3.0 GHz / 2 + 6 MB / 4000 MHz, Socket AM3 - $189

AMD Phenom II X4 925, 2.8 GHz / 2 + 6 MB / 4000 MHz, Socket AM3 - $179

AMD Phenom II X3 720, 2.8 GHz / 1.5 + 6 MB / 4000 MHz, Socket AM3 - $144

AMD Athlon II X3 425, 2.7 GHz / 1.5 MB / 4000 MHz, Socket AM3 - $81

Once again, this is a chance to get not just the same performance but the actual higher-end processor for a much lower price.

None of this gives a definitive answer to the question "which processor to choose", but some conclusions can be drawn. In the case of the Core 2 Duo, for example, the difference between the higher and lower processors at the same overclock comes down to the difference in cache size, which matters far less than their initial differences.

The processor is no longer the component that always sits at the head of the table. Trying to speed up the system by pouring as much money as possible into it does not always bring the expected result. Choosing the processor wisely and then overclocking it can save a fairly serious sum, which can be spent on strengthening other components or simply kept for other purposes.

RAM

RAM can be overclocked in two directions - raising the frequency and lowering the timings.

The previous article concluded that, with today's memory characteristics, both of these parameters have little effect on overall system performance.

However, memory overclocking may be necessary even when it was never planned.

The problem is that the RAM frequency is not set by a separate clock generator but is tied to the frequency of the system bus, or of the base clock generator, through a divider. The best option, of course, is a 1:1 divider, but in practice that mode is not always possible. For example, Core 2 Duo E8xxx processors have a real FSB frequency of 333 MHz, while the most common DDR2 memory modules are rated at 800 MHz. A divider is needed even without overclocking. The set of available values is fixed and depends on the motherboard - on budget boards it is very limited (especially downward). So when overclocking the processor via the bus, sooner or later we reach a point where even with the lowest available divider the RAM frequency exceeds its default. And as we keep raising the bus frequency, we inevitably overclock the memory too.
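A small sketch shows how the memory gets dragged along with the bus. The list of DRAM:FSB ratios is a made-up example (real boards offer different sets), and I am assuming that DDR2-800 corresponds to a real clock of 400 MHz.

```python
from fractions import Fraction

# Hypothetical DRAM:FSB ratios a board might offer; real sets vary by model.
RATIOS = [Fraction(1, 1), Fraction(6, 5), Fraction(3, 2), Fraction(2, 1)]

DDR2_800_REAL_MHZ = 400  # assumption: DDR2-800 runs at a real 400 MHz clock


def memory_clock_mhz(fsb_mhz: int, ratio: Fraction) -> float:
    """Real memory clock for a given real FSB clock and DRAM:FSB ratio."""
    return float(fsb_mhz * ratio)


def slowest_memory_clock_mhz(fsb_mhz: int, ratios=RATIOS) -> float:
    """Lowest memory clock the board can set at this FSB frequency."""
    return memory_clock_mhz(fsb_mhz, min(ratios))


# Stock E8xxx: a 6:5 ratio puts the memory almost exactly at its rated clock.
print(memory_clock_mhz(333, Fraction(6, 5)))

# Raising the bus: past 400 MHz even the lowest ratio overclocks the memory.
for fsb in (333, 400, 450):
    clock = slowest_memory_clock_mhz(fsb)
    print(fsb, clock, "overclocked" if clock > DDR2_800_REAL_MHZ else "within spec")
```

With a richer set of ratios (for example one below 1:1) the memory stays within spec for longer, which is exactly where budget boards tend to fall short.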

Naturally, memory has its own frequency threshold too. It depends on the memory chips used (detailed information can be found on specialist sites) and is determined experimentally.

If the threshold has been reached, you can try to raise it, either by loosening the timings or by raising the voltage on the memory modules. Naturally, this too helps only up to a point. Every memory module has its own limit beyond which nothing will raise the frequency further. And do not forget that the voltage cannot be raised indefinitely either, only by 5-15% above nominal (for DDR2 modules with a native voltage of 1.8 V, for example, going above 2.1 V is not recommended).
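The 5-15% rule of thumb is easy to turn into numbers. This is just the arithmetic from the paragraph above, not a guarantee for any particular module; the function name is my own.

```python
def voltage_headroom(nominal_v: float, low_pct: float = 5, high_pct: float = 15):
    """Return (cautious, maximum) voltages for a 5-15% increase over nominal."""
    cautious = nominal_v * (1 + low_pct / 100)
    maximum = nominal_v * (1 + high_pct / 100)
    return cautious, maximum


cautious, maximum = voltage_headroom(1.8)  # DDR2 nominal voltage
print(f"DDR2: {cautious:.2f} V is a cautious bump, {maximum:.2f} V is the ceiling")
```

For DDR2 the ceiling lands at roughly 2.1 V, matching the figure in the text. The same function applies to the CPU core voltage, with its own nominal value.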

If the dividers let you keep the frequency at or only slightly above the standard one, you can try playing with the timings. Most modules allow them to be lowered by 1-2 steps with a slight voltage increase. The performance gain will not be large, of course - 5% at best - but then you don't pay extra for it ;)

In general, modern RAM overclocks very well. Buying special overclocking memory modules usually improves the odds of success. The main thing is not to overdo it, because such modules are sometimes several times more expensive than standard ones, and the conclusions about how much memory affects system performance were drawn in the previous article.

Another important point to keep in mind when overclocking memory: a failure of the RAM subsystem can cause read/write errors and therefore data loss on storage drives. I have personally run into this twice - memory overclocking destroyed the partition table on the system HDD. So for the duration of the exercise it is better to disconnect hard drives with important data and to keep a boot disk or USB flash drive with a partition manager and a recovery utility at hand.

Video card

With video cards we face a rather interesting situation. Unlike with CPUs, the final products are released not by the GPU manufacturer itself but by partner companies, the so-called subvendors. There is, of course, a reference design (and reference specifications), but nobody is strictly obliged to stick to it.

Subvendors have a lot of freedom. They can release a completely unique product, keeping only the GPU and the general layout logic of the original. And every change can go one of two ways - toward improvement or, on the contrary, toward simplification.

So when buying a video card you need to choose the final model carefully - despite similar-looking names, the cards can differ greatly.

Reference or non-reference is a personal choice and generally depends on the specific case. Some products are good from the start and need no improvement, and some are not entirely successful. The problem is particularly acute for the cooling systems of mid-range products: they do cope with their primary task, but as a rule they are noisy. And if you overclock, their capacity may not be enough. Examples of such "bad" designs are the reference GeForce 8800GT / 9800GT and Radeon HD4850 / 4830. Examples of good ones are the GeForce 9800GTX and Radeon HD5700.

Besides changing the design of the card itself, subvendors love to release products with non-standard frequencies (and other parameters). There are two approaches to this.

The first is simple. The GPU frequency is raised by 50 MHz, the memory by 100, ten or twenty dollars are added to the price, and the device is sent to the shelf. For the subvendor this is a win-win: almost every card withstands such minor frequency changes, so the risk is minimal. The performance gain is not serious, but such cards sell like hot cakes among a certain audience.

The other approach is more honest. For a start, it cannot do without a custom PCB and cooling system. The frequencies are raised more substantially. Sometimes chips with the best frequency potential are even specially selected. Such a product can be noticeably more expensive than the stock version, but the differences are more noticeable too.

The situation can also be reversed, however. Many manufacturers do not miss the chance to cash in on fans of all things eco-friendly by releasing devices with ECO or Green suffixes in the name. They differ from the reference versions in reduced power consumption, which, as you might guess, is achieved by lowering the operating frequencies.

Sometimes a card is fitted with slower memory than the reference design, or its memory bus is cut down. The price is lower in that case, but these changes are not always reflected in the name. Interestingly, not only second-tier vendors have been caught doing this, but also eminent companies such as ASUS (the Magic video cards).

Overclocking a video card consists of raising the frequencies of the GPU and the video memory.

The most common way is with special utilities. You can use both the manufacturers' own software and universal alternatives (RivaTuner, ATI Tray Tools). Personally I prefer the second option, although sometimes the vendor utilities are better and more convenient.

Another technique is flashing the changed parameters directly into the video card BIOS. This is usually done once the highest stable frequencies have been found. The goal is clear - "flash and forget" =)

You can raise the frequency threshold further by raising the voltage. This is harder. Some video cards let you control the core and memory voltage with the same vendor utilities. On some, the desired value can be flashed into the BIOS. There are exotic products that let you switch the voltage by moving jumpers on the card. Well, a very significant part of the

Stability testing of video cards covers two main points:

Testing for artifacts and overheating. The most effective tools here are the "furry donuts" - FurMark and OCCT 3. ATITool's "furry cube" does not heat the card as much, but it detects image artifacts faster. As an alternative (or, better, an addition), I can recommend multiple runs of the main 3DMark game tests, or simply demanding games;

Testing for freezes. The first tests of 3DMark 05/06, as well as some games, reveal them quite well. From personal experience: NFS ProStreet hung on a card that had successfully survived 15 minutes under the donut and 10 passes of the 3DMark 06 game tests.

As for unlocking, here again the freebies live in the AMD camp. It does not happen often, but successful cases occasionally flash through the news, and modified BIOSes appear online that re-enable the disabled blocks of a video card. A recent example is the unlocking of all 800 shader units on some HD4830 cards, which effectively turned them into the more expensive HD4850.

In general, a video card is not something to save on when it comes to games. Overclocking and other tricks will not bring a lower model up to the results of a higher-end one, but overclocking should not be ignored either. It improves system performance, and this applies to video cards across the board. Mid-range devices obtained by cutting down higher models overclock well by definition, while lower-end cards have a low TDP, which also helps overclocking. I will not give examples and graphs, since there are more than enough relevant reviews online.

And a small note about multi-GPU systems. Multi-chip video cards have a noticeably higher TDP than single-chip ones, so they are much harder to cool. Installing several video cards close together raises the temperature of the surrounding space. In both cases overclocking involves additional difficulties, and the cards show worse results than in single-card configurations.

What should not be forgotten

When components are overclocked (especially with raised voltage), their power consumption and heat output increase. This has important consequences:

1. Overclocked components need more efficient cooling;

2. An overclocked system needs a more powerful power supply.

Neglecting these two consequences is what causes problems during overclocking.

3. An overclocked system is less economical in terms of power consumption.

And that calls into question the point of overclocking laptops.

A detailed look at power and cooling will get its own article. For now, it is enough to remember and absorb these important notes.

And one more thing: hardware failure caused by overclocking is not covered by warranty. Although in clumsy hands anything can break ;)

In place of a conclusion

Knowing some of the subtleties of hardware opens up new, previously hidden horizons for the user.

Overclocking is a great way to improve the performance of your system without losing anything, and ignoring such an opportunity is real sacrilege.

With this, the problem of choosing the key components can be considered solved. Not every question has been answered, of course, but some clarity has been brought to the situation. If something is completely unclear, I am ready to answer in the comments ;)

The next part will be devoted to another very important component of the system - the motherboard.

P.S. I apologize for the two-week delay; I just could not get the article into shape. Either other things got in the way, or the writing simply would not come. I only finished it today. I should have more free time now, so the next parts will come faster =)

P.P.S. Once again, a reminder that this series of articles is aimed not at hardware gurus, technomaniacs and extreme overclockers, but at ordinary people who are close to IT yet not well versed in hardware specifically.


Source: https://habr.com/ru/post/82781/
