The computer of your dreams. Part 2: Reality and Fiction

Here is the continuation!

Part 1

The tasks are set, and it seems like a good time to move on to reading reviews and tests, but...

Where to begin? Where to look?

The second part of the opus is devoted to the key components of a modern system: the central processor, RAM and the video card. Hardly anyone doubts that these components are the basic ones. The real question is which of them matters more. What should you look at first when assembling a machine for particular tasks? Which device, and which of its characteristics?

To begin, I will make a small digression.

My previous article received a rather mixed reaction on Habr. The most negative comments boiled down to one thing: “These are all obvious things! Nothing new! Everything is already known! Where are the specifics?”

What can I do? I cannot write briefly (yes, brevity is the sister of talent, but not of mine), and I cannot do without digressions. I can't write instructions like “do one, do two, do three”.

My previous post was not an instruction or a manual on “how to assemble a computer”. It was an introduction to one big piece of material and described only general principles. The material itself is not an instruction or a guide either. It is food for thought: a reason to change some of your views and to start thinking in situations where you considered it superfluous.

I planned to publish the second part 2-3 days after the first one and started writing almost immediately, but somehow it did not go well. A week later, 60 percent was ready. I sat down, read what I had written, and did not like it at all: it really turned out too drawn-out and boring. So I rewrote everything from scratch, trying to make the material more readable and, as far as possible, shorter. I would be glad if my efforts were not in vain =)

There is a huge number of articles about computer hardware. Many different resources, laboratories and even individuals write them. Everyone has their own methodology, their own criteria and their own set of test programs, and the hardware is different everywhere. It's fine if we find tests of the device we are interested in running the application we need. And if not? What if the hardware is too new, or simply unpopular? Or the application we care about is not held in high esteem among reviewers? Then things get bad. The only way out is to work through a large amount of material close to the question of interest, and to use it to draw general conclusions and identify trends. My opus is the result of exactly that kind of research.

While writing this article, I used a large number of reviews from resources that I consider reputable and high-quality.

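The main principles for comparing the results were:

- Maximum possible equality of testing conditions;

- Refusal to use the results of synthetic tests and benchmarks.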

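The picture below does not claim to be absolutely accurate. Rather, it reflects the current situation in the hardware field.

CPU

- “Can't choose a heater? Buy an AMD processor!” For starters, throw all that nonsense out of your head. You can hold as many Intel vs AMD holy wars as you like, but they are of no use.

- “Why a Pentium and not a Core 2 Duo?” And which Pentium are you going to compare? Six generations of processors have already been released under this brand, each with several varieties and a large number of end models with different characteristics.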

Characteristics are what really matter. You can look them up by the full (attention! full) name of the processor line and model on the manufacturer's official website or on reputable thematic resources.

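I will not list all the characteristics of modern CPUs here, because there are too many of them. We are interested in the following:

- The core and its stepping;

- The number of cores;

- Clock frequency;

- The type and frequency of the system bus;

- The type and value of the system multiplier;

- Cache characteristics (the number of levels and the size of each);

- Instruction sets and extensions, support for various proprietary technologies;

- Power consumption and heat dissipation (thermal package, TDP).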

Core

We need to know the name of the core, if only because it determines which microarchitecture the processor model belongs to. Without this information, comparing all the other characteristics can give results that are very far from reality.

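Five microarchitectures are currently common:

- AMD K8 (almost all Athlon 64 and Athlon 64 X2, with the exception of literally a couple of models);

- AMD K10 (Athlon 7xxx and first-generation Phenom, as well as a couple of Athlon 64 X2 models);

- AMD K10.5 (Athlon II and Phenom II, as well as the new Sempron; all models of this microarchitecture have a three-digit index);

- Intel Core 2 (includes two generations of processors: 65nm Conroe and 45nm Penryn);

- Intel Nehalem (Core i7, i5, i3, etc.).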

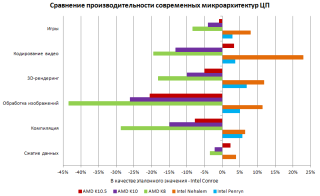

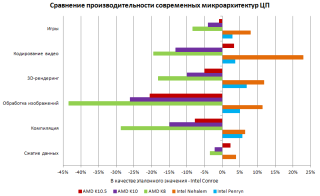

The graph compares the performance of modern microarchitectures in various tasks. Intel Conroe results are taken as the reference.

Right away you can see the hopeless obsolescence of K8 and a not-so-good picture for K10. K10.5 shows more pleasing results, in places even overtaking Intel products, but the complete failure of all AMD processors in graphics-related tasks is disappointing. As for Intel, there is the expected growth with the move to Penryn, and an even more pleasant one with the newer Nehalem, which, among other things, stood out in video encoding.

In general, the initial balance of power can be judged from this quite accurately.

As for the stepping (revision) of the core, it mainly affects the TDP and the frequency potential of the processor. Sometimes new steppings also bring additional instruction sets and extensions. Check the manufacturer's website =)

Number of Cores

The war between supporters of 2-core and 4-core CPUs began when the latter were only just appearing in announcements, and people are still breaking spears over it. Over time, compromise solutions joined the “war”: 3-core CPUs (offered, however, only by AMD), which naturally raised new questions. How important is multi-core? Or rather, where exactly does it matter, and in which applications?

The first thing the graph clearly shows is that it does not matter in games. There are, of course, several games where the gain is quite high (up to 50%), but there are very few of them, fewer than the fingers on one hand (the most striking are GTA IV and World in Conflict) =) For most games the gain is 0 to 15%, and it decreases as quality and resolution settings increase.

Multi-core configurations show themselves best in 3D rendering and compilation. Video encoding also gives good results. When working with graphics there is a gain, but it is not as high as one would like.

Finally, data compression deals a strong blow to stereotypes. Archivers show impressive numbers in their built-in synthetic benchmarks, but with real data an extra core turns out to be as useful to them as a fifth leg to a dog.

The three-core configuration turns out to be really interesting. It gives excellent results in applications optimized for multithreading, and in non-optimized ones it gives much more benefit than 4 cores do. If the price difference from 2-core CPUs is small, it can be a good choice.

As for Hyper-Threading (virtual parallelism), revived in Intel Core i7 processors, it is useful only in applications that are very sensitive to the number of cores (those whose bar on the chart is longer than 40). Naturally, enabling HT gives no mythical doubling. It provides only about 1/3 of the gain that doubling the real number of cores would give. I won't include the charts.

Clock frequency

This is the parameter that has historically been “the main measure of performance”, and in fact it still is.

Yes, performance depends on CPU frequency much more noticeably than on the number of cores. For example, raising the frequency from 2.8 to 3.8 GHz, i.e. by about 35%, in most cases gives a performance gain of more than 30%, which is very good. With games the picture is worse. The effect of frequency is less than half as strong, and, as with the number of cores, it decreases as quality and resolution settings increase. It also decreases as the frequency itself increases, i.e. the dependence is nonlinear. Digging into the tests, one can conclude that the most important range is 2 to 3 GHz, while games are rather indifferent to ultra-high frequencies (above 4 GHz).
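The percentages in the paragraph above are simple relative changes. The following is a minimal sketch of how the frequency increase and the resulting scaling efficiency (the share of the clock gain that turns into a performance gain) can be computed. The function names are hypothetical, and the 30% gain is the text's example figure, not a new measurement.

```python
def relative_increase(old: float, new: float) -> float:
    """Relative change from old to new, as a fraction (0.357 == 35.7%)."""
    return (new - old) / old


def scaling_efficiency(perf_gain: float, freq_gain: float) -> float:
    """Share of the clock increase that turns into extra performance.

    1.0 would mean performance grows exactly in proportion to frequency.
    """
    return perf_gain / freq_gain


freq_gain = relative_increase(2.8, 3.8)   # 2.8 GHz -> 3.8 GHz
perf_gain = 0.30                          # the "more than 30%" from the text
print(f"frequency: +{freq_gain:.1%}")     # frequency: +35.7%
print(f"efficiency: {scaling_efficiency(perf_gain, freq_gain):.0%}")  # efficiency: 84%
```

An efficiency around 0.8-0.9 is what makes frequency "very good" compared with extra cores. For games, the same calculation would give a value less than half as large.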

Most modern processors have a factory frequency of just 2 to 3 GHz, so buying a model with a higher frequency (by 300-500 MHz) really does give an advantage everywhere.

System bus frequency and multiplier

Manufacturers love to compete in system bus innovations, loudly promoting various QuickPaths and HyperTransports, which leave the “classic” FSB far behind in throughput.

If anyone believes these claims, I recommend going back to the first chart. The advances of high-performance buses may be reflected there, but they are definitely not a factor with a strong influence on the result.

Of course, at one time this was very important. But now even budget solutions with the very “obsolete” FSB start with an effective frequency of 800 MHz and a bandwidth of 6.4 GB/s. Here we meet the concept of “sufficiency”.
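The 6.4 GB/s figure follows from the bus width. The FSB is 64 bits (8 bytes) wide, and its "effective" 800 MHz comes from transferring data four times per cycle of a 200 MHz base clock (quad-pumping). The following minimal sketch shows that arithmetic. The function name is hypothetical.

```python
def fsb_bandwidth_mb_s(base_clock_mhz: float, transfers_per_clock: int = 4,
                       bus_width_bits: int = 64) -> float:
    """Peak front-side bus bandwidth in MB/s (decimal megabytes)."""
    effective_mhz = base_clock_mhz * transfers_per_clock  # the "effective frequency"
    return effective_mhz * bus_width_bits / 8             # bits -> bytes


# A quad-pumped 200 MHz bus is the "FSB 800" from the text.
print(fsb_bandwidth_mb_s(200))  # 6400.0
```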

In terms of performance, the system bus frequency matters mainly as the underlying speed of data exchange between the processor and RAM. To realize the potential of high-speed buses, you need high-speed RAM (and vice versa). So let's postpone this question until the RAM tests.

As for peripheral devices, the junior Nehalems, for example, communicate with them over a DMI bus with a bandwidth of 2 GB/s. And again, that is enough.

The system multiplier determines the resulting core frequency. Depending on the implementation, it is multiplied by the frequency of the system bus or by the frequency of the reference clock generator. The multiplier can be fully locked (rare now), unlocked downwards (found almost everywhere, since lowering the multiplier is part of energy-saving technologies) or fully unlocked (special overclocker-oriented processor models).
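In other words, core frequency = base clock × multiplier. The following minimal sketch models the three lock types described above. The helper names and the numbers (200 MHz base clock, factory multiplier 14) are illustrative assumptions and are not taken from any particular CPU's specification.

```python
def core_clock_mhz(base_clock_mhz: float, multiplier: float) -> float:
    """Resulting core frequency: base clock times multiplier."""
    return base_clock_mhz * multiplier


def allowed_multiplier(requested: float, factory: float, mode: str) -> float:
    """Multiplier the CPU will actually accept, by lock type.

    "locked":   always the factory value;
    "down":     unlocked downwards, can be lowered (power saving) but not raised;
    "unlocked": any value (overclocker-oriented models).
    """
    if mode == "locked":
        return factory
    if mode == "down":
        return min(requested, factory)
    if mode == "unlocked":
        return requested
    raise ValueError(f"unknown lock mode: {mode!r}")


# Illustrative: 200 MHz base clock, factory multiplier 14 (2.8 GHz).
for mode in ("locked", "down", "unlocked"):
    raised = core_clock_mhz(200, allowed_multiplier(16, 14, mode))
    lowered = core_clock_mhz(200, allowed_multiplier(6, 14, mode))
    print(f"{mode:9} x16 -> {raised:.0f} MHz, x6 -> {lowered:.0f} MHz")
```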

Hence the conclusion: the type and frequency of the system bus, as well as the multiplier, can generally be ignored.

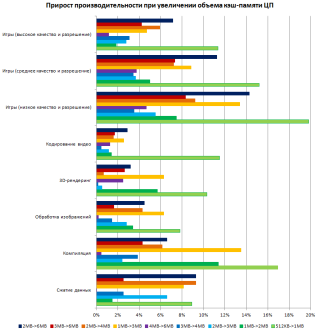

Cache size

Cache size is one of the parameters that most strongly influence a processor's position in the model range. For example, the Core 2 Duo E8300 and the Pentium Dual-Core E6300 are built on the same core, Wolfdale, and differ primarily in cache size: 6 MB versus 2 MB. Similarly, the Phenom II X4 925 differs from the Athlon II X4 630 only by the presence of 6 MB of level 3 cache, and so on. The price difference between the junior and senior processor lines is also large. And what happens in practice?

As you can see, the influence of cache size varies and strongly depends on the specific task. The dependence is far from linear, but going from 4 MB to 6 MB gives a very small gain almost everywhere, which already suggests some conclusions about sufficiency. And yet, unlike in the previous tests, games show a strong love for cache.

Of all this variety of results, we are most interested in the one shown in black on the graph, i.e. 2 MB -> 6 MB. Why? Junior Intel Pentiums have 2 MB of cache memory, and senior Core 2 Duos have 4-6 MB. Among current AMD products, most processors have 2 MB of level 2 cache, and Phenom II additionally has a level 3 cache of 4-6 MB (for the first Phenom this figure is more modest: 2 MB). Given what was said above about sufficiency, we can conclude that the “black bar” shows the performance ratio between the junior and senior processor lines quite clearly. A deeper study of the tests confirms this conclusion.

RAM

The memory subsystem has only a few characteristics that are important to us.

Memory type

The memory type is fundamental to all the other memory characteristics, but by itself it does not matter for performance.

Frequency

By itself, the memory frequency is not important. What matters is the bandwidth it provides. Bandwidth is the product of the effective frequency and the width of the memory bus. In modern PCs the memory bus is 64 bits wide, so the bandwidth of DDR2-800 memory is 6400 MB/s. This figure is given as one of the memory characteristics (PC2-6400, for example).
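The following minimal sketch shows the same calculation. The function names are hypothetical. The `channels` parameter is an addition for multichannel modes, and it multiplies only the theoretical peak (the measured gains are much smaller, as the tests show). Real module labels are sometimes rounded, e.g. DDR2-667 is sold as PC2-5300, and this sketch does not reproduce that rounding.

```python
def memory_bandwidth_mb_s(effective_mhz: int, bus_width_bits: int = 64,
                          channels: int = 1) -> int:
    """Theoretical peak bandwidth: effective frequency x bus width in bytes."""
    return effective_mhz * bus_width_bits // 8 * channels


def module_label(ddr_generation: int, effective_mhz: int) -> str:
    """Module marking such as PC2-6400 (DDR2) or PC3-12800 (DDR3)."""
    return f"PC{ddr_generation}-{memory_bandwidth_mb_s(effective_mhz)}"


print(module_label(2, 800))                    # PC2-6400
print(module_label(3, 1600))                   # PC3-12800
print(memory_bandwidth_mb_s(800, channels=2))  # 12800
```

Note that DDR2-800 in dual-channel mode reaches the same theoretical 12800 MB/s as a single DDR3-1600 module.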

The following graph shows the performance gain when memory bandwidth is increased from 6400 MB/s to 12800 MB/s, i.e. doubled.

That is not impressive at all: in most cases, doubling memory bandwidth raises performance by no more than 5-6%. The conclusion is obvious: the bandwidth of modern memory is sufficient.

In fact, this also settles the question of system bus bandwidth.

Multichannel modes

Multichannel memory modes are, again, designed to increase bandwidth.

Enabling dual-channel mode gives at best a 2-3% performance boost. In most cases, the much-praised three-channel controller of Intel Core i7 processors on the S1366 platform cannot even pull ahead of single-channel mode. The reason is the extra clock cycles spent synchronizing such a scheme.

Timings

Memory timings are signal delays (expressed in clock cycles) during memory access operations. There are many timings in total, but the main scheme includes the 4 most important: CAS Latency, RAS to CAS Delay, RAS Precharge Time and Row Active Time. The smaller the delays, the faster memory access is. However, as frequency increases, the timings have to be raised to maintain stability. So in practice you have to decide what is more important: memory bandwidth or access speed.
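Timings are counted in clock cycles, and for DDR memory the clock runs at half the effective (data) rate. This is why "looser" timings at a higher frequency do not necessarily mean slower access. The following minimal sketch converts CAS latency to nanoseconds. The function name is hypothetical, and the three modules are common retail configurations chosen for illustration, not the ones tested in the article.

```python
def cas_latency_ns(cas_cycles: int, effective_mhz: float) -> float:
    """Absolute CAS latency in nanoseconds.

    DDR transfers data twice per clock, so the memory clock is half
    the effective frequency; one clock cycle lasts 1000 / clock_mhz ns.
    """
    clock_mhz = effective_mhz / 2
    return cas_cycles * 1000 / clock_mhz


# A higher frequency with higher timings can give similar absolute latency.
for name, cl, mhz in [("DDR2-800 CL5", 5, 800),
                      ("DDR2-1066 CL6", 6, 1066),
                      ("DDR3-1600 CL9", 9, 1600)]:
    print(f"{name}: {cas_latency_ns(cl, mhz):.2f} ns")
```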

The effect of timings on performance is also very small. On systems with a memory controller built into the processor, lowering the timings by one step gains 2-5%. On systems with the memory controller in the chipset, the timings have to be lowered by two steps to achieve the same result.

As for the sensitivity of individual applications to timings, the picture is about the same as with memory bandwidth.

Memory size

This is one of the pressing questions, and one to which it is, in principle, difficult to give a definite answer.

In general, 2 GB of memory is sufficient for most applications and games. Some games respond very well to an increase to 3-4 GB. Increasing the size further makes no sense, either in games or in other ordinary tasks.

When might you really need more memory than the usual 3-4 GB?

Some may cite better multitasking as an argument for large amounts of memory (by the way, ardent supporters of multi-core configurations like the same argument). I would advise you to ask yourself how often you will, for example, play Crysis, rip a fresh BD disc and archive something in the meantime, all at the same time. It seems to me that you will do so very, very rarely. And if you do want to do something like that, performance will most likely be limited by the disk subsystem, and no amount of gigabytes will save you.

One way to use large amounts of memory is to disable the swap file or move it to a RAM disk. But this is also a double-edged sword.

By the way, to work with more than 4 GB of memory you need a 64-bit operating system (in fact, 32-bit Windows sees only about 3.25 GB). Yes, there is the PAE workaround, but it is still a workaround... And for a single application to address more than 4 GB, the application must also be 64-bit.
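The 4 GB limit comes straight from the width of an address: 32 bits can distinguish 2^32 bytes. PAE widens physical addresses (to 36 bits in its original implementation), but each 32-bit process still has a 32-bit address space. In a 32-bit system the usable amount is lower still, because part of the 4 GB range is reserved for device memory, and the exact amount depends on the hardware. The following minimal sketch shows the arithmetic. The helper name is hypothetical.

```python
GIB = 2 ** 30  # bytes in a gibibyte


def addressable_gib(address_bits: int) -> float:
    """How much memory an address of the given width can reach, in GiB."""
    return 2 ** address_bits / GIB


for bits, what in [(32, "32-bit OS or application"),
                   (36, "32-bit OS with PAE, original 36-bit implementation"),
                   (64, "theoretical limit of a 64-bit address")]:
    print(f"{bits} bits: {addressable_gib(bits):,.0f} GiB ({what})")
```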

One more question remains: the module configuration. The optimal amount can be assembled in several ways: 1x2GB, 2x1GB, 2x2GB, 3x1GB, 1GB + 2GB... In principle, any option is acceptable. Modern memory controllers are not very picky, and it is enough for all modules to have the same operating mode in their SPD. But in general, the fewer the modules, the better, because the load on the controller and the system's power consumption are reduced.

Video card

With the video card, the situation is quite different from the one with the central processor.

For a start, it would be good to figure out why this device is actually needed. Practice shows that even among experienced users there are some who do not quite understand it.

What is the video card for?

Before talking about video cards, one thing needs clarifying. Central processors are manufactured at the manufacturer's own factory, labeled there, and then shipped to suppliers. Video cards are different.

Of course, the graphics chip manufacturer develops a reference PCB design, defines reference characteristics, etc., but its factory outputs only the graphics processor (sometimes also some auxiliary chips, but this does not change the essence). The video cards based on these chips are assembled by subvendors, and here they have full freedom. While the first wave of cards follows the reference design (the subvendor simply does not have time to develop its own PCB), a variety of custom solutions appears later. And the subvendors start experimenting with frequencies right away...

Therefore, when comparing the performance of specific products, it is important to know how close their characteristics are to the reference ones.

Seen from this angle, the characteristics we are interested in are exclusively “consumer” ones.

Interface

In the current situation the choice is small: either a discrete card with a PCI Express interface, or a solution integrated into the chipset, which uses the same PCI Express. Intel went so far as to embed the video core into the central processor (Core i3 and Pentium G), but this changed little: the core can be used only with certain chipsets, Intel H55/H57, which, IMHO, killed a good idea. AGP video cards have died out completely, and the occasional cards for good old PCI are firmly in the “exotic” category. PCI Express 2.0 support matters if you are going to build a system with several high-performance video cards. In this case motherboards usually switch the PCIe slots to slower modes (for example, x8 instead of x16), and the doubled bandwidth comes in handy.
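The arithmetic behind the PCI Express 2.0 point is as follows. PCI Express 1.x runs at 2.5 GT/s per lane and 2.0 runs at 5 GT/s. Both use 8b/10b encoding (10 bits on the wire per byte), which gives 250 and 500 MB/s per lane in each direction. The following minimal sketch shows why an x8 slot on PCIe 2.0 matches an x16 slot on 1.x. The names are hypothetical.

```python
# Per-lane signalling rates in gigatransfers per second.
GT_PER_S = {"1.x": 2.5, "2.0": 5.0}


def pcie_bandwidth_mb_s(version: str, lanes: int) -> float:
    """Peak one-direction bandwidth in MB/s for PCIe 1.x/2.0 (8b/10b coding)."""
    bytes_per_s_per_lane = GT_PER_S[version] * 1e9 / 10  # 10 line bits per byte
    return bytes_per_s_per_lane * lanes / 1e6


for version, lanes in [("1.x", 16), ("2.0", 16), ("2.0", 8), ("1.x", 8)]:
    print(f"PCIe {version} x{lanes}: {pcie_bandwidth_mb_s(version, lanes):.0f} MB/s")
```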

Video outputs

Here the choice depends solely on what you are going to display the image on. All video cards without exception support DVI and VGA (usually via a DVI-VGA adapter). Many support HDMI (often via a DVI-HDMI adapter). DisplayPort is rare, but you rarely see it on other equipment either =)

By the way, don't be upset if the chosen card has no HDMI support at all. Most user reviews of attempts to use this interface for its intended purpose are full of anger and abuse. My personal experience gave the same picture: an excellent image through the “outdated” VGA and a terribly poor one through the “new high-tech” HDMI. On top of that, the corresponding cables are outrageously expensive.

In this matter, the multi-monitor capabilities of the video card are more important. Even with 3-4 external connectors, a card can usually output an image to only 2 of them at the same time. HDMI is also often wired in parallel with DVI, so the two cannot be used together. So if you want to connect 3 or more monitors to your computer, you will either have to look for a card with this capability (a good manufacturer will mention it as one of the key features) or get a second video card.

As for the output modes themselves, even budget cards support a wide range of them, up to 2560x1600. Still, it does no harm to double-check.

Operating system support

Everything depends on the availability and quality of drivers for the OS you need. At one time this was an especially painful issue for Linux users, but now everything seems to be fine. On Windows, the quality of AMD drivers is a frequent subject of holy wars. It is hard to say anything objective here, but one stone can still be thrown into the “red” garden. AMD releases driver updates strictly once a month, and neither suddenly discovered bugs, nor the arrival of UFOs, nor any other factor can affect this schedule. NVIDIA does not suffer from this madness: its updates are released as needed, and public beta versions are always available. Draw your own conclusions.

3D performance

The capabilities of the video card as a 3D accelerator determine the overall system performance in three-dimensional applications, primarily games. When building a gaming machine, you should focus on the video card, not on the processor and memory.

In terms of performance, several characteristics are important to us.

It would be logical to expect an analysis of each characteristic here, as with the CPU, with graphs of its influence and so on. However, this trick does not work with video cards. Their architectures are too different, and so are the characteristics of the final products.

For example, 256bit GDDR3 at an effective 2 GHz and 128bit GDDR5 at an effective 4 GHz give the same memory bandwidth.
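Video memory bandwidth is computed the same way as system RAM bandwidth: bus width in bytes times effective frequency. The following minimal sketch compares a 256-bit GDDR3 bus and a 128-bit GDDR5 bus. The effective frequencies of 2 GHz and 4 GHz are assumed illustrative values, and the function name is hypothetical.

```python
def vram_bandwidth_gb_s(bus_width_bits: int, effective_ghz: float) -> float:
    """Peak video memory bandwidth in GB/s (decimal)."""
    return bus_width_bits / 8 * effective_ghz


gddr3 = vram_bandwidth_gb_s(256, 2.0)  # wide bus, slower memory
gddr5 = vram_bandwidth_gb_s(128, 4.0)  # half the width, twice the frequency
print(gddr3, gddr5)                    # 64.0 64.0
```

A wide bus with slower memory and a narrow bus with faster memory can therefore end up equal, so the bus width alone says little.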

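Note that on dual-GPU cards the stated amount of memory is shared between two GPUs, each of which keeps its own copy of the data. For example, a Radeon HD4870X2 2GB effectively has 2 GPUs with 1 GB each.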

FPS (Frames Per Second) is the number of frames the video card renders per second.

The “minimum playable level” is 25-30 FPS.

The “comfort level” is double that, i.e. 50-60 FPS.

I will not go into where these numbers come from. They are commonly accepted values, and they became so for a reason. If you are curious, look it up yourself.

As with any dynamic value, you can consider the maximum, minimum and average FPS.

The peak value is of little interest to us, because it is very short-lived and does not affect the overall picture. The average and minimum, however, matter a great deal.

Some game engines, however, have an unpleasant quirk: they stutter (twitch) even at a seemingly decent FPS. Fortunately, this effect is rare.

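So the target is an average of 50-60 FPS and a minimum of at least 25-30 FPS at the chosen resolution/quality/antialiasing settings.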
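Average and minimum FPS can be computed from per-frame render times, which is what frame-time logging tools record. The following minimal sketch does this. The frame times are made-up sample values, the function names are hypothetical, and the 25/50 FPS thresholds are the lower bounds of the ranges given above. Here, "minimum" is taken from the single slowest frame, and many reviews instead report the lowest per-second average.

```python
def fps_stats(frame_times_ms: list[float]) -> dict[str, float]:
    """Average, minimum and maximum FPS from per-frame render times.

    Average FPS is frames divided by total time (not the mean of per-frame
    FPS values); min/max come from the slowest/fastest single frame.
    """
    total_s = sum(frame_times_ms) / 1000
    return {
        "avg": len(frame_times_ms) / total_s,
        "min": 1000 / max(frame_times_ms),
        "max": 1000 / min(frame_times_ms),
    }


def verdict(stats: dict[str, float], min_ok: float = 25, avg_ok: float = 50) -> str:
    """Classify against the text's thresholds (hypothetical helper)."""
    if stats["min"] >= min_ok and stats["avg"] >= avg_ok:
        return "comfortable"
    if stats["min"] >= min_ok:
        return "playable"
    return "too slow"


sample = [16.0, 17.0, 18.0, 20.0, 35.0, 16.0, 17.0, 19.0]  # made-up data, ms
stats = fps_stats(sample)
print({k: round(v, 1) for k, v in stats.items()}, verdict(stats))
```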

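Video decoding (DXVA)

DirectX Video Acceleration (DXVA) is hardware acceleration of video decoding on the video card, which offloads the central processor. It requires support from the hardware (GeForce 8 and newer, except G80; Radeon HD2000 and newer) as well as from the software (for example, under Vista/7, the Enhanced Video Renderer).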

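GPGPU

GPGPU (General-Purpose computing on Graphics Processing Units) means using the graphics processor for general-purpose computing. In other words, the video card takes over computational tasks traditionally performed by the central processor.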

There are 4 main technologies: CUDA (NVIDIA, GeForce 8 and newer), Stream (AMD, Radeon HD4000 and newer), Compute Shader (part of MS DirectX 11) and OpenCL (an open, cross-platform standard).

The applications below use CUDA. NVIDIA's official CUDA page:

http://www.nvidia.com/object/cuda_home.html

Distributed computing: Folding@home and SETI@home.

Video decoding: CoreAVC, an H264/AVC decoder that can use CUDA as an alternative to DXVA.

Video encoding: Badaboom, MediaCoder, TMPGEnc, Movavi Video Suite and Cyberlink products.

In these applications, enabling CUDA speeds things up by one and a half to TEN (!!!) times. Naturally, the CPU-GPU combination plays a big role, especially the GPU, but even mid-range video cards show very interesting results.

But what about the motherboard, hard drives, power supply, etc.?

Be patient. The articles are already huge: people complained that the first part was long, and this one is twice as long. Everything will come.


Part 1

The tasks are set, and it seems to be a good time to move on to reading various reviews and tests, but ...

Where to begin? Which way to look at?

The second part of the opus will be devoted to the key components of the modern system - the central processor, RAM and the video card. It is unlikely that anyone doubts that these components are basic, the question is different - which of them is more important? What should I look for first of all when assembling a machine for certain tasks? What device and its characteristics?

To begin, I will make a small digression.

My previous article caused a rather ambiguous reaction in Habré. The essence of the most negative comments boiled down to one thing: “These are all obvious things! Nothing new! Everything is already known! Where is the specifics? ".

What can I do, I can not write briefly (yes, brevity - the sister of talent, but not mine), I can not make various digressions. I can’t write instructions like “do it once, do two, do three”.

And my previous topic is not an instruction, not a manual “how to assemble a computer”. This is an introduction for one big material, describing only general principles. And the material itself is also not an instruction and not a guide, but food for considerations, a reason to change some of your views and start thinking in situations where you thought it was superfluous.

I planned to publish the second part 2-3 days after the first one and began to write almost immediately, but somehow it did not go well. A week later, 60 percent was ready. I sat down, read what was written, and I did not like it at all. It turned out really too stretched and boring. Therefore, everything was rewritten from scratch. I tried to make the material more readable and, as far as possible, to shorten the length. I would be glad if my efforts were not in vain =)

Part 2. Reality and Fiction

Articles about computer hardware just a huge amount. There are many different resources, laboratories and even individuals who write them. Everyone has their own methodology, their own criteria, their own sets of test programs, and, finally, iron is different everywhere. Well, if we find the tests of the device we are interested in in the right application. And if not? If the piece of iron is too new, or just unpopular? And what if the application of interest is not in high esteem among observers. It becomes bad. The only way out of this situation is to work out a large amount of material close to the issue of interest, and on its basis draw general conclusions and determine trends. Actually, my opus and is the result of such research.

During the writing of this article, a large number of reviews of the following resources were used:

I consider them to be worthy and high-quality reviewers.

One of the main principles of comparing the results were:

- Maximum possible equality of testing conditions;

- Refusal to use the results of synthetic tests and benchmarks.

The picture below does not claim to be absolutely accurate, but rather reflects the current situation in the field of iron.

CPU

- You can not choose a heater - buy an AMD processor!For starters, throw all that shit out of your head. Any number of holivars on Intel vs AMD can be arranged as much as you like, but there is no use for it.

- Why Pentium and not Kor2Duo?And what pentium are you going to compare? Already 6 generations of processors are produced under this brand, in each - several varieties and a large number of end models with different characteristics.

Characteristics are what really matters. You can recognize them by the full (attention! Full) name of the line and processor model on the official website of the manufacturer or decent thematic resources.

I will not list here all the characteristics of modern CPUs, there are too many of them. We are interested in the following:

- The core and its stepping;

- The number of cores;

- Clock frequency;

- The type and frequency of the system bus;

- The type and value of the system multiplier;

- Characteristics of the cache (the number of levels and volume on each of them);

- Sets of instructions and extensions, support for various proprietary technologies;

- The level of energy consumption and heat dissipation (heat pack, TDP).

')

Core

We need to know the name of the kernel if only because it determines the belonging of the processor model to a specific micro-architecture. And without information about this comparison of all the other characteristics can give results that are very far from reality.

Now 5 microarchitectures are quite common:

- AMD K8 (almost all Athlon 64 and Athlon 64 X2, with the exception of literally a couple of models);

- AMD K10 (Athlon 7xxx and Phenom first generation, as well as a couple of models Athlon 64 X2);

- AMD K10.5 (Athlon II and Phenom II, as well as the new Sempron - all models of this micro-architecture have a three-digit index);

- Intel Core 2 (includes two generations of processors - 65nm Conroe and 45nm Penryn);

- Intel Nehalem (Core i7, i5, i3, etc.).

The graph visually displays a comparison of the performance of modern micro-architectures in various tasks. The reference values are Intel Conroe results.

Immediately you can see the hopeless obsolescence of K8 and not the best picture with K10. K10.5 shows more pleasant results, in some places even overtaking Intel products, but it upsets the complete overflow of all AMD processors in graphics-related tasks. If we talk about Intel products, a planned growth in the transition to Penryn, and even more enjoyable, to a more modern Nehalem, which apart from everything else, was distinguished in video coding.

In general, the conclusions about the initial alignment of forces can be made quite accurately.

As for stepping (revision) of the core, it affects mainly the TDP and the frequency potential of the processor. It turns out that new steppings bring additional sets of instructions and extensions. Check on the manufacturer's website =)

Number of Cores

The war between the supporters of 2-cores and 4-cores began even when the latter were just flashing in the announcements, and the spears in the disputes still break. Over time, compromise solutions entered into the “war” - 3-core CPUs (proposed, however, only AMD), which naturally gave rise to new issues. How important is multi-core? Or even not - where exactly is multi-core, in which applications?

The first thing that can be seen from the graph is clearly not in the games. In general, there are of course several games where the performance gain is quite high (up to 50%), but there are very few of them - fewer than fingers (the most poisonous - GTA IV and World in Conflict) =) Most of them are from 0 to 15% and decreases with increasing quality and resolution settings.

Most efficient multi-core configurations show themselves in 3D rendering and compilation. Good results also in video coding. When working with graphics, there is an increase, but it is not as high as we would like.

Finally, a strong blow to stereotypes is data compression. Archivers show impressive numbers in the embedded synthetic benchmarks, but when it comes to working with real data, it turns out that the extra core to them is like a fifth leg to a dog.

The three core configuration really turns out to be quite interesting - excellent results in applications optimized for multithreading and much more useful than from 4 cores in non-optimized ones. If there is not a strong price difference with 2-core CPUs, it can be a good choice.

As for the Hyper-Threading technology (virtual paralleling), revived in Intel Core i7 processors, it is useful only in applications that are very sensitive to the number of cores (those that have sausage longer than 40 on the chart)). Well, the inclusion of HT naturally does not give any mythical double growth - only 1/3 of what would have happened if the real number of cores were doubled. Charts will not lead.

Clock frequency

A parameter that has historically been “the main gauge of performance,” and indeed it is now.

Yes, the performance dependency on the CPU frequency is much more noticeable than on the number of cores. For example, with increasing frequency from 2.8 to 3.8 GHz, i.e. by 35%, in most cases we will have a productivity increase of more than 30%, which is very good. With games, the picture is worse, the effect of frequency is less than two times, and besides, as is the case with the number of cores, it decreases with increasing settings for quality, resolution, and ... frequency, i.e. this dependence is nonlinear. If you delve into the tests, it can be concluded that the most important area - from 2 to 3 GHz, and the ultra-high frequencies (above 4 GHz) games are pretty cold.

Most modern processors have a factory frequency of just 2 to 3 GHz, i.e. buying a model with a higher frequency (at 300-500 MHz) will really give an advantage everywhere.

System bus frequency and multiplier

Manufacturers love to be measured by innovations in the field of system tires, with might and main touting various QuickPaths with HyperTransports, which by their throughput plug the “classic” FSB into the belt.

If there are those who believe in these words - I recommend to return to the first chart. Maybe the achievements of high-performance tires are there, but this is definitely not the factor that has a strong influence on the result.

No, at one time it was of course very important. But now even budget solutions with the support of the very "obsolete" FSB work initially with an effective frequency of 800 MHz and have a bandwidth of 6.4 Gb / s. Here we are faced with the concept of "sufficiency".

In general, system bus frequency matters for performance mainly as the underlying speed of data exchange between the processor and RAM. To realize the potential of a high-speed bus, you need high-speed RAM (and vice versa). So let's postpone this question until the RAM tests.

As for peripheral devices, the junior Nehalem-based processors, for example, use a DMI bus with a throughput of 2 GB/s to communicate with them. And again, that is enough.

The multiplier determines the resulting core frequency. Depending on the implementation, it is multiplied either by the system bus frequency or by the frequency of the base clock generator. The multiplier can be:

- completely locked (rare now);

- unlocked downwards (found almost everywhere, since lowering the multiplier is one of the mechanisms behind power-saving technologies);

- completely unlocked (special models for overclockers).
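The multiplication above in a minimal Python sketch. The function name is ours. The example values assume well-known retail configurations: a Core 2 Duo E8400 at 9 x 333 MHz (a quad-pumped "1333 MHz" FSB) and a Core i7-920 at 20 x 133 MHz base clock. The reference clock is the real bus or base clock, not the "effective" marketing figure.

```python
def core_clock_mhz(multiplier: float, base_clock_mhz: float) -> float:
    """Core frequency = multiplier x reference clock.

    Depending on the platform, the reference clock is the real (not the
    'effective') system bus frequency or the base clock generator frequency.
    """
    return multiplier * base_clock_mhz


# FSB-based example: a quad-pumped "1333 MHz" FSB runs at a real 333.33 MHz
print(round(core_clock_mhz(9, 333.33)))   # 3000, e.g. a Core 2 Duo E8400
# Base-clock example: Nehalem-style 133.33 MHz base clock
print(round(core_clock_mhz(20, 133.33)))  # 2667, e.g. a Core i7-920
# A lowered multiplier, as used by power-saving modes
print(round(core_clock_mhz(6, 333.33)))   # 2000
```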

Hence the conclusion: the type and frequency of the system bus, as well as the multiplier, can generally be ignored.

Cache size

Cache size is one of the parameters that most strongly determine a processor's position in the model range. For example, the Core 2 Duo E8300 and the Pentium Dual-Core E6300 are built on the same core, Wolfdale, and differ primarily in cache size: 6MB versus 2MB. Similarly, the Phenom II X4 925 differs from the Athlon II X4 630 only in having 6MB of L3 cache, and so on. The price difference between the junior and senior processor lines is large as well. And what happens in practice?

As you can see, the influence of cache size varies from test to test and depends strongly on the specific task. There is no linearity to speak of. However, going from 4MB to 6MB gives a very small gain almost everywhere, so conclusions about sufficiency suggest themselves. And yet, unlike in the previous tests, games show a strong love for cache.

Of all these results, we are most interested in the one shown in black on the graph, i.e. 2MB->6MB. Why? Junior Intel Pentium processors have 2MB of cache, and senior Core 2 Duo models have 4-6MB. Among current AMD products, most processors have 2MB of L2 cache. The Phenom II additionally has 4-6MB of L3 cache, while the first Phenom was more modest here, with 2MB. Given what was said above about sufficiency, we can conclude that the “black bar” shows quite clearly the performance ratio between the junior and senior processor lines. A closer study of the tests confirms this conclusion.

RAM

The memory subsystem does not have that many characteristics that matter to us:

- Type of memory;

- Real and effective frequency;

- The number of channels;

- Timing Scheme;

- Volume

Memory type

It determines the remaining memory characteristics, but it is not important for performance in itself.

Frequency

By itself, memory frequency is not important. What matters is the bandwidth it provides. Memory bandwidth is the product of the effective frequency and the memory bus width. The memory bus width in modern PCs is 64 bits, so the bandwidth of DDR2-800 memory is 6400 MB/s. This figure appears as one of the memory designations (PC2-6400, for example).
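The formula above fits in a few lines of Python. The function name and the `channels` parameter are our illustrative additions. The results are theoretical peaks, not measured throughput. The same arithmetic gives the 6.4 GB/s quoted earlier for an 800 MHz effective, 64-bit FSB.

```python
def bandwidth_mb_s(effective_mt_s: float, bus_width_bits: int = 64, channels: int = 1) -> float:
    """Theoretical peak bandwidth in MB/s.

    effective transfer rate (MT/s) x bus width in bytes x number of channels.
    """
    return effective_mt_s * (bus_width_bits / 8) * channels


print(bandwidth_mb_s(800))              # 6400.0  -> DDR2-800, hence "PC2-6400"
print(bandwidth_mb_s(1600))             # 12800.0 -> DDR3-1600, "PC3-12800"
print(bandwidth_mb_s(800, channels=2))  # 12800.0 -> DDR2-800 in dual channel
print(bandwidth_mb_s(800) / 1000, "GB/s")  # 6.4 GB/s -> 800 MHz effective FSB
```

Doubling the result on paper is easy, by raising the frequency or adding a channel. The tests below show how little of that reaches real applications.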

The following graph shows the performance gain when memory bandwidth increases from 6400MB/s to 12800MB/s, i.e. doubles.

Not serious at all: in most cases, doubling memory bandwidth raises performance by no more than 5-6%. Only one conclusion suggests itself: the bandwidth of modern memory is sufficient.

In fact, the same conclusion settles the question of system bus bandwidth as well.

Number of channels

Multichannel memory modes, again, are designed to increase bandwidth.

Enabling dual-channel mode gives at best a 2-3% performance boost. The much-praised triple-channel controller of Intel Core i7 processors on the S1366 platform in most cases cannot even pull ahead of single-channel mode. The reason is the increased number of clock cycles spent on synchronizing such a scheme.

Timing Scheme

Memory timings are signal delays, expressed in clock cycles, during memory access operations. There are many timings in total, but the main scheme includes the 4 most important: CAS Latency, RAS to CAS Delay, RAS Precharge Time and Row Active Time. The lower the delays, the faster memory can be accessed. However, as frequency increases, the delays have to be raised to maintain stability. Therefore, in practice you have to decide what is more important: memory bandwidth or access speed.
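Because timings are counted in clock cycles, the same number means a shorter real delay at a higher frequency. This is exactly why the bandwidth-versus-latency trade-off above is less one-sided than it looks. Below is a minimal Python sketch, assuming DDR memory: two transfers per clock, so one cycle lasts 2000 / (effective MT/s) nanoseconds. The two kits are hypothetical examples.

```python
def latency_ns(timing_cycles: int, effective_mt_s: float) -> float:
    """Convert a timing given in clock cycles to nanoseconds.

    DDR memory transfers data twice per clock, so the real clock is half the
    effective rate and one cycle lasts 2000 / effective_mt_s nanoseconds.
    """
    return timing_cycles * 2000 / effective_mt_s


# Two hypothetical kits: a lower-clocked one with tight timings
# and a faster one whose CAS latency had to be raised for stability
for name, cl, rate in [("DDR2-800 CL4", 4, 800), ("DDR2-1066 CL5", 5, 1066)]:
    print(f"{name}: {latency_ns(cl, rate):.2f} ns")
# DDR2-800 CL4: 10.00 ns
# DDR2-1066 CL5: 9.38 ns
```

Here the faster kit with the "worse" timing still ends up with a slightly shorter real delay and more bandwidth.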

The effect of timings on performance is also very small. On systems with the memory controller built into the processor, lowering the timings by one step can gain 2-5%. On systems with the memory controller in the chipset, the same result requires lowering them by two steps.

As for the sensitivity of individual applications to timings, the picture is about the same as with memory bandwidth.

Volume

This is one of the pressing questions, and one that is hard in principle to answer definitively.

In general, 2GB of memory is enough for most applications and games. Some games respond very well to an increase to 3-4GB. Going further makes no sense, either in games or in other ordinary tasks.

When might you really need more memory than the ordinary 3-4GB?

- Professional graphics work. Storing the edit history for heavy files can eat up a lot of RAM, but in practice this only shows up as slightly faster undo/redo.

- Several virtual machines. Let us leave aside whether you actually need several VMs running at once on one computer. If you do, you will naturally need the memory as well.

- Web servers, terminal servers, etc. In a word, servers. Serious servers with a large number of simultaneously running applications may require 16GB, 32GB or even more, but that question is beyond the scope of this article =)

Some may cite improved multitasking as an argument for large amounts of memory (incidentally, ardent supporters of many-core configurations like the same argument). I would advise asking yourself this: how often will you, for example, play Crysis, rip a fresh BD disc and archive something in the meantime, all at once? It seems to me that very, very rarely. And if you do want to do something like that, performance will most likely be limited by the disk subsystem, and no amount of memory will help.

One way to put a large amount of memory to use is to disable the swap file or move it to a RAM disk. But this too is a double-edged sword.

By the way, to work with more than 4GB of memory you will need a 64-bit operating system (in practice, 32-bit Windows usually sees only about 3.25GB). Yes, there is a crutch in the form of PAE, but it is still a crutch... And for a single application to address more than 4GB, the application itself must also be 64-bit.
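Where does the 4GB limit come from? A 32-bit address can distinguish only 2^32 bytes. Part of that space is reserved for devices, such as the video card's memory aperture, which is why a 32-bit OS sees noticeably less than 4GB. PAE widens physical addresses to 36 bits, but each process still works with 32-bit addresses. This is a minimal Python sketch of the arithmetic only; the function name is ours.

```python
GIB = 2**30  # bytes in one gibibyte


def addressable_gib(address_bits: int) -> float:
    """Size of an address space of the given width, in GiB."""
    return 2**address_bits / GIB


print(addressable_gib(32))  # 4.0  - 32-bit addresses (32-bit OS or application)
print(addressable_gib(36))  # 64.0 - physical addresses with PAE
```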

One more question remains: the configuration of memory modules. The optimal amount can be assembled in several ways: 1x2GB, 2x1GB, 2x2GB, 3x1GB, 1GB + 2GB... In principle, any option is acceptable. Modern memory controllers are not very picky; it is enough for all modules to have the same operating mode in their SPD. But in general, the fewer the modules, the better, since this reduces the load on the controller and the power consumption of the system.

Video card

With the video card, the situation is quite different from that of the central processor.

To begin with, it would be good to figure out why this device is needed at all. Practice shows that even among people who are far from beginners, there are some who do not quite understand this.

What is the video card for?

- The video card as a graphic adapter is used to display the image on the screen;

- The video card as a 3D accelerator is used to process three-dimensional graphics.

- The video card is NOT used as an accelerator during 2D graphics processing;

- The video card is NOT used as an accelerator during video playback.

Before talking about video cards, one thing needs to be clarified. Central processors are manufactured at the manufacturer's own factory, labeled there, and then shipped to distributors. Video cards are different.

Of course, the GPU developer designs a reference PCB, defines reference specifications and so on, but its factory turns out only the graphics processor (sometimes a few auxiliary chips as well, but that does not change the essence). The cards themselves are assembled by subvendors, and here they have full freedom. The first wave of cards follows the reference design, because the subvendor simply has not had time to develop its own PCB. After that comes a wide scattering of exclusive solutions. And the subvendors start experimenting with frequencies right away...

Therefore, when comparing the performance of specific products, it is important to know how close their characteristics are to the reference ones.

Video card as output device

From this point of view, the characteristics that interest us are purely “consumer” ones:

- Internal interface;

- External interfaces;

- Supported image output modes;

- Supported OS;

- TDP

Internal interface

As things stand now, the choice here is small: either a discrete card with a PCI Express interface, or a solution integrated into the chipset that uses the same PCI Express. Intel even went so far as to embed the video core into the central processor itself, in Core i3 and Pentium G. But that changed little: the core can only be used with certain chipsets, Intel H55/H57, which, IMHO, killed a good idea. AGP cards are completely extinct, and the occasional cards for good old PCI are exotic. As for PCI Express 2.0 support, it matters if you plan to build a system with several high-performance video cards. In that case motherboards usually switch the PCIe slots to slower modes, and the doubled bandwidth comes in handy.

External interfaces

Here the choice depends solely on what you are going to display the image on. Virtually all video cards support DVI and VGA (the latter usually via a DVI-VGA adapter). Many also support HDMI, more often through a DVI-HDMI adapter. DisplayPort is rare, but then you rarely see it on monitors either =)

By the way, do not be upset if the chosen card lacks HDMI entirely. Most reviews of attempts to use this interface for its intended purpose are full of anger and abuse. Personal experience gave the same picture: a wonderful image through the “outdated” VGA and a terribly poor one through the “new high-tech” HDMI. On top of that, the corresponding cables are outrageously overpriced.

Supported image output modes

Here, the multi-monitor capabilities of the card matter most. Even with 3-4 external interfaces, a card can usually output to only 2 of them at the same time. HDMI is also often wired in parallel with DVI, so those two cannot be used together. So if you want to connect 3 or more monitors, you will either have to look for a card with that capability (a good manufacturer will not forget to mention it as a key feature) or plan for a second video card.

As for the output modes themselves, even budget solutions support a wide range of them, up to 2560x1600. Still, nothing stops you from double-checking.

Supported OS

It all depends on the availability and quality of drivers for the OS you need. At one time this was an especially painful question for fans of penguin-branded operating systems, but now everything seems to be fine. As for Windows, the quality of AMD drivers is a frequent subject of holy wars. It is hard to say anything objective here, but one stone can still be thrown into the “red” garden. AMD releases driver updates strictly once a month, and neither suddenly discovered bugs, nor the arrival of UFOs, nor anything else can affect this schedule. NVIDIA does not suffer from this madness: updates are released as needed, and public beta versions are always available. Draw your own conclusions.

Graphics card as a 3D accelerator

The video card's capabilities as a 3D accelerator determine the overall system performance in three-dimensional applications, primarily games. When building a gaming machine, the focus should be on the video card, not on the processor and memory.

In terms of performance, the following characteristics are important to us:

- GPU and its revision;

- Number of texture units and raster operation units (ROPs);

- Number and type of shader processors;

- GPU frequencies (may be set separately for the raster and shader domains);

- Video memory characteristics (memory bus width, memory type, real and effective frequency, amount);

- Supported versions of DirectX, OpenGL and Shader Model.

Graphics processor

It would be logical to expect that, as with the CPU, this part would analyze each characteristic with graphs showing its influence, and so on. However, that trick won't work with video cards: their architectures differ too much, and so do the characteristics of the final products.

For example, the following cards are all built on essentially the same G92 graphics processor:

- GeForce 8800GTS/512MB

- GeForce 9800GTX

- GeForce 9800GTX+

- GeForce 250GTS

They differ mainly in clock frequencies, manufacturing process and TDP.

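Cards as weak as the GeForce 8400 or Radeon HD4300 are sometimes sold with large amounts of video memory. Ridiculous. What matters for video memory is its bandwidth, which, as with system RAM, is the product of bus width and effective frequency. A 256bit GDDR3 bus at 2 GHz gives the same bandwidth as a 128bit GDDR5 bus at 4 GHz.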
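The video memory comparison above (256bit GDDR3 at 2 versus 128bit GDDR5 at 4) is easiest to check with the same product formula used earlier for system RAM. Below is a minimal Python sketch. It assumes the 2 and 4 are effective data rates in GHz; that is our reading, since the text does not spell out the units. The function name is hypothetical.

```python
def video_bandwidth_gb_s(bus_width_bits: int, effective_ghz: float) -> float:
    """Peak video memory bandwidth in GB/s: bus width in bytes x effective rate."""
    return bus_width_bits / 8 * effective_ghz


gddr3_wide = video_bandwidth_gb_s(256, 2.0)    # 256-bit GDDR3, 2 GHz effective
gddr5_narrow = video_bandwidth_gb_s(128, 4.0)  # 128-bit GDDR5, 4 GHz effective
print(gddr3_wide, gddr5_narrow)  # 64.0 64.0
```

A narrow bus with fast memory can match a wide bus with slower memory, so neither figure means much on its own.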

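As for the amount of video memory, 512MB to 1GB is the range to look at, with 1GB being worthwhile at high resolutions (1920x1080 and up).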

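Also keep in mind that on dual-GPU cards the memory is not shared: each GPU has its own. That is, a Radeon HD4870X2 2GB has 2 graphics processors with 1GB each, so effectively it is a 1GB card =)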

API

The supported API and Shader Model versions determine which games and effects the card can handle.

Frame rate

FPS (Frames Per Second) is the number of frames the video card renders per second.

The “minimum playable level” is 25-30FPS.

The “comfort level” is double, i.e. 50-60FPS.

I won't go into where these numbers come from. They are common values, and they became so for a reason. If you are curious, look it up yourself.

As with any dynamic value, you can consider the maximum, minimum, and average FPS.

Peak FPS is of little interest to us: it is very short-lived and does not affect the overall picture. The average and minimum, on the other hand, matter a great deal.

Some game engines, however, suffer from an unpleasant quirk: they lag (stutter) even at seemingly decent FPS. Fortunately, this is rare.
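The difference between average and minimum FPS, and the stutter described above, is easiest to see from per-frame render times. Below is a minimal Python sketch with a hypothetical capture. Here "minimum FPS" is computed from the single slowest frame. Many benchmarks instead report the lowest per-second average, which smooths out short hitches.

```python
def fps_stats(frame_times_ms: list[float]) -> dict[str, float]:
    """Average and minimum FPS from per-frame render times in milliseconds."""
    total_ms = sum(frame_times_ms)
    average_fps = len(frame_times_ms) / (total_ms / 1000)  # frames per second overall
    minimum_fps = 1000 / max(frame_times_ms)               # the slowest single frame
    return {"average": average_fps, "minimum": minimum_fps}


# Hypothetical capture: mostly ~16.7 ms frames (60 FPS) with one 100 ms hitch
frames = [16.7] * 59 + [100.0]
stats = fps_stats(frames)
print(f"average {stats['average']:.1f} FPS, minimum {stats['minimum']:.1f} FPS")
# average 55.3 FPS, minimum 10.0 FPS
```

The average still looks comfortable, yet one long frame is felt as a twitch. That is why the minimum deserves as much attention as the average.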

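In other words, aim for an average of 50-60 FPS with the minimum not dropping below 25-30. Otherwise, lower the resolution, quality or anti-aliasing settings.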

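A separate note on full-screen anti-aliasing (FSAA). It smooths the jagged edges of objects, but it is one of the most demanding settings, and enabling it can noticeably reduce FPS =)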

DXVA

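DirectX Video Acceleration is hardware acceleration of video decoding on the video card. It is supported starting with GeForce 8 (except cards based on the G80) and Radeon HD2000. The player and decoder must support it as well; in Vista/7, for example, this works via the Enhanced Video Renderer.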

GPGPU

General-Purpose computing on Graphics Processing Units, i.e. using the video card for computations that have nothing to do with graphics.

There are 4 main technologies:

- CUDA (NVIDIA, GeForce 8 and later);

- Stream (AMD, Radeon HD4000 and later);

- Compute Shader (part of MS DirectX 11);

- OpenCL (an open cross-platform standard).

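CUDA is the most widespread of them. A list of applications that support it can be found on NVIDIA's website: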

http://www.nvidia.com/object/cuda_home.html

Among them are distributed computing projects such as Folding@home and SETI@home.

CoreAVC is a decoder that, as an alternative to DXVA, can use CUDA to accelerate decoding of H264/AVC video.

CUDA-accelerated video transcoding is supported by Badaboom, MediaCoder, TMPGEnc, Movavi Video Suite and Cyberlink products.

When CUDA is enabled, the performance gain in these applications ranges from one and a half to TEN (!!!) times. The CPU-GPU pairing naturally plays a big role, especially the GPU, but even mid-range video cards show very interesting results.
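One standard way to see why the CPU still matters here is Amdahl's law. This is our addition; the article itself does not use it. If only part of the job runs on the GPU, the rest still runs at CPU speed and caps the total gain. Below is a minimal Python sketch. The offloaded fractions and the 20x speedup of the GPU part are hypothetical.

```python
def overall_speedup(accelerated_fraction: float, part_speedup: float) -> float:
    """Amdahl's law: total speedup when only part of the work is accelerated.

    accelerated_fraction: share of the original run time that moves to the GPU.
    part_speedup: how many times faster that share becomes.
    """
    remaining = (1 - accelerated_fraction) + accelerated_fraction / part_speedup
    return 1 / remaining


# Hypothetical: the GPU makes the offloaded part 20x faster
for fraction in (0.5, 0.9, 0.99):
    print(f"{fraction:.0%} offloaded -> {overall_speedup(fraction, 20):.1f}x")
```

Even a very fast GPU part yields under 2x when half the work stays on the CPU. This fits the wide one-and-a-half-to-tenfold spread between applications.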

Teh End?

But what about the motherboard, hard drives, power supply, etc.?

Have patience. The articles are already enormous: the first part was criticized for being long, and this one is twice as long. Everything will come. There will be 4 parts in total.

Source: https://habr.com/ru/post/80962/
