An introduction to the levels of parallelization

A problem can be parallelized at several levels. There is no sharp boundary between these levels, and it can be difficult to assign a particular parallelization technology to just one of them. The division given here is a convention that serves to demonstrate the variety of approaches to parallelization.

Task-level parallelization

Parallelizing at this level is often both the easiest and the most efficient option. It is possible when the problem naturally consists of independent subtasks, each of which can be solved separately. A good example is compressing an audio album: each track can be processed separately, because it is not related to the others.
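As a minimal sketch of the album example, the snippet below treats each track as an independent task. It is written under assumptions that are not in the original text: `zlib` stands in for a real audio encoder, the track names and contents are synthetic, and `compress_track` and `compress_album` are hypothetical helper names.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def compress_track(samples):
    """Stand-in for an audio encoder: compress one track on its own."""
    return zlib.compress(samples, 6)


def compress_album(tracks, workers=4):
    """Compress every track as a separate, independent task."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # One task per track; no task reads data that belongs to another.
        futures = {name: pool.submit(compress_track, data)
                   for name, data in tracks.items()}
        return {name: future.result() for name, future in futures.items()}


# Hypothetical album: three "tracks" filled with synthetic bytes.
album = {"track%02d" % i: bytes([i]) * 50_000 for i in range(1, 4)}
compressed = compress_album(album)
```

Because the tracks share no data, the tasks need no synchronization apart from collecting the results at the end.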

The operating system demonstrates task-level parallelization when it runs programs on different cores of a multi-core machine. If one program is playing a movie and another is a file-sharing client, the operating system can easily arrange for them to run in parallel.

Other examples of parallelization at this level are the parallel compilation of files in Visual Studio 2008 and batch data processing.

As mentioned above, this type of parallelization is simple and in some cases very effective. But it does not apply to a homogeneous task. The operating system cannot speed up a program that uses only one processor, no matter how many cores are available. Likewise, a program that splits video encoding into two tasks, one for sound and one for image, gains nothing from a third or fourth core. To parallelize homogeneous tasks, you need to go down a level.

Data-level parallelism

The name “data parallelism” comes from the fact that the parallelism consists in applying the same operation to a set of data elements. An archiver that uses several processor cores for compression is an example: the data is divided into blocks, which are all processed (compressed) in the same way on different cores.

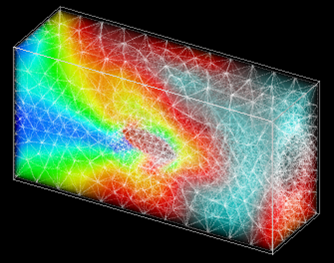
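The block-wise archiver described above can be sketched roughly as follows. This is only an illustration under stated assumptions: the 64 KiB block size, the use of `zlib`, and the helper names `split_into_blocks`, `compress_blocks` and `decompress_blocks` are choices made for this example, not details from the text.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def split_into_blocks(data, block_size):
    """Cut one buffer into consecutive blocks of at most block_size bytes."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def compress_blocks(data, block_size=64 * 1024, workers=4):
    """Apply the same operation (compression) to every block."""
    blocks = split_into_blocks(data, block_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() runs the same function on each block and preserves block order.
        return list(pool.map(zlib.compress, blocks))


def decompress_blocks(packed):
    """Restore the original buffer by decompressing the blocks in order."""
    return b"".join(zlib.decompress(block) for block in packed)


payload = bytes(range(256)) * 2000  # 512,000 bytes of synthetic data
packed = compress_blocks(payload)
```

Unlike the task-level case, there is a single input here; the parallelism comes from cutting it into pieces that all receive identical treatment.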

This type of parallelism is widely used in numerical simulation. The computational domain is represented as a set of cells that describe the state of the medium at the corresponding points in space: pressure, density, gas composition percentages, temperature, and so on. The number of such cells can be huge, in the millions or billions. Each of these cells must be processed in the same way. The data parallelism model is extremely convenient here, since it lets you load each core by assigning it a certain set of cells. The computational domain is divided into geometric regions, such as parallelepipeds, and the cells that fall inside a region are handed to a specific core for processing. In mathematical physics, this type of parallelism is called geometric parallelism.

Although geometric parallelism may seem similar to task-level parallelization, it is more complex to implement. In simulation problems, the data computed at the boundaries of the geometric regions has to be transferred to other cores. Special techniques are also often used to speed up the calculation by balancing the load between computing nodes.
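To make the boundary transfer concrete, here is a small sketch on a one-dimensional domain. It assumes a simple three-point averaging step as the per-cell update and copies one "ghost" cell from each neighbouring region before updating; the update rule and all function names are illustrative assumptions, not something the text prescribes.

```python
from concurrent.futures import ThreadPoolExecutor


def smooth_serial(u):
    """Reference: one averaging step over the whole domain; end cells stay fixed."""
    out = list(u)
    for i in range(1, len(u) - 1):
        out[i] = (u[i - 1] + u[i] + u[i + 1]) / 3.0
    return out


def smooth_subdomain(cells, left_ghost, right_ghost):
    """Update one region; a ghost value of None marks a physical boundary."""
    padded = [left_ghost] + cells + [right_ghost]
    out = []
    for i in range(1, len(padded) - 1):
        if padded[i - 1] is None or padded[i + 1] is None:
            out.append(padded[i])  # boundary cell of the whole domain: unchanged
        else:
            out.append((padded[i - 1] + padded[i] + padded[i + 1]) / 3.0)
    return out


def smooth_decomposed(u, parts):
    """Split the domain into contiguous regions and update each one separately."""
    n = len(u)
    bounds = [n * p // parts for p in range(parts + 1)]
    jobs = []
    for lo, hi in zip(bounds, bounds[1:]):
        # Boundary exchange: take one cell from each neighbouring region.
        left = u[lo - 1] if lo > 0 else None
        right = u[hi] if hi < n else None
        jobs.append((u[lo:hi], left, right))
    with ThreadPoolExecutor(max_workers=parts) as pool:
        pieces = pool.map(lambda job: smooth_subdomain(*job), jobs)
    return [value for piece in pieces for value in piece]


field = [float(i * i % 7) for i in range(20)]
```

With the ghost cells in place, `smooth_decomposed(field, 4)` produces the same values as `smooth_serial(field)`; without them, the cells next to each cut would be computed from incomplete data.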

In a number of algorithms, regions where processes are actively taking place take longer to compute than regions where the medium is calm. As shown in the figure, splitting the computational domain into unequal parts can load the cores more evenly. Cores 1, 2, and 3 process small regions where the body is moving, and core 4 processes a large region that has not yet been disturbed. All this requires additional analysis and the creation of a balancing algorithm.
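One possible balancing scheme, sketched under assumptions, is to estimate a cost for every cell and cut the domain where the running total of cost crosses equal shares. The cost numbers below (10 for an active cell, 1 for a calm one) and the function names are hypothetical; a real balancer would measure or model these costs.

```python
import bisect
from itertools import accumulate


def even_bounds(n_cells, parts):
    """Cut indices for regions with (almost) the same number of cells."""
    return [n_cells * p // parts for p in range(parts + 1)]


def balanced_bounds(costs, parts):
    """Cut indices for contiguous regions with roughly the same total cost."""
    prefix = [0] + list(accumulate(costs))  # prefix[i] = cost of cells 0..i-1
    total = prefix[-1]
    bounds = [0]
    for p in range(1, parts):
        target = total * p / parts
        # First cut position whose accumulated cost reaches this core's share.
        cut = bisect.bisect_left(prefix, target, lo=bounds[-1])
        bounds.append(min(cut, len(costs)))
    bounds.append(len(costs))
    return bounds


def loads(costs, bounds):
    """Total cost assigned to each region."""
    return [sum(costs[lo:hi]) for lo, hi in zip(bounds, bounds[1:])]


# Hypothetical domain: 30 expensive "active" cells followed by 100 calm ones.
costs = [10] * 30 + [1] * 100
naive = loads(costs, even_bounds(len(costs), 4))
balanced = loads(costs, balanced_bounds(costs, 4))
```

For this made-up domain the equal-size split gives one core 302 units of work and the others about 33 each, while the cost-based split yields three regions of 10 cells and one of 100 cells with 100 units each, which mirrors the situation described for cores 1 to 4.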

The reward for this added complexity is the ability to solve problems involving the long-term motion of objects in an acceptable computation time. An example is a rocket launch.

Algorithm-level parallelization

The next level is the parallelization of individual procedures and algorithms. These include parallel sorting algorithms, matrix multiplication, and the solution of systems of linear equations. At this level of abstraction, it is convenient to use a parallel programming technology such as OpenMP.

OpenMP (Open Multi-Processing) is a set of compiler directives, library routines, and environment variables designed for programming multi-threaded applications on shared-memory multiprocessor systems. OpenMP uses the fork-join model of parallel execution. An OpenMP program begins as a single thread of execution called the initial thread. When a thread encounters a parallel construct, it creates a new team of threads, consisting of itself and some number of additional threads, and becomes the master of the new team. All members of the new team (including the master thread) execute the code inside the parallel construct. At the end of the parallel construct there is an implicit barrier. After the parallel construct, only the master thread continues to execute user code. Parallel regions may be nested inside other parallel regions.
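The execution model described above (commonly called fork-join) can be imitated in a few lines. The sketch below is an analogy written with Python's `threading` module, not OpenMP itself; `parallel_region`, `body` and the four-way split of the data are hypothetical names and choices made for illustration.

```python
import threading


def parallel_region(body, num_threads):
    """Run body(thread_id) on a team: the calling thread plus helper threads."""
    helpers = [threading.Thread(target=body, args=(tid,))
               for tid in range(1, num_threads)]
    for thread in helpers:
        thread.start()   # fork: the team starts executing the region
    body(0)              # the encountering thread works as team member 0
    for thread in helpers:
        thread.join()    # join: plays the role of the barrier at the region's end


TEAM_SIZE = 4
data = list(range(1, 101))
partial = [0] * TEAM_SIZE


def body(tid):
    # Each team member sums every TEAM_SIZE-th element, starting at its own id,
    # and writes only to its own slot, so no locking is needed.
    partial[tid] = sum(data[tid::TEAM_SIZE])


parallel_region(body, TEAM_SIZE)
total = sum(partial)  # after the region, only the original thread continues
```

In real OpenMP code the team creation and the barrier are produced by the compiler from a directive, so the programmer writes only the body of the region.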

Thanks to the idea of "incremental parallelization", OpenMP is ideal for developers who want to quickly parallelize computational programs with large parallel loops. The developer does not write a new parallel program, but adds OpenMP directives step by step to the text of the sequential program.

Implementing parallel algorithms is a fairly complex task, so there are quite a few libraries of parallel algorithms that let you assemble programs from ready-made building blocks without going into the internals of parallel data processing.

Instruction-level parallelism

The lowest level of parallelism is implemented by the processor executing several instructions in parallel. At the same level is the processing of several data items with a single processor instruction; here we are talking about technologies such as MMX, SSE, SSE2 and so on. This kind of parallelism is sometimes singled out as an even deeper level: bit-level parallelism.
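The idea of handling several data items with one operation can be imitated in software. The sketch below packs four 8-bit values into one 32-bit word and adds all four lanes with a handful of integer operations. It is an illustration of the principle only, not MMX or SSE code, and the helper names `pack4`, `unpack4` and `add4` are made up for this example.

```python
LOW7 = 0x7F7F7F7F  # the low seven bits of every 8-bit lane
HIGH = 0x80808080  # the top bit of every 8-bit lane


def pack4(values):
    """Pack four 8-bit values into one 32-bit word, first value in the low byte."""
    word = 0
    for i, value in enumerate(values):
        word |= (value & 0xFF) << (8 * i)
    return word


def unpack4(word):
    """Split a 32-bit word back into its four 8-bit lanes."""
    return [(word >> (8 * i)) & 0xFF for i in range(4)]


def add4(a, b):
    """Add four 8-bit lanes at once; each lane wraps around modulo 256."""
    low = (a & LOW7) + (b & LOW7)  # carries cannot leave their own lane
    return low ^ ((a ^ b) & HIGH)  # fold in the top bits without a carry


result = unpack4(add4(pack4([1, 2, 3, 250]), pack4([10, 20, 30, 10])))
# result == [11, 22, 33, 4]; the last lane wraps because 250 + 10 = 260
```

Hardware vector instructions do the same kind of lane-wise work directly, on wider registers and without the masking tricks.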

A program is a stream of instructions executed by the processor. The order of these instructions can be changed, and they can be distributed into groups that are executed in parallel, without changing the result of the program as a whole. This is called instruction-level parallelism. To implement it, microprocessors use several instruction pipelines together with techniques such as branch prediction and register renaming.
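A toy model can show what "distributing instructions into groups" means. The sketch below places each instruction in the earliest step at which its inputs are available. It assumes that every register is written only once (roughly the situation after register renaming) and that every instruction takes one step; the instruction format and the names `schedule` and `run` are hypothetical.

```python
import operator

OPS = {"add": operator.add, "sub": operator.sub, "mul": operator.mul}


def schedule(instructions):
    """Group (dest, op, src1, src2) instructions into steps.

    Instructions inside one step do not depend on each other. Assumes each
    destination register is written exactly once.
    """
    ready_at = {}  # register -> first step at which its value can be read
    steps = []
    for instr in instructions:
        dest, _, src1, src2 = instr
        step = max(ready_at.get(src1, 0), ready_at.get(src2, 0))
        while len(steps) <= step:
            steps.append([])
        steps[step].append(instr)
        ready_at[dest] = step + 1
    return steps


def run(instructions, inputs):
    """Execute the instructions one after another and return all registers."""
    regs = dict(inputs)
    for dest, op, src1, src2 in instructions:
        regs[dest] = OPS[op](regs[src1], regs[src2])
    return regs


program = [
    ("t1", "add", "a", "b"),
    ("t2", "mul", "c", "d"),
    ("t3", "add", "t1", "t2"),
    ("t4", "sub", "a", "d"),
    ("t5", "mul", "t3", "t4"),
]
inputs = {"a": 2, "b": 3, "c": 4, "d": 5}
steps = schedule(program)
reordered = [instr for step in steps for instr in step]
```

Here `t1`, `t2` and `t4` land in the first step because they read only the inputs, `t3` follows in the second and `t5` in the third; running `reordered` gives the same register values as running `program` in its original order.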

Programmers rarely look at this level, and there is usually little reason to. The compiler does the work of arranging instructions in the order most convenient for the processor. This level of parallelization is of interest only to a narrow group of specialists who squeeze every last bit of performance out of SSEx, or to compiler developers.

In place of a conclusion

This text does not claim to be a complete account of the levels of parallelism; it simply shows how many-sided the question of using multi-core systems is. For those interested in software development, I would like to suggest a few resources devoted to parallel programming:

- Community of software developers. I am not an Intel employee, but as a member of this community I highly recommend this resource. It has many interesting articles, blog entries and discussions related to parallel programming.

- Reviews of articles on parallel programming using OpenMP technology.

- http://www.parallel.ru/ Everything about the world of supercomputers and parallel computing. Academic community. Technologies, conferences, discussion club (forum) on parallel computing.

Source: https://habr.com/ru/post/80342/
