Notes on NLP (Part 9)

(The first parts: 1 2 3 4 5 6 7 8 ). Yes, the minus will rejoice, today I present to the readers the last, apparently, part of the Notes. As expected, we will talk about further semantic analysis; I also speculate a little about what, in principle, you can do in our area and what the difficulties of a “scientific-political” nature are.

In the previous part, we discussed the “syntax-semantic analyzer”, when analyzing a phrase, paying attention not only to the syntax of the phrase, but also to the meaning of the words in the sentence. Then it seemed to me that the easiest way to equate "semantics" to the translation: we are able to translate a word, which means we understand its meaning.

It must be said that in some cases, knowledge of the basic semantics of a word is necessary even for syntactic analysis, if syntactic analysis is understood not only to establish a connection between words, but also to reveal its role. For example, the phrases “Ivan came from home” and “Ivan came from politeness” are syntactically the same. However, in the first case, “from home” is the circumstance of the place (from where), and in the second, the reason (why). If you do not see the difference between "home" and "politeness", you will not be able to determine the role of a member of a sentence.

')

However, this is so, note aside. The main question is: is there any other way to define “meaning” other than translation into another language? Apparently, words can only be defined through other words, and this is the problem. The comments have already discussed “mediating languages” - interlingua and aymara. In fact, their purpose is precisely the “reference” description of the semantics of the word. The words of a natural language (Russian, English, etc.) are in some way translated into interlingua, and then from interlingua to the target language. Accordingly, the task of the interlingua is to store the meaning of the word obtained from the source language and map the meaning to the target language.

However, there are attempts to approach the task more scientifically, namely, to develop a special formal language for the description of meanings. My supervisor (Vitaly Alekseevich Aces) himself was engaged in this area. It is clear that his approach is best known to me. However, I think that there is more in common than different approaches between different approaches.

The meaning of the work is as follows. Any explanatory dictionary treats words informally: one is determined through the other. To a certain extent, this requires a certain cultural “background” from us, otherwise we risk falling into the trap of Lemovian sepules , that is, walking in a circle, instead of the desired meaning getting new and new words on our head.

Here's the math, let's say, doesn't work that way. There are axioms, they are few. And then, using strict language of reasoning, theorems are built on these axioms. Is it possible to describe the words of the Russian language in the same way? Let's see what happened in Melchuk:

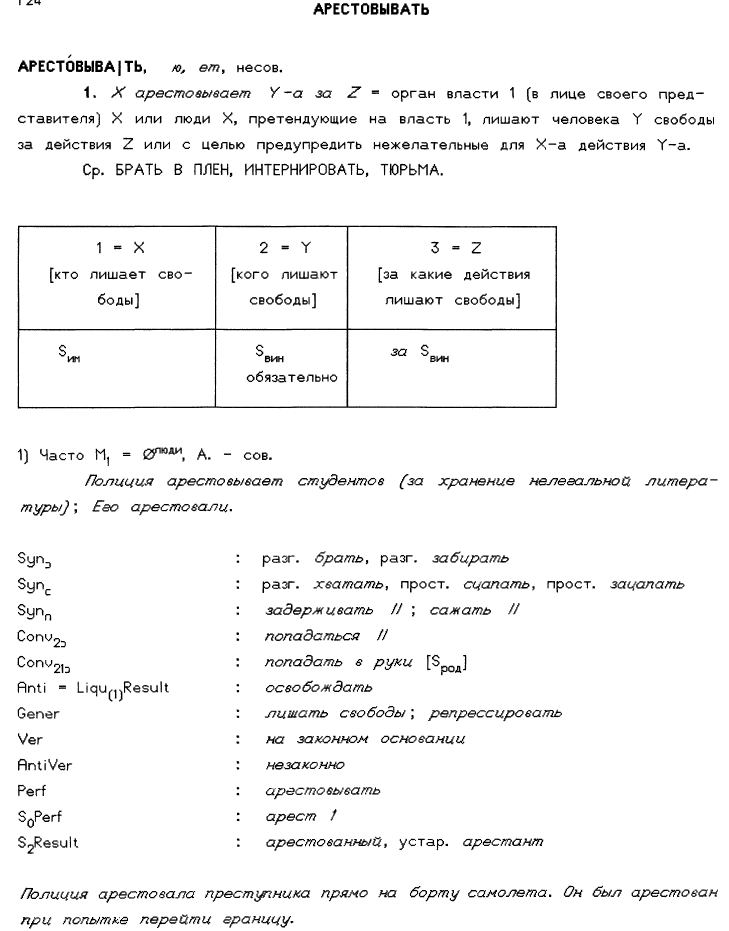

You see, the word is described as formally as possible, even with letter variables :) Pay attention to these chased formulations:

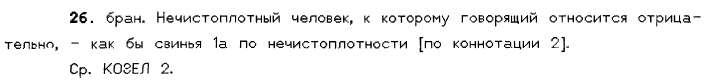

("Causated" means "called"). Note that a person does not even act “just like a pig,” but as “pig 2b”:

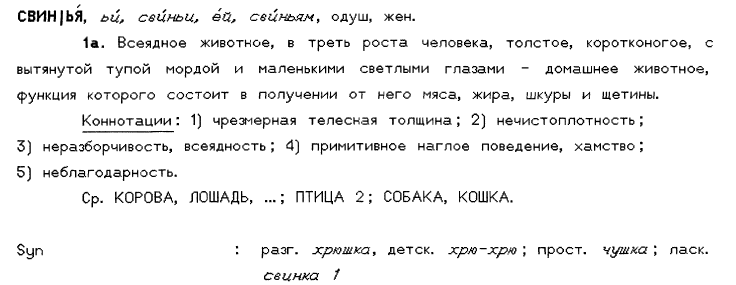

Accordingly, the “pig 1a” mentioned here is described as follows:

Fortunately, Melchuk is a famous person, he does not need my compliments. But in any case, I want to say that the very idea of such a dictionary is very progressive, and regardless of the role that the dictionary will eventually occupy in science and culture of the 20th century, I would pay tribute to this work. So you think, how many humanitarians are capable of expressing interpretations of words in such a mathematical way?

In addition to the strict description of words, Melchuk has another great idea: lexical functions. Their essence is that we use a variety of words to describe the same semantic operation. For example, Melchuk uses the Magn () function, which “amplifies” the word argument passed to her. What is common between the words "heavy", "stormy" and "big"? Here's what:

Secondly, the “semantic funtsky” mechanism, similar to Melchuk’s lexical functions, was developed. The difference is that Melchuk still created a dictionary for people - he explains the words of the language. Aces, by and large, operates only with meanings, and which specific words are behind them - the second question. If a word can be described using “atoms” and semantic functions, it is described in the same way. For example, there are functions “become”, “have property” and “strengthen”: IncepCopul (), Copul () and Magn (). Then with their help, you can express the words mulatto , blush and huge as follows:

Here I would not like to focus on the features of this particular formalism - approaches, ideas can be very different. The main idea should be clear: to develop a system of "atomic" concepts and "functions" over concepts, with the help of which one can describe new concepts. Then you can try to develop a formal explanatory dictionary that mathematically describes words (rather than driving around in a circle, trying to determine one through the other). Thus, we move away from words and arrive at meanings.

If a word is described by a formula, and a sentence, respectively, is a composition of formulas, then words and sentences can somehow be studied mathematically. For example, “open parentheses”, apply operations and so on. Food for the mind in this idea abound ... By the way, something like this is expressed in the works of Harris about Operator Grammars , but honestly, I have not mastered his works. The text is much closer to traditional linguistics (many words and little math :)).

Semantic formulas can be useful at least in machine translation. Even if you cannot translate any piece, you can simply “uncover” the formula, replacing lexical functions with chased mathematical text. Suppose you know the word "mulatto" and "woman" in English, but you do not have the English word "mulatto" in the dictionary. But there is a Russian-language explanatory semantic dictionary. Then, having met the "mulatto", one can translate the corresponding phrase from the semantic dictionary: "mulatto, who is a woman."

Ideally, whole phrases from different languages should be mapped to the same semantic formulas. A teacher is a “teacher who is a woman.” And in English there is no one word “teacher”, they will write something like “female teacher”, if you need to underline the gender - but the output will be the same formula.

By adding to the database of translations of semantic formulas, it is possible to achieve less and less clumsiness of the translation. That is, the automatic translator starts with “the mulatto, who is a woman,” and with a full database already understands that the correct translation is just a “mulatto”. Just, in principle, as we are with you - without knowing the exact translation, we begin to explain the word through more simple ones.

And sometimes it will be (by the way, do not forget, I theorize!) Just to save the situation, if the required word in the target language simply does not exist - then the “disclosure of the formula” can give an idea of what is happening. An example is not idle. Say, in Finnish there is no verb "to have", no matter how strange it sounds. You can say “I have” (“you have”, “he has”), but you cannot “abstract” in the abstract. And to translate even such a simple phrase as “good to have a dog!” Turns out to be more difficult than it would seem. There is, however, the verb “owning”, but in Finnish “owning a dog well!” Sounds just as stupid as in Russian.

Now let's move from semantics to politics :)

The problem of computational linguistics is that testing any hypothesis requires a lot of effort. Well, imagine, tomorrow I’ll start writing XDG grammar for Russian. Maybe for retirement finish. At the same time (pobryuzhu bit, okay?) Pushing into the scientific community small prototypes is also not easy. For example, a couple of times my articles were rejected with such an explanation: it is not clear how the stated ideas are able to cope with the whole variety of complexities of natural language. The idea is quite understandable, and even correct. But, on the other hand, how can you prove the ability to “cope with the whole variety of difficulties” without writing a grammar / dictionary / whatever that can cope with everything? And this is a full-scale project.

For myself, I have come up with such a thing so far: NLP in education . Let's see how it works. The essence is as follows. If you look at the software that teaches physics or chemistry, everything is already fine there: the real virtual labs. From the programs that teach a foreign language, just want to cry. Everyone, I think, saw these miseries: nightmarish clipart images, a couple of hundreds of voiced words, colorful interfaces made on the knee ... And the output is just a hybrid of a book and a tape recorder, that is, in reality, the computer has not become something more progressive than traditional equipment.

So I think, what is missing in the educational software (for learning a language) to turn into a virtual laboratory? But NLP is not enough! Let's start with the simple: the same morphological analyzer / synthesizer. I am sure that such a software will be useful to every person learning a language. And if you go further? For example, now I run around the idea of "lego-cubes" for words. Imagine a card with the words on the computer screen. They can be glued together in phrases, and phrases - in sentences. But thus the words which are not combined among themselves will not be glued. For example, the "red" and "cow" do not stick together until you agree them in gender, number and case. You can come up with powerful tools for checking spelling (not as in Word, but for the needs and typical mistakes of newbies ...)

And why, actually, education? Yes, because the vocabulary of a novice is small, and the constructions used by him are also limited. So, even a prototype for 500 words can be really useful, it can be useful to someone, get attention ... And then it will result in something more serious. I don’t know yet :) But I haven’t decided on an existing project yet. What where is good? As far as I remember, in the Czech Republic they are trying to work with XDK. But this is the Czech language - so let's start with learning Czech, and then join, if the project is still alive at that time? ;) And so everywhere!

In general, on this positive note we end this long cycle. Further - most likely, in the wake of the discussions and at the request of readers. Well, or when there will be interesting news in our wonderful area. Thank you all for your attention!

In the previous part, we discussed the “syntax-semantic analyzer”, when analyzing a phrase, paying attention not only to the syntax of the phrase, but also to the meaning of the words in the sentence. Then it seemed to me that the easiest way to equate "semantics" to the translation: we are able to translate a word, which means we understand its meaning.

It must be said that in some cases, knowledge of the basic semantics of a word is necessary even for syntactic analysis, if syntactic analysis is understood not only to establish a connection between words, but also to reveal its role. For example, the phrases “Ivan came from home” and “Ivan came from politeness” are syntactically the same. However, in the first case, “from home” is the circumstance of the place (from where), and in the second, the reason (why). If you do not see the difference between "home" and "politeness", you will not be able to determine the role of a member of a sentence.

')

However, this is so, note aside. The main question is: is there any other way to define “meaning” other than translation into another language? Apparently, words can only be defined through other words, and this is the problem. The comments have already discussed “mediating languages” - interlingua and aymara. In fact, their purpose is precisely the “reference” description of the semantics of the word. The words of a natural language (Russian, English, etc.) are in some way translated into interlingua, and then from interlingua to the target language. Accordingly, the task of the interlingua is to store the meaning of the word obtained from the source language and map the meaning to the target language.

However, there are attempts to approach the task more scientifically, namely, to develop a special formal language for the description of meanings. My supervisor (Vitaly Alekseevich Aces) himself was engaged in this area. It is clear that his approach is best known to me. However, I think that there is more in common than different approaches between different approaches.

Combinatory dictionary

Probably, it will be fair to mention about the well - known explanatory-combinatorial dictionary of Melchuk before Tuzov. This is a truly grandiose undertaking: I can’t say exactly how many words of the Russian language were included in it, but for French over 20 years of work (I don’t know how active) only about five hundred words were described. That is a difficult matter.The meaning of the work is as follows. Any explanatory dictionary treats words informally: one is determined through the other. To a certain extent, this requires a certain cultural “background” from us, otherwise we risk falling into the trap of Lemovian sepules , that is, walking in a circle, instead of the desired meaning getting new and new words on our head.

Here's the math, let's say, doesn't work that way. There are axioms, they are few. And then, using strict language of reasoning, theorems are built on these axioms. Is it possible to describe the words of the Russian language in the same way? Let's see what happened in Melchuk:

|

You see, the word is described as formally as possible, even with letter variables :) Pay attention to these chased formulations:

|

("Causated" means "called"). Note that a person does not even act “just like a pig,” but as “pig 2b”:

|

Accordingly, the “pig 1a” mentioned here is described as follows:

|

Fortunately, Melchuk is a famous person, he does not need my compliments. But in any case, I want to say that the very idea of such a dictionary is very progressive, and regardless of the role that the dictionary will eventually occupy in science and culture of the 20th century, I would pay tribute to this work. So you think, how many humanitarians are capable of expressing interpretations of words in such a mathematical way?

In addition to the strict description of words, Melchuk has another great idea: lexical functions. Their essence is that we use a variety of words to describe the same semantic operation. For example, Melchuk uses the Magn () function, which “amplifies” the word argument passed to her. What is common between the words "heavy", "stormy" and "big"? Here's what:

Magn() =

Magn() =

Magn() =Machine Readable Semantic Dictionary

Aces tried to reduce Melchuk's descriptions to machine-readable formulas. For this, firstly, the "axiomatics" was expanded. Say, the word "pig" was considered atomic - that is, we can assume that the "pig" is an object of the semantic field of the language, and there is no need to "explain" it to a computer. Attributes can be attributed if desired. The explanation will inevitably result in a long abracadabra, covering a variety of pig aspects.Secondly, the “semantic funtsky” mechanism, similar to Melchuk’s lexical functions, was developed. The difference is that Melchuk still created a dictionary for people - he explains the words of the language. Aces, by and large, operates only with meanings, and which specific words are behind them - the second question. If a word can be described using “atoms” and semantic functions, it is described in the same way. For example, there are functions “become”, “have property” and “strengthen”: IncepCopul (), Copul () and Magn (). Then with their help, you can express the words mulatto , blush and huge as follows:

Copul(, ) // ,

IncepCopul(x, ) // "x "

Copul(x, Magn()) // x , ""Here I would not like to focus on the features of this particular formalism - approaches, ideas can be very different. The main idea should be clear: to develop a system of "atomic" concepts and "functions" over concepts, with the help of which one can describe new concepts. Then you can try to develop a formal explanatory dictionary that mathematically describes words (rather than driving around in a circle, trying to determine one through the other). Thus, we move away from words and arrive at meanings.

If a word is described by a formula, and a sentence, respectively, is a composition of formulas, then words and sentences can somehow be studied mathematically. For example, “open parentheses”, apply operations and so on. Food for the mind in this idea abound ... By the way, something like this is expressed in the works of Harris about Operator Grammars , but honestly, I have not mastered his works. The text is much closer to traditional linguistics (many words and little math :)).

Semantic formulas can be useful at least in machine translation. Even if you cannot translate any piece, you can simply “uncover” the formula, replacing lexical functions with chased mathematical text. Suppose you know the word "mulatto" and "woman" in English, but you do not have the English word "mulatto" in the dictionary. But there is a Russian-language explanatory semantic dictionary. Then, having met the "mulatto", one can translate the corresponding phrase from the semantic dictionary: "mulatto, who is a woman."

Ideally, whole phrases from different languages should be mapped to the same semantic formulas. A teacher is a “teacher who is a woman.” And in English there is no one word “teacher”, they will write something like “female teacher”, if you need to underline the gender - but the output will be the same formula.

By adding to the database of translations of semantic formulas, it is possible to achieve less and less clumsiness of the translation. That is, the automatic translator starts with “the mulatto, who is a woman,” and with a full database already understands that the correct translation is just a “mulatto”. Just, in principle, as we are with you - without knowing the exact translation, we begin to explain the word through more simple ones.

And sometimes it will be (by the way, do not forget, I theorize!) Just to save the situation, if the required word in the target language simply does not exist - then the “disclosure of the formula” can give an idea of what is happening. An example is not idle. Say, in Finnish there is no verb "to have", no matter how strange it sounds. You can say “I have” (“you have”, “he has”), but you cannot “abstract” in the abstract. And to translate even such a simple phrase as “good to have a dog!” Turns out to be more difficult than it would seem. There is, however, the verb “owning”, but in Finnish “owning a dog well!” Sounds just as stupid as in Russian.

Now let's move from semantics to politics :)

Where to go?

Here I am not an expert myself in the process :) But I would like to voice some thoughts. Generally speaking, we are still experiencing the effects of AI Winter . On AI in general, and on computer linguistics in particular, too high hopes were cast at first. A lot of things burst, and ambitious projects like the Explanatory Combinatory Dictionary are not in fashion now. While smart people are thinking about why this happened , resource allocators have focused on clear applications of individual methods. We are able to perform morphological analysis? Fine! We are able to divide the text into sentences? Fine - let's apply! So little by little have achieved some good results. But in general, a certain stagnation is present. For example, perhaps the most popular commercial machine translator is SYSTRAN , and this is the technology of the 60-70s!The problem of computational linguistics is that testing any hypothesis requires a lot of effort. Well, imagine, tomorrow I’ll start writing XDG grammar for Russian. Maybe for retirement finish. At the same time (pobryuzhu bit, okay?) Pushing into the scientific community small prototypes is also not easy. For example, a couple of times my articles were rejected with such an explanation: it is not clear how the stated ideas are able to cope with the whole variety of complexities of natural language. The idea is quite understandable, and even correct. But, on the other hand, how can you prove the ability to “cope with the whole variety of difficulties” without writing a grammar / dictionary / whatever that can cope with everything? And this is a full-scale project.

For myself, I have come up with such a thing so far: NLP in education . Let's see how it works. The essence is as follows. If you look at the software that teaches physics or chemistry, everything is already fine there: the real virtual labs. From the programs that teach a foreign language, just want to cry. Everyone, I think, saw these miseries: nightmarish clipart images, a couple of hundreds of voiced words, colorful interfaces made on the knee ... And the output is just a hybrid of a book and a tape recorder, that is, in reality, the computer has not become something more progressive than traditional equipment.

So I think, what is missing in the educational software (for learning a language) to turn into a virtual laboratory? But NLP is not enough! Let's start with the simple: the same morphological analyzer / synthesizer. I am sure that such a software will be useful to every person learning a language. And if you go further? For example, now I run around the idea of "lego-cubes" for words. Imagine a card with the words on the computer screen. They can be glued together in phrases, and phrases - in sentences. But thus the words which are not combined among themselves will not be glued. For example, the "red" and "cow" do not stick together until you agree them in gender, number and case. You can come up with powerful tools for checking spelling (not as in Word, but for the needs and typical mistakes of newbies ...)

And why, actually, education? Yes, because the vocabulary of a novice is small, and the constructions used by him are also limited. So, even a prototype for 500 words can be really useful, it can be useful to someone, get attention ... And then it will result in something more serious. I don’t know yet :) But I haven’t decided on an existing project yet. What where is good? As far as I remember, in the Czech Republic they are trying to work with XDK. But this is the Czech language - so let's start with learning Czech, and then join, if the project is still alive at that time? ;) And so everywhere!

In general, on this positive note we end this long cycle. Further - most likely, in the wake of the discussions and at the request of readers. Well, or when there will be interesting news in our wonderful area. Thank you all for your attention!

Source: https://habr.com/ru/post/80268/

All Articles