Compressing Unicode data

In one upcoming project, the task arose of transferring and storing vCard data that contains Cyrillic letters. Because the size of the transmitted data is limited, the data had to be made smaller.

There were several options:

- Use traditional encodings (for Cyrillic - CP1251).

- Use Unicode compression formats. Today these are SCSU and BOCU-1. Both formats are described in detail below.

- Use universal compression algorithms (gzip).

Beautiful, but useless, results graph:

Input data

Business card in vCard 3.0 format (260 characters; the text values are Cyrillic in the source file and appear here in translation):

    BEGIN:VCARD
    VERSION:3.0
    N:Pupkin;Vasily
    FN:Vasily Pupkin
    ORG:Horns and Hoofs LLC
    TITLE:The Most Important
    TEL;TYPE=WORK,VOICE:+380 (44) 123-45-67
    ADR;TYPE=WORK:;1;Khreshchatyk;Kiev;;01001;UKRAINE
    EMAIL;TYPE=PREF,INTERNET:vasiliy.pupkin@example.com
    END:VCARD

All the results below refer to this example. For Cyrillic business cards in general, the results should not differ. Business cards in other languages would probably require additional research, which was not part of the task.

The CP1251 option was dropped immediately. Despite the small size of the resulting files, using it (or any other traditional encoding) severely limits the capabilities of the service.
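The limitation can be illustrated with a minimal Python sketch. The helper name `fits_cp1251` and the sample strings are made up for this illustration; the point is only that a single-byte code page covers one script family and fails on everything else.

```python
def fits_cp1251(text: str) -> bool:
    """Return True if every character of text can be encoded in CP1251."""
    try:
        text.encode("cp1251")
        return True
    except UnicodeEncodeError:
        return False


# A Cyrillic name fits, one byte per character.
print(fits_cp1251("Василий Пупкин"), len("Василий Пупкин".encode("cp1251")))

# A Japanese name (illustrative sample) cannot be stored at all.
print(fits_cp1251("山田太郎"))
```

A service that must accept names in arbitrary scripts therefore needs a Unicode representation, and the remaining question is how compactly that representation can be stored.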

SCSU

The Standard Compression Scheme for Unicode (SCSU) is based on a technique originally developed by Reuters.

The main idea of SCSU is to define dynamic windows in the Unicode code space. Characters that belong to small alphabets (for example, Cyrillic) can be encoded with one byte, which gives the character's index in the current window. The windows are preset to the most frequently used blocks, so the encoder usually does not have to define them.

For large alphabets, including Chinese, SCSU allows switching between the single-byte mode and a "Unicode" mode, which is in effect UTF-16BE.

Control bytes ("tags") are inserted into the text to change windows and modes. Quote tags switch to another window for the next character only. This is useful for encoding a single character outside the current window, or a character that conflicts with the tags.

Example

    ... N  :  SC2 П  у  п  к  и  н  ... F  N  :  В  а  с  и  л  и  й  ...
    ... 4E 3A 12  9F C3 BF BA B8 BD ... 46 4E 3A 92 B0 C1 B8 BB B8 B9 ...

Before the first Cyrillic letter, the encoder emits the SC2 tag (hexadecimal 12) to switch to dynamic window 2, which is preset to the Cyrillic block. The tag is not repeated for the Cyrillic characters that follow.
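The window mechanism can be sketched in a few lines of Python. This is a deliberately reduced illustration, not a full SCSU encoder: it handles only ASCII text plus the Cyrillic block, never defines or quotes windows, and never enters Unicode mode. The function name `scsu_encode_cyrillic` is hypothetical.

```python
SC2 = 0x12                 # tag: select dynamic window 2
CYRILLIC_BASE = 0x0400     # default start of dynamic window 2
# Control characters that single-byte mode passes through unchanged;
# the other C0 byte values are reserved for tags.
PASS_THROUGH_CONTROLS = {0x00, 0x09, 0x0A, 0x0D}


def scsu_encode_cyrillic(text: str) -> bytes:
    """Encode ASCII + Cyrillic text using only SCSU's preset window 2."""
    out = bytearray()
    active_window = 0      # SCSU starts with dynamic window 0 active
    for ch in text:
        cp = ord(ch)
        if 0x20 <= cp <= 0x7F or cp in PASS_THROUGH_CONTROLS:
            out.append(cp)                       # ASCII is stored as is
        elif CYRILLIC_BASE <= cp < CYRILLIC_BASE + 0x80:
            if active_window != 2:
                out.append(SC2)                  # switch once, then stay
                active_window = 2
            out.append(0x80 + cp - CYRILLIC_BASE)
        else:
            raise ValueError(f"U+{cp:04X} is outside this sketch's scope")
    return bytes(out)


print(scsu_encode_cyrillic("N:Пупкин").hex())    # 4e3a129fc3bfbab8bd
```

The output matches the bytes in the example: one SC2 tag, then one byte per Cyrillic letter. Encoding a longer text such as "N:Пупкин;Василий\r\nFN:Василий" in one call produces the tag only once, because ASCII characters do not change the active window.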

Benefits

- Text encoded in SCSU generally takes about as much space as in a traditional encoding. The overhead of the tags is relatively minor.

- An SCSU decoder is easy to implement compared to universal compression algorithms.

Disadvantages

- SCSU uses byte values from the ASCII control range not only as tags but also as parts of ordinary characters. This makes SCSU unsuitable for protocols such as MIME, which interpret control characters without decoding the text.

BOCU-1

The concept of Binary Ordered Compression for Unicode (BOCU) was developed in 2001 by Mark Davis and Markus Scherer for the ICU project.

The basic idea of BOCU-1 is to encode each character as a difference (its distance in the Unicode table) from the previous character. Small differences take fewer bytes than large ones. By encoding differences, BOCU-1 achieves the same compression for small alphabets regardless of the block they are in.

BOCU-1 supplements the concept with the following rules:

- The previous or base value is aligned in the middle of the block, in order to avoid big jumps from the beginning of the block to the end.

- The 32 ASCII control characters and the space are left unchanged by the encoding, for compatibility with e-mail and to preserve binary order.

- The space does not change the base value. This means that when encoding non-Latin words separated by spaces, there is no need to make a big jump to U+0020 and back.

- ASCII control characters reset the base value, which makes the adjacent lines in the file independent.

Example

    ... N  :  П     у  п  к  и  н  ;     В     ... F  N  :  В     а  с  и  л  и  й  ...
    ... 9e 8a d3 d3 93 8f 8a 88 8d 4c 11 d3 c6 ... 96 9e 8a d3 c6 80 91 88 8b 88 89 ...

Each block change costs the encoder two bytes. In the example, d3 is the lead byte of the jump from Latin into the Cyrillic block, and 4c is the lead byte of the jump back to Latin for the semicolon.
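The difference coding can be sketched in Python as follows. This is a reduced illustration under stated assumptions, not a complete BOCU-1 encoder: it covers only one-byte and two-byte differences, uses only the generic "middle of the 128-character block" base rule, and rejects code points from U+3000 upward, where BOCU-1 applies special base values. The constants follow the reference code accompanying UTN #6; the names `trail_byte` and `bocu1_encode_small` are hypothetical.

```python
ASCII_PREV = 0x40    # initial base value, also restored by control characters
MIDDLE = 0x90        # byte that encodes a difference of 0
TRAIL_COUNT = 243    # number of byte values usable as trail bytes
# Trail values 0..19 use the C0 byte values that MIME does not care about.
LOW_TRAILS = bytes([0x01, 0x02, 0x03, 0x04, 0x05, 0x06,
                    0x10, 0x11, 0x12, 0x13, 0x14, 0x15, 0x16, 0x17,
                    0x18, 0x19, 0x1C, 0x1D, 0x1E, 0x1F])


def trail_byte(t: int) -> int:
    """Map a trail value 0..242 to its byte value."""
    return LOW_TRAILS[t] if t < 20 else t + 13


def bocu1_encode_small(text: str) -> bytes:
    out = bytearray()
    prev = ASCII_PREV
    for ch in text:
        cp = ord(ch)
        if cp <= 0x20:
            # Controls and space are written unchanged;
            # only controls reset the base value.
            if cp != 0x20:
                prev = ASCII_PREV
            out.append(cp)
            continue
        if cp >= 0x3000:
            raise ValueError("special base rules are not part of this sketch")
        diff = cp - prev
        prev = (cp & ~0x7F) + 0x40          # middle of cp's 128-character block
        if -64 <= diff <= 63:               # one byte: 0x50..0xCF
            out.append(MIDDLE + diff)
        elif 64 <= diff <= 10512:           # two bytes, positive jump
            lead, trail = divmod(diff - 64, TRAIL_COUNT)
            out += bytes([0xD0 + lead, trail_byte(trail)])
        elif -10513 <= diff <= -65:         # two bytes, negative jump
            lead, trail = divmod(diff + 64, TRAIL_COUNT)   # lead is negative
            out += bytes([0x50 + lead, trail_byte(trail)])
        else:
            raise ValueError("difference needs 3 or 4 bytes, not sketched here")
    return bytes(out)


print(bocu1_encode_small("N:Пупкин;В").hex())   # 9e8ad3d3938f8a888d4c11d3c6
```

The output reproduces the bytes of the example: the first Cyrillic letter and the semicolon each take two bytes, and letters inside the same block take one. In "Василий Пупкин" the space is written as 0x20 and the base stays in the Cyrillic block, so the second word starts with a one-byte difference.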

Benefits

- ASCII control characters are preserved by the encoding.

- Like SCSU, BOCU-1 adds little overhead compared to traditional encodings.

Disadvantages

- Latin letters (and all other ASCII characters except the control characters) are encoded as different byte values. An XML parser, for example, therefore has to learn that the data is BOCU-1 from a higher-level protocol.

- The BOCU-1 algorithm is not formally described anywhere except in the source code accompanying UTN #6.

Universal compression algorithms

The gzip compression algorithm was chosen for comparison. On large texts, gzip compresses better than SCSU and BOCU-1, since the number of distinct characters is limited even in multilingual documents. On small texts, such as the vCard in the example, it is hard to get an unambiguous result.

A significant drawback of universal compression algorithms is the complexity of implementing the compressor and the decompressor.

The question of two-stage compression (for example, SCSU + gzip) remains open for me.
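For short inputs, the fixed framing of gzip matters as much as the compression itself. The following Python sketch, using only the standard `gzip` and `zlib` modules, separates the container from the deflate payload. The helper name `gzip_sizes` and the sample text are assumptions made for the illustration, and the printed sizes depend on the input and the zlib version.

```python
import gzip
import zlib


def gzip_sizes(data: bytes):
    """Return (size of the gzip stream, size of the raw deflate payload)."""
    gz = gzip.compress(data, compresslevel=9)
    # Without optional fields (FLG byte == 0) the gzip header is 10 bytes,
    # and the trailer (CRC-32 plus input length) is 8 bytes.
    if gz[3] != 0:
        raise ValueError("unexpected optional gzip header fields")
    payload = gz[10:-8]
    # Sanity check: the slice really is a complete raw deflate stream.
    if zlib.decompress(payload, wbits=-15) != data:
        raise ValueError("payload does not round-trip")
    return len(gz), len(payload)


sample = "N:Пупкин;Василий\r\nFN:Василий Пупкин\r\n"   # illustrative fragment
for encoding in ("cp1251", "utf-8"):
    raw = sample.encode(encoding)
    total, payload = gzip_sizes(raw)
    print(encoding, len(raw), total, payload)
```

The same helper could be fed the output of an SCSU or BOCU-1 encoder to experiment with two-stage compression.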

Results

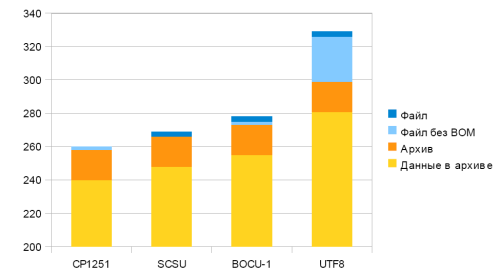

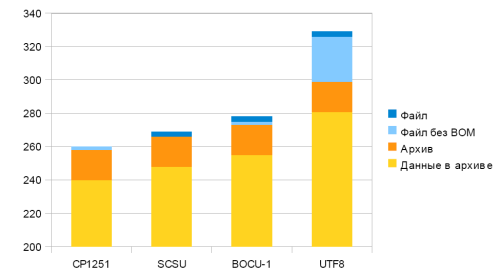

The first column shows the size of the file produced by SCSU and BOCU-1 for the source data, with CP1251 and UTF8 for comparison. All sizes are in bytes.

The second column is the number of bytes emitted by the encoder itself, without the byte order mark (BOM), which indicates the Unicode representation used in the file.

The third column is the size of the file compressed with gzip.

The fourth column is the number of data bytes in the gzip archive (without the 18 bytes of header).

| Encoding | File | File without BOM | In the archive | The length of the data in the archive |
| --- | --- | --- | --- | --- |
| CP1251 | 260 | 260 | 258 | 240 |
| SCSU | 267 | 264 | 266 | 248 |
| BOCU-1 | 278 | 275 | 273 | 255 |
| UTF8 | 329 | 326 | 299 | 281 |
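The figures in the table can be cross-checked with a few lines of Python. The numbers are copied from the table; the derived values (BOM size, gzip overhead, size relative to UTF8) are plain arithmetic, and the variable names are chosen for this sketch only.

```python
# (file, file without BOM, gzip archive, data bytes in the archive)
results = {
    "CP1251": (260, 260, 258, 240),
    "SCSU":   (267, 264, 266, 248),
    "BOCU-1": (278, 275, 273, 255),
    "UTF8":   (329, 326, 299, 281),
}

utf8_size = results["UTF8"][1]
for name, (file_size, no_bom, archive, archive_data) in results.items():
    bom = file_size - no_bom              # bytes spent on the signature
    overhead = archive - archive_data     # gzip framing
    ratio = no_bom / utf8_size            # encoder output relative to UTF8
    print(f"{name:7} BOM={bom} gzip overhead={overhead} vs UTF8={ratio:.0%}")
```

The difference between UTF8 and CP1251 without BOM, 326 - 260 = 66 bytes, is the number of characters in the card that need two bytes in UTF8.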

All the files are available in an archive (5 KB).

Application

SQL Server 2008 R2 uses SCSU to store nchar(n) and nvarchar(n) values. Symbian OS uses SCSU to serialize strings.

You can use ICU or SC UniPad to work with SCSU and BOCU-1.

I was very surprised that none of the common barcode readers recognize SCSU or BOCU-1.

What else to read

Unicode Technical Note #14. A Survey of Unicode Compression

Unicode Technical Standard #6. A Standard Compression Scheme for Unicode

Unicode Technical Note #6. BOCU-1: MIME-Compatible Unicode Compression

Source: https://habr.com/ru/post/79200/
