Flies, Math... Robots?

By formalizing the activity of fly brain cells involved in visual processing, scientists have found a new way to extract motion information from raw visual data.

Although they built the system, the researchers do not fully understand how it works. Still, for all the mystery surrounding the equations they derived, those equations could be used to program vision systems for miniature military drones, search-and-rescue robots, car navigation systems and other applications where computing power is at a premium.

“We can create a system that works perfectly well, inspired by nature, without fully understanding how its elements interact. It is a nonlinear system,” said David O'Carroll, a computational neurophysiologist who studies insect vision at the University of Adelaide in Australia. “The amount of computation is quite small. We can get results with tens of thousands of times fewer floating-point operations than traditional methods require.”

The best known of these traditional methods is the Lucas-Kanade method, which calculates shifts (up and down, side to side) by comparing, frame by frame, how each pixel in the visual field changes. It is used for control in many experimental unmanned vehicles, but such brute force demands enormous computing power, which makes the method impractical for small systems.
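For comparison, the core of a Lucas-Kanade estimate fits in a few lines. This is a minimal pure-Python sketch, assuming grayscale frames stored as nested lists and a single square window; the function name, window size and synthetic test pattern are illustrative choices, not taken from the article. It solves the method's 2x2 least-squares system for one displacement (u, v). Practical implementations repeat this for many windows in every frame, often across an image pyramid, and that repetition is where the cost comes from.

```python
import math


def lucas_kanade_window(frame1, frame2, cx, cy, half):
    """Estimate one displacement (u, v) for the square window centred on (cx, cy).

    frame1 and frame2 are grayscale frames stored as frame[y][x].
    Spatial gradients use central differences on frame1; the temporal
    gradient is the plain difference frame2 - frame1.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(cy - half, cy + half + 1):
        for x in range(cx - half, cx + half + 1):
            ix = (frame1[y][x + 1] - frame1[y][x - 1]) / 2.0
            iy = (frame1[y + 1][x] - frame1[y - 1][x]) / 2.0
            it = frame2[y][x] - frame1[y][x]
            # Five products per pixel: this per-pixel work is what adds up.
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    # Least squares: [sxx sxy; sxy syy] [u, v]^T = -[sxt, syt]^T (Cramer's rule)
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("window lacks texture in some direction (aperture problem)")
    u = (-sxt * syy + syt * sxy) / det
    v = (-syt * sxx + sxt * sxy) / det
    return u, v


def pattern(x, y):
    """Smooth synthetic brightness pattern with texture in both directions."""
    return math.sin(0.3 * x) + math.cos(0.25 * y)


size = 32
true_u, true_v = 0.5, -0.3
# Frame 2 is frame 1 shifted by (true_u, true_v) pixels.
f1 = [[pattern(x, y) for x in range(size)] for y in range(size)]
f2 = [[pattern(x - true_u, y - true_v) for x in range(size)] for y in range(size)]
u, v = lucas_kanade_window(f1, f2, cx=16, cy=16, half=7)
print(f"estimated u={u:.2f}, v={v:.2f}")
```

The estimate lands close to the true shift of (0.5, -0.3) because the pattern varies smoothly and the shift is small; the method's linearization breaks down for large displacements, which is one reason real systems add pyramids and iterations.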

To make small flying robots practical, researchers would like a simpler way to process motion. That is where flies come in: they use a relatively small number of neurons to maneuver with extraordinary agility. For more than ten years, O'Carroll and other researchers have carefully studied the visual circuits flies use in flight, measuring their cellular activity and turning the products of evolution into a set of computational principles.

In a paper published Friday in the Public Library of Science journal PLoS Computational Biology, O'Carroll and his colleague Russell Brinkworth describe the results of putting these principles into practice.

“Laptops use at least tens of watts of power. We could implement what we have developed on chips that consume only tenths of a milliwatt,” said O'Carroll.
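For a rough sense of scale, take the low end of each figure in the quote: 10 W for a laptop and 0.1 mW for the chip. These round numbers are assumptions for illustration, not measurements reported in the paper.

$$
\frac{10\ \mathrm{W}}{0.1\ \mathrm{mW}} = \frac{10\ \mathrm{W}}{10^{-4}\ \mathrm{W}} = 10^{5}
$$

On those assumptions, the chip would draw about one hundred-thousandth of a laptop's power.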

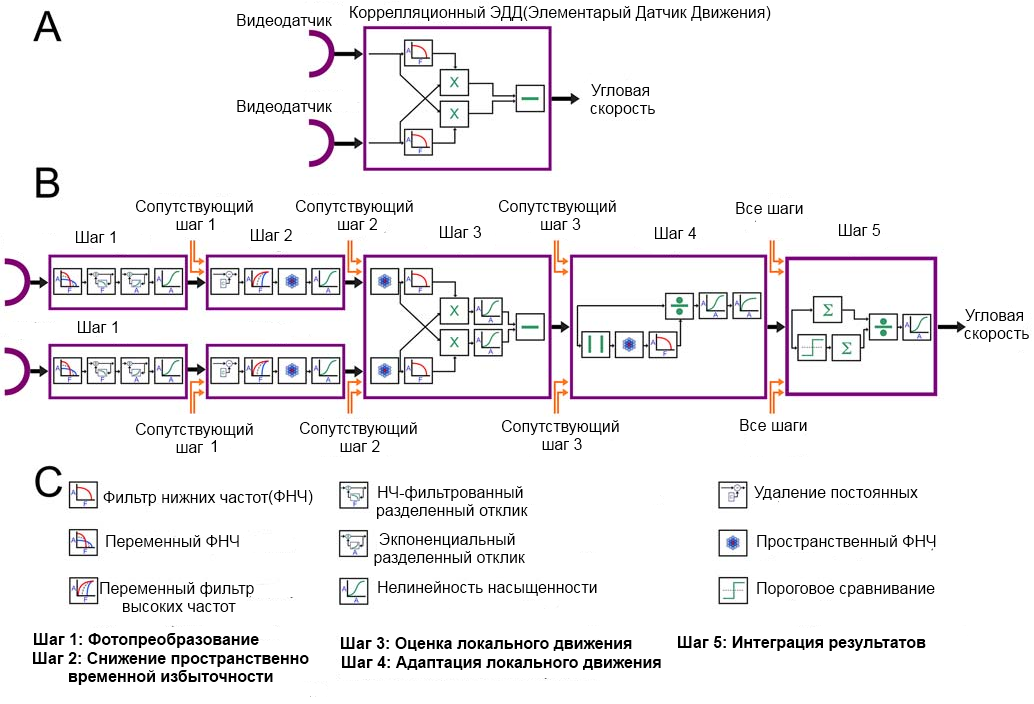

The researchers' algorithm consists of a series of five equations through which camera data is passed. Each equation models a technique flies use to cope with changes in brightness, contrast and motion, and the equations' parameters adapt continuously to the input. Unlike the Lucas-Kanade method, the algorithm does not compare every pixel frame by frame but emphasizes large-scale changes. In this respect it resembles video compression schemes that skip over uniform areas.
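To make the idea of an adaptive cascade concrete, here is a minimal one-dimensional sketch. It is not the five equations from the paper: the stages are textbook stand-ins chosen for illustration, namely an adaptive gain that tracks mean brightness, a temporal high-pass filter, a first-order delay filter, the Hassenstein-Reichardt correlator (a classic model of fly motion detection) and a tanh saturation. All function names and parameter values are hypothetical.

```python
import math


def lowpass(prev, x, alpha):
    """One update of a first-order low-pass filter; alpha in (0, 1]."""
    return prev + alpha * (x - prev)


def fly_like_motion_signal(frames, adapt_rate=0.01, hp_rate=0.05,
                           delay_rate=0.3, warmup=200):
    """Mean saturated motion signal for a sequence of 1-D frames.

    frames[t][i] is the brightness seen by receptor i at time step t.
    For coarse patterns, positive results indicate motion toward higher
    receptor indices and negative results motion toward lower indices.
    """
    n = len(frames[0])
    mean = list(frames[0])   # stage 1 state: running mean brightness
    slow = [0.0] * n         # stage 2 state: slow component to subtract
    delayed = [0.0] * n      # stage 3 state: delayed copy of each channel
    outputs = []
    for t, frame in enumerate(frames):
        hp = []
        for i in range(n):
            # Stage 1: adaptive gain; dividing by the running mean makes the
            # signal depend on relative rather than absolute brightness.
            mean[i] = lowpass(mean[i], frame[i], adapt_rate)
            g = frame[i] / (mean[i] + 1e-6)
            # Stage 2: temporal high-pass; a constant input decays to zero.
            slow[i] = lowpass(slow[i], g, hp_rate)
            hp.append(g - slow[i])
        # Stage 3: a low-pass filter acts as the correlator's time delay.
        for i in range(n):
            delayed[i] = lowpass(delayed[i], hp[i], delay_rate)
        # Stage 4: Hassenstein-Reichardt correlation over neighbouring pairs.
        total = sum(delayed[i] * hp[i + 1] - hp[i] * delayed[i + 1]
                    for i in range(n - 1))
        # Stage 5: compressive saturation, recorded after the warm-up period.
        if t >= warmup:
            outputs.append(math.tanh(total))
    if not outputs:
        raise ValueError("need more frames than the warm-up period")
    return sum(outputs) / len(outputs)


def grating(direction, n=16, steps=400):
    """Sinusoidal grating drifting right (+1) or left (-1)."""
    k = 2 * math.pi / 8    # one spatial period every 8 receptors
    w = 2 * math.pi / 20   # one temporal period every 20 steps
    return [[1.0 + 0.5 * math.sin(k * i - direction * w * t) for i in range(n)]
            for t in range(steps)]


rightward = fly_like_motion_signal(grating(+1))
leftward = fly_like_motion_signal(grating(-1))
uniform = fly_like_motion_signal([[1.0] * 16 for _ in range(400)])
print(rightward, leftward, uniform)
```

The drifting gratings give responses of opposite sign, while the uniform field gives exactly zero: the high-pass stage strips constant brightness and neighbouring receptors see identical signals, a small-scale analogue of skipping uniform areas.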

To test the algorithm, O'Carroll and Brinkworth ran several high-resolution animated image sequences through software of the kind that could run on a robot. Comparing the output with the input data, they found that the algorithm worked across a range of natural lighting conditions that usually mislead conventional motion detectors.
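One general reason an adaptive, contrast-based front end copes with changing light is normalization by mean brightness. The sketch below uses Weber contrast, a standard normalization assumed here for illustration rather than taken from the paper; the scene values are hypothetical. The same moving scene viewed at two illumination levels produces raw brightness changes that differ a hundredfold, while the normalized changes are identical.

```python
def weber_contrast(row):
    """Express each value relative to the row's mean brightness: (I - mean) / mean."""
    mean = sum(row) / len(row)
    return [(v - mean) / mean for v in row]


def temporal_change(before, after):
    """Per-pixel brightness change between two frames."""
    return [b - a for a, b in zip(before, after)]


# Hypothetical scene row, then the same row shifted one pixel right (wrapping).
dusk_t0 = [1.0, 2.0, 3.0, 2.0]
dusk_t1 = [2.0, 1.0, 2.0, 3.0]
# The identical scene under 100x brighter illumination.
noon_t0 = [100.0 * v for v in dusk_t0]
noon_t1 = [100.0 * v for v in dusk_t1]

raw_dusk = temporal_change(dusk_t0, dusk_t1)
raw_noon = temporal_change(noon_t0, noon_t1)
norm_dusk = temporal_change(weber_contrast(dusk_t0), weber_contrast(dusk_t1))
norm_noon = temporal_change(weber_contrast(noon_t0), weber_contrast(noon_t1))
print("raw:", raw_dusk, raw_noon)
print("normalized:", norm_dusk, norm_noon)
```

A detector with a fixed threshold on the raw change would behave very differently at dusk and at noon; on the normalized signal it would behave the same.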

“This is amazing work,” said Sean Humbert, an aerospace engineer at the University of Maryland who builds miniature autonomous flying robots, some of which run on early versions of O'Carroll's algorithm. “To carry a traditional navigation system, a vehicle must be able to lift a fairly large payload. But the payload of a small flying robot is tiny, about the weight of a couple of Tic Tacs. You're never going to fit a couple of dual-core processors into a couple of Tic Tacs. The algorithms insects use are very simple compared to the things we design, and they scale down well to smaller vehicles.”

Interestingly, the algorithm does not work as well if any one operation is left out. The whole is greater than the sum of its parts, and O'Carroll and Brinkworth do not know why. Because the parameters are continually adjusted through feedback, the result is a cascade of nonlinear equations that is hard to untangle after the fact and almost impossible to predict.

“We were inspired by insect vision and built a model that is practical for real-world use, but in doing so we built a system almost as complex as the insect itself,” said O'Carroll. “That is one of the most fascinating things about it. It will not necessarily give us a full understanding of how this system works, but it will probably bring us closer to understanding how nature got it right.”

The researchers derived their algorithm from neural circuits that process side-to-side motion, but O'Carroll believes similar equations could probably be used to compute other kinds of optic flow, such as the flow produced by moving forward and backward through three-dimensional space.
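Why forward motion calls for different computations than sideways motion can be seen from the standard pinhole-camera model of translational optic flow. The sketch below assumes focal length 1, a static scene, no camera rotation, a camera translating with velocity (tx, ty, tz), and an image point (x, y) at depth Z; the function name and sample points are hypothetical. Under sideways translation every point drifts the same way, while under forward translation points stream outward from the centre of the image.

```python
def translational_flow(x, y, depth, tx, ty, tz):
    """Image velocity (u, v) of a static point seen by a translating pinhole camera.

    Focal length is 1; (x, y) are image coordinates and depth is the point's
    distance Z along the optical axis. Camera rotation is ignored.
    """
    u = (x * tz - tx) / depth
    v = (y * tz - ty) / depth
    return u, v


points = [(-0.5, 0.0), (0.0, 0.0), (0.5, 0.0), (0.0, 0.5)]
depth = 2.0
# Sideways motion (camera moving right): every point drifts left equally.
sideways = [translational_flow(x, y, depth, tx=1.0, ty=0.0, tz=0.0) for x, y in points]
# Forward motion: points move away from the focus of expansion at (0, 0).
forward = [translational_flow(x, y, depth, tx=0.0, ty=0.0, tz=1.0) for x, y in points]
for p, s, f in zip(points, sideways, forward):
    print(p, "sideways:", s, "forward:", f)
```

The point at (0, 0) has zero flow under forward motion; that focus of expansion marks the direction of travel, a structure a detector tuned only to uniform side-to-side flow would not capture.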

Source: https://habr.com/ru/post/75279/
