Protected by a router: QoS

QoS is a big topic. Before we get into configuration subtleties and the different approaches to applying traffic-handling rules, it is worth recalling what QoS is in general.

Quality of Service (QoS) is a technology for giving different classes of traffic different service priorities.

First, note that prioritization makes sense only when there is a queue waiting for service. It is in the queue that a privileged packet can use its right to “cut in line” and go first.

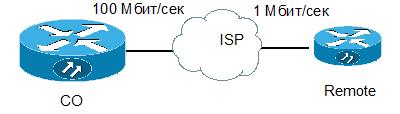

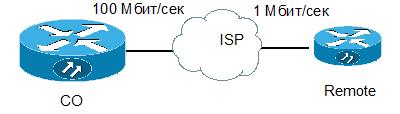

A queue forms at a narrow spot, usually called a “bottleneck”. A typical bottleneck is an office Internet connection: the computers are connected to the LAN at 100 Mbit/s or more, yet they all share the link to the provider, which rarely exceeds 100 Mbit/s and is often a modest 1, 2 or 10 Mbit/s. For everyone.


Second, QoS is not a panacea. If the bottleneck is too narrow, the physical interface buffer, which holds all packets about to leave through that interface, is often full, and newly arriving packets are dropped, no matter how important they are. Therefore, if the interface queue on average exceeds 20% of its maximum size (on Cisco routers the maximum queue size is usually 128-256 packets), it is time to think hard about your network design, add routes, or buy more bandwidth from the provider.
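The 20% rule of thumb is easy to automate. Below is a minimal Python sketch, assuming you already have periodic samples of the output-queue depth in packets (how you collect them is outside the sketch; the function name and sample values are hypothetical).

```python
def queue_needs_redesign(depth_samples, max_queue, threshold=0.20):
    """Flag an interface whose average output-queue depth exceeds
    `threshold` (20% by default) of the maximum queue size."""
    if not depth_samples:
        raise ValueError("need at least one queue-depth sample")
    average = sum(depth_samples) / len(depth_samples)
    return average > threshold * max_queue


# Hypothetical depth samples in packets; their average is 35.
samples = [10, 40, 60, 30]
print(queue_needs_redesign(samples, max_queue=128))  # 35 > 25.6  -> True
print(queue_needs_redesign(samples, max_queue=256))  # 35 <= 51.2 -> False
```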

Let's go through the components of the technology.


Marking. The headers of various network protocols (Ethernet, IP, ATM, MPLS, etc.) contain special fields reserved for marking traffic. Traffic is marked so that it can later be processed more simply in the queues.

Ethernet. The Class of Service (CoS) field, carried in the 802.1Q tag, is 3 bits long. It lets you divide traffic into 8 streams with different markings.

IP. There are two standards, an old one and a new one. The old one defines an 8-bit ToS field, 3 bits of which are called IP Precedence. These 3 bits map directly onto the Ethernet CoS field.

Later a new standard was defined. The ToS byte was renamed the DiffServ (DS) field, and its upper 6 bits became the Differentiated Services Code Point (DSCP), which carries the treatment required for this type of traffic. The top 3 bits of the DSCP coincide with the old IP Precedence bits, so the two schemes remain compatible.

Traffic is best marked as close to its source as possible. That is why most IP phones set DSCP = EF or CS5 in the IP header of their voice packets themselves. Many applications also mark their own traffic in the hope of getting priority treatment; peer-to-peer networks, for example, are guilty of this “sin”.
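The bit layout described above can be checked with a few shifts. This Python sketch packs a DSCP value into the ToS/DS byte and reads DSCP and IP Precedence back out; EF = 46 and CS5 = 40 are the standard code points, while the function names are only illustrative. The lower 2 bits (used today for ECN) are left at 0.

```python
def split_tos_byte(tos):
    """Decode the 8-bit ToS / DS byte of an IPv4 header into (DSCP, IP Precedence)."""
    if not 0 <= tos <= 0xFF:
        raise ValueError("ToS byte must be 0..255")
    dscp = tos >> 2          # upper 6 bits
    precedence = tos >> 5    # upper 3 bits, the old IP Precedence
    return dscp, precedence


def dscp_to_tos(dscp):
    """Place a 6-bit DSCP value into the ToS byte (the 2 low bits stay 0)."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be 0..63")
    return dscp << 2


for name, dscp in (("EF", 46), ("CS5", 40)):
    tos = dscp_to_tos(dscp)
    print(name, hex(tos), split_tos_byte(tos))
# EF 0xb8 (46, 5)
# CS5 0xa0 (40, 5)
```

Both voice markings land in IP Precedence 5, which is why a device that only understands the old 3-bit scheme still treats them the same way.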

The queues.

Not using any prioritization does not mean there are no queues. At a bottleneck a queue forms anyway and is served by the standard First In, First Out (FIFO) mechanism. Such a queue avoids dropping packets immediately by holding them in the buffer until they can be sent, but it gives no preference to, say, voice traffic.

If you want to give a selected class absolute priority (that is, its packets are always processed first), the technology is called Priority Queuing. All packets in the physical outbound interface buffer are divided into 2 logical queues, and packets from the privileged queue are sent until that queue is empty. Only then are packets from the second queue transmitted. This technology is simple and rather crude, and it can be considered obsolete, because processing of non-priority traffic keeps getting interrupted. On Cisco routers you can create 4 queues with different priorities. They follow a strict hierarchy: packets from less privileged queues are not served until all higher-priority queues are empty.
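Strict priority scheduling fits in a few lines. This Python sketch is a model of the idea, not router code; the level names high/medium/normal/low mirror the four classic Cisco PQ queues, and the class and packet names are hypothetical.

```python
from collections import deque

LEVELS = ("high", "medium", "normal", "low")   # scanned strictly in this order


class PriorityQueuing:
    """A lower level is served only when every higher level is empty."""

    def __init__(self):
        self.queues = {level: deque() for level in LEVELS}

    def enqueue(self, level, packet):
        self.queues[level].append(packet)

    def dequeue(self):
        for level in LEVELS:                 # always start from the top
            if self.queues[level]:
                return self.queues[level].popleft()
        return None                          # nothing left to send


pq = PriorityQueuing()
pq.enqueue("low", "ftp-1")
pq.enqueue("high", "voice-1")
pq.enqueue("normal", "web-1")
pq.enqueue("high", "voice-2")
print([pq.dequeue() for _ in range(4)])
# ['voice-1', 'voice-2', 'web-1', 'ftp-1']
```

The weakness mentioned above is visible in `dequeue`: as long as new packets keep arriving in "high", the loop never reaches "low", so that traffic starves.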

Fair Queuing. A technology that gives every traffic class the same rights. As a rule it is not used, because it does little to improve the quality of service.

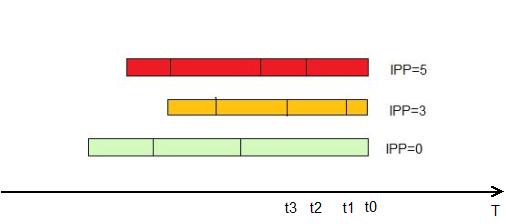

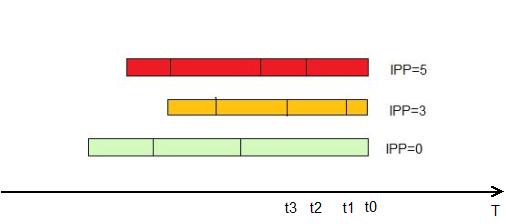

Weighted Fair Queuing (WFQ). A technology that gives different traffic classes different rights (one could say each queue has a different “weight”) while still serving all the queues. Roughly, it works like this: all packets are divided into logical queues using IP Precedence as the criterion. The same field also sets the priority (the higher, the better). The router then calculates which queue's packet can be transmitted “fastest” and sends it.

It uses the following formula:

$$dT = \frac{t(i) - t(0)}{1 + \mathrm{IPP}}$$

where IPP is the value of the IP Precedence field, and t(i) is the time needed to actually transmit the packet over the interface. It can be calculated as L / Speed, where L is the packet length and Speed is the interface transmission rate.
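To see how dividing by 1 + IPP turns into bandwidth shares, here is a simplified Python model. It does not reproduce Cisco's implementation or the t(0) term of the formula; it uses the common textbook finish-tag idea instead: each queue accumulates its packets' transmission times L / Speed divided by 1 + IPP, and the head packet with the smallest tag goes first. It assumes every queue is already full at time zero; the function name is hypothetical.

```python
from collections import deque
from fractions import Fraction


def wfq_order(queues, speed_bps):
    """Return the IP Precedence of each packet in the order it is sent.

    queues:    dict IP Precedence (0-7) -> list of packet lengths in bytes.
    speed_bps: interface rate as an integer number of bits per second.
    """
    pending = {ipp: deque(lengths) for ipp, lengths in queues.items()}
    finish = {ipp: Fraction(0) for ipp in queues}   # running finish tag per queue
    heads = {}                                       # ipp -> tag of its head packet

    def load_head(ipp):
        if pending[ipp]:
            length = pending[ipp].popleft()
            tx_time = Fraction(length * 8, speed_bps)   # t(i) = L / Speed, seconds
            finish[ipp] += tx_time / (1 + ipp)          # bigger IPP -> smaller step
            heads[ipp] = finish[ipp]

    for ipp in queues:
        load_head(ipp)

    order = []
    while heads:
        # Smallest tag goes first; on a tie prefer the higher precedence.
        ipp = min(heads, key=lambda q: (heads[q], -q))
        del heads[ipp]
        order.append(ipp)
        load_head(ipp)
    return order


print(wfq_order({0: [1500] * 2, 5: [1500] * 12}, speed_bps=1_000_000))
# [5, 5, 5, 5, 5, 5, 0, 5, 5, 5, 5, 5, 5, 0]
```

With equal packet sizes, precedence 5 gets 1 + 5 = 6 packets for every packet of precedence 0, yet precedence 0 is still served, which is the difference from strict Priority Queuing.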

On Cisco routers, WFQ is enabled by default on physical interfaces with a bandwidth of 2.048 Mbit/s (E1) or less that do not use encapsulations such as X.25, LAPB or SDLC; faster interfaces use FIFO by default.

WFQ has a number of drawbacks: it relies on packets that were marked in advance and does not let you define traffic classes or allocate bandwidth yourself. Moreover, as a rule nobody sets the IP Precedence field, so packets arrive unmarked and all fall into the same queue.

WFQ evolved into Class-Based Weighted Fair Queuing (CBWFQ). Here the administrator defines the traffic classes using various criteria, for example an ACL as a template or protocol header analysis (see NBAR). A “weight” is then set for each class, and the queues are serviced in proportion to their weights (more weight means more packets leave that queue per unit of time).

However, such a queue does not guarantee strict priority for the most important packets (usually voice or other interactive traffic). Therefore a hybrid of Priority Queuing and Class-Based Weighted Fair Queuing appeared: PQ-CBWFQ, also known as Low Latency Queuing (LLQ). Here you can configure up to 4 priority queues, and the remaining classes are served by the CBWFQ mechanism.

LLQ is the most convenient, flexible and widely used mechanism, but it requires defining classes, configuring policies and applying the policies to an interface.

I will describe the configuration in more detail below.

Thus, providing quality of service can be divided into two stages:

1. Marking, as close to the sources as possible.

2. Packet handling: placing packets into the physical queue on the interface, dividing them into logical queues and giving these logical queues different resources.

QoS is fairly resource-intensive and loads the processor considerably, and the deeper the router has to look into the headers to classify packets, the heavier the load. For comparison: it is much easier for a router to look at an IP header and read its 3 IPP bits than to unwind the stream almost up to the application layer to determine which protocol is inside (NBAR technology).

To simplify further traffic processing, and to create a so-called “trusted boundary”, beyond which we trust all QoS-related headers, we can do the following:

1. On access-layer switches and routers (close to client machines), catch packets and sort them into classes.

2. In the policy, as the action, rewrite the headers our own way or copy the values of the higher-layer QoS headers into the lower-layer ones.

For example, on the router we catch all packets from the guest Wi-Fi segment (we assume it may contain unmanaged computers and software that set non-standard QoS headers), reset any IP QoS headers to default values, and map the Layer 3 header (DSCP) onto the Layer 2 header (CoS), so that the switches further along can prioritize traffic efficiently using only the data link layer label.
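The re-marking logic at such a trusted boundary can be modeled in a few lines of Python. This is a sketch, not router configuration: the function name is hypothetical, and it assumes the simple mapping CoS = top 3 bits of the DSCP (so EF, DSCP 46, becomes CoS 5).

```python
def remark_at_trust_boundary(dscp, trusted):
    """Return (dscp, cos) for a packet crossing the access-layer trust boundary.

    Untrusted sources (e.g. the guest Wi-Fi segment) are reset to DSCP 0;
    the Layer 2 CoS is then derived from the top 3 bits of the DSCP.
    """
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be 0..63")
    if not trusted:
        dscp = 0              # ignore whatever the unmanaged host claimed
    cos = dscp >> 3           # 46 (EF) -> 5, 26 -> 3, 0 -> 0
    return dscp, cos


print(remark_at_trust_boundary(46, trusted=True))    # (46, 5)  managed IP phone
print(remark_at_trust_boundary(46, trusted=False))   # (0, 0)   guest host faking EF
```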

LLQ Setup

Setting up the queues consists of configuring the classes, then defining bandwidth parameters for these classes, and finally applying the whole structure to the interface.

Creating classes:

```
class-map NAME
 match ?
  access-group         Access group
  any                  Any packets
  class-map            Class map
  cos                  IEEE 802.1Q/ISL class of service/user priority values
  destination-address  Destination address
  discard-class        Discard behavior identifier
  dscp                 Match DSCP in IP(v4) and IPv6 packets
  flow                 Flow based QoS parameters
  fr-de                Match on Frame-relay DE bit
  fr-dlci              Match on fr-dlci
  input-interface      Select an input interface to match
  ip                   IP specific values
  mpls                 Multi Protocol Label Switching
  not                  Negate this match result
  packet               Layer 3 packet length
  precedence           Match Precedence in IP(v4) and IPv6 packets
  protocol             Protocol
  qos-group            Qos-group
  source-address       Source address
  vlan                 VLANs to match
```

Packets can be sorted into classes by various attributes: for example, by specifying an ACL as a template, by the DSCP field, or by singling out a specific protocol (which turns on NBAR).
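In MQC the classes of a policy are checked in the order they appear in the policy-map, the first match wins, and everything else falls into class-default. This Python sketch models that lookup; the class names and match rules are hypothetical stand-ins for a DSCP match and an ACL match.

```python
import ipaddress

# Hypothetical classes in policy order: (name, predicate over a packet dict).
CLASSES = [
    ("VOICE", lambda p: p["dscp"] == 46),                       # like match dscp ef
    ("CO_REMOTE", lambda p: ipaddress.ip_address(p["dst"])       # like an ACL match
        in ipaddress.ip_network("172.16.1.0/24")),
]


def classify(packet):
    """Return the first class whose match succeeds, else class-default."""
    for name, matches in CLASSES:
        if matches(packet):
            return name
    return "class-default"


print(classify({"dscp": 46, "dst": "10.1.2.3"}))     # VOICE
print(classify({"dscp": 0, "dst": "172.16.1.20"}))   # CO_REMOTE
print(classify({"dscp": 0, "dst": "8.8.8.8"}))       # class-default
```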

Creating a policy:

```
policy-map POLICY
 class NAME1
  ?
  bandwidth          Bandwidth
  compression        Activate Compression
  drop               Drop all packets
  log                Log IPv4 and ARP packets
  netflow-sampler    NetFlow action
  police             Police
  priority           Strict Scheduling Priority for this Class
  queue-limit        Queue Max Threshold for Tail Drop
  random-detect      Enable Random Early Detection as drop policy
  service-policy     Configure Flow Next
  set                Set QoS values
  shape              Traffic Shaping
```

For each class in the policy you can either allocate a priority share of the bandwidth:

```
policy-map POLICY
 class NAME1
  priority ?
  <8-2000000>  Kilo Bits per second
  percent      % of total bandwidth
```

and then packets of this class can always count on at least this share (under congestion the same value also caps the priority class).

Or describe what “weight” the class has within CBWFQ:

```
policy-map POLICY
 class NAME1
  bandwidth ?
  <8-2000000>  Kilo Bits per second
  percent      % of total Bandwidth
  remaining    % of the remaining bandwidth
```

In both cases you can specify either an absolute value or a percentage of the total available bandwidth.

A reasonable question arises: how does the router know the total bandwidth? The answer is simple: from the bandwidth parameter of the interface. Even if it is not configured explicitly, it always has some value, which you can see with the sh int command.

Also keep in mind that by default you cannot hand out the entire bandwidth, only 75% of it. Packets that do not explicitly fall into any other class go into class-default, whose settings can also be specified explicitly:

```
policy-map POLICY
 class class-default
  bandwidth percent 10
```

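(UPD, thanks to OlegD) The maximum reservable share can be changed from the default 75% with the interface command

```
max-reserved-bandwidth [percent]
```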
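The arithmetic behind this limit is simple: the reservations of all classes must fit into max-reserved-bandwidth percent of the interface bandwidth. The Python sketch below is a rough model of that check, not the router's actual logic; the function name and the `("percent", p)` notation are hypothetical.

```python
def check_reservations(interface_kbps, reservations, max_reserved_percent=75):
    """Sum the priority/bandwidth reservations and compare them with
    max_reserved_percent of the interface bandwidth.

    reservations: dict class -> kbps (number) or ("percent", p).
    Returns the total reserved kbps, or raises ValueError if it does not fit.
    """
    budget = interface_kbps * max_reserved_percent / 100
    total = 0
    for value in reservations.values():
        if isinstance(value, tuple):
            kind, pct = value
            if kind != "percent":
                raise ValueError(f"unknown reservation kind: {kind}")
            total += interface_kbps * pct / 100
        else:
            total += value
    if total > budget:
        raise ValueError(f"{total:g} kbps requested, only {budget:g} kbps reservable")
    return total


print(check_reservations(1000, {"RTP": ("percent", 25), "class-default": ("percent", 10)}))
# 350.0
```

Asking for 50% + 30% on the same 1000 kbit/s interface raises an error with the default 75%, but passes once `max_reserved_percent=90` is used, which is the effect of raising max-reserved-bandwidth.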

Routers jealously make sure that the admin does not accidentally hand out more bandwidth than exists, and they complain about such attempts.

It may seem that the policy gives each class no more than is written for it. However, that is true only when all queues are full. If some queue is empty, the bandwidth allocated to it is shared among the other queues in proportion to their “weight”.

The whole construction works like this:

If there are packets from a class marked priority, the router concentrates on transmitting them. Since there may be several such priority queues, the bandwidth is divided among them in proportion to the specified percentages.

Once all priority packets have been sent, it is CBWFQ's turn. In each round, each queue gets the share of packets specified in its class settings. If some queues are empty, their bandwidth is divided among the loaded queues in proportion to the class “weight”.
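The behaviour just described can be approximated with a fluid model in Python: priority classes are served first up to their reservation, then the rest of a congested link is shared by CBWFQ weight, and bandwidth unused by idle or lightly loaded classes is redistributed. This is a sketch under those assumptions, not a packet-level model of IOS; all class names and numbers are hypothetical.

```python
def llq_allocation(link_kbps, priority, weights, demand):
    """Split a congested link between LLQ classes (fluid approximation).

    priority: dict class -> kbps reserved with `priority` (served first, capped).
    weights:  dict class -> CBWFQ weight (for example its `bandwidth` value).
    demand:   dict class -> kbps the class is currently trying to send.
    Returns dict class -> kbps granted.
    """
    granted = {}
    left = link_kbps
    # 1. Strict-priority classes go first, each up to its own reservation.
    for cls, limit in priority.items():
        granted[cls] = min(demand.get(cls, 0), limit)
        left -= granted[cls]
    # 2. CBWFQ shares what is left by weight; idle classes give their share away.
    for cls in weights:
        granted[cls] = 0.0
    active = {c: w for c, w in weights.items() if demand.get(c, 0) > 0}
    while active and left > 0:
        total_w = sum(active.values())
        shares = {c: left * w / total_w for c, w in active.items()}
        # Classes wanting less than their share take only what they need.
        light = [c for c in active if demand[c] <= shares[c]]
        if not light:
            for c in active:
                granted[c] += shares[c]
            break
        for c in light:
            granted[c] += demand[c]
            left -= demand[c]
            del active[c]
    return granted


result = llq_allocation(
    1000,
    priority={"RTP": 250},
    weights={"DATA": 3, "WEB": 1, "BULK": 1},
    demand={"RTP": 200, "DATA": 1000, "WEB": 100, "BULK": 0},
)
print({c: round(v) for c, v in result.items()})
# {'RTP': 200, 'DATA': 700, 'WEB': 100, 'BULK': 0}
```

Here BULK is idle and WEB needs only half of its 200 kbit/s share, so DATA receives everything they leave unused.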

Applying the policy to the interface:

```
int s0/0
 service-policy [input | output] POLICY
```

And what if you need to strictly cut off packets of a class that exceed the permitted rate? After all, specifying bandwidth only distributes the bandwidth between classes when the queues are loaded.

For this, a traffic class in the policy supports the command

```
police [speed] [burst] conform-action [action] exceed-action [action]
```

It lets you explicitly set the desired average rate (speed, in bits per second) and the maximum “burst” (in bytes), i.e. the amount of data that may be sent in one go. The larger the burst, the more the actual rate may deviate from the desired average. You also specify the action for conforming traffic, which does not exceed the set rate, and the action for traffic that exceeds the average rate. The possible actions are:

```
police 100000 8000 conform-action ?
  drop                              drop packet
  exceed-action                     action when rate is within conform and conform + exceed burst
  set-clp-transmit                  set atm clp and send it
  set-discard-class-transmit        set discard-class and send it
  set-dscp-transmit                 set dscp and send it
  set-frde-transmit                 set FR DE and send it
  set-mpls-exp-imposition-transmit  set exp at tag imposition and send it
  set-mpls-exp-topmost-transmit     set exp on topmost label and send it
  set-prec-transmit                 rewrite packet precedence and send it
  set-qos-transmit                  set qos-group and send it
  transmit                          transmit packet
```
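How speed and burst interact is easiest to see with a token bucket. The Python sketch below is a textbook single-rate token-bucket policer, not IOS internals; it assumes the bucket starts full and refills at speed / 8 bytes per second, and the function name is hypothetical. The example reuses the values from `police 100000 8000`.

```python
def police(packets, rate_bps, burst_bytes):
    """Single-rate token-bucket policer.

    packets: list of (arrival_time_s, size_bytes), sorted by time.
    Returns "conform" or "exceed" for each packet.
    """
    tokens = burst_bytes          # assume the bucket starts full
    last = 0.0
    verdicts = []
    for t, size in packets:
        # Refill at rate_bps / 8 bytes per second, never above the burst size.
        tokens = min(burst_bytes, tokens + (t - last) * rate_bps / 8)
        last = t
        if size <= tokens:
            tokens -= size
            verdicts.append("conform")     # conform-action, e.g. transmit
        else:
            verdicts.append("exceed")      # exceed-action, e.g. drop
    return verdicts


# Six 1500-byte packets at once, then one more 100 ms later.
traffic = [(0, 1500)] * 6 + [(0.1, 1500)]
print(police(traffic, rate_bps=100_000, burst_bytes=8000))
# ['conform', 'conform', 'conform', 'conform', 'conform', 'exceed', 'conform']
```

The 8000-byte burst absorbs five back-to-back packets; the sixth finds only 500 bytes of tokens and exceeds, while 100 ms later 1250 bytes have been refilled, enough for the next packet to conform. Note that nothing is delayed: a policer only decides, which is exactly why the next task needs a different tool.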

Another common task: limiting the traffic sent toward a neighbor with a slow link.

To predict accurately which packets will reach the neighbor and which will be dropped because of congestion on the “slow” side, you need a policy on the “fast” side that processes the queues in advance and drops excess packets.

And here we run into a very important point: to solve this problem we have to emulate the “slow” link. For this emulation it is not enough to sort packets into queues; we also have to emulate the physical buffer of the “slow” interface. Each interface has a packet rate, i.e. per unit of time it can transmit no more than N packets. The physical interface buffer is usually sized so that the interface can keep working “autonomously” for several units of time. Therefore the physical buffer of, say, a GigabitEthernet interface is ten times larger than that of any Serial interface.

What is wrong with buffering a lot? Let's look more closely at what happens if the buffer on the fast transmitting side is much larger than the buffer of the receiving host.

For simplicity, let there be a single queue. On the “fast” side we simulate a low transmission rate. This means that packets matching our policy start to accumulate in the queue. Since the physical buffer is large, the logical queue becomes impressively long. Some applications (running over TCP) learn late that some packets were not received and keep a large window for a long time, loading the receiving side. That is the ideal case, when the transmission rate is equal to or lower than the reception rate. But the receiving interface can also be loaded by other packets, and then the small queue on the receiving side cannot accommodate all the packets sent to it from the center. Losses occur and trigger retransmissions, but a solid “tail” of previously accumulated packets still remains in the transmit buffer and is sent “in vain”: the receiving side has not received an earlier packet, so the later ones are simply ignored.

Therefore, to correctly reduce the transmission rate toward a slow neighbor, the physical buffer must be limited as well.

This is done with the command

```
shape average [speed]
```
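Unlike a policer, a shaper delays excess packets instead of dropping them, and the size of its buffer decides how long that delay can grow. The Python sketch below models a shaper with a bounded buffer; it is a simplification under stated assumptions (fixed rate, tail drop when `queue_limit` packets are waiting, a packet still being serialized counts as occupying the buffer), and the function name is hypothetical.

```python
from fractions import Fraction


def shape(packets, rate_bps, queue_limit):
    """Send packets at a fixed rate, delaying rather than dropping them, but
    keep at most `queue_limit` packets in the buffer (tail drop beyond that).

    packets:  list of (arrival_time_s, size_bytes), sorted by arrival time.
    rate_bps: shaped rate as an integer number of bits per second.
    Returns the departure time of each packet, or None if it was dropped.
    """
    departures = []
    in_buffer = []                  # departure times of packets not yet fully sent
    link_free_at = Fraction(0)
    for arrival, size in packets:
        # Packets that have fully left by now free their buffer slots.
        in_buffer = [d for d in in_buffer if d > arrival]
        if len(in_buffer) >= queue_limit:
            departures.append(None)                            # buffer full: tail drop
            continue
        start = max(arrival, link_free_at)
        link_free_at = start + Fraction(size * 8, rate_bps)    # serialization time
        in_buffer.append(link_free_at)
        departures.append(link_free_at)
    return departures


burst = [(0, 1500)] * 5                    # five 1500-byte packets at once
small = shape(burst, rate_bps=1_000_000, queue_limit=3)
print([None if d is None else float(d) for d in small])
# [0.012, 0.024, 0.036, None, None]
print(float(shape(burst, rate_bps=1_000_000, queue_limit=1000)[-1]))
# 0.06
```

With a small buffer the excess is dropped immediately, so the sender learns about congestion quickly; with a huge buffer nothing is dropped, but the last packet already waits 60 ms, and on a real link that queue keeps growing, which is the “tail” problem described above.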

And now the most interesting part: what if, in addition to emulating the physical buffer, I need logical queues inside it, for example to prioritize voice?

For this you create a so-called nested policy, which is applied inside the main one and divides whatever it receives from the parent into logical queues.

Time to work through a complete example based on the picture above.

Suppose we want to build reliable voice channels over the Internet between CO (the central office) and Remote. For simplicity, let the Remote network (172.16.1.0/24) have a connection only to CO (10.0.0.0/8). The interface speed at Remote is 1 Mbit/s, and 25% of this speed is allocated to voice traffic.

First we need to single out the priority traffic class on both sides and create a policy for it. In addition, we create a class that describes the traffic between the offices.

On CO:

```
class-map RTP
 match protocol rtp

policy-map RTP
 class RTP
  priority percent 25

ip access-list extended CO_REMOTE
 permit ip 10.0.0.0 0.255.255.255 172.16.1.0 0.0.0.255

class-map CO_REMOTE
 match access-group name CO_REMOTE
```

On Remote we do it differently: suppose the hardware is too weak to use NBAR, so all we can do is describe the RTP ports explicitly:

```
ip access-list extended RTP
 permit udp 172.16.1.0 0.0.0.255 range 16384 32768 10.0.0.0 0.255.255.255 range 16384 32768

class-map RTP
 match access-group name RTP

policy-map QoS
 class RTP
  priority percent 25
```

Next, on CO we emulate the slow interface and apply the nested policy that prioritizes voice packets:

```
policy-map QoS
 class CO_REMOTE
  shape average 1000000
  service-policy RTP
```

and apply the policy to the interface:

```
int g0/0
 service-policy output QoS
```

On Remote, set the bandwidth parameter (in kbit/s) to match the interface speed. Remember that the 25% is calculated from this parameter. Then apply the policy:

```
int s0/0
 bandwidth 1000
 service-policy output QoS
```

The story would not be complete without covering what switches can do. Clearly, pure L2 switches cannot look that deep into packets and classify them by the same criteria.

On smarter L2/L3 switches, routed interfaces (either an interface vlan, or a port turned into a Layer 3 port with the no switchport command) support the same construction that works on routers. If the port or the whole switch works in L2 mode (true for the 2950/2960 models), only “police” can be used for a traffic class; priority and bandwidth are not available.

From a purely defensive point of view, knowing the basics of QoS helps you quickly relieve bottlenecks caused by worms. As you know, a worm is quite aggressive while spreading and generates a lot of junk traffic, which is essentially a denial-of-service (DoS) attack.

Worms often spread through ports that are required for normal operation (TCP/135, 445, 80, etc.). It would be rash simply to close these ports on the router, so it is more humane to do the following:

1. Collect statistics on network traffic, via NetFlow, NBAR or SNMP.

2. Identify the profile of normal traffic: e.g. according to the statistics, HTTP on average takes no more than 70%, ICMP no more than 5%, and so on. Such a profile can be created manually or from the statistics accumulated by NBAR, for example with AutoQoS :)

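3. Limit each traffic class to its normal share of the bandwidth, for example with police.

4. Apply the whole construction (class-map, then policy-map, then service-policy) to the interface.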
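Comparing fresh statistics against the normal profile from step 2 is straightforward arithmetic. This Python sketch flags the protocols whose current share of traffic exceeds their normal share; those are the classes you would then restrict with a policy such as police. The byte counts, protocol names and function name are hypothetical.

```python
def over_profile(byte_counts, profile):
    """Compare measured traffic shares with a 'normal' profile.

    byte_counts: dict protocol -> bytes seen in the measurement interval.
    profile:     dict protocol -> maximum normal share (0..1).
    Returns the sorted list of protocols exceeding their normal share.
    """
    total = sum(byte_counts.values())
    if total == 0:
        return []
    return sorted(
        proto for proto, limit in profile.items()
        if byte_counts.get(proto, 0) / total > limit
    )


# Hypothetical interval in which ICMP jumps to 20% of traffic (e.g. a scanning worm).
counts = {"http": 600_000, "icmp": 200_000, "other": 200_000}
print(over_profile(counts, {"http": 0.70, "icmp": 0.05}))   # ['icmp']
```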

Quality of Service (QoS) is a technology for providing different classes of traffic with different priorities in service.

First, it is easy to understand that any prioritization makes sense only when there is a queue for service. It is there, in the queue, that you can “slip in” first, using your right.

The queue is formed where the narrow (usually such places are called "bottle neck", bottle-neck). A typical “neck” is an Internet connection to an office, where computers connected to the network at least at a speed of 100 Mbit / s all use the channel to the provider, which rarely exceeds 100 Mbit / s, and often amounts to a small 1-2-10 Mbit / s . For everyone.

')

Secondly, QoS is not a panacea: if the “neck” is too narrow, then the physical interface buffer is often full, where all the packets that are about to exit through this interface are placed. And then newly arrived packages will be destroyed, even if they are super-necessary. Therefore, if the queue on the interface on average exceeds 20% of its maximum size (on cisco routers, the maximum queue size is usually 128-256 packets), there is reason to think hard about the design of your network, build additional routes or extend the band to the provider.

We will deal with the components of technology

(further under the cut, a lot)

Marking In the fields of the headers of various network protocols (Ethernet, IP, ATM, MPLS, etc.) there are special fields allocated for marking traffic. To mark the same traffic is necessary for the subsequent more simple processing in queues.

Ethernet The Class of Service (CoS) field is 3 bits. Allows you to divide traffic into 8 streams with different markings.

IP. There are 2 standards: old and new. In the old one there was a ToS field (8 bits), from which, in turn, 3 bits were allocated called IP Precedence. This field has been copied to the CoS Ethernet header field.

Later a new standard was defined. The ToS field has been renamed DiffServ, and an additional 6 bits are allocated for the Differencial Service Code Point (DSCP) field, in which the parameters required for this type of traffic can be transmitted.

Marking data is best closer to the source of this data. For this reason, most IP phones themselves add the DSCP = EF or CS5 field to the IP header of voice packets. Many applications also tag traffic on their own in the hope that their packets will be processed priority. For example, this "sin" peer-to-peer networks.

The queues.

Even if we do not use any prioritization technologies, this does not mean that there are no queues. In a narrow place the queue will arise in any case and will provide the standard First In First Out FIFO mechanism. Such a queue, obviously, will allow not to destroy the packets immediately, saving them before sending them to the buffer, but will not provide any preferences, say, to voice traffic.

If you want to give some selected class an absolute priority (that is, packets from this class will always be processed first), then this technology is called Priority queuing . All packets that are in the physical outgoing interface buffer will be divided into 2 logical queues and packets from the privileged queue will be sent until it becomes empty. Only then will the packets from the second queue be transmitted. This technology is simple, rather rough, it can be considered obsolete, because processing of non-priority traffic will constantly stop. On cisco routers you can create

4 queues with different priorities. They maintain a strict hierarchy: packets from less privileged queues will not be served until all queues with a higher priority are empty.

Fair Queuing . A technology that allows each class of traffic to grant the same rights. As a rule, it is not used, because gives little in terms of improving the quality of service.

Weighted Fair Queuing (WFQ ). A technology that provides different rights to different classes of traffic (it can be said that the “weight” is different for different queues), but at the same time serves all the queues. "On the fingers" it looks like this: all packets are divided into logical queues, using

IP Precedence as a criterion. The same field sets the priority (the more, the better). Further, the router calculates the packet from which queue "faster" to transmit and sends it.

He considers it according to the formula:

dT = (t (i) -t (0)) / (1 + IPP)

IPP - IP Precedence field value

t (i) - The time required for the actual transmission of the packet by the interface. It can be calculated as L / Speed, where L is the packet length, and Speed is the interface transfer rate

This queue is enabled by default on all interfaces of cisco routers, except for point-to-point interfaces (HDLC or PPP encapsulation).

WFQ has a number of drawbacks: such a queue uses previously marked packets, and does not allow to determine traffic classes and allocated band independently. Moreover, as a rule, no one marks the IP Precedence field, so packets are unmarked, i.e. all fall into one queue.

The development of WFQ has become a weighted fair queue based on classes ( Class-Based Weighted Fair Queuing, CBWFQ ). In this queue, the administrator himself sets up traffic classes, following various criteria, for example, using ACLs as a template or analyzing protocol headers (see NBAR). Next, for these classes

the “weight” is determined and the packets of their queues are serviced in proportion to the weight (more weight - more packets will go out of this queue per unit of time)

But such a queue does not provide for the strict transmission of the most important packets (as a rule, voice packets or packets of other interactive applications). Therefore, a hybrid of Priority and Class-Based Weighted Fair Queuing - PQ-CBWFQ , also known as Low Latency Queuing (LLQ), appeared . In this technology, you can set up to 4 priority queues, the rest of the classes served by the mechanism of CBWFQ

LLQ is the most convenient, flexible and frequently used mechanism. But it requires setting up classes, setting policies and applying policies on the interface.

I will tell you more about the settings further.

Thus, the process of providing quality service can be divided into 2 stages:

Marking Close to the sources.

Packet handling Putting them in a physical queue on the interface, subdivision into logical queues and providing these logical queues with various resources.

QoS technology is quite resource-intensive and loads the processor quite significantly. And the more it loads, the deeper the headers have to climb to classify packets. For comparison: it is much easier for a router to look at the header of an IP packet and analyze 3 bits of IPP there, rather than unwind the stream almost to the application level, determining what protocol goes inside (NBAR technology)

To simplify further traffic processing, as well as to create a so-called “trusted boundary” (trusted boundary), where we believe all QoS-related headers, we can do the following:

1. On switches and access level routers (close to client machines), catch packets, scatter them into classes

2. In the policy as an action, repaint the headers in one's own way or transfer the values of the higher level QoS headers to the lower ones.

For example, on the router we catch all the packets from the guest WiFi domain (we assume that there may be non-managed computers and software that can use non-standard QoS headers), change any IP headers to default ones, match header 3 headers (DSCP) level (CoS)

so that further switches can efficiently prioritize traffic using only the data link layer label.

LLQ Setup

Setting up the queues is to configure the classes, then for these classes it is necessary to determine the parameters of the bandwidth and apply the entire structure to the interface.

Creating classes:

class-map NAME

match?

access-group Access group

Any Any packets

class-map Class map

cos IEEE 802.1Q / ISL class of service / user priority values

destination-address Destination address

discard-class Discard behavior identifier

dscp Match DSCP in IP (v4) and IPv6 packets

flow flow based QoS parameters

fr-de Match on frame-relay de bit

fr-dlci Match on fr-dlci

input interface select an input interface to match

ip IP specific values

mpls multi protocol label switching

not Negate this match result

packet layer 3 packet length

precedence Match Precedence in IP (v4) and IPv6 packets

protocol protocol

qos-group Qos-group

source-address Source address

vlan vlans to match

Packages can be sorted into classes by various attributes, for example, by specifying an ACL as a template, or by a DSCP field, or by highlighting a specific protocol (NBAR technology is turned on)

Creating a policy:

policy-map POLICY

class NAME1

?

bandwidth bandwidth

compression Activate Compression

drop Drop all packets

log log IPv4 and ARP packets

netflow-sampler netflow action

police police

priority Strict Scheduling Priority for this Class

queue-limit Queue Max Threshold for Tail Drop

Random -detect Enable Early Detection as drop policy

service-policy Configure Flow Next

set set QoS values

shape Traffic Shaping

For each class in politics, you can either allocate a priority piece of the band:

policy-map POLICY

class NAME1

priority?

[8-2000000] Kilo Bits per second

percent % of total bandwidth

and then packages of this class will always be able to count at least on this piece.

Or describe what “weight” this class has within the CBWFQ

policy-map POLICY

class NAME1

bandwidth?

[8-2000000] Kilo Bits per second

percent % of total Bandwidth

remaining % of the remaining bandwidth

In both cases, you can specify both the absolute value and the percentage of the entire available band.

A reasonable question arises: how does the router know the whole band? The answer is trivial: from the bandwidth parameter on the interface. Even if it is not configured explicitly, some of its value is required. It can be viewed with the sh int command.

Also be sure to remember that by default you do not dispose of the entire band, but only 75%. Packages that are clearly not included in other classes fall into the class-default. This setting for the default class can be set explicitly.

policy-map POLICY

class class-default

bandwidth percent 10

(UPD, thanks OlegD)

Change the maximum available band from the default 75% can be a command on the interfacemax-reserved-bandwidth [percent]

Routers jealously watch that the admin does not accidentally give out more bandwidth than there is and swear at such attempts.

It seems that the policy will issue no more than what is written to the classes. However, this situation will be only if all the queues are full. If some kind of is empty, then the line allocated to it will fill the queues in proportion to its “weight”.

All this construction will work like this:

If there are packets from the class with the priority indication, then the router focuses on the transfer of these packets. Moreover, since There may be several such priority queues, then the band is divided between them in proportion to the specified percentages.

Once all priority packets have ended, the CBWFQ queue comes. For each countdown from each queue, the share of packets specified in the settings for this class is “scratched out”. If part of the queues is empty, then their band is divided in proportion to the class “weight” between the loaded queues.

Application on the interface:

int s0 / 0

service-policy [input | output] POLICY

And what to do if you need to strictly cut packages from the class that go beyond the permitted speed? After all, the indication of bandwidth only distributes the band between classes when the queues are loaded.

To solve this problem, for the traffic class, the policy has technology

police [speed] [birst] conform-action [action] exceed-action [action]

it allows you to explicitly specify the desired average speed (speed), the maximum “outlier”, i.e. amount of data transmitted per unit of time. The larger the “overshoot”, the greater the actual transfer rate may deviate from the desired average. Also indicated: action for normal traffic, not exceeding

specified speed and action for traffic exceeding the average speed. Actions may be

police 100000 8000 conform-action?

drop drop packet

exceed-action

conform + exceed burst

set-clp-transmit set atm clp and send it

set-discard-class-transmit set discard-class and send it

set-dscp-transmit set-up dscp and send it

FR DE send and send it

set-mpls-exp-imposition-transmit set

set-mpls-exp-top- set

set-prec-transmit packet forwarding and send it

set-qos-transmit set-qos-group and send it

transmit transmit packet

Often there is also another task. Suppose that it is necessary to limit the flow going towards the neighbor with the slow channel.

In order to accurately predict which packets will reach the neighbor, and which will be destroyed due to channel congestion on the “slow” side, it is necessary to create a policy on the “fast” side that would process queues in advance and destroy redundant packets.

And here we run into a very important point: to solve this problem, we need to emulate the “slow” link. For this emulation it is not enough just to sort packets into queues; we also need to emulate the physical buffer of the “slow” interface. Each interface has a packet rate, i.e. per unit of time it can transmit no more than N packets. The physical interface buffer is usually sized so that the interface can keep transmitting “autonomously” for several units of time. Therefore the physical buffer of, say, a GigabitEthernet interface will be many times larger than that of any Serial interface.
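The link between interface speed and buffer size is simple arithmetic. A minimal sketch follows. It assumes the buffer is sized to keep the interface busy for a fixed time (50 ms here) with 1500-byte packets. Both numbers are illustrative assumptions, not Cisco defaults.

```python
def buffer_packets(rate_bps, drain_time_ms, packet_bytes=1500):
    """Packets needed to keep an interface transmitting for drain_time_ms.

    Integer arithmetic: bits sent in drain_time_ms divided by bits per packet.
    """
    bits = rate_bps * drain_time_ms // 1000
    return bits // (packet_bytes * 8)


gig = buffer_packets(1_000_000_000, 50)   # GigabitEthernet
e1 = buffer_packets(2_048_000, 50)        # E1 serial link, 2048 kbit/s
```

Under these assumptions a GigabitEthernet port would need about 4166 packets of buffer, while a 2048 kbit/s E1 serial link needs only 8.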

What is wrong with buffering a lot? Let's take a closer look at what happens if the buffer on the fast, transmitting side is substantially larger than the buffer on the receiving side.

For simplicity, assume there is a single queue. On the “fast” side we emulate a low transmission rate, so packets that match our policy start accumulating in the queue. Since the physical buffer is large, the logical queue becomes large too. Some applications (working over TCP) learn late that some packets were not received and keep a large window for a long time, loading the receiving side. And that is the ideal case, when the transmission rate is equal to or lower than the reception rate.

But the receiving interface may also be loaded by other traffic, and then its small queue cannot hold all the packets sent to it from the central office. Losses occur and trigger retransmissions, but a long “tail” of previously queued packets still sits in the transmit buffer and is sent in vain: the receiving side has not received an earlier packet, so the later ones are simply ignored.
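The size of that stale “tail” is easy to estimate: the time a queue needs to drain is its size in bits divided by the emulated rate. A minimal sketch follows. It assumes full-size 1500-byte packets and a 1 Mbit/s emulated rate; the queue sizes are illustrative.

```python
def queue_delay_s(queued_packets, packet_bytes, rate_bps):
    """Seconds needed to transmit everything currently in the queue."""
    return queued_packets * packet_bytes * 8 / rate_bps


big = queue_delay_s(256, 1500, 1_000_000)    # large buffer inherited from a fast port
small = queue_delay_s(8, 1500, 1_000_000)    # buffer limited to the slow link's scale
```

A 256-packet queue holds about 3 seconds of traffic at 1 Mbit/s, so a packet at its tail waits roughly 3 seconds before it is even sent. An 8-packet queue holds less than 0.1 second.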

Therefore, to correctly reduce the transmission rate toward a slow neighbor, the physical buffer must also be limited.

This is done with the command

shape average [speed]
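Unlike police, a shaper delays excess packets instead of dropping them immediately, and only drops when its limited queue is full. The Python sketch below is a simplified model under stated assumptions. Packets leave one at a time at rate_bps, and a packet counts against queue_limit until it has finished transmitting. It is not how IOS implements shape average. It only illustrates why a bounded queue keeps the backlog, and therefore the delay, small. The function name and traffic are hypothetical.

```python
from collections import deque


def shape(arrivals, rate_bps, queue_limit):
    """Return the finish time of each (arrival_time_s, size_bytes) packet, or None if dropped."""
    backlog = deque()       # finish times of accepted packets not yet fully sent
    link_free_at = 0.0      # when the emulated slow link finishes its current packet
    result = []
    for t, size in arrivals:
        # Forget packets that have already left the interface by time t.
        while backlog and backlog[0] <= t:
            backlog.popleft()
        if len(backlog) >= queue_limit:
            result.append(None)          # queue full: tail drop
            continue
        start = max(t, link_free_at)     # wait for the link to become free
        finish = start + size * 8 / rate_bps
        link_free_at = finish
        backlog.append(finish)
        result.append(finish)
    return result


finishes = shape([(0.0, 1000)] * 4 + [(1.5, 1000)], 8000, 2)
```

Four 1000-byte packets arrive together on an 8000 bit/s link with a two-packet limit. Two are sent, finishing at 1 s and 2 s, and two are dropped at once instead of waiting in a long queue. The packet arriving at 1.5 s finds room and leaves at 3 s.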

And now the most interesting part: what if, in addition to emulating the physical buffer, we need to create logical queues inside it, for example to prioritize voice?

For this, a so-called nested (child) policy is created. It is applied inside the main (parent) policy and divides the traffic it receives from the parent into logical queues.

It is time to walk through a detailed example based on the picture above.

Suppose we want to build reliable voice channels over the Internet between the CO (central office) and the Remote office. For simplicity, let the Remote network (172.16.1.0/24) be connected only to the CO (10.0.0.0/8). The interface speed on the Remote is 1 Mbit/s, and 25% of this speed is allocated to voice traffic.

First, we need to define the priority traffic class on both sides and create a policy for it. We also create a class that describes the traffic between the offices.

On the CO:

class-map RTP

match protocol rtp

policy-map RTP

class RTP

priority percent 25

ip access-list extended CO_REMOTE

permit ip 10.0.0.0 0.255.255.255 172.16.1.0 0.0.0.255

class-map CO_REMOTE

match access-group name CO_REMOTE
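In these access lists the second value of each address pair is a wildcard mask: 1 bits mean “don't care”, and 0 bits must match. A minimal Python sketch of how the CO_REMOTE entry classifies a packet by source and destination follows. The function names are hypothetical.

```python
import ipaddress


def wildcard_match(addr, base, wildcard):
    """True if addr matches an ACL address 'base wildcard' pair.

    Bits set to 1 in the wildcard are ignored; bits set to 0 must equal base.
    """
    a = int(ipaddress.IPv4Address(addr))
    b = int(ipaddress.IPv4Address(base))
    care = ~int(ipaddress.IPv4Address(wildcard)) & 0xFFFFFFFF
    return (a & care) == (b & care)


def matches_co_remote(src, dst):
    # permit ip 10.0.0.0 0.255.255.255 172.16.1.0 0.0.0.255
    return (wildcard_match(src, "10.0.0.0", "0.255.255.255")
            and wildcard_match(dst, "172.16.1.0", "0.0.0.255"))
```

For contiguous masks like these, Python's ipaddress also accepts the wildcard directly as a host mask: ip_network("10.0.0.0/0.255.255.255") is 10.0.0.0/8. The explicit bitwise form above also covers non-contiguous wildcards.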

On the Remote we'll do it differently: suppose the hardware is too weak to use NBAR, so we have to describe the RTP ports explicitly:

ip access-list extended RTP

permit udp 172.16.1.0 0.0.0.255 range 16384 32768 10.0.0.0 0.255.255.255 range 16384 32768

class-map RTP

match access-group name RTP

policy-map QoS

class RTP

priority percent 25
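The RTP access list on the Remote can be read the same way. Below is a Python sketch, assuming a packet is described by protocol, addresses and ports (a hypothetical representation). Note that ACL port ranges are inclusive. The list matches any UDP traffic in that port range, not only RTP, and only in the Remote to CO direction, which is what the output policy on the Remote's serial interface sees.

```python
import ipaddress

REMOTE_LAN = ipaddress.ip_network("172.16.1.0/24")   # 172.16.1.0 0.0.0.255
CO_LAN = ipaddress.ip_network("10.0.0.0/8")          # 10.0.0.0 0.255.255.255
RTP_PORTS = range(16384, 32768 + 1)                  # ACL 'range' includes both ends


def is_rtp(proto, src, sport, dst, dport):
    """Mimic: permit udp 172.16.1.0 0.0.0.255 range 16384 32768
                          10.0.0.0 0.255.255.255 range 16384 32768"""
    return (proto == "udp"
            and ipaddress.ip_address(src) in REMOTE_LAN and sport in RTP_PORTS
            and ipaddress.ip_address(dst) in CO_LAN and dport in RTP_PORTS)
```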

Next, on the CO we emulate the slow interface and apply the nested policy to prioritize voice packets:

policy-map QoS

class CO_REMOTE

shape average 1000000

service-policy RTP

and apply the policy to the interface:

interface GigabitEthernet0/0

service-policy output QoS

On the Remote, set the bandwidth parameter (in kbps) to match the interface speed. Recall that the 25% is calculated from this parameter. Then apply the policy:

interface Serial0/0

bandwidth 1000

service-policy output QoS
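A quick check of the numbers: on both sides priority percent 25 is computed from a 1000 kbit/s base. On the Remote the base is bandwidth 1000. On the CO, assuming the child policy's percentages are taken from the parent's shaped rate, the base is shape average 1000000, given in bit/s. A minimal sketch:

```python
def priority_kbps(percent, base_kbps):
    """Rate reserved by 'priority percent' for a given base rate in kbit/s."""
    return base_kbps * percent / 100


co = priority_kbps(25, 1_000_000 / 1000)   # shape average 1000000 bit/s -> 1000 kbit/s
remote = priority_kbps(25, 1000)           # bandwidth 1000 (already in kbit/s)
```

Both ends reserve 250 kbit/s for voice and leave 750 kbit/s for everything else.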

The story would not be complete without covering switches. Pure L2 switches obviously cannot look this deep into packets and classify them by the same criteria.

On smarter L2/L3 switches, routed interfaces (either an interface vlan, or a port taken out of Layer 2 mode with the no switchport command) use the same construction as routers. If the port or the whole switch works in L2 mode (as on the 2950/2960 models), only police can be used for a traffic class; priority and bandwidth are not available.

From a purely defensive point of view, knowing the fundamentals of QoS helps you quickly eliminate bottlenecks caused by worm activity. As you know, a worm is quite aggressive during its propagation phase and generates a lot of parasitic traffic, which essentially amounts to a denial-of-service (DoS) attack.

Worms often spread through ports that are needed for normal operation (TCP/135, 445, 80, etc.). It would be rash to simply close these ports on the router, so a gentler approach is:

1. Collect statistics on network traffic via NetFlow, NBAR, or SNMP.

2. Identify the profile of normal traffic: for example, according to the statistics, HTTP takes on average no more than 70%, ICMP no more than 5%, and so on. Such a profile can be created either manually or from the statistics accumulated by NBAR.

autoqos :)

3.

4. (class-map → policy-map → service-policy)
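Step 2 can be automated once per-protocol counters are available. Below is a minimal Python sketch. It assumes byte counts per protocol have already been collected into a dict (for example from NetFlow, NBAR or SNMP). The function name and sample numbers are hypothetical; the limits are the ones from the text.

```python
PROFILE_PCT = {"http": 70, "icmp": 5}   # normal-traffic profile from step 2


def over_profile(bytes_by_proto, profile_pct=PROFILE_PCT):
    """Return the protocols whose share of total bytes exceeds their profile limit."""
    total = sum(bytes_by_proto.values())
    if total == 0:
        return []
    flagged = []
    for proto, limit in profile_pct.items():
        share = 100 * bytes_by_proto.get(proto, 0) / total
        if share > limit:
            flagged.append(proto)
    return sorted(flagged)


icmp_storm = over_profile({"http": 600, "icmp": 200, "other": 200})
```

In the sample, ICMP takes 20% of the bytes against a 5% limit and is flagged, while HTTP at 60% stays within its profile. Flagged classes are candidates for a police limit rather than a blanket port block.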

Source: https://habr.com/ru/post/62831/
