How to cut backup storage in object storage by up to 90%

Our Turkish clients asked us to set up backup for their data center properly. We do similar projects in Russia, but this time the work was more about researching how best to do it.

Given: an on-premises S3 storage; Veritas NetBackup, which has gained new functionality for moving data to object storage, now with deduplication support; and a shortage of free space in that on-premises storage.

Objective: make backup storage fast and cheap.


Before this, everything in S3 was stored as plain files: complete images of the data center's critical machines. Not particularly optimized, but it worked as a starting point. Now it was time to sort it out and do it right.

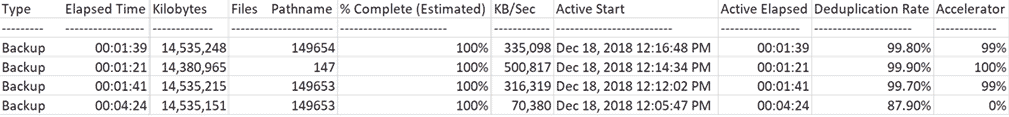

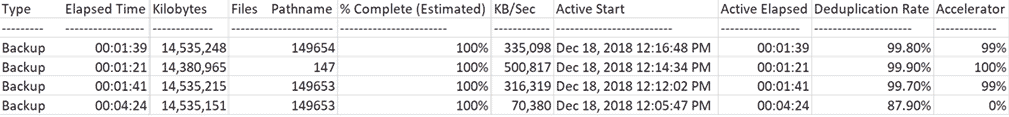

In the picture, what we have come to:

As you can see, the first backup ran slowly (about 70 MB/s; 70,380 KB/s in the job log), while subsequent backups of the same systems were much faster (316,319 to 500,817 KB/s).

Below are more details on how it works.



Backup logs, for those ready to read half a page of dump output

Full with rescan

```
Dec 18, 2018 12:09:43 PM - Info bpbkar (pid = 4452) accelerator sent 14883996160 bytes out of 14883994624 bytes to server, optimization 0.0%
Dec 18, 2018 12:10:07 PM - Info NBCC (pid = 23002) StorageServer = PureDisk_rhceph_rawd: s3.cloud.ngn.com.tr; Report = PDDO Stats (multi-threaded stream used) for (NBCC): scanned: 14570817 KB, CR sent: 1760761 KB, CR sent over FC: 0 KB, dedup: 87.9%, cache disabled
```

Full

```
Dec 18, 2018 12:13:18 PM - Info bpbkar (pid = 2864) accelerator sent 181675008 bytes out of 14884060160 bytes to server, optimization 98.8%
Dec 18, 2018 12:13:40 PM - Info NBCC (pid = 23527) StorageServer = PureDisk_rhceph_rawd: s3.cloud.ngn.com.tr; Report = PDDO Stats for (NBCC): scanned: 14569706 KB, CR sent: 45145 KB, CR sent over FC: 0 KB, dedup: 99.7%, cache disabled
```

Incremental

```
Dec 18, 2018 12:15:32 PM - Info bpbkar (pid = 792) accelerator sent 9970688 bytes out of 14726108160 bytes to server, optimization 99.9%
Dec 18, 2018 12:15:53 PM - Info NBCC (pid = 23656) StorageServer = PureDisk_rhceph_rawd: s3.cloud.ngn.com.tr; Report = PDDO Stats for (NBCC): scanned: 14383788 KB, CR sent: 15700 KB, CR sent over FC: 0 KB, dedup: 99.9%, cache disabled
```

Full

```
Dec 18, 2018 12:18:02 PM - Info bpbkar (pid = 3496) accelerator sent 171746816 bytes out of 14884093952 bytes to server, optimization 98.8%
Dec 18, 2018 12:18:24 PM - Info NBCC (pid = 23878) StorageServer = PureDisk_rhceph_rawd: s3.cloud.ngn.com.tr; Report = PDDO Stats for (NBCC): scanned: 14569739 KB, CR sent: 34120 KB, CR sent over FC: 0 KB, dedup: 99.8%, cache disabled
```
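To see what the two percentages in these lines mean, we can recompute them from the raw counters. The Python sketch below assumes the Accelerator figure is 1 - sent/total and the deduplication figure is 1 - (CR sent)/scanned. Those formulas and the regular expressions are our reading of the lines above, not documented NetBackup definitions. Under these assumptions, the results match the logged values to one decimal place.

```python
import re

# Patterns fitted to the job-detail lines quoted above (an assumption,
# not an official NetBackup log grammar).
ACCEL_RE = re.compile(r"accelerator sent (\d+) bytes out of (\d+) bytes")
PDDO_RE = re.compile(r"scanned: (\d+) KB, CR sent: (\d+) KB")


def accelerator_optimization(line: str) -> float:
    """Share (%) of the client's data that the Accelerator did not have to send."""
    sent, total = map(int, ACCEL_RE.search(line).groups())
    # On a full rescan 'sent' can slightly exceed 'total'; report that as 0%.
    return max(0.0, 100.0 * (1 - sent / total))


def dedup_rate(line: str) -> float:
    """Share (%) of scanned data that did not have to be sent to storage."""
    scanned, cr_sent = map(int, PDDO_RE.search(line).groups())
    return 100.0 * (1 - cr_sent / scanned)


# (schedule, bpbkar line, NBCC line), trimmed to the relevant fragments.
RUNS = [
    ("Full with rescan",
     "accelerator sent 14883996160 bytes out of 14883994624 bytes to server",
     "scanned: 14570817 KB, CR sent: 1760761 KB, CR sent over FC: 0 KB"),
    ("Full",
     "accelerator sent 181675008 bytes out of 14884060160 bytes to server",
     "scanned: 14569706 KB, CR sent: 45145 KB, CR sent over FC: 0 KB"),
    ("Incremental",
     "accelerator sent 9970688 bytes out of 14726108160 bytes to server",
     "scanned: 14383788 KB, CR sent: 15700 KB, CR sent over FC: 0 KB"),
    ("Full",
     "accelerator sent 171746816 bytes out of 14884093952 bytes to server",
     "scanned: 14569739 KB, CR sent: 34120 KB, CR sent over FC: 0 KB"),
]

if __name__ == "__main__":
    for schedule, bpbkar, nbcc in RUNS:
        print(f"{schedule:17} accelerator {accelerator_optimization(bpbkar):5.1f}%"
              f"  dedup {dedup_rate(nbcc):5.1f}%")
```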


What is the problem

Customers want to back up as often as possible and store backups as cheaply as possible. The cheapest place to keep them is S3-type object storage: of all storage you can restore from in a reasonable time, it has the lowest maintenance cost per megabyte. Once there are many backups, though, even this stops being cheap, because most of the space is taken up by copies of the same data. For HaaS, our Turkish colleagues can shrink storage by roughly 80-90%. That figure depends on their particular workloads, but I would count on at least 50% deduplication in any case.

To address this, the major vendors have long offered gateways to Amazon S3. All of these approaches also work with on-premises S3, provided it supports the Amazon S3 API. In the Turkish data center, backups go to our S3, as they do at our Tier III “Compressor” data center in Russia, because this scheme has proven itself for us.

Our S3 is fully compatible with the Amazon S3 methods used for backup, so any backup tool that supports them can write to our storage out of the box.

Veritas NetBackup added a feature for this, CloudCatalyst:

In other words, an intermediate Linux server sits between the machines being backed up and the gateway. Backup traffic from the backup agents passes through it and is deduplicated on the fly before it is sent to S3. Where there used to be 30 compressed backups of 20 GB each, they now take up 90% less space, because the machines are so similar. The deduplication engine is the same one NetBackup uses when storing backups on ordinary disks.
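Here is the same claim in numbers. It assumes the 90% reduction applies to the combined compressed size of all 30 backups. For comparison, the last line uses the 50% deduplication floor suggested earlier:

$$
\begin{aligned}
30 \times 20\ \text{GB} &= 600\ \text{GB} \\
600\ \text{GB} \times (1 - 0.9) &= 60\ \text{GB} \\
600\ \text{GB} \times (1 - 0.5) &= 300\ \text{GB}
\end{aligned}
$$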

Here is what happens before the data reaches the staging server:

We tested it and concluded that, deployed in our data centers, it saves S3 space both for us and for our customers. As a commercial data center operator we do charge for occupied volume, but it is still very profitable for us: we earn on the more scalable software side rather than on hardware rental, and it also cuts our internal costs.

Logs

228 jobs (0 queued, 0 active, 0 waiting for retry, 0 suspended, 0 incomplete, 228 done); filter applied, 13 selected. Fields common to all 13 rows: policy VMware, media server NBCC, storage unit `STU_DP_S3 _ **** backup`, attempt 1, 100% complete, owner root, data movement Instant Recovery Disk, off-host type Standard, master server `WIN - ***********`, priority 0.

| Job ID | Type | State (status) | Schedule | Client | Start time | Elapsed | End time | Active start | Active elapsed | Kilobytes | Files | Job PID | Parent job ID | KB/s | Dedup rate | Accelerator optimization |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1358 | Snapshot | Done (0) | - | NGNCloudADC | Dec 18, 2018 12:16:19 PM | 00:02:18 | Dec 18, 2018 12:18:37 PM | 12:16:27 PM | 00:02:10 | | | | 1358 | | | |
| 1360 | Backup | Done (0) | Full | NGNCloudADC | Dec 18, 2018 12:16:48 PM | 00:01:39 | Dec 18, 2018 12:18:27 PM | 12:16:48 PM | 00:01:39 | 14,535,248 | 149654 | 23858 | 1358 | 335,098 | 99.8% | 99% |
| 1352 | Snapshot | Done (0) | - | NGNCloudADC | Dec 18, 2018 12:14:04 PM | 00:02:01 | Dec 18, 2018 12:16:05 PM | 12:14:14 PM | 00:01:51 | | | | 1352 | | | |
| 1354 | Backup | Done (0) | Incremental | NGNCloudADC | Dec 18, 2018 12:14:34 PM | 00:01:21 | Dec 18, 2018 12:15:55 PM | 12:14:34 PM | 00:01:21 | 14,380,965 | 147 | 23617 | 1352 | 500,817 | 99.9% | 100% |
| 1347 | Snapshot | Done (0) | - | NGNCloudADC | Dec 18, 2018 12:11:45 PM | 00:02:08 | Dec 18, 2018 12:13:53 PM | 12:11:45 PM | 00:02:08 | | | | 1347 | | | |
| 1349 | Backup | Done (0) | Full | NGNCloudADC | Dec 18, 2018 12:12:02 PM | 00:01:41 | Dec 18, 2018 12:13:43 PM | 12:12:02 PM | 00:01:41 | 14,535,215 | 149653 | 23508 | 1347 | 316,319 | 99.7% | 99% |
| 1341 | Snapshot | Done (0) | - | NGNCloudADC | Dec 18, 2018 12:05:28 PM | 00:04:53 | Dec 18, 2018 12:10:21 PM | 12:05:28 PM | 00:04:53 | | | | 1341 | | | |
| 1342 | Backup | Done (0) | Full_Rescan | NGNCloudADC | Dec 18, 2018 12:05:47 PM | 00:04:24 | Dec 18, 2018 12:10:11 PM | 12:05:47 PM | 00:04:24 | 14,535,151 | 149653 | 22999 | 1341 | 70,380 | 87.9% | 0% |
| 1339 | Snapshot | Done (150) | - | NGNCloudADC | Dec 18, 2018 11:05:46 AM | 00:00:53 | Dec 18, 2018 11:06:39 AM | 11:05:46 AM | 00:00:53 | | | | 1339 | | | |
| 1327 | Snapshot | Done (0) | - | `*******. ********. Cloud` | Dec 17, 2018 12:54:42 PM | 05:51:38 | Dec 17, 2018 6:46:20 PM | 12:54:42 PM | 05:51:38 | | | | 1327 | | | |
| 1328 | Backup | Done (0) | Full | `*******. ********. Cloud` | Dec 17, 2018 12:55:10 PM | 05:29:21 | Dec 17, 2018 6:24:31 PM | 12:55:10 PM | 05:29:21 | 222,602,719 | 258932 | 12856 | 1327 | 11,326 | 87.9% | 0% |
| 1136 | Snapshot | Done (0) | - | `*******. ********. Cloud` | Dec 14, 2018 4:48:22 PM | 04:05:16 | Dec 14, 2018 8:53:38 PM | 4:48:22 PM | 04:05:16 | | | | 1136 | | | |
| 1140 | Backup | Done (0) | Full_Scan | `*******. ********. Cloud` | Dec 14, 2018 4:49:14 PM | 03:49:58 | Dec 14, 2018 8:39:12 PM | 4:49:14 PM | 03:49:58 | 217,631,332 | 255465 | 26438 | 1136 | 15,963 | 45.2% | 0% |
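As a sanity check on the "about 70 MB/s" figure and on how much faster the later runs were, here is a small Python sketch over the four backup rows of the 18 December test. It assumes the KB/s column converts at 1 MB = 1000 KB. The job tuples are copied from the job list, and the helper names are our own.

```python
# Backup rows from the 18 December test in the job list above:
# (job id, schedule, elapsed "HH:MM:SS", KB/s)
JOBS = [
    (1342, "Full_Rescan", "00:04:24", 70_380),
    (1349, "Full", "00:01:41", 316_319),
    (1354, "Incremental", "00:01:21", 500_817),
    (1360, "Full", "00:01:39", 335_098),
]


def to_seconds(hms: str) -> int:
    """Convert an Activity Monitor duration such as '00:04:24' to seconds."""
    hours, minutes, secs = map(int, hms.split(":"))
    return hours * 3600 + minutes * 60 + secs


def summarize(jobs):
    """Yield (job id, schedule, MB/s, elapsed time of the first job / this job)."""
    baseline = to_seconds(jobs[0][2])
    for job_id, schedule, elapsed, kb_per_sec in jobs:
        # Assumes 1 MB = 1000 KB; with 1024 the MB/s figures drop by about 2.3%.
        yield job_id, schedule, kb_per_sec / 1000, baseline / to_seconds(elapsed)


if __name__ == "__main__":
    for job_id, schedule, mb_s, ratio in summarize(JOBS):
        print(f"{job_id} {schedule:12} {mb_s:6.1f} MB/s  elapsed-time ratio {ratio:.2f}x")
```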


The Accelerator reduces traffic from the agents because only changed data is transmitted. Even full backups are not sent in their entirety: the media server assembles each subsequent full backup from the incremental changes.
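Here is a toy model of the idea. The client compares the current disk image with the previous one block by block and sends only the blocks that changed. The media server then assembles the new full backup from the previous full plus those blocks. This is our own simplification for illustration. The function names and the 4-byte block size are hypothetical, and the sketch does not model how NetBackup Accelerator actually tracks changes.

```python
BLOCK_SIZE = 4  # hypothetical toy block size; real block sizes are far larger


def split_blocks(data: bytes, size: int = BLOCK_SIZE) -> list[bytes]:
    """Cut a disk image into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def changed_blocks(previous: list[bytes], current: list[bytes]) -> dict[int, bytes]:
    """Client side: only blocks that differ from the previous backup are sent."""
    return {
        index: block
        for index, block in enumerate(current)
        if index >= len(previous) or previous[index] != block
    }


def synthesize_full(previous: list[bytes], changes: dict[int, bytes],
                    block_count: int) -> list[bytes]:
    """Media-server side: new full = previous full with the changed blocks patched in."""
    return [changes[i] if i in changes else previous[i] for i in range(block_count)]


if __name__ == "__main__":
    old = split_blocks(b"AAAABBBBCCCC")
    new = split_blocks(b"AAAAXXXXCCCCDD")
    delta = changed_blocks(old, new)
    print(delta)  # only blocks 1 and 3 travel over the network
    rebuilt = synthesize_full(old, delta, len(new))
    assert b"".join(rebuilt) == b"AAAAXXXXCCCCDD"
```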

The intermediate server has its own storage where it keeps a data cache and the deduplication database.

The full architecture looks like this:

- The master server manages the configuration, updates, and more, and is located in the cloud.

- The media server (an intermediate *nix machine) should be placed as close as possible, in network terms, to the systems being backed up. It deduplicates the backups of all those machines.

- Agents on the protected machines generally send the media server only data that is not already in its storage.

It all starts with a full scan, which is a complete full backup. At this stage the media server takes in everything, deduplicates it and transfers it to S3. Throughput into the media server is low; throughput out of it is higher. The main limiting factor is the server's processing power.

Subsequent backups look like full backups to every system involved, but in reality they are closer to synthetic full backups. Only data blocks not previously seen in the VM backups are actually transferred to and written on the media server. In turn, only blocks whose hash is not in the media server's deduplication database are transferred to and written in S3. Put simply, only data that has not appeared in any earlier backup of any VM.
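The second filter, on the way from the media server to S3, can be sketched as a fingerprint index. Every chunk is hashed, and only chunks whose hash is not yet in the index are written to the bucket. This simplified model assumes fixed-size chunks, SHA-256 fingerprints and a Python dict standing in for the S3 bucket. It is not NetBackup's deduplication engine, the class and method names are hypothetical, and it does not model the first filter between agent and media server.

```python
import hashlib

CHUNK_SIZE = 4  # hypothetical toy chunk size


class DedupMediaServer:
    """Toy media server: a fingerprint index in front of an object store."""

    def __init__(self) -> None:
        self.index: set[str] = set()        # deduplication database (fingerprints)
        self.bucket: dict[str, bytes] = {}  # stands in for the S3 bucket
        self.bytes_uploaded = 0             # data actually written to "S3"

    def backup(self, data: bytes) -> list[str]:
        """Store one backup image; return its recipe (ordered list of fingerprints)."""
        recipe = []
        for offset in range(0, len(data), CHUNK_SIZE):
            chunk = data[offset:offset + CHUNK_SIZE]
            fingerprint = hashlib.sha256(chunk).hexdigest()
            if fingerprint not in self.index:
                # Unseen chunk: record its hash and upload it exactly once.
                self.index.add(fingerprint)
                self.bucket[fingerprint] = chunk
                self.bytes_uploaded += len(chunk)
            recipe.append(fingerprint)
        return recipe


if __name__ == "__main__":
    server = DedupMediaServer()
    server.backup(b"AAAABBBBCCCC")  # first full: every chunk is new
    server.backup(b"AAAABBBBDDDD")  # next full: only DDDD is new
    print(server.bytes_uploaded)    # 16 of the 24 bytes backed up
```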

On restore, the media server requests the required deduplicated objects from S3, rehydrates them and passes them to the backup agents. This means you have to plan for restore traffic: it equals the actual volume of the data being restored.
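Rehydration is the reverse step. The media server walks a backup's recipe (the ordered list of chunk fingerprints), fetches the chunks from object storage and reassembles the original bytes for the agent. This sketch assumes toy fixed-size chunking and an in-memory dict in place of S3. Each unique chunk is fetched only once, yet the agent still receives the full logical volume, which is the point made above. The names are hypothetical.

```python
import hashlib
from typing import Callable


def rehydrate(recipe: list[str], fetch: Callable[[str], bytes]) -> tuple[bytes, int, int]:
    """Rebuild a backup image from its recipe.

    Returns (data, bytes read from object storage, bytes sent to the agent).
    """
    fetched: dict[str, bytes] = {}
    for fingerprint in recipe:
        if fingerprint not in fetched:  # read each unique chunk only once
            fetched[fingerprint] = fetch(fingerprint)
    data = b"".join(fetched[fp] for fp in recipe)
    read_from_storage = sum(len(chunk) for chunk in fetched.values())
    return data, read_from_storage, len(data)


if __name__ == "__main__":
    image = b"ABCDABCDABCDEFGH"  # highly repetitive toy image
    chunks = [image[i:i + 4] for i in range(0, len(image), 4)]
    bucket = {hashlib.sha256(c).hexdigest(): c for c in chunks}
    recipe = [hashlib.sha256(c).hexdigest() for c in chunks]

    data, from_s3, to_agent = rehydrate(recipe, bucket.__getitem__)
    assert data == image
    print(from_s3, to_agent)  # 8 bytes read from storage, 16 bytes sent to the agent
```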

Here's what it looks like:

And here is another piece of the logs, this time for a restore job:

169 jobs (0 queued, 0 active, 0 waiting for retry, 0 suspended, 0 incomplete, 169 done); 1 selected:

| Field | Value |
| --- | --- |
| Job ID | 1372 |
| Type | Restore |
| State (status) | Done (0) |
| Client | nbpr01 |
| Media server | NBCC |
| Start time | Dec 19, 2018 1:05:58 PM |
| Elapsed time | 00:04:32 |
| End time | Dec 19, 2018 1:10:30 PM |
| Attempt | 1 |
| Kilobytes | 14,380,577 |
| Files | 1 |
| % complete | 100% |
| Job PID | 8548 |
| Owner | root |
| Parent job ID | 1372 |
| KB/s | 70,567 |
| Active start | Dec 19, 2018 1:06:00 PM |
| Active elapsed | 00:04:30 |
| Master server | `WIN - ***********` |
| Priority | 90,000 |


Data integrity is ensured by the protection built into S3 itself: it has solid redundancy against hardware failures such as a dead hard drive.

The media server needs 4 TB of cache; this is the minimum size Veritas recommends. More is better, but we went with exactly that.

Summary

Previously, when a partner put 20 GB into our S3, we stored 60 GB, because we keep three geo-replicated copies of the data. Now far less data travels and is stored, which is good both for the network link and for storage billing.
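The storage arithmetic behind this can be written as a small Python sketch: raw capacity is the unique data left after deduplication multiplied by the number of copies. `replicas=3` reflects the three geo-replicated copies mentioned above. The 90% deduplication rate in the example is illustrative, taken from the range quoted earlier, and is not a measured result for a particular partner. The function name is hypothetical.

```python
def raw_capacity_gb(logical_gb: float, dedup_rate: float, replicas: int = 3) -> float:
    """Raw capacity used in the object store.

    logical_gb -- data the backup software wants to keep
    dedup_rate -- fraction removed by deduplication (0.0 = none, 0.9 = 90%)
    replicas   -- copies the storage keeps (three geo-replicated copies here)
    """
    if not 0.0 <= dedup_rate <= 1.0:
        raise ValueError("dedup_rate must be between 0 and 1")
    return logical_gb * (1.0 - dedup_rate) * replicas


if __name__ == "__main__":
    print(raw_capacity_gb(20, 0.0))            # 60.0: 20 GB without deduplication
    print(round(raw_capacity_gb(20, 0.9), 1))  # 6.0: the same 20 GB at 90% deduplication
```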

In this setup, the routes bypass the public Internet. You can also carry the traffic over an L2 VPN across the Internet, but it is better to place the media server at the provider's point of entry.

If you want to learn about these capabilities in our Russian data centers, or have questions about implementing this, ask in the comments or email ekorotkikh@croc.ru.

Source: https://habr.com/ru/post/461717/
