Docker + Laravel + RoadRunner = ❤

This post was written at the request of readers who periodically ask "How do I run an Illuminate / Symfony / MyOwn PSR-7 application in Docker?". I don't want to simply link to the post I wrote earlier, because my views on how to solve the problem have changed quite a lot.

Everything written below is my subjective experience and (as always) does not claim to be the only right solution, but some of the approaches and solutions may seem interesting and useful to you.

As the application I will again use Laravel, since it is the framework most familiar to me and quite widespread. Adapting this to other PSR-7-based frameworks or components is possible, but that is a different story.

Lessons learned

I would like to start with what turned out to be "not the best practices" in the approach from the previous article:

- The need to change the structure of files in the repository

- Using FPM. If we want performance from our applications, then one of the best decisions, even at the technology-selection stage, is to abandon it in favor of something faster and "adapted" to the fact that memory may leak. RoadRunner by lachezis could not be more timely here.

- A separate image with sources and assets. Although this approach lets us reuse the same image to build more complex routing of incoming requests (nginx in front serving static files, while dynamic requests are handled by another container into which a volume with the same sources is mounted, for better scaling), this scheme proved rather complicated to operate in production. What's more, RR serves static files perfectly well on its own, and if there is a lot of static content (or the resource can upload and display user-generated content), we move it to a CDN (the S3 + CloudFront + CloudFlare bundle works fine) and forget about this problem altogether.

- Complex CI. This became a real problem once we started actively piling logic ("building up meat") onto the build and automated testing stages. For someone who had not maintained this CI before, it became very difficult to change anything without fear of breaking something.

Now that we know which problems need to be fixed and how to fix them, let's get to it. The set of "developer tools" has not changed: it is still docker-ce, docker-compose and the powerful Makefile.

As a result, we get:

- A standalone application container that does not need any additional volumes mounted

- An example of using git hooks: dependencies are installed automatically after git pull, and pushing code is blocked if the tests fail (the hooks themselves are kept under git, of course)

- RoadRunner handling HTTP(S) requests

- Developers can still use dd(..) and dump(..) for debugging, and nothing breaks in their browser

- Tests can be run directly from the PhpStorm IDE, while actually being executed inside the application container

- CI builds images for us when a new application version tag is published

- A strict rule of maintaining the CHANGELOG.md and ENVIRONMENT.md files

Walking through the new approach

For a clear demonstration, I will break the whole process into several stages, with the changes of each stage made as a separate MR (after merging, all branches stay in place; links to the MRs are in the headings of the "steps"). The starting point is a Laravel application skeleton created with composer create-project laravel/laravel:

```shell
$ docker run \
    --rm -i \
    -v "$(pwd):/src" \
    -u "$(id -u):$(id -g)" \
    composer composer create-project --prefer-dist laravel/laravel \
    /src/laravel-in-docker-with-rr "5.8.*"
```

Step 1 - Dockerizing + RR

First of all, we need to teach the application to run in a container. For this we need a Dockerfile, a docker-compose.yml describing how to start and link the containers, and a Makefile to reduce an already simplified process to one or two commands.

Dockerfile

As the base image I use php:XXX-alpine, since it is the lightest one and contains everything needed to run. Moreover, every subsequent interpreter update comes down to changing the value in this one line (updating PHP has never been easier).

Composer and the RoadRunner binary are delivered into the container using a multi-stage build and COPY --from=...: this is very convenient, and all version-related values are not "scattered" around but sit at the beginning of the file. It works fast and does not depend on curl, git clone or make build. I maintain the 512k/roadrunner images; if you prefer, you can build the binary yourself.

An interesting story happened with the PS1 environment variable (responsible for the shell prompt): it turns out you can use emoji in it, and everything works locally, but if you try to start the image with a variable containing emoji in, say, Rancher, it will crash (in Swarm everything works without problems).

In the Dockerfile I also generate a self-signed SSL certificate to use for incoming HTTPS requests. Naturally, nothing prevents you from using a "normal" certificate instead.
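For illustration, the certificate step could look like the shell sketch below. The function name, the file names and the 365-day validity are my assumptions, not taken from the author's Dockerfile, and it relies on openssl being available in the build stage (on Alpine, for example, installed via apk add openssl).

```shell
#!/bin/sh
# Hypothetical helper: generate a self-signed certificate for local HTTPS.
# Usage: gen_self_signed_cert DIR [COMMON_NAME]
gen_self_signed_cert() {
    dir=$1
    cn=${2:-localhost}
    mkdir -p "$dir" || return 1
    # -x509 makes "req" emit a certificate instead of a signing request;
    # -nodes leaves the private key unencrypted, so the server can load it
    # without prompting for a passphrase.
    openssl req -x509 -nodes -newkey rsa:2048 -days 365 \
        -subj "/CN=$cn" \
        -keyout "$dir/selfsigned.key" \
        -out "$dir/selfsigned.crt" 2>/dev/null
}
```

The resulting .crt and .key paths are what you would then reference in the RoadRunner config for HTTPS.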

I would also like to say a few words about this fragment:

```dockerfile
COPY ./composer.* /app/

RUN set -xe \
 && composer install --no-interaction --no-ansi --no-suggest --prefer-dist \
      --no-autoloader --no-scripts \
 && composer install --no-dev --no-interaction --no-ansi --no-suggest \
      --prefer-dist --no-autoloader --no-scripts
```

The idea here is this: composer.lock and composer.json are copied into the image in a separate layer, after which all the dependencies they describe are installed. This way, on subsequent image builds with --cache-from, if the set and versions of the dependencies have not changed, composer install is not executed at all and the layer is taken from the cache, saving build time and traffic (thanks to jetexe for the idea).

composer install runs twice (the second time with --no-dev) to "warm up" the Composer cache with the dev dependencies, so that when CI installs all the dependencies to run the tests, they are taken from the Composer cache already present in the image instead of being fetched from distant galaxies.

With the last RUN instruction we print the versions of the installed software and the list of PHP modules, both as a record in the build logs and to make sure that "it is at least there and somehow starts".

I also use my own entrypoint, because before starting the application somewhere in a cluster I really want to check that the services it depends on (DB, Redis, RabbitMQ and others) are available.
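A minimal sketch of such a dependency check for an entrypoint script. The probe uses bash's /dev/tcp pseudo-device, which is an assumption on my part: the stock php:*-alpine image does not ship bash, so there you would either install it or swap in another probe such as nc -z. The function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical entrypoint helpers.

# tcp_probe HOST PORT: succeed if a TCP connection to HOST:PORT can be opened.
# Delegates to bash because /dev/tcp is a bash feature, not a real file.
tcp_probe() {
    bash -c ': > "/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# wait_for HOST PORT TIMEOUT_SECONDS: poll once per second until the port
# accepts connections, or fail once the deadline has passed.
wait_for() {
    host=$1 port=$2 timeout=$3
    deadline=$(( $(date +%s) + timeout ))
    until tcp_probe "$host" "$port"; do
        if [ "$(date +%s)" -ge "$deadline" ]; then
            echo "timed out waiting for $host:$port" >&2
            return 1
        fi
        sleep 1
    done
    echo "$host:$port is available" >&2
}
```

In the entrypoint this would be chained before handing over to the main process, e.g. wait_for db 5432 30 && wait_for redis 6379 30 && exec "$@", where db and redis stand for hypothetical docker-compose service names.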

RoadRunner

To integrate RoadRunner with a Laravel application, a package was written that reduces the whole integration to a couple of shell commands (run inside the container, which you enter with docker-compose run app sh):

```shell
$ composer require avto-dev/roadrunner-laravel "^2.0"
$ ./artisan vendor:publish --provider='AvtoDev\RoadRunnerLaravel\ServiceProvider' --tag=rr-config
```

Add APP_FORCE_HTTPS=true to the ./docker/docker-compose.env file, and specify the path to the SSL certificate inside the container in the .rr*.yaml files.

To be able to use dump(..) and dd(..) and still have everything work, there is another package: avto-dev/stacked-dumper-laravel. All it requires is adding a prefix to these helpers, namely \dev\dd(..) and \dev\dump(..) respectively. Without it, you will see an error like the one below (by default RoadRunner talks to its PHP workers over their standard input/output, so anything printed directly corrupts that stream):

worker error: invalid data found in the buffer (possible echo)
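Since the unprefixed helpers break the worker protocol, it can be handy to catch them before they reach a commit. A rough sketch of such a check (my own addition, not part of stacked-dumper-laravel): grep for dd( and dump( calls that are not preceded by the \dev\ prefix, a method arrow or a static call. It is a regex heuristic, not a PHP parser, so expect the occasional false positive or miss.

```shell
#!/bin/sh
# Hypothetical check: find_raw_dumps FILE...
# Prints file:line entries that call dd( or dump( without the \dev\ prefix.
# Exit status follows grep: 0 if something was found (so a hook can reject
# the commit), 1 if the files are clean.
find_raw_dumps() {
    # The bracket expression rejects calls preceded by a backslash (\dev\dd),
    # an identifier character (add, my_dump), "->" or "::" (method calls).
    grep -nHE '(^|[^\\A-Za-z0-9_>:])(dd|dump)\(' "$@"
}
```

A pre-commit hook could pass it the staged PHP files (git diff --cached --name-only -- '*.php') and abort when it returns 0; guard against an empty file list, otherwise grep falls back to reading stdin.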

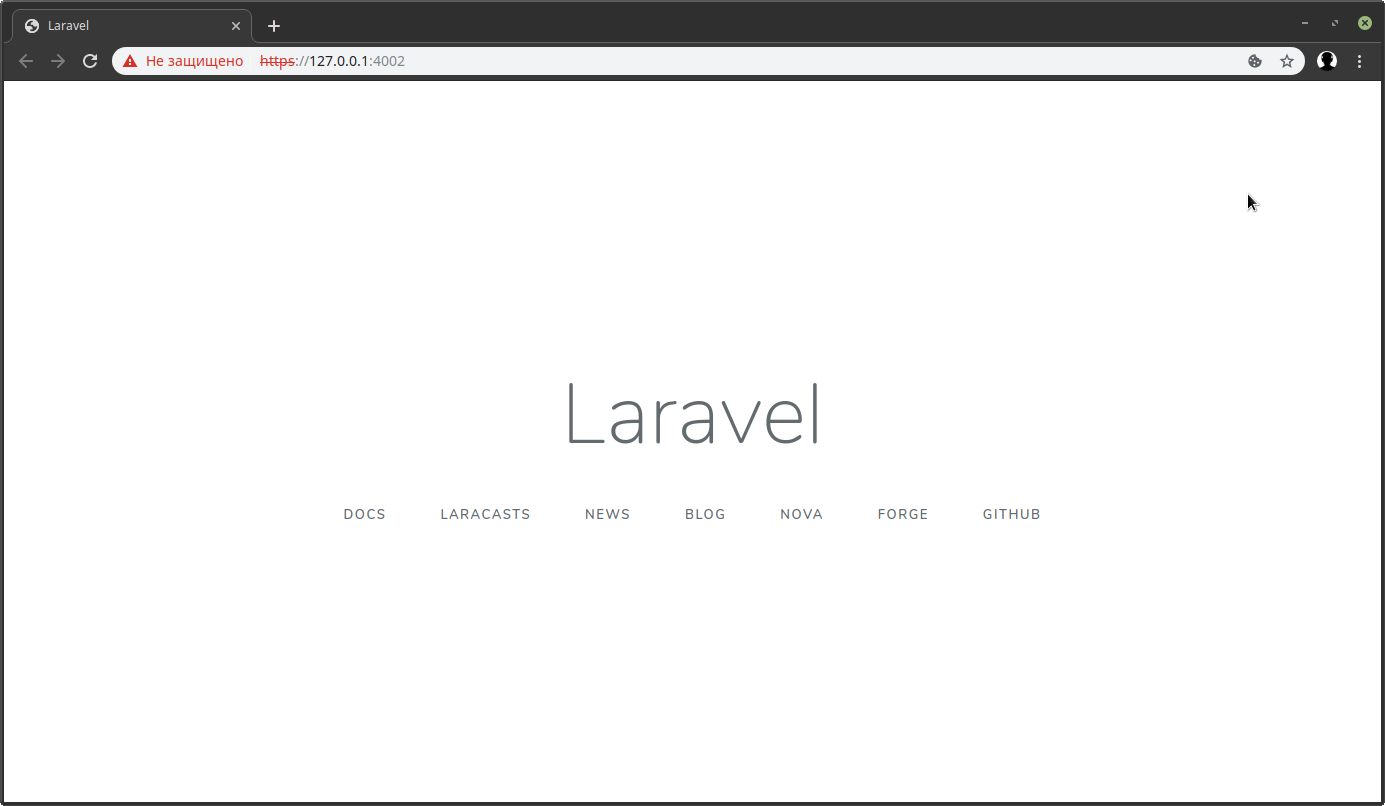

After all these manipulations, run docker-compose up -d and voilà:

The PostgreSQL database, Redis and the RoadRunner workers have successfully started in their containers.

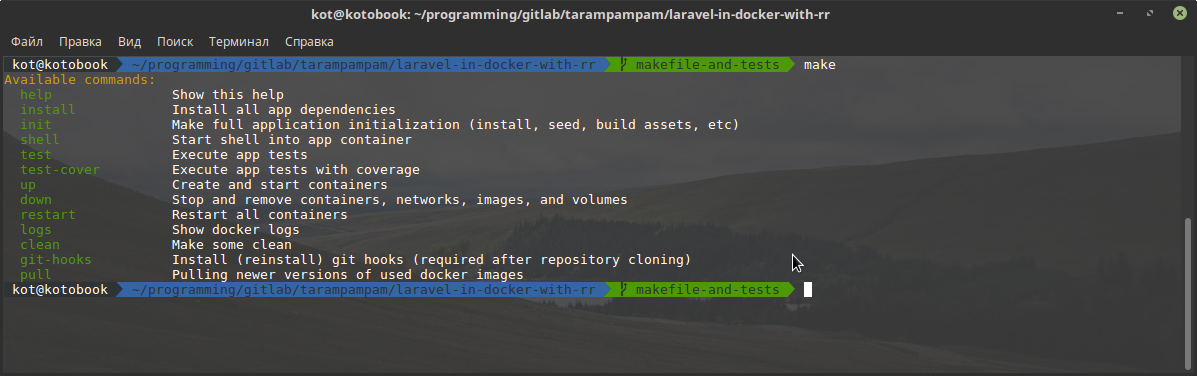

Step 2 - Makefile and Tests

As I wrote earlier, Makefile is a very underrated tool. Dependent targets, your own syntactic sugar, a 99% chance that make is already installed on a Linux/macOS development machine, autocompletion out of the box: that is just a short list of its advantages.

After adding it to the project and running make without arguments, we see the list of available targets.
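Such a help screen is usually produced by scanning the Makefile for lines of the form target: ## description, the same convention the git-hooks target in this article uses. A standalone shell sketch of that idiom (the function name is hypothetical, and this is a common pattern, not necessarily the author's exact implementation):

```shell
#!/bin/sh
# Hypothetical helper: make_help MAKEFILE
# Prints "target  description" for every line that looks like
# "target: [prerequisites] ## description"; other targets are skipped.
make_help() {
    awk 'BEGIN { FS = ":.*## " }
         /^[a-zA-Z0-9_-]+:.*## / { printf "%-20s %s\n", $1, $2 }' "$1"
}
```

Inside a Makefile the same awk program goes into a help recipe, with every $ doubled ($$1, $$2) and $(MAKEFILE_LIST) as the input; declaring help as the first target makes a bare make print it, since make runs the first target by default.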

To run the unit tests, we can either run make test, or get a shell inside the application container (make shell) and run composer phpunit. To get a coverage report, just run make test-cover: before the tests start, xdebug and its dependencies are installed into the container, and then the tests are launched (since this is not done often and not by CI, this approach seems better than keeping a separate image with all the dev bells and whistles).

Git hooks

In our case, hooks play two important roles: they prevent pushing code whose tests fail to origin, and they automatically install all the necessary dependencies if, after pulling changes to your machine, it turns out that composer.lock has changed. The Makefile has a separate target for this:

```makefile
cwd = $(shell pwd)

git-hooks: ## Install (reinstall) git hooks (required after repository cloning)
	-rm -f "$(cwd)/.git/hooks/pre-push" "$(cwd)/.git/hooks/pre-commit" "$(cwd)/.git/hooks/post-merge"
	ln -s "$(cwd)/.gitlab/git-hooks/pre-push.sh" "$(cwd)/.git/hooks/pre-push"
	ln -s "$(cwd)/.gitlab/git-hooks/pre-commit.sh" "$(cwd)/.git/hooks/pre-commit"
	ln -s "$(cwd)/.gitlab/git-hooks/post-merge.sh" "$(cwd)/.git/hooks/post-merge"
```

Running make git-hooks simply removes the existing hooks and puts the ones from the .gitlab/git-hooks directory in their place. Their source can be viewed at this link.
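The hook scripts themselves are only linked from the original post, so here is a hypothetical sketch of what post-merge.sh and pre-push.sh could contain. It assumes the install and test Makefile targets mentioned in this article; ORIG_HEAD is set by git to the commit that was checked out before the merge performed by git pull.

```shell
#!/bin/sh
# Hypothetical hook helpers.

# lock_changed: read changed paths (one per line) on stdin and succeed if
# composer.lock is among them.
lock_changed() {
    grep -qxF 'composer.lock'
}

# Body of a post-merge hook: reinstall dependencies only when the lock file
# differs between the pre-merge commit (ORIG_HEAD) and the new HEAD.
post_merge() {
    if git diff-tree -r --name-only --no-commit-id ORIG_HEAD HEAD | lock_changed; then
        echo "composer.lock has changed, installing dependencies..." >&2
        make install
    fi
}

# Body of a pre-push hook: a non-zero exit status makes git abort the push.
pre_push() {
    if ! make test; then
        echo "Tests failed, push aborted" >&2
        return 1
    fi
}
```

Each .sh file would define these functions and end with a call to the relevant one (e.g. pre_push), whose return status then becomes the hook's exit status.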

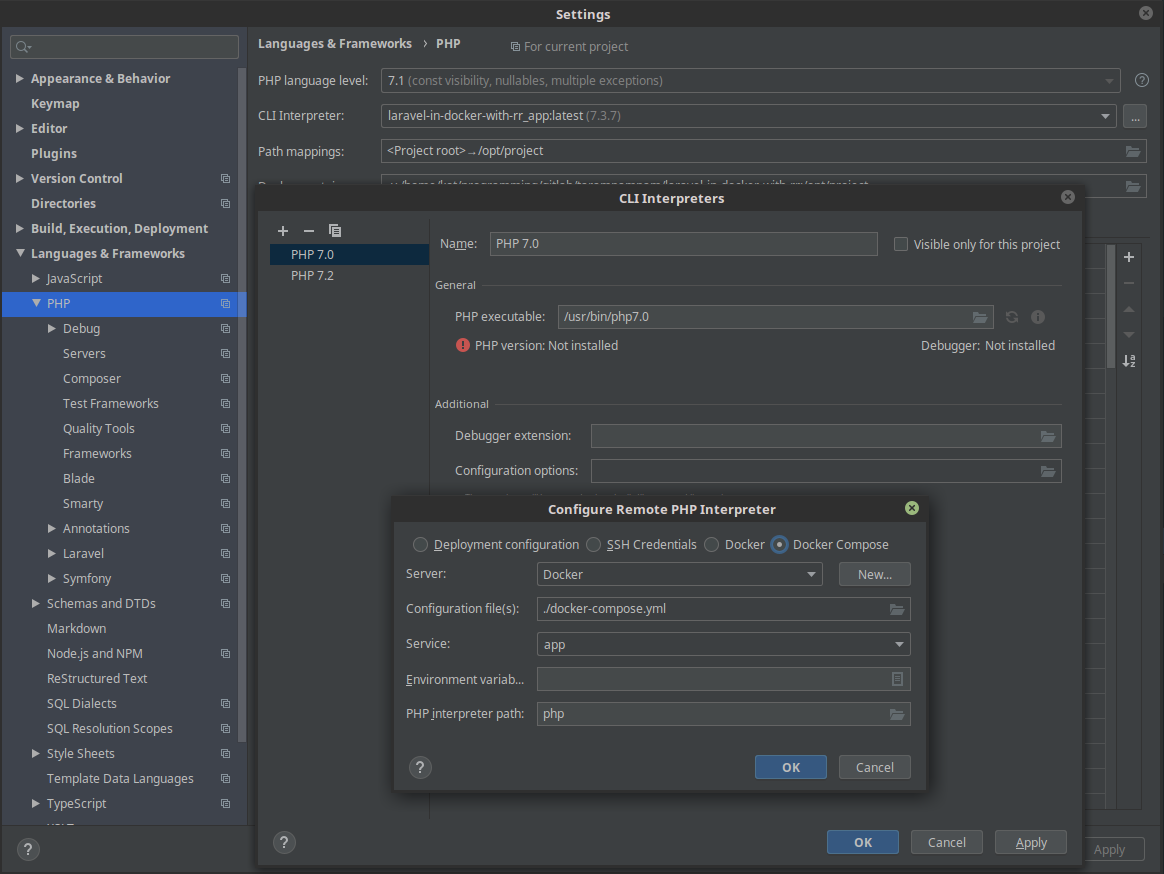

Running tests from PhpStorm

Although it is quite simple and convenient, for a long time I ran ./vendor/bin/phpunit --group=foo by hand instead of just pressing a hotkey right while writing a test or the code it covers.

Go to File > Settings > Languages & Frameworks > PHP > CLI Interpreter > [...] > [+] > From Docker, Vagrant, VM, Remote. Select Docker Compose, and set the service name to app.

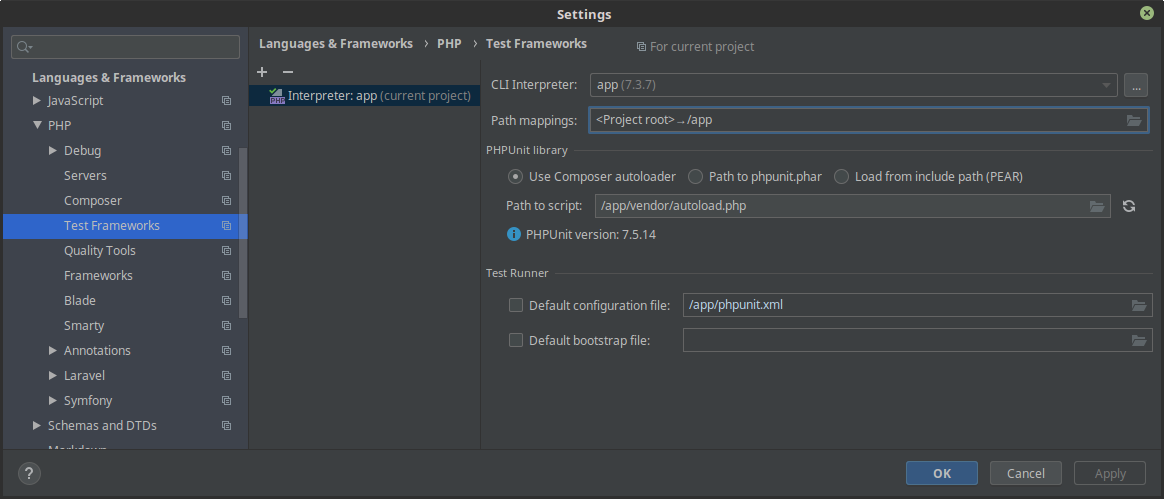

The second step is to tell PHPUnit to use the interpreter from the container: File > Settings > Test Frameworks > [+] > PHPUnit by Remote Interpreter, then select the remote interpreter created earlier. In the Path to script field specify /app/vendor/autoload.php, and in Path mappings map the project root directory to /app.

Now we can run tests directly from the IDE, using the interpreter inside the application image, by pressing Ctrl + Shift + F10 (the default keymap on Linux).

Step 3 - Automation

All that remains is to automate running the tests and building the image. To do this, create a .gitlab-ci.yml file in the root directory of the application with the following contents. The main idea of this configuration is to be as simple as possible without losing functionality.

The image is built on every branch, on every commit. Thanks to --cache-from, rebuilding the image after a new commit is very fast. Rebuilding is needed because this way every branch has an image with the changes made in that branch, so nothing prevents us from rolling it out to Swarm / k8s / etc. to make sure "live" that everything works, and works as it should, even before merging into master.

After the build, we run the unit tests and check that the application starts in the container by sending curl requests to the health-check endpoint (this step is optional, but this check has helped me a lot several times).
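The health check boils down to retrying an HTTP request until the freshly started container answers. A minimal sketch; the function names, the attempt count and the delay are hypothetical, and the URL you pass depends on your RoadRunner config and routes.

```shell
#!/bin/sh
# Hypothetical helper: retry ATTEMPTS DELAY CMD...
# Runs CMD until it succeeds, sleeping DELAY seconds between tries;
# fails once ATTEMPTS tries have been used up.
retry() {
    attempts=$1 delay=$2
    shift 2
    n=1
    until "$@"; do
        if [ "$n" -ge "$attempts" ]; then
            echo "giving up after $n attempts: $*" >&2
            return 1
        fi
        n=$((n + 1))
        sleep "$delay"
    done
}

# Hypothetical helper: health_check URL
# -f makes curl fail on HTTP 4xx/5xx responses, not only on network errors.
health_check() {
    retry 10 2 curl -fsS -o /dev/null "$1"
}
```

In the CI job this would run right after starting the container, e.g. health_check with the URL of your health-check endpoint.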

To cut a release, just publish a tag of the form vX.XX (if you also follow semantic versioning, even better): CI will build the image, run the tests, and perform the actions you specify in deploy to somewhere.
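If release tags follow semantic versioning, the pipeline can reject anything that does not look like vMAJOR.MINOR.PATCH. A sketch of such a check with a hypothetical function name; it deliberately ignores pre-release and build suffixes such as -rc.1, which you may want to allow.

```shell
#!/bin/sh
# Hypothetical helper: is_release_tag TAG
# Succeeds for tags like v1.2.3 (no leading zeros, no pre-release or build
# suffixes) and fails for anything else.
is_release_tag() {
    printf '%s\n' "$1" |
        grep -Eq '^v(0|[1-9][0-9]*)\.(0|[1-9][0-9]*)\.(0|[1-9][0-9]*)$'
}
```

In GitLab CI the tag name is available as CI_COMMIT_TAG, so a release job could start with is_release_tag "$CI_COMMIT_TAG" || exit 1.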

Don't forget to restrict, in the project settings (if possible), the ability to publish tags to the people who are allowed to make releases.

CHANGELOG.md and ENVIRONMENT.md

Before accepting any MR, the reviewer must check the CHANGELOG.md and ENVIRONMENT.md files without fail. The first one is more or less self-explanatory, so let me explain the second. This file describes all the environment variables the application container responds to. That is, if a developer adds or removes support for an environment variable, this must be reflected in this file. And when the question "we urgently need to override this and that" comes up, nobody frantically digs through documentation or source code; they just look in a single file. Very convenient.
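Reviewers can be helped a little by a script that flags variables nobody documented. A hypothetical sketch: it takes a KEY=value env file, such as the ./docker/docker-compose.env file mentioned earlier, and prints every key that does not appear as a whole word in ENVIRONMENT.md. It only sees variables listed in that file, not ones read directly in code.

```shell
#!/bin/sh
# Hypothetical helper: undocumented_vars ENV_FILE DOC_FILE
# Prints each variable defined in ENV_FILE (lines of the form KEY=value;
# comments and blank lines are ignored) that is not mentioned as a whole
# word anywhere in DOC_FILE.
undocumented_vars() {
    grep -E '^[A-Za-z_][A-Za-z0-9_]*=' "$1" | cut -d= -f1 |
        while read -r name; do
            grep -qw -- "$name" "$2" || echo "$name"
        done
}
```

Running it in CI and failing on non-empty output turns the review rule into an automatic check, e.g. [ -z "$(undocumented_vars docker/docker-compose.env ENVIRONMENT.md)" ].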

Conclusion

In this article we walked through a fairly painless process of moving application development and launching into Docker, integrated RoadRunner, and used a simple CI script to automate building and testing the application image.

After cloning the repository, developers just need to run make git-hooks && make install && make up and start writing useful code. Our fellow *ops folks just take the image with the required tag and roll it out to their clusters.

Naturally, this scheme is simplified, and on production projects I add a lot more on top of it, but if the approach described in this article helps someone, I'll know my time was not wasted.


Source: https://habr.com/ru/post/461687/
