What factors can predict the success of a game on Steam?

On Reddit, I have seen many discussions, comments and questions about what determines a game's success. How important is quality? Is pre-release popularity really the only deciding factor? Do demos help or hurt? If a game launches poorly, how likely is it to recover? Is it possible to predict a game's sales, even roughly, before its release?

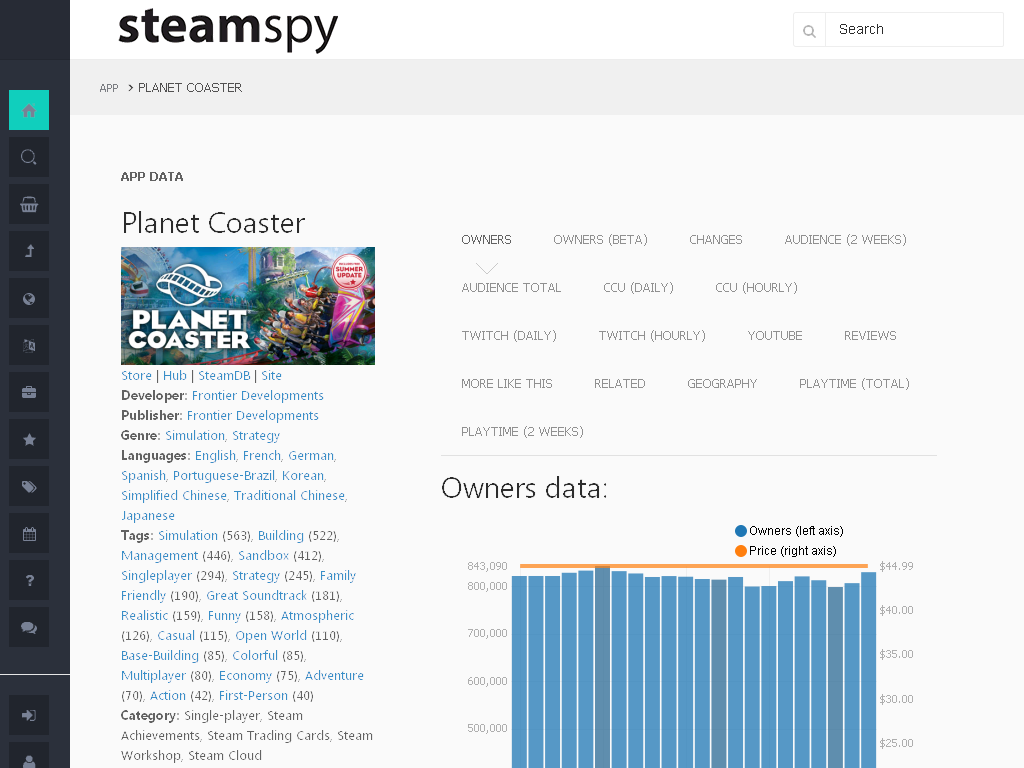

While preparing to release my own game, I spent a lot of time tracking new releases in an attempt to answer these questions. I compiled a spreadsheet recording each game's subscribers, whether it had early access, and its number of reviews after the first week, month and quarter.

I have now decided to share this data in the hope that it will help other developers understand and predict the sales of their games.


First, some notes on the data:

- One of the most important data sources is the number of reviews on Steam. There is reliable evidence that it correlates strongly with the number of copies sold. A ratio of “50 sales per Steam review” is often cited, but the range is quite wide: most Steam games seem to fall between 25 and 120 sales per review, with some outliers. Games with very few reviews are also more likely to be outliers in this respect. My own game is the only one for which I have exact sales figures. You can read my long post about its release on Reddit, but the key numbers here are that I sold 1,587 copies in the first week and 3,580 copies in the first quarter.

- Total number of games in the sample: 115.

- I chose the games semi-randomly from the Popular Upcoming and All Upcoming sections. This skews the sample towards popular games, which was deliberate: I wanted a diverse selection, but not one completely dominated by games with zero sales.

- Games are ordered by release date, which ranges from 10/26/18 to 12/20/18.
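The review-to-sales ratio from the first note can be turned into a rough estimate. The sketch below is only an illustration: the function name `estimate_sales_range` is made up here, and the 25, 50 and 120 multipliers are the rule-of-thumb figures quoted above, not measured values.

```python
def estimate_sales_range(reviews, low=25, typical=50, high=120):
    """Return (low, typical, high) sales estimates for a Steam review count.

    The multipliers are the rough sales-per-review figures quoted in the
    text; real games can fall outside this range.
    """
    if reviews < 0:
        raise ValueError("reviews must be non-negative")
    return reviews * low, reviews * typical, reviews * high


# Example: a game with 100 reviews
print(estimate_sales_range(100))  # (2500, 5000, 12000)
```

The wide spread between the low and high figures is the point: a review count gives an order of magnitude for sales, not a precise number.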

| Game | Price | Release discount | Week guess | Week actual | 3 months | 3 months / week | Followers | Early access | Demo | Review score |
|---|---|---|---|---|---|---|---|---|---|---|
| Pit of doom | 9.99 | 0 | 7 | 27 | 43 | 1.592592593 | 295 | Y | N | 0.8 |
| Citrouille | 9.99 | 0.2 | 16 | 8 | 12 | 1.5 | 226 | N | N | |
| Corpse party: book | 14.99 | 0.1 | 32 | 40 | 79 | 1.975 | 1015 | N | N | 0.95 |
| Call of cthulhu | 44.99 | 0 | 800 | 875 | 1595 | 1.822857143 | 26600 | N | N | 0.74 |
| On space | 0.99 | 0.4 | 0 | 0 | 0 | | 4 | N | N | |
| Orphan | 14.99 | 0 | 50 | 0 | 8 | | 732 | N | N | |
| Black bird | 19.99 | 0 | 20 | 13 | 34 | 2.615384615 | 227 | N | N | |
| Gloom | 6.99 | 0 | 20 | 8 | 17 | 2.125 | 159 | N | N | |
| Gilded rails | 5.99 | 0.35 | 2 | 3 | 7 | 2.333333333 | 11 | N | Y | |
| The quiet man | 14.99 | 0.1 | 120 | 207 | 296 | 1.429951691 | 5596 | N | N | 0.31 |
| Kartcraft | 19.99 | 0.1 | 150 | 90 | 223 | 2.477777778 | 7691 | Y | N | 0.84 |
| The other half | 7.99 | 0 | 2 | 3 | 27 | 9 | 91 | N | Y | 0.86 |
| Parabolus | 14.99 | 0.15 | 0 | 0 | 0 | | 16 | N | Y | |
| Yet Another Tower Defense | 1.99 | 0.4 | 20 | 22 | 38 | 1.727272727 | 396 | N | N | 0.65 |
| Galaxy Squad | 9.99 | 0.25 | | 8 | 42 | 5.25 | 3741 | Y | N | 0.87 |
| Swords and Soldiers 2 | 14.99 | 0.1 | 65 | 36 | 63 | 1.75 | 1742 | N | N | 0.84 |
| Spitkiss | 2.99 | 0 | 3 | 1 | 2 | 2 | 63 | N | N | |
| Holy potatoes | 14.99 | 0 | 24 | 11 | 22 | 2 | 617 | N | N | 0.7 |
| Kursk | 29.99 | 0.15 | 90 | 62 | 98 | 1.580645161 | 2394 | N | N | 0.57 |
| SimpleRockets 2 | 14.99 | 0.15 | 90 | 142 | 272 | 1.915492958 | 3441 | Y | N | 0.85 |
| Progress | 14.99 | 0.15 | 160 | 44 | 75 | 1.704545455 | 7304 | Y | N | 0.67 |
| Kynseed | 9.99 | 0 | 600 | 128 | 237 | 1.8515625 | 12984 | Y | N | 0.86 |
| 11-11 Memories | 29.99 | 0 | 30 | 10 | 69 | 6.9 | 767 | N | N | 0.96 |
| Rage in peace | 12.99 | 0.1 | 15 | 10 | 42 | 4.2 | 377 | N | N | 0.85 |
| One hour one life | 19.99 | 0 | 12 | 153 | 708 | 4.62745098 | 573 | N | N | 0.81 |
| Optica | 9.99 | 0 | 0 | 2 | 3 | 1.5 | 18 | N | N | |
| Cybarian | 5.99 | 0.15 | 8 | 4 | 18 | 4.5 | 225 | N | N | |
| Zeon 25 | 3.99 | 0.3 | 3 | 11 | 12 | 1.090909091 | 82 | Y | N | |
| Of gods and men | 7.99 | 0.4 | 3 | 10 | 18 | 1.8 | 111 | N | Y | |
| Welcome to princeland | 4.99 | 0.1 | 1 | 15 | 55 | 3.666666667 | 30 | N | N | 0.85 |
| Zero Caliber VR | 24.99 | 0.1 | 100 | 169 | 420 | 2.485207101 | 5569 | Y | N | 0.73 |
| Hellsign | 14.99 | 0 | 100 | 131 | 334 | 2.549618321 | 3360 | Y | N | 0.85 |
| Thief simulator | 19.99 | 0.15 | 400 | 622 | 1867 | 3.001607717 | 10670 | N | N | 0.81 |
| Last stanza | 7.99 | 0.1 | 8 | 2 | 4 | 2 | 228 | N | Y | |
| Evil Bank Manager | 11.99 | 0.1 | | 106 | 460 | 4.339622642 | 8147 | Y | N | 0.78 |
| Oppai puzzle | 0.99 | 0.3 | | 36 | 93 | 2.583333333 | 54 | N | N | 0.92 |
| Hexen hegemony | 9.99 | 0.15 | 3 | 1 | 5 | 5 | 55 | Y | N | |
| Blokin | 2.99 | 0 | 0 | 0 | 0 | 0 | 10 | N | N | |
| Light Fairytale Ep 1 | 9.99 | 0.1 | 80 | 23 | 54 | 2.347826087 | 4694 | Y | N | 0.89 |
| The last sphinx | 2.99 | 0.1 | 0 | 0 | 1 | 0 | 17 | N | N | |
| Glassteroids | 9.99 | 0.2 | 0 | 0 | 0 | 0 | 5 | Y | N | |
| Hitman 2 | 59.99 | 0 | 2000 | 2653 | 3677 | 1.385978138 | 52226 | N | N | 0.88 |
| Golf peaks | 4.99 | 0.1 | 1 | 8 | 25 | 3.125 | 46 | N | N | 1 |
| Sipho | 13.99 | 0 | 24 | 5 | 14 | 2.8 | 665 | Y | N | |
| Distraint 2 | 8.99 | 0.1 | 40 | 104 | 321 | 3.086538462 | 1799 | N | N | 0.97 |
| Healing harem | 12.99 | 0.1 | 24 | 10 | 15 | 1.5 | 605 | N | N | |
| Spark five | 2.99 | 0.3 | 0 | 0 | 0 | 0 | 7 | N | N | |
| Bad Dream: Fever | 9.99 | 0.2 | 30 | 78 | 134 | 1.717948718 | 907 | N | N | 0.72 |
| Underworld ascendant | 29.99 | 0.15 | 200 | 216 | 288 | 1.333333333 | 8870 | N | N | 0.34 |
| Reentry | 19.99 | 0.15 | 8 | 24 | 78 | 3.25 | 202 | Y | N | 0.95 |
| Zvezda | 5.99 | 0 | 2 | 0 | 0 | 0 | 25 | Y | Y | |
| Space gladiator | 2.99 | 0 | 0 | 1 | 2 | 2 | 5 | N | N | |
| Bad north | 14.99 | 0.1 | 500 | 360 | 739 | 2.052777778 | 15908 | N | N | 0.8 |
| Sanctus mortort | 9.99 | 0.15 | 3 | 3 | 3 | 1 | 84 | N | Y | |
| The occluder | 1.99 | 0.2 | 1 | 1 | 1 | 1 | 13 | N | N | |
| Dark Fantasy: Jigsaw | 2.99 | 0.2 | 1 | 9 | 36 | 4 | 32 | N | N | 0.91 |
| Farming simulator 19 | 34.99 | 0 | 1500 | 3895 | 5759 | 1.478562259 | 37478 | N | N | 0.76 |
| Don't Forget Our Esports Dream | 14.99 | 0.13 | 3 | 16 | 22 | 1.375 | 150 | N | N | 1 |
| Space toads mayhem | 3.99 | 0.15 | 1 | 2 | 3 | 1.5 | 18 | N | N | |
| Cattle call | 11.99 | 0.1 | 10 | 19 | 53 | 2.789473684 | 250 | Y | N | 0.71 |
| Raalf | 9.99 | 0.2 | 0 | 0 | 2 | 0 | 6 | N | N | |
| Elite archery | 0.99 | 0.4 | 0 | 2 | 3 | 1.5 | 5 | Y | N | |
| Evidence of life | 4.99 | 0 | 0 | 2 | 4 | 2 | 10 | N | N | |
| Trinity vr | 4.99 | 0 | 2 | 8 | 15 | 1.875 | 61 | N | N | |
| Quiet as a stone | 9.99 | 0.1 | 1 | 1 | 4 | 4 | 42 | N | N | |
| Overdungeon | 14.99 | 0 | 3 | 86 | 572 | 6.651162791 | 77 | Y | N | 0.91 |
| Protocol | 24.99 | 0.15 | 60 | 41 | 117 | 2.853658537 | 1764 | N | N | 0.68 |
| Scraper: First Strike | 29.99 | 0 | 3 | 3 | 15 | 5 | 69 | N | N | |
| Experiment Gone Rogue | 16.99 | 0 | 1 | 1 | 5 | 5 | 27 | Y | N | |
| Emerald shores | 9.99 | 0.2 | 0 | 1 | 2 | 2 | 12 | N | N | |
| Age of Civilizations II | 4.99 | 0 | 600 | 1109 | 2733 | 2.464382326 | 18568 | N | N | 0.82 |
| Dereliction | 4.99 | 0 | 0 | 0 | 0 | #DIV/0! | 18 | N | N | |
| Poopy philosophy | 0.99 | 0 | 0 | 6 | 10 | 1.666666667 | 6 | N | N | |
| Noce | 17.99 | 0.1 | 1 | 3 | 4 | 1.333333333 | 35 | N | N | |
| Qu-tros | 2.99 | 0.4 | 0 | 3 | 7 | 2.333333333 | 4 | N | N | |
| Mosaics Galore. Challenging Journey | 4.99 | 0.2 | 1 | 1 | 8 | 8 | 14 | N | N | |
| Zquirrels jump | 2.99 | 0.4 | 0 | 1 | 4 | 4 | 9 | N | N | |
| Darksiders III | 59.99 | 0 | 2400 | 1721 | 2708 | 1.573503777 | 85498 | N | N | 0.67 |
| R-Type Dimensions Ex | 14.99 | 0.2 | 10 | 48 | 64 | 1.333333333 | 278 | N | N | 0.92 |
| Artifact | 19.99 | 0 | 7000 | 9700 | 16584 | 1.709690722 | 140000 | N | N | 0.53 |
| Crimson Keep | 14.99 | 0.15 | 20 | 5 | 6 | 1.2 | 367 | N | N | |
| Rival megagun | 14.99 | 0 | 35 | 26 | 31 | 1.192307692 | 818 | N | N | |
| Santa's workshop | 1.99 | 0.1 | 3 | 1 | 1 | 1 | 8 | N | N | |
| Hentai shadow | 1.99 | 0.3 | | 2 | 12 | 6 | 14 | N | N | |
| Ricky runner | 12.99 | 0.3 | 3 | 6 | 13 | 2.166666667 | 66 | Y | N | 0.87 |
| Pro fishing simulator | 39.99 | 0.15 | 24 | 20 | 19 | 0.95 | 609 | N | N | 0.22 |
| Broken reality | 14.99 | 0.1 | 60 | 58 | 138 | 2.379310345 | 1313 | N | Y | 0.98 |
| Rapture rejects | 19.99 | 0 | 200 | 82 | 151 | 1.841463415 | 9250 | Y | N | 0.64 |
| Lost cave | 19.99 | 0 | 3 | 8 | 11 | 1.375 | 43 | Y | N | |
| Epic Battle Fantasy 5 | 14.99 | 0 | 300 | 395 | 896 | 2.26835443 | 4236 | N | N | 0.97 |
| Ride 3 | 49.99 | 0 | 75 | 161 | 371 | 2.304347826 | 1951 | N | N | 0.74 |
| Escape Doodland | 9.99 | 0.2 | 25 | 16 | 19 | 1.1875 | 1542 | N | N | |
| Hillbilly apocalypse | 5.99 | 0.1 | 0 | 1 | 2 | 2 | 8 | N | N | |
| X4 | 49.99 | 0 | 1500 | 2638 | 4303 | 1.63115997 | 38152 | N | N | 0.7 |
| Splotches | 9.99 | 0.15 | 0 | 2 | 1 | 0.5 | 10 | N | N | |
| Above the fold | 13.99 | 0.15 | 5 | 2 | 6 | 3 | 65 | Y | N | |
| The seven chambers | 12.99 | 0.3 | 3 | 0 | 0 | #DIV/0! | 55 | N | N | |
| Terminal conflict | 29.99 | 0 | 5 | 4 | 11 | 2.75 | 125 | Y | N | |
| Just cause 4 | 59.99 | 0 | 2400 | 2083 | 3500 | 1.680268843 | 50000 | N | N | 0.34 |
| Grapple force rena | 14.99 | 0 | 11 | 12 | 29 | 2.416666667 | 321 | N | Y | |
| Beholder 2 | 14.99 | 0.1 | | 479 | 950 | 1.983298539 | 16000 | N | N | 0.84 |
| Blueprint word | 1.99 | 0 | | 12 | 15 | 1.25 | 244 | N | Y | |
| Aeon of sands | 19.99 | 0.1 | 20 | 12 | 25 | 2.083333333 | 320 | N | N | |
| Oakwood | 4.99 | 0.1 | | 32 | 68 | 2.125 | 70 | N | N | 0.82 |
| Endhall | 4.99 | 0 | 4 | 22 | 42 | 1.909090909 | 79 | N | N | 0.84 |
| Dr. Cares - Family Practice | 12.99 | 0.25 | 6 | 3 | 8 | 2.666666667 | 39 | N | N | |
| Treasure hunter | 16.99 | 0.15 | 200 | 196 | 252 | 1.285714286 | 4835 | N | N | 0.6 |
| Forex Trading | 1.99 | 0.4 | 7 | 10 | 14 | 1.4 | 209 | N | N | |
| Ancient frontier | 14.99 | 0 | 24 | 5 | 16 | 3.2 | 389 | N | N | |
| Fear the night | 14.99 | 0.25 | 25 | 201 | 440 | 2.189054726 | 835 | Y | N | 0.65 |
| Subterraneus | 12.99 | 0.1 | 4 | 0 | 3 | #DIV/0! | 82 | N | N | |
| Starcom: Nexus | 14.99 | 0.15 | | 53 | 119 | 2.245283019 | 1140 | Y | N | 0.93 |
| Subject 264 | 14.99 | 0.2 | 25 | 2 | 3 | 1.5 | 800 | N | N | |
| Gris | 16.9 | 0 | 100 | 1484 | 4650 | 3.133423181 | 5779 | N | N | 0.96 |
| Exiled to the void | 7.99 | 0.3 | 9 | 4 | 11 | 2.75 | 84 | Y | N | |

Column explanation

- Release discount: the launch-week discount (0.25 = 25% off).

- Week guess: my assumption, made before the game's release, of how many Steam user reviews it would have exactly one week after launch.

- Week actual: the number of reviews the game actually had after 1 week.

- 3 months: the number of reviews the game had after 3 months.

- 3 months / week: the number of reviews after 3 months divided by the number after 1 week.

- Followers: the number of subscribers to the game's Steam community group before release. For some games this was recorded right before release, for others a week before.

- Review score: the percentage of positive reviews on Steam after one month. A game needs at least 20 reviews to get a score.

Question 1: can quality predict success?

I recently read a post claiming that the main success metric for an indie game is its quality.

Quality is, of course, subjective. The most obvious way to measure it objectively for Steam games is the percentage of positive reviews, that is, the share of buyers' reviews that rate the game positively. I excluded all games that did not have at least 20 reviews in the first month, which reduced the sample to 56 games.

The Pearson correlation between a game's review score and its number of reviews three months after release was -0.2. But 0.2 (in either direction) is not a strong correlation. More importantly, Pearson correlation can swing widely when the data contain large outliers. Looking at the games themselves, the effect turns out to be an outlier artifact. Literally: Valve's Artifact had by far the most reviews after three months and one of the lowest scores (53% at the time). When I removed this game from the data, the correlation dropped to essentially zero.

An alternative measure, the Spearman coefficient, which correlates ranks and so reduces the effect of large outliers, showed a similar result.

Conclusion: if a correlation exists between a game's quality (measured by its Steam review score) and its first-quarter sales (measured by total review count), it is too small to detect in this data.
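To make the difference between the two coefficients concrete, here is a minimal pure-Python sketch. The helper names (`pearson`, `ranks`, `spearman`) are my own, and the six rows are a hand-picked subset of the table, so the printed values will not match the figures quoted for the full 56-game sample. It only illustrates how a single extreme row such as Artifact can pull the Pearson coefficient, while the rank-based coefficient is less sensitive to it.

```python
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def ranks(values):
    """Rank values from 1 to n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def spearman(xs, ys):
    """Spearman rank correlation: the Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


# (review score, reviews after 3 months) for a few rows of the table
sample = [
    (0.74, 1595),   # Call of Cthulhu
    (0.88, 3677),   # Hitman 2
    (0.76, 5759),   # Farming Simulator 19
    (0.96, 4650),   # Gris
    (0.97, 321),    # Distraint 2
    (0.53, 16584),  # Artifact
]
scores = [s for s, _ in sample]
reviews = [r for _, r in sample]

print("Pearson: ", round(pearson(scores, reviews), 2))
print("Spearman:", round(spearman(scores, reviews), 2))

# The same calculation with the Artifact row (the last one) removed
print("Pearson without Artifact:", round(pearson(scores[:-1], reviews[:-1]), 2))
```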

Question 2: do demos, early access or launch discounts affect success or failure at release?

Unfortunately, there were so few games that had demos before release (10) that only a very strong correlation could tell us anything. As it turned out, no significant correlations were found.

There were more games with early access (28), but the correlation was again too small to be significant.

More than half of the games had a launch-week discount, and there is in fact a moderate negative correlation of -0.3 between the discount and the number of first-week reviews. However, this appears to be mainly because AAA games (which sell the most copies) tend not to discount at launch. If we remove the games that most likely earned more than $1 million in the first week, the correlation drops to almost zero.

Conclusion: not enough data. No clear correlations were found between demos, early access or launch discounts and the number of reviews. Even if they help or hurt sales, the effect is not consistent enough to show up in a sample of this size.

Question 3: does a game's pre-release popularity (for example, its number of subscribers on Steam) predict its success?

The number of “subscribers” (followers) of any game on Steam can be found by looking up its automatically created Community Hub. Before release, this is a good rough indicator of how much attention the game has in the market.

The correlation between subscribers shortly before release and the number of reviews after 3 months was 0.89, a very strong positive correlation. The rank correlation was also high (0.85), which tells us the result is not driven solely by a few highly anticipated games.

With the exception of a single outlier (described below), the ratio of 3-month reviews to pre-release subscribers ranged from 0 (for several games that did not receive a single review) to 1.8, with a median of 0.1. If you have 1,000 subscribers right before release, you should expect “about” 100 reviews by the end of the first quarter.

I noticed several games whose subscriber counts seemed too high compared to secondary indicators of market attention, such as forum discussion threads and activity on Twitter. After some investigation, I concluded that Steam counts pre-release key activations as subscribers. If a developer hands out many Steam keys before release (for example, as Kickstarter rewards or as part of a beta test), the game appears to have more subscribers than it would have gained “organically”.

Conclusion: organic subscribers gathered before release are a strong predictor of subsequent success.
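The ratio described above is easy to compute for individual rows. The following sketch uses five rows copied from the table. The helper `expected_quarter_reviews` is a hypothetical name, and its default of 0.1 is simply the median quoted in the text, so treat the result as a rule of thumb rather than a forecast.

```python
from statistics import median

# (subscribers before release, reviews after 3 months) for a few table rows
rows = {
    "Call of Cthulhu": (26600, 1595),
    "Kynseed": (12984, 237),
    "Thief Simulator": (10670, 1867),
    "Bad North": (15908, 739),
    "Overdungeon": (77, 572),
}

# Reviews per subscriber for each game
ratios = {name: reviews / subs for name, (subs, reviews) in rows.items()}
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}")

print("median ratio of this small sample:", round(median(ratios.values()), 2))


def expected_quarter_reviews(subscribers, ratio=0.1):
    """Rule of thumb from the text: about 0.1 reviews per subscriber."""
    return round(subscribers * ratio)


print(expected_quarter_reviews(1000))  # 100
```

Overdungeon's ratio is far above the others, which is why it is treated separately as an outlier.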

Question 4: what about the price?

The correlation between price and the number of reviews after 3 months is 0.36, a moderate correlation. I'm not sure how useful this is: it is fairly obvious that higher-priced games tend to have bigger budgets, including bigger marketing budgets.

The correlation between price and review score is -0.41. It seems likely that players factor price into their reviews and expect more from a $60 game than from a $10 game.

Question 5: Do first-week sales predict first-quarter results?

The correlation between the number of reviews after 1 week and the number after 3 months is 0.99. The Spearman correlation is 0.97. This is the strongest correlation I found in this data.

If we exclude games that sold very few copies (fewer than 5 reviews in the first week), most games have about twice as many reviews after 3 months as after 1 week. This suggests that a game sells about as many copies in its first week as in the following 12 weeks combined. The vast majority of games have a tail ratio (reviews after 3 months divided by reviews after 1 week) between 1.3 and 3.2.

I have often seen questions from developers whose Steam launch went poorly and who wanted to know what they could do to improve sales. I'm sure post-release marketing can affect future sales, but the first week appears to set clear bounds on the outcome.

Conclusion: all the evidence indicates that first-week results strongly predict first-quarter results.
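As a rough illustration, the tail ratios above can be used to project a first-quarter review count from the first week. This is a sketch under the assumptions stated in the text: the function name `project_quarter_reviews` is invented here, and the 1.3, 2.0 and 3.2 multipliers are the observed range and typical value from this sample, not guarantees.

```python
def project_quarter_reviews(week_reviews, low=1.3, typical=2.0, high=3.2):
    """Project 3-month review counts from the first-week count.

    The multipliers are the tail ratios quoted in the text (most games fall
    between 1.3 and 3.2, with about 2 being typical). They are rough guides
    and apply only to games with at least 5 first-week reviews.
    """
    if week_reviews < 5:
        raise ValueError("too few first-week reviews for a meaningful projection")
    return tuple(round(week_reviews * m) for m in (low, typical, high))


print(project_quarter_reviews(100))  # (130, 200, 320)
```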

Question 6: Does quality help tail sales of the game?

In the previous question, we saw that despite the strong correlation between first-week and first-quarter sales, the ratio between them still varies widely. Let us call the ratio of reviews after 3 months to reviews after 1 week the tail coefficient. The lowest value is 0.95 for Pro Fishing Simulator, which actually managed to lose one review. The highest was 6.9; we will look at this extreme case later. The worst “tail” belongs to a game with a score of 22% and the best to a game with a score of 96%, which is most likely not a coincidence.

The overall correlation between the tail coefficient and Steam ratings is 0.42.
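For reference, the tail coefficient is a single division. The minimal sketch below (the function name is my own) also handles games with no first-week reviews, which is where the spreadsheet reports a division-by-zero error. The three calls use review counts taken from the table.

```python
def tail_coefficient(week_reviews, quarter_reviews):
    """Reviews after 3 months divided by reviews after 1 week.

    Returns None when there were no first-week reviews, because the ratio
    is undefined in that case.
    """
    if week_reviews == 0:
        return None
    return quarter_reviews / week_reviews


# (reviews after 1 week, reviews after 3 months), taken from the table
print(tail_coefficient(20, 19))  # Pro Fishing Simulator -> 0.95
print(tail_coefficient(10, 69))  # 11-11 Memories -> 6.9
print(tail_coefficient(0, 0))    # Dereliction -> None
```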

Conclusion: although there is no clear correlation between quality and the total number of reviews (and hence sales), there is a moderate correlation between a game's review score and its “tail”. This suggests that “good games” do better in the long run than “bad games”, but the effect is small compared to the more important factor of a game's popularity in the market.

Question 7: is it possible to predict the success of a game before its release without knowing the data on wishlists?

While collecting data for each game, some time before its planned release date, I made a forecast of how many reviews it would receive in the first week and entered it in the spreadsheet.

The main factor behind each forecast was the number of subscribers. I sometimes adjusted the forecast when the subscriber count seemed off, using secondary sources such as activity on the Steam forums and attention on Twitter.

The correlation between my guesses and the actual values is 0.96, a very strong correlation. As the data show, my forecasts were mostly in the right ballpark, apart from a few cases where I was badly wrong.

In my experience, multiplying the number of subscribers by 0.1, in most cases we get an approximate estimate of the number of reviews in the

Conclusion: yes, with some exceptions, subscriber data and other indicators let you roughly predict first-week results. Given the strong correlation between first-week and first-quarter sales, you can get a rough idea of first-quarter results even before release.

Last question: what about the outliers I mentioned?

There were several games in the data that stood out in one way or another.

Outlier 1: Overdungeon. Shortly before release, the game had 77 subscribers, a rather small number; based on that alone, I would have expected fewer than a dozen reviews in the first week. It ended up with 86. And that was not all: the game had a strong tail and finished the first quarter with 572 reviews. By a wide margin, it has the highest ratio of reviews to subscribers in the sample.

Judging by the reviews, it is similar to Slay the Spire and is very popular in Asia. About 90% of the reviews appear to be written in Japanese or Chinese. If anyone has insight into the reasons for the game's unusual success, I would be interested to hear it.

This seems to be the only clear example in the data of a game with very few subscribers before release that went on to solid first-quarter sales.

Outlier 2: 11-11 Memories Retold. Just before release, this game had 767 subscribers, ten times as many as Overdungeon. That is still not many, even for a small indie game. But it had a lot going for it: its director was Yoan Fanise, co-director of the popular and similarly themed Valiant Hearts. It was animated by Aardman Studios, famous for Wallace and Gromit. The publisher was Bandai Namco Europe, hardly an inexperienced company. The voice acting was by Sebastian Koch and Elijah Wood. The game received many good reviews in both the gaming and mainstream press. It currently has 95% positive reviews on Steam.

And despite all this, nobody bought it. 24 hours after release, it had literally zero reviews on Steam. A week later it had only 10. Three months later it showed the largest “tail” in the data, but even then it had reached only 69 reviews. It now has about 100, an incredible tail coefficient, but the game was most likely a commercial failure.

This is a great example of how a good game with good production values does not always mean good sales.

Summary: the most important findings from this analysis:

- A game's success on Steam depends heavily on its first-week performance.

- First-week performance correlates strongly with the game's popularity in the market before release.

- Quality does not greatly affect first-week performance, but it can have a positive effect on the tail of a game's sales.

- All conclusions about sales depend on the relationship between the number of reviews and sales.

Source: https://habr.com/ru/post/461457/
