Security Week 30: privacy, technology and society

On July 12, the press reported, though without official confirmation yet, that Facebook had reached an agreement with the US Federal Trade Commission (FTC) over the leak of user data. The FTC investigation focused mainly on the actions of Cambridge Analytica, which back in 2015 obtained data on tens of millions of Facebook users. Facebook is accused of failing to adequately protect user privacy, and if the reports are confirmed, the social network will pay the US regulator a record fine of $5 billion.

The Facebook and Cambridge Analytica scandal is the first, but by no means the last, example of technical problems being debated by entirely non-technical means. In this digest we look at a few recent examples of such debates, specifically at how user privacy is discussed without regard to how network services actually work.

Example One: Google reCaptcha v3

The new version of Google's bot-fighting tool, reCaptcha v3, was introduced in October 2018. The first version of Google's CAPTCHA told robots from humans by asking them to recognize text. The second version replaced the text with pictures, and later showed them only to visitors it considered suspicious.


The third version asks the user for nothing and relies mainly on analyzing visitor behavior on the website. Each visitor is assigned a score, and based on it the site owner can, for example, require an extra verification step via a mobile phone if a login looks suspicious. All of this sounds fine, but not entirely. On June 27, Fast Company published a long article that, citing two researchers, describes the privacy problems of reCaptcha v3.
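To make the score-based step concrete: on the site owner's side, the server sends the reCaptcha v3 token from the page to Google's siteverify endpoint and gets back JSON with fields such as `success`, `score` (0.0 to 1.0, higher meaning more likely human) and `action`. The sketch below assumes that JSON has already been fetched and shows only the decision step; the 0.5 threshold (Google's documentation suggests it as a starting point) and the `allow` / `step_up` / `reject` labels are illustrative assumptions, not part of Google's API.

```python
import json

# Assumed policy threshold; each site is expected to tune its own.
SCORE_THRESHOLD = 0.5

def login_decision(siteverify_json: str, expected_action: str = "login") -> str:
    """Map a siteverify response to 'allow', 'step_up' (e.g. SMS code) or 'reject'."""
    result = json.loads(siteverify_json)
    if not result.get("success"):
        return "reject"  # token invalid, expired or already used
    if result.get("action") != expected_action:
        return "reject"  # token was issued for a different action on the page
    score = float(result.get("score", 0.0))
    # Low-scoring visitors are not blocked outright, just asked for more proof.
    return "allow" if score >= SCORE_THRESHOLD else "step_up"

print(login_decision('{"success": true, "score": 0.9, "action": "login"}'))  # allow
print(login_decision('{"success": true, "score": 0.2, "action": "login"}'))  # step_up
```

This is also where the privacy trade-off shows up: the site owner only sees a number, while how that number was computed stays with Google.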

First, an analysis of the new CAPTCHA found that users logged into a Google account get a higher score by default. Conversely, visitors who open a site through a VPN or the Tor network are more likely to be flagged as suspicious. Finally, Google recommends installing reCaptcha on every page of a website, not just on those where the user needs to be verified. This gives the tool more information about visitor behavior, but the same feature could, in theory, also give Google a large amount of data about user behavior across hundreds of thousands of sites (according to Fast Company, reCaptcha v3 is installed on 650 thousand sites, and several million use earlier versions).

This is a typical example of an ambiguous debate about technology. On the one hand, the more data a useful anti-bot tool has, the better for both site owners and users. On the other hand, the service provider gains access to a huge pool of data and at the same time decides who is let through right away and whose life is made harder. Understandably, proponents of maximum privacy, who avoid keeping unnecessary cookies in their browser and use VPNs all the time, do not like this progress at all. From Google's point of view there is no problem: the company says that data collected by the CAPTCHA is not used for ad targeting, and making life harder for bot operators is a good reason for such innovations.

Example Two: Data Access in Android Applications

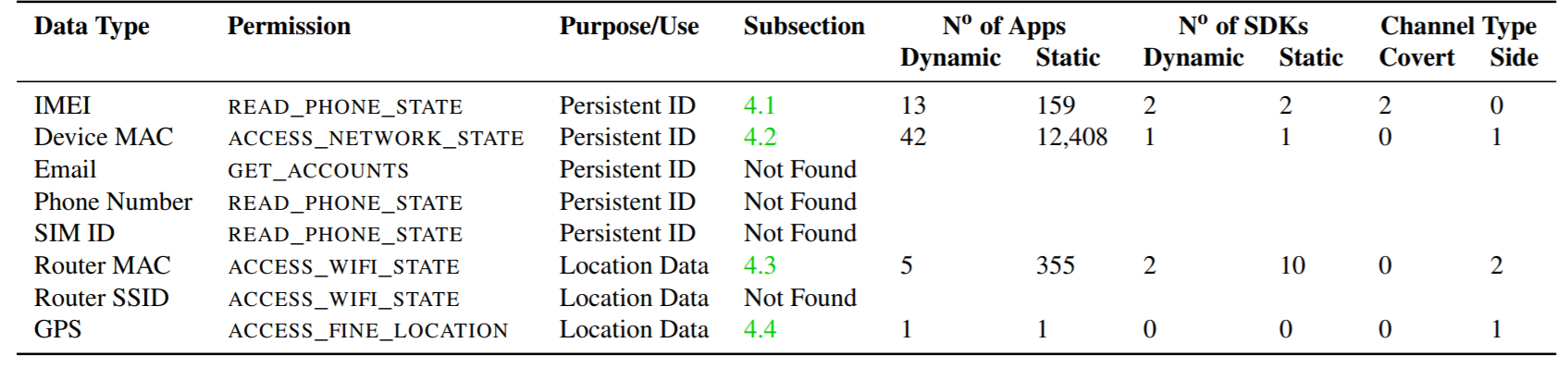

At the end of June, the same US Federal Trade Commission held its PrivacyCon conference. Researcher Serge Egelman's talk was devoted to how Android app developers bypass system restrictions to obtain data that, in theory, they should not be able to get. The underlying scientific study analyzed 88 thousand apps from the US Google Play Store and found that 1,325 of them circumvented system restrictions.

How do they do it? For example, Shutterfly's photo-editing app obtains location data even when the user denies it that permission. The trick is simple: the app reads the geotags stored in the photos it is allowed to access. A representative of the developer reasonably notes that processing geotags is standard functionality the app uses to sort photos. A number of other apps got around the location ban by scanning the MAC addresses of nearby Wi-Fi access points and using them to determine the user's approximate location.
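Why photo access is effectively location access: in JPEG EXIF metadata, the geotag is stored as `GPSLatitude` / `GPSLongitude`, each a triple of rational numbers (degrees, minutes, seconds), plus `GPSLatitudeRef` (`N`/`S`) and `GPSLongitudeRef` (`E`/`W`). Turning that into coordinates is plain arithmetic. The sketch below assumes the tags have already been read by some EXIF library; the tag values are made up, and this is not Shutterfly's code.

```python
from fractions import Fraction
from typing import Sequence, Tuple

Rational = Tuple[int, int]  # (numerator, denominator), as EXIF stores RATIONAL values

def dms_to_decimal(dms: Sequence[Rational], ref: str) -> float:
    """Convert EXIF GPS degrees/minutes/seconds rationals to signed decimal degrees."""
    degrees, minutes, seconds = (Fraction(num, den) for num, den in dms)
    value = degrees + minutes / 60 + seconds / 3600
    # South latitudes and west longitudes are negative in decimal notation.
    return -float(value) if ref in ("S", "W") else float(value)

# Hypothetical tag values, as an EXIF reader might return them.
lat = dms_to_decimal([(55, 1), (45, 1), (0, 1)], "N")
lon = dms_to_decimal([(122, 1), (30, 1), (0, 1)], "W")
print(lat, lon)  # 55.75 -122.5
```

No location permission is involved at any point: the app only needs to be able to read the image file.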

A somewhat more “criminal” workaround was found in 13 apps: an app that has access to the smartphone's IMEI saves it to the memory card, from where other apps without that permission can read it. This is what, for example, one Chinese ad network does; its SDK is embedded in the code of other apps. Access to the IMEI lets it uniquely identify the user for subsequent ad targeting. Incidentally, universal access to data on the memory card is also at the heart of another study, which revealed the (theoretical) possibility of substituting media files exchanged in the Telegram and WhatsApp messengers. As a result, data access rules will be tightened further in the upcoming Android Q.
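The IMEI trick is a side channel through shared storage: the permission check happens when the IMEI is read, not when a file containing it is read later. The simulation below models two copies of the same ad SDK in two different apps, with a temporary directory standing in for the memory card. The file name and function names are hypothetical; this only illustrates the data flow the study describes, not any real SDK.

```python
import tempfile
from pathlib import Path
from typing import Optional

# Stand-in for the shared memory card: readable by any app with storage access.
SHARED_STORAGE = Path(tempfile.mkdtemp())
# Hypothetical file name the embedded SDK agrees on in every host app.
ID_FILE = SHARED_STORAGE / ".sdk_device_id"

def sdk_in_app_with_phone_permission(imei: str) -> None:
    """SDK copy inside an app that was granted IMEI access: caches it publicly."""
    ID_FILE.write_text(imei)

def sdk_in_app_without_phone_permission() -> Optional[str]:
    """SDK copy inside an app never granted IMEI access: reads the cached copy."""
    return ID_FILE.read_text() if ID_FILE.exists() else None

print(sdk_in_app_without_phone_permission())          # None: nothing cached yet
sdk_in_app_with_phone_permission("123456789012345")   # fake IMEI
print(sdk_in_app_without_phone_permission())          # 123456789012345
```

Restricting which apps may read other apps' files on shared storage, as Android Q does, closes this channel without touching the IMEI permission itself.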

Example Three: Facebook IDs in Pictures

A few years ago, such a tweet would have triggered, at best, a technical discussion a couple of dozen posts long, but now Forbes blogs are writing about it. Since at least 2014, Facebook has been adding its own identifiers to the IPTC metadata of images uploaded to Facebook itself or to Instagram. Facebook's identifiers are discussed in more detail here, but nobody knows exactly how the social network uses them.
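For readers who want to look for such identifiers themselves: IPTC-IIM metadata is a sequence of datasets, each starting with the byte `0x1C`, followed by a record number, a dataset number and a 2-byte big-endian length (a set high bit marks a rarer "extended" length, which this sketch does not handle). The sketch assumes the IIM block has already been extracted from the file (in JPEGs it usually sits inside an APP13 Photoshop resource). Which dataset Facebook actually writes to is not stated in the source; the one used below and the identifier value are only examples.

```python
import struct
from typing import Dict, List, Tuple

IIM_TAG_MARKER = 0x1C  # every standard IPTC-IIM dataset starts with this byte

def parse_iim(block: bytes) -> Dict[Tuple[int, int], List[bytes]]:
    """Split an IPTC-IIM block into {(record, dataset): [raw values]}."""
    fields: Dict[Tuple[int, int], List[bytes]] = {}
    pos = 0
    while pos + 5 <= len(block) and block[pos] == IIM_TAG_MARKER:
        record, dataset, length = struct.unpack(">BBH", block[pos + 1:pos + 5])
        if length & 0x8000:
            raise ValueError("extended datasets are not handled in this sketch")
        start = pos + 5
        fields.setdefault((record, dataset), []).append(block[start:start + length])
        pos = start + length
    return fields

def make_dataset(record: int, dataset: int, value: bytes) -> bytes:
    """Build one standard dataset; used here only to create sample input."""
    return bytes([IIM_TAG_MARKER, record, dataset]) + struct.pack(">H", len(value)) + value

# 2:005 is Object Name and 2:040 is Special Instructions; the ID value is made up.
sample = make_dataset(2, 5, b"holiday") + make_dataset(2, 40, b"EXAMPLE-ID-0001")
for (record, dataset), values in parse_iim(sample).items():
    print(f"{record}:{dataset:03d}", values)
```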

Given the negative news background, this behavior is easy to read as “yet another way to track users, what else would you expect”. But in fact, identifiers in the metadata can be used, for example, for blanket bans of illegal content: they make it possible to block not only reposts within the network, but also the “download the picture and upload it again” scenario. Such functionality may well be useful.
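A minimal sketch of that moderation use, assuming a platform embeds its own ID in every image it serves: on re-upload, the ID is read back and checked against a list of removed content. The field name `platform_id` and the blocklist are hypothetical, and nothing here describes what Facebook actually does (the source says that is unknown).

```python
from typing import Mapping

# Hypothetical identifiers of images that moderators have already removed.
BLOCKED_IDS = {"EXAMPLE-ID-0001"}

def check_upload(metadata: Mapping[str, str], id_field: str = "platform_id") -> str:
    """Decide what to do with an upload based on an embedded platform identifier."""
    embedded = metadata.get(id_field)
    if embedded is None:
        # Metadata was stripped or never present: this check cannot help,
        # so the upload has to go through other checks instead.
        return "review"
    return "block" if embedded in BLOCKED_IDS else "accept"

print(check_upload({"platform_id": "EXAMPLE-ID-0001"}))  # block
print(check_upload({"platform_id": "EXAMPLE-ID-0002"}))  # accept
print(check_upload({}))                                  # review
```

The weakness is visible in the `None` branch: anyone who strips the metadata before re-uploading bypasses the lookup, so such an ID can only complement content-based matching, not replace it.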

The problem with public discussion of privacy is that the real technical features of a service are often ignored. The most telling example is the debate over government backdoors in encryption, whether for instant messengers or for data on a computer or smartphone. Those who want hassle-free access to encrypted data talk about fighting crime. Cryptography experts argue that encryption doesn't work that way, and that deliberately weakening cryptographic algorithms will make them vulnerable to everyone. But at least there we are talking about a scientific discipline that is several decades old.
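Backdoors can take several forms; one historical form of weakening is simply a shorter key, as with the 40-bit "export-grade" ciphers of the 1990s. The arithmetic below shows the worst-case time T(k) to try every key in a k-bit key space, assuming a hypothetical attacker who can test r = 10^12 keys per second (the rate is an assumption chosen for illustration). It illustrates the experts' point: the shortcut works for anyone with the same hardware, not only for the party it was built for.

```latex
\begin{aligned}
T(k)   &= \frac{2^{k}}{r}, \qquad r = 10^{12}\ \text{keys/s (assumed)} \\
T(40)  &\approx \frac{1.1 \times 10^{12}}{10^{12}} \approx 1.1\ \text{s} \\
T(56)  &\approx \frac{7.2 \times 10^{16}}{10^{12}} = 7.2 \times 10^{4}\ \text{s} \approx 20\ \text{h} \\
T(128) &\approx \frac{3.4 \times 10^{38}}{10^{12}} = 3.4 \times 10^{26}\ \text{s} \approx 1.1 \times 10^{19}\ \text{years}
\end{aligned}
```

On average an attacker finds the key after searching half the space, which halves each figure but does not change the picture.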

What privacy is, what kind of control over our data is needed, and how compliance with such standards should be monitored: there is no general, clear answer to these questions yet. In all the examples in this post, our data is collected for some useful functionality, but the benefit does not always go to the end user (more often it goes to the advertiser). Then again, when you check the traffic jams on your way home, you are also seeing the result of collecting data about you and hundreds of thousands of other people.

I don’t want to end the post with the trite conclusion “it’s not that simple”, so let’s put it this way: more and more, developers of software, devices and services will have to solve not only technical problems (“how to make it work”) but also socio-political ones (“how to avoid fines, angry articles in the media, and losing out to competitors that offer more privacy, at least on paper”). How will the industry change in this new reality? Will we get more control over our data? Will the development of new technologies slow down because of “public pressure”, which takes time and resources to respond to? We will be watching this evolution.

Disclaimer: The opinions expressed in this digest may not always coincide with the official position of Kaspersky Lab. The editors generally recommend treating any opinion with healthy skepticism.

Source: https://habr.com/ru/post/460933/
