Minimum possible font

Task: using the smallest possible amount of resources, render a meaningful text.

- How small can a readable font be?

- How much memory will it take to store it?

- How much code will it take to use it?

Let's see what we can do. Spoiler:

Introduction to bitmaps

Computers represent bitmaps as bitmaps. This is not about .bmp format, but about how to store pixels in memory. To understand what is happening, we need to know something about this way.

Layers

The image usually contains several layers located one on top of the other. Most often they correspond to the coordinates of the RGB color space . One layer for red , one for green and one for blue . If the image format supports transparency, then a fourth layer is created for it, usually called alpha . Roughly speaking, a color image is three (or four, if there is an alpha channel) of black and white, located one above the other.

- RGB is not the only color space; JPEG format, for example, uses YUV . But in this article we will not need the other color spaces, so we do not consider them.

A set of layers can be represented in memory in two ways. Either they are stored separately, or values from different layers are interspersed. In the latter case, the layers are called channels , and this is how most modern formats work.

Suppose we have a 4x4 pattern containing three layers: R for red, G for green, and B for the blue component of each of the pixels. It can be represented like this:

RRRR RRRR RRRR RRRR GGGG GGGG GGGG GGGG BBBB BBBB BBBB BBBB All three layers are stored separately. Interleaved format looks different:

RGB RGB RGB RGB RGB RGB RGB RGB RGB RGB RGB RGB RGB RGB RGB RGB - each triple character matches exactly one pixel

- values within three are in the order of RGB . Sometimes a different order may be used (for example, BGR ), but this one is the most common.

For simplicity, I arranged the pixels in the form of a two-dimensional matrix, because it is so clearer where one or another triple is in the image. But in fact, the computer's memory is not two-dimensional, but one-dimensional, so the 4x4 picture will be stored like this:

RGB RGB RGB RGB RGB RGB RGB RGB RGB RGB RGB RGB RGB RGB RGB RGB bpp

The abbreviation bpp stands for the number of bits or bytes per pixel (bits / bytes per pixel). You might have come across 24bpp or 3bpp . These two characteristics mean the same thing - 24 bits per pixel or 3 bytes per pixel . Since the byte is always 8 bits, by the magnitude of the value you can guess which of the units in question.

Memory view

24bpp , also 3bpp as 3bpp is the most common format for storing colors. So at the level of individual bits looks one pixel in the order of RGB .

0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 RRRRRRRRGGGGGGGGBBBBB BBB - One byte for R , one for G and one for B , for a total of three bytes.

- Each of them contains a value from 0 to 255.

So if this pixel has the following color:

R 255G 80B 100

Then 255 is stored in the first byte, 80 in the second, and 100 in the third.

Most often, these values are represented in hexadecimal . Say, #ff5064 . So much more convenient and more compact: R = 0xff (ie, R=255 in the decimal representation), G = 0x50 (= G=80 ), B=0x64 (= B=100 ).

- The hexadecimal representation has one useful property. Since each byte of color is represented by two characters, each character encodes exactly half a byte, or four bits. 4 bits, by the way, are called nibble .

Line width

When the pixels go one after another and each contains more than one channel, it is easy to get confused in the data. It is not known when one line ends and the next one begins, therefore, in order to interpret a file with a bitmap, you need to know the image size and bpp . In our case, the picture has a width w = 4 pixels and each of these pixels contains 3 bytes, so the string is encoded 12 (in the general case, w*bpp ) bytes.

- The string is not always encoded with exactly

w*bppbytes; often hidden pixels are added to it to bring the width of the image to a certain size. For example, it is faster and more convenient to scale pictures when their size in pixels is equal to a power of two. Therefore, the file may contain (user-accessible) image of 120x120 pixels, but stored as an image of 128x128. When displaying an image on the screen, these pixels are ignored. However, we do not need to know about them.

The coordinate of any pixel (x, y) in the one-dimensional representation is (y * w + x) * bpp . This, in general, is obvious: y is the line number, each line contains w pixels, so y * w is the beginning of the desired line, and +x takes us to the desired x within it. And since the coordinates are not in bytes, but in pixels, it all multiplies by the pixel size bpp , in this case in bytes. Since the pixel has a non-zero size, you need to read exactly bpp bytes, starting with the received coordinate, and we will have a complete representation of the desired pixel.

Font atlas

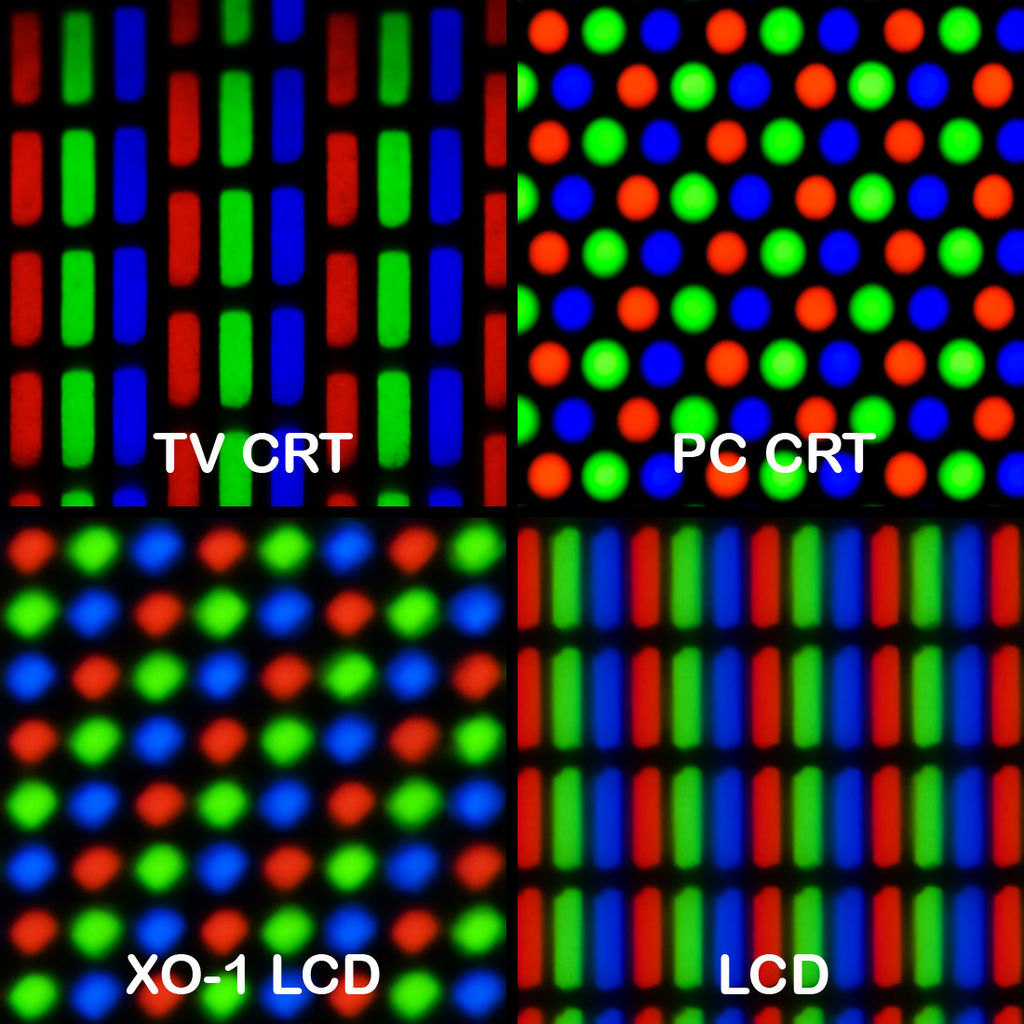

Real-life monitors do not display a pixel as a whole, but three sub-pixels — red, blue, and green. If you look at the monitor under magnification, you will see something like this:

- Wikipedia picture

We are interested in LCD, because, most likely, it is from such a monitor that you read this text. Of course, there are pitfalls:

- Not all matrices use just such an order of subpixels, sometimes BGR.

- If you turn the monitor (for example, look at the phone in landscape orientation), the pattern will also rotate and the font will stop working.

- Different matrix orientations and sub-pixel locations will require reworking of the font itself.

- In particular, it does not work on AMOLED displays using the PenTile layout . Such displays are most often used in mobile devices.

The use of hack-related subpixels to increase resolution is called subpixel rendering . You can read about its use in typography, for example, here .

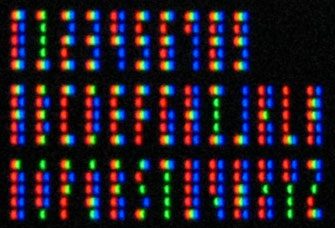

Fortunately for us, Matt Sarnoff already guessed using subpixel rendering to create a tiny millitext font. Manually, he created this tiny picture:

Which, if you look at the monitor very carefully, looks like this:

And here it is, programmatically enlarged 12 times:

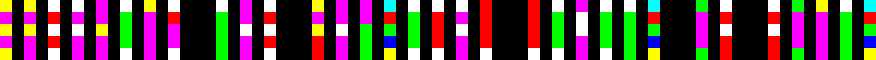

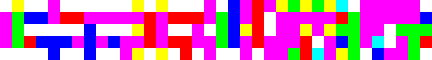

Based on his work, I created a font atlas, in which each character corresponds to a column of 1x5 pixels. The order of characters is as follows:

0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ

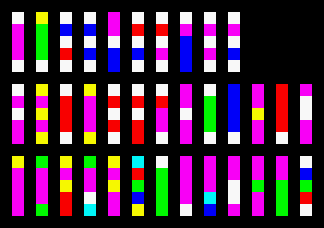

The same atlas, enlarged 12 times:

With 36 characters used, it turns out exactly 365 pixels. If we assume that each pixel occupies 3 bytes, then we need 36*5*3 = 540 bytes to store the entire drawing ( note: in the original, an intricate series of edits about the alpha channel, removal of metadata, etc. n. In translation, I dropped it and use only the final version of the file ). PNG file passed through pngcrush and optipng takes even less:

# wc -c < font-crushed.png 390 But you can achieve even smaller if you use a slightly different approach.

Compression

The attentive reader may have noticed that the atlas uses only 7 colors:

#ffffff#ff0000#00ff00#0000ff#00ffff#ff00ff#ffff00

Palette

In such situations it is often easier to create a palette. Then for each pixel you can store not three bytes of color, but only the number of color in the palette. In our case, 3 bits will be enough to choose from 7 colors ( 7 < 2^3 ). If each pixel is mapped to a three-bit value, then the entire atlas will fit in 68 bytes .

- A reader who understands data compression can answer that there is generally such a thing as “fractional bits” and in our case, 2.875 bits per pixel is enough. This density can be achieved with the help of black magic, known as arithmetic coding . We will not do this, because arithmetic coding is a complicated thing, and 68 bytes are so little.

Alignment

Three-bit coding has one serious drawback. Pixels cannot be evenly distributed over 8-bit bytes, which is important because byte is the minimum addressable memory location. Suppose we want to keep three pixels:

ABC If everyone takes 3 bits, then they will take 2 bytes to store ( - denotes unused bits):

bit 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 pixel AAABBBCCC - - - - - - - What's important, the C pixel doesn't just leave a bunch of empty space; it is broken between two bytes. When we start to add the following pixels, they can be located arbitrarily relative to the boundaries of the bytes. The simplest solution is to use nibbls per pixel, because 8 is perfectly divided by 4 and allows you to place exactly two pixels in each byte. But it will increase the size of the atlas by a third, from 68 bytes to 90 bytes .

- In fact, the file can be made even smaller using palindrome coding, interval coding, and other compression techniques. Like arithmetic coding, we will postpone these techniques until the next article.

Bit buffer

Fortunately, there is nothing fundamentally impossible to work with 3-bit values. You just need to keep track of what position inside the byte we are writing or reading at the moment. The following simple class converts a 3-bit data stream into a byte array.

- For readability reasons, the code is written in JS, but the same method is generalized to other languages.

- Uses order from low byte to high ( Little Endian )

class BitBuffer { constructor(bytes) { this.data = new Uint8Array(bytes); this.offset = 0; } write(value) { for (let i = 0; i < 3; ) { // bits remaining const remaining = 3 - i; // bit offset in the byte ie remainder of dividing by 8 const bit_offset = this.offset & 7; // byte offset for a given bit offset, ie divide by 8 const byte_offset = this.offset >> 3; // max number of bits we can write to the current byte const wrote = Math.min(remaining, 8 - bit_offset); // mask with the correct bit-width const mask = ~(0xff << wrote); // shift the bits we want to the start of the byte and mask off the rest const write_bits = value & mask; // destination mask to zero all the bits we're changing first const dest_mask = ~(mask << bit_offset); value >>= wrote; // write it this.data[byte_offset] = (this.data[byte_offset] & dest_mask) | (write_bits << bit_offset); // advance this.offset += wrote; i += wrote; } } to_string() { return Array.from(this.data, (byte) => ('0' + (byte & 0xff).toString(16)).slice(-2)).join(''); } }; Let's load and encode the file with the atlas:

const PNG = require('png-js'); const fs = require('fs'); // this is our palette of colors const Palette = [ [0xff, 0xff, 0xff], [0xff, 0x00, 0x00], [0x00, 0xff, 0x00], [0x00, 0x00, 0xff], [0x00, 0xff, 0xff], [0xff, 0x00, 0xff], [0xff, 0xff, 0x00] ]; // given a color represented as [R, G, B], find the index in palette where that color is function find_palette_index(color) { const [sR, sG, sB] = color; for (let i = 0; i < Palette.length; i++) { const [aR, aG, aB] = Palette[i]; if (sR === aR && sG === aG && sB === aB) { return i; } } return -1; } // build the bit buffer representation function build(cb) { const data = fs.readFileSync('subpixels.png'); const image = new PNG(data); image.decode(function(pixels) { // we need 3 bits per pixel, so w*h*3 gives us the # of bits for our buffer // however BitBuffer can only allocate bytes, dividing this by 8 (bits for a byte) // gives us the # of bytes, but that division can result in 67.5 ... Math.ceil // just rounds up to 68. this will give the right amount of storage for any // size atlas. let result = new BitBuffer(Math.ceil((image.width * image.height * 3) / 8)); for (let y = 0; y < image.height; y++) { for (let x = 0; x < image.width; x++) { // 1D index as described above const index = (y * image.width + x) * 4; // extract the RGB pixel value, ignore A (alpha) const color = Array.from(pixels.slice(index, index + 3)); // write out 3-bit palette index to the bit buffer result.write(find_palette_index(color)); } } cb(result); }); } build((result) => console.log(result.to_string())); As expected, the atlas fit 68 bytes , which is 6 times smaller than the PNG file.

( note: the author is somewhat cunning: he did not save the palette and image size, which according to my estimates would require 23 bytes with a fixed palette size and increase the image size to 91 bytes )

Now let's convert the image to a string so that it can be inserted into the source code. Essentially, the to_string method to_string this: it represents the contents of each byte as a hexadecimal number.

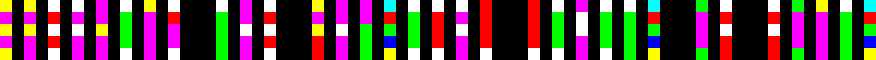

305000000c0328d6d4b24cb46d516d4ddab669926a0ddab651db76150060009c0285 e6a0752db59054655bd7b569d26a4ddba053892a003060400d232850b40a6b61ad00 But the resulting string is still quite long, because we have limited ourselves to an alphabet of 16 characters. You can replace it with base64 , in which there are four times more characters.

to_string() { return Buffer.from(this.data).toString('base64'); } The base64 atlas looks like this:

MFAAAAwDKNbUsky0bVFtTdq2aZJqDdq2Udt2FQBgAJwCheagdS21kFRlW9e1adJqTdugU4kqADBgQA0jKFC0CmthrQA= This string can be hard-coded into the JS-module and used for rasterizing the text.

Rasterization

To save memory, only one letter will be decoded at a time.

const Alphabet = '0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ'; const Atlas = Uint8Array.from(Buffer.from('MFAAAAwDKNbUsky0bVFtTdq2aZJqDdq2Udt2FQBgAJwCheagdS21kFRlW9e1adJqTdugU4kqADBgQA0jKFC0CmthrQA=', 'base64')); const Palette = [ [0xff, 0xff, 0xff], [0xff, 0x00, 0x00], [0x00, 0xff, 0x00], [0x00, 0x00, 0xff], [0x00, 0xff, 0xff], [0xff, 0x00, 0xff], [0xff, 0xff, 0x00] ]; // at the given bit offset |offset| read a 3-bit value from the Atlas read = (offset) => { let value = 0; for (let i = 0; i < 3; ) { const bit_offset = offset & 7; const read = Math.min(3 - i, 8 - bit_offset); const read_bits = (Atlas[offset >> 3] >> bit_offset) & (~(0xff << read)); value |= read_bits << i; offset += read; i += read; } return value; }; // for a given glyph |g| unpack the palette indices for the 5 vertical pixels unpack = (g) => { return (new Uint8Array(5)).map((_, i) => read(Alphabet.length*3*i + Alphabet.indexOf(g)*3)); }; // for given glyph |g| decode the 1x5 vertical RGB strip decode = (g) => { const rgb = new Uint8Array(5*3); unpack(g).forEach((value, index) => rgb.set(Palette[value], index*3)); return rgb; } The decode function takes a character as input and returns the corresponding column in the source image. It is impressive here that decoding one character takes only 5 bytes of memory, plus ~ 1.875 bytes to read the required piece of the array, i.e. on average 6.875 per letter. If you add 68 bytes to the storage of the array and 36 bytes to the storage of the alphabet, you can theoretically render the text with 128 bytes of RAM.

- This is possible if you rewrite the code in C or assembler. Against the background of the overhead projector, JS saves on matches.

It remains only to put these columns together and return the image with the text.

print = (t) => { const c = t.toUpperCase().replace(/[^\w\d ]/g, ''); const w = c.length * 2 - 1, h = 5, bpp = 3; // * 2 for whitespace const b = new Uint8Array(w * h * bpp); [...c].forEach((g, i) => { if (g !== ' ') for (let y = 0; y < h; y++) { // copy each 1x1 pixel row to the the bitmap b.set(decode(g).slice(y * bpp, y * bpp + bpp), (y * w + i * 2) * bpp); } }); return {w: w, h: h, data: b}; }; This will be the minimum possible font.

const fs = require('fs'); const result = print("Breaking the physical limits of fonts"); fs.writeFileSync(`${result.w}x${result.h}.bin`, result.data); Add a little imagemagick to get the image in a readable format:

# convert -size 73x5 -depth 8 rgb:73x5.bin done.png And here is the final result:

It is increased 12 times:

It is also a macro shot from a poorly calibrated monitor:

And finally, it is better on the monitor:

')

Source: https://habr.com/ru/post/460697/

All Articles