Informatics specialists expand the boundaries of knowledge to be tested

The universe of computer-tested tasks has grown. What is the secret ingredient that happened? Because of quantum entanglement.

Imagine that someone came to you and declared that he has an oracle capable of uncovering the hidden secrets of the universe. You might be interested in this, but it would be hard for you to believe in it. You would like to think of a way to confirm that the oracle is telling the truth.

This is the main problem of computer science. Some tasks are too difficult to solve in a reasonable time. But their decisions are simple to check. Given this, computer scientists want to know: how difficult can a task be, the solution of which can still be checked?

')

It turns out that the answer to this question: almost unimaginably difficult.

In the work , which appeared in April 2019, two specialists in computer science radically increased the number of tasks that fall into the category “difficult to solve, easy to verify”. They describe a method that allows you to verify solutions to problems of almost inconceivable complexity. “It sounds crazy,” said Thomas Vidic, a computer science specialist from the California Institute of Technology, who is not associated with this work.

The study is applicable to quantum computers performing calculations according to the non-intuitive rules of quantum mechanics. Quantum computers have barely appeared, but in the future they have the potential to revolutionize computing.

New work, in fact, gives us a lever of influence on that all-powerful oracle. Even if the oracle promises to give you ready-made solutions to problems whose solutions are far beyond your abilities, there is still a way to check whether the oracle is telling the truth.

To the end of the universe

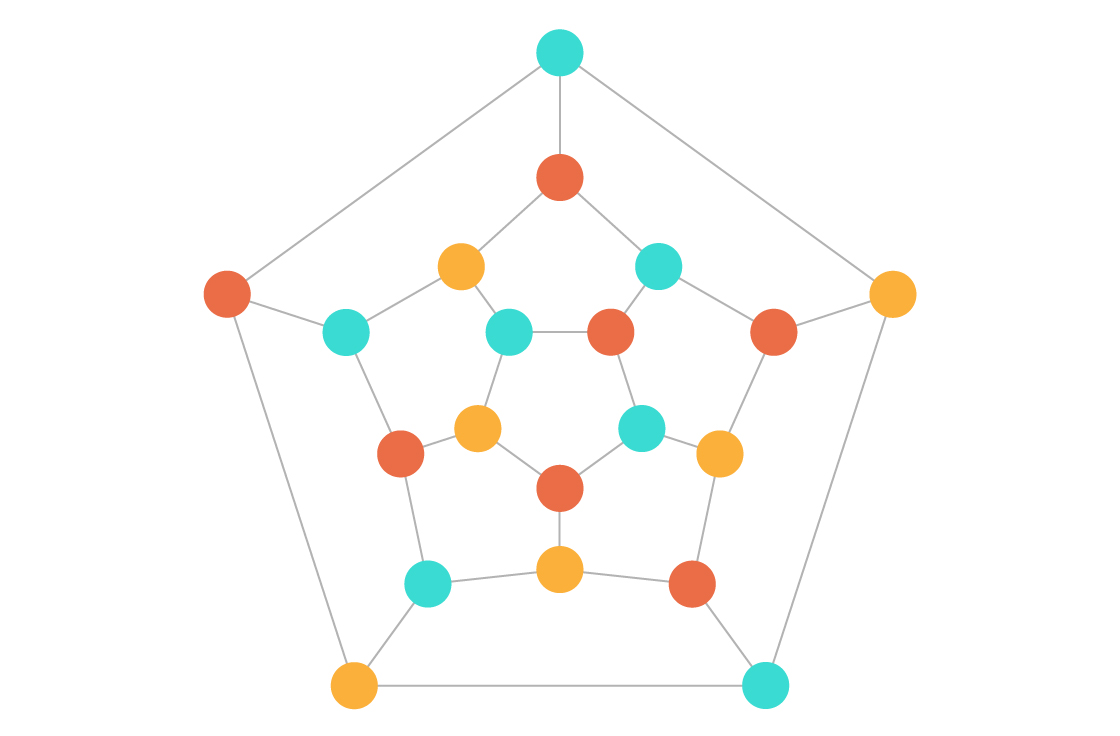

When a task is difficult to solve, but easy to verify, the search for a solution takes a long time, but checking the correctness of a particular solution does not require. For example, imagine that a person shows you a graph - a set of points (vertices) connected by lines (edges). A person asks if it is possible to color the vertices of the graph with three colors so that no two connected vertices have the same color.

The task of three colors is difficult to solve. In general, the time needed to search for vertex coloring (or the definition that such a coloring does not exist) increases exponentially with increasing graph size. If, say, finding a solution for a graph with 20 vertices takes 3 20 ns - that is, a few seconds, then for a graph with 60 vertices it will take 3 60 ns to search, which is 100 times more than the current age of the Universe.

But, let's say, someone claims that he painted the graph in three colors. This application will not take much time to check. You just need to go around all the vertices one by one, studying the vertices associated with them. With the increase in the size of the graph, the time of this check increases slowly - as polynomial . As a result, the computer does not take much more time to check the coloring of a graph of 60 vertices than to check a graph of 20 vertices.

“When properly painted in three colors, it’s easy to test its performance,” said John Wright , a physicist at the Massachusetts Institute of Technology, who wrote this paper with Anand Natarajan from Caltech.

In the 1970s, computer scientists identified a class of tasks that are easy to verify, even if some of them are difficult to solve. They called this class NP, nondeterministic polynomial time . Since then, in the computer science class NP studied more than others. In particular, computer scientists would like to know how this class is changing, when the testing algorithm gets new ways to verify the correctness of the solution.

Correct questions

Prior to the work of Natarajan and Wright, the power of checks increased in two large jumps.

To understand the first one, imagine that you do not distinguish colors. Someone places two dice in front of you on the table and asks if they are the same color or different. For you, this task is impossible. Moreover, you can not confirm the correctness of someone else's solution to this problem.

However, you are allowed to ask this person proving questions. Suppose the proving tells you that the cubes are of different colors. You call one of them "cube A", and the other - "cube B". Then you hide them behind your back, and accidentally change places among themselves. Then you open the cubes and ask the prover to find the cube A.

If the cubes are of different colors, this is a very simple question. The prover recognizes cube A, if it is, say, a red cube, and will correctly identify it each time.

However, if the cubes are of the same color - and the prover made a mistake by calling them different - the prover can only guess which one of them is. Therefore, he will be able to correctly determine the cube A only in 50% of cases. Constantly questioning proving his decision, you can confirm whether it is correct.

Anand Natarajan and John Wright

“The tester can send proving questions,” said Wright, “and maybe at the end of the conversation the tester will be more convinced of the answer.”

In 1985, three computer scientists proved that such interactive evidence can be used to confirm the solution of more complex problems than problems from NP. Their work created a new class of tasks, IP, "interactive polynomial time." The method used to confirm the colors of the cubes can be used to confirm the answers to much more complicated questions.

The second breakthrough occurred in the same decade. It is similar to the logic of a police investigation. If you have two suspects who have committed a crime, in your opinion, you will not question them together. You interrogate them in different rooms, and will verify the answers of one with the answers of the other. By polling them individually, you can uncover more truth than if you had one suspect.

“The two suspects will not be able to form a distributed consistent story, because they do not know what answers the other gives,” said Wright.

In 1988, four computer scientists proved that if you asked two computers to solve the same problem separately - and then interrogate them separately - you can confirm a class of problems, even more than IP: MIP class, interactive evidence with a lot of proving.

Using this approach, you can, for example, confirm the correctness of the coloring of the graph in three colors for a sequence of graphs that increase in size much faster than graphs from NP. In NP, graph size increases linearly - the number of vertices can grow from 1 to 2, to 3, to 4, and so on - so that the size of the graph is not disproportionately more than the amount of time required to check the coloring. But in MIP, the number of graph vertices grows exponentially from 2 1 to 2 2 , 2 3 , 2 4 , and so on.

As a result, the graphs turn out to be too large, even just to fit in the memory of the computer, which must go through the list of vertices and check the coloring. However, the coloring can still be checked by asking the two proving different, but related questions.

In the MIP, the verifier has enough memory to run a program that allows us to determine if the two vertices of the graph are connected by an edge — and he can verify the answers of those proving to make sure the coloring is correct.

Expansion of the list of tasks that are difficult to solve, but easy to verify, from NP to IP and MIP, classic computers were engaged. But quantum computers work very differently. And for several decades it was not clear how their use changes this picture - is it easier, or is it more difficult to check decisions with their help?

In the new work of Natarajan and Wright there is an answer to this question.

Quantum tricks

Quantum computers perform calculations using quantum bits or qubits. They have such a property as entanglement with each other. And when two qubits — or even large qubit systems — are entangled, this means that their physical properties influence each other in a certain way.

In their new work, Natarajan and Wright are considering a scenario that includes two different quantum computers, with the quits of one confusing with the quits of the other.

It would seem that such an installation degrades the quality of checking answers. The whole power of interactive evidence with a lot of evidence comes precisely from the fact that you can interrogate two separate proofs and check their answers. If the answers of those proving are the same, then they are most likely correct. But if the quantum states of the two provers are confused, they would seem to have more opportunities to consistently insist on the correctness of the wrong answers.

Indeed, when a scenario with two intricate quantum computers was first made public in 2003, computer scientists suggested that entanglement would reduce the scope for verification. “The obvious reaction of everyone, including mine, was that those who prove in this case have more opportunities,” said Vidik. “They can use confusion to link their answers.”

But, despite the initial pessimism, Vidic spent several years trying to prove the opposite. In 2012, he and Tsuyoshi Ito proved that there is an opportunity to test all tasks in MIP using intricate quantum computers.

And now Natarajan and Wright have proved that the situation is even better: with the help of entanglement, an even larger class of problems can be proved than without it. You can turn the connection between intricate quantum computers for the benefit of the verifier.

To understand how to do this, we recall the procedure from MIP to check the coloring of graphs whose sizes grow exponentially. The verifier does not have enough memory to store the graph as a whole, but he has enough memory to determine two connected vertices, and to ask the verifiers about the color of these vertices.

In the class of problems considered by Natarajan and Wright - called NEEXP, non-deterministic twice exponential time - the sizes of graphs grow even faster than in MIP. NEEXP graphs grow at a “double exponential rate.” Instead of growing as a power of two - 2 1 , 2 2 , 2 3 , 2 4 , and so on - the number of vertices in the graphs grows, as the power of a power of two - 2 2 1 , 2 2 2 , 2 2 3 , 2 2 4 , and so on. As a result, the graphs quickly turn out so huge that the verifier is no longer even able to find a pair of connected vertices.

“To identify a vertex, you need 2 n bits, which is exponentially larger than the examiner has in memory,” said Natarajan. However, Natarajan and Wright proved that it is possible to check the coloring of a double-exponential graph in three colors, even without the ability to determine which vertices you need to ask questions of proving. The fact is that you can make the proving themselves choose the right questions.

The idea of forcing computers to interrogate themselves about their own decisions, for computer scientists, sounds about as reasonable as asking suspects in crimes to interrogate themselves - definitely a stupid sentence. Only Natarajan and Wright proved that it is not. And the reason for this is confusion.

"Entangled states are shared resources," said Wright. “Our entire protocol is to understand how to use this shared resource to create interrelated questions.”

If quantum computers are confused, then their choice of vertices will correlate, and give just the right set of questions to check the coloring in three colors.

At the same time, the verifier does not need to have two quantum computers intertwined so that their answers to these questions correlate with each other (it would be similar to how the two crime suspects correlate their false alibis). This problem is dealt with by another strange quantum feature. In quantum mechanics, the principle of uncertainty forbids us to simultaneously accurately know the location and moment of a particle - if you measure one property, then you destroy information about another. The uncertainty principle severely limits your knowledge of any two “complementary” properties of a quantum system.

Natarajan and Wright take advantage of this in their work. To calculate the color of the vertex, they make two quantum computers carry out complementary measurements. Each computer calculates the color of its own vertex, and thus destroys all the information about the other. In other words, confusion allows computers to produce correlating questions, but the principle of uncertainty prohibits them from colluding in answering them.

“We need to make those proving to forget, and this is the main thing Natarajan and Wright did in their work,” said Vidik. “They are forcing the prover to erase the information through a measurement.”

The consequences of their work can be called almost existential. Before the release of the work, the limit on knowledge that we can get with full confidence was much smaller. If we were given an answer to a task from the NEEXP set, we would only have to accept it on faith. But Natarajan and Wright escaped beyond these boundaries, and made it possible to confirm the answers from where to a more extensive universe of computational problems.

And now, when they did, it is unclear where the next border of verifiability lies. “It may be much further,” said Lance Fortnau, an IT specialist from the Georgia Institute of Technology. “They leave open the possibility to take the next step.”

Source: https://habr.com/ru/post/460215/

All Articles