Dog ate on neural networks

You see a dog on the street. You always see dogs on the street. Some people don't notice them at all and have no worries, none of these pangs of conscience, and we can assume that for them everything on the street is fine. But not you! You noticed, your eyes welled up, and you thought: "A dog. Aw, so pretty, I would take it, but I can't. I really can't, and that's that." And you walked on. But you did take a photo of it and pet it.

From kind souls like this, we need the location at that moment and a couple of photos dropped into our system.

Then there are those who badly want to find their dog that ran off to mate, or some other little one of theirs. These people definitely want to find their pet. They post ads themselves and comb through courtyards, basements, and various websites.

2019 calls for more technological solutions. Our project, with the working title PetSI (PetSearchInstrument), is exactly that.

Concept

As part of the Machine Learning for Social Good initiative of the Open Data Science community, we, together with 9851754 and our team, are building a service for finding missing animals. The owner provides a photo of the animal, the address where it was lost, and other characteristics, and in return receives the reports of found or sighted animals that our algorithm considers most relevant.

In brief, the service works as follows: we aggregate data (photos, location, breed, etc.) from several sites, convert the images into vectors with a neural network, fit a kNN model, and show the nearest neighbors of the submitted photo. You find the lost animal, the animal returns home. Everyone is happy :)
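The nearest-neighbor step described above can be illustrated with a small sketch. It is not our production code: the ad identifiers and three-dimensional vectors are made up for illustration, the embeddings would in practice come from a neural network, and a brute-force Euclidean search stands in for whatever kNN implementation a real service would use.

```python
import math
from typing import Dict, List, Sequence, Tuple


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_neighbors(
    query: Sequence[float],
    index: Dict[str, Sequence[float]],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Return the k ads whose vectors are closest to the query vector."""
    scored = [(ad_id, euclidean(query, vec)) for ad_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1])  # smallest distance first
    return scored[:k]


# Hypothetical embeddings of "found animal" ads (assumed, not real data).
found_pets = {
    "ad-1": [0.9, 0.1, 0.0],
    "ad-2": [0.1, 0.8, 0.1],
    "ad-3": [0.8, 0.2, 0.1],
}

# Hypothetical embedding of the photo submitted by the owner.
lost_pet_vector = [1.0, 0.0, 0.0]

matches = nearest_neighbors(lost_pet_vector, found_pets, k=2)
for ad_id, distance in matches:
    print(ad_id, round(distance, 3))
```

Brute force is fine for a toy index like this; with tens of thousands of photos, an indexed or approximate search would probably be the better choice.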

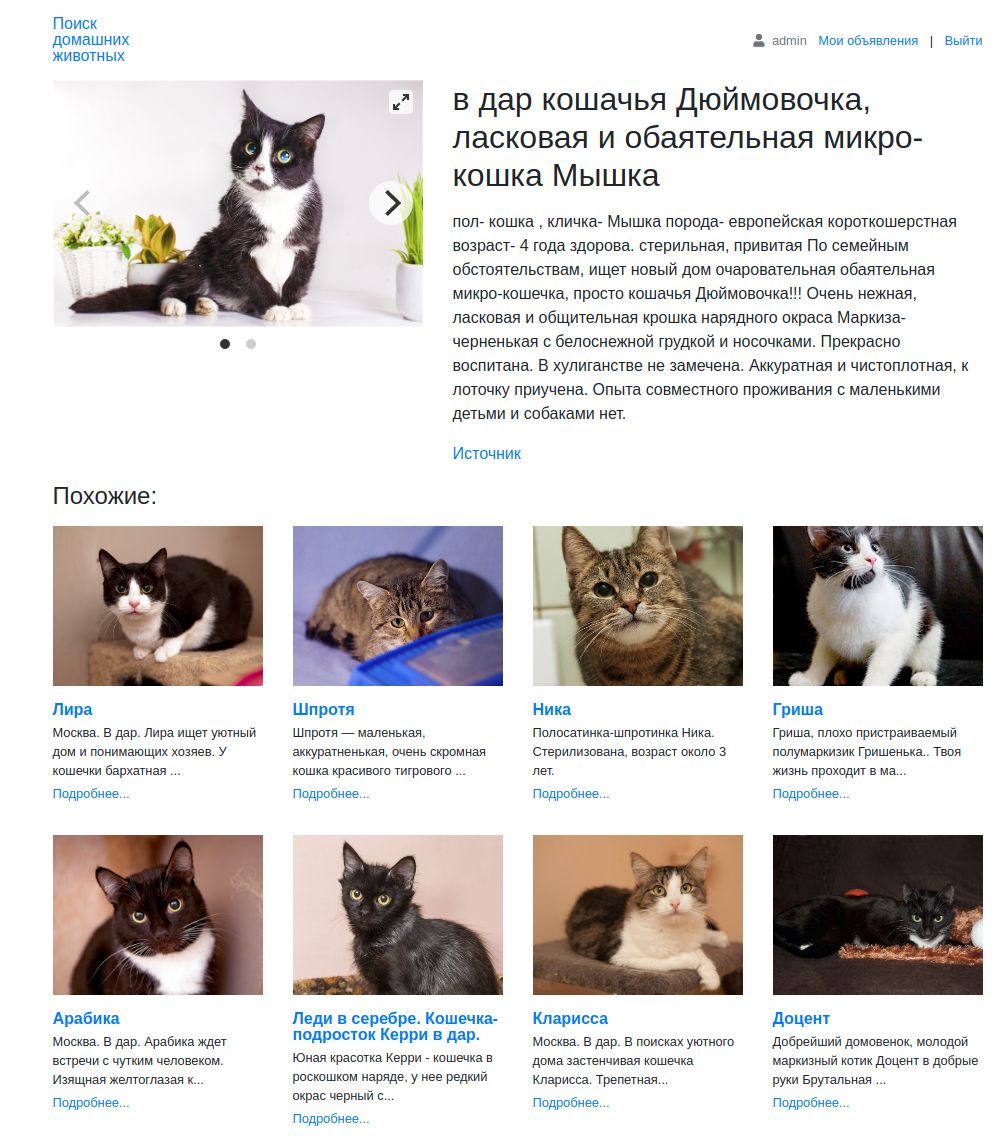

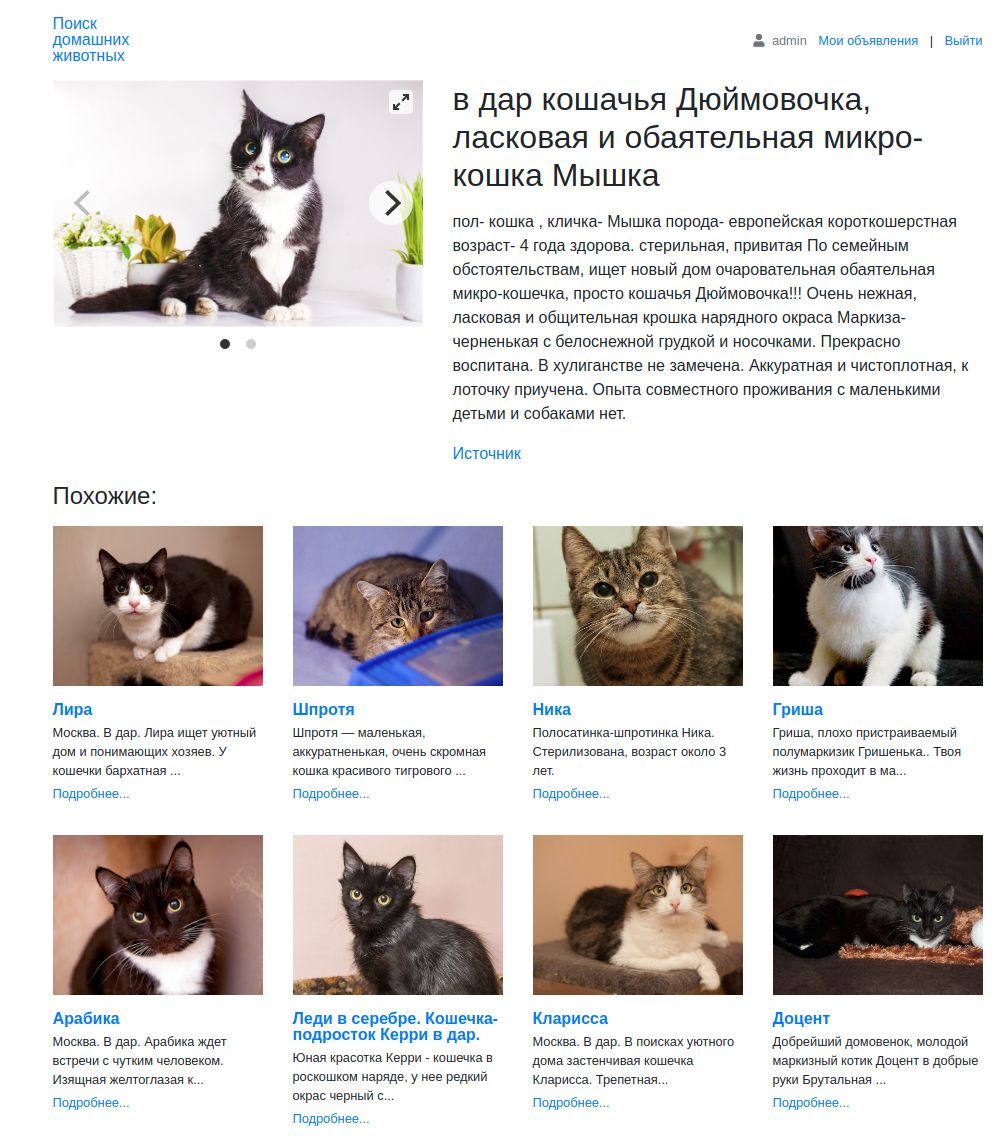

In addition to searching for missing pets, we are developing a recommender system to speed up the adoption of shelter animals by new owners. Since we do not yet have statistics on user behavior on the site, we use content-based recommendations based on visual similarity.

What we already have

- Excellent team of beginners and experienced professionals;

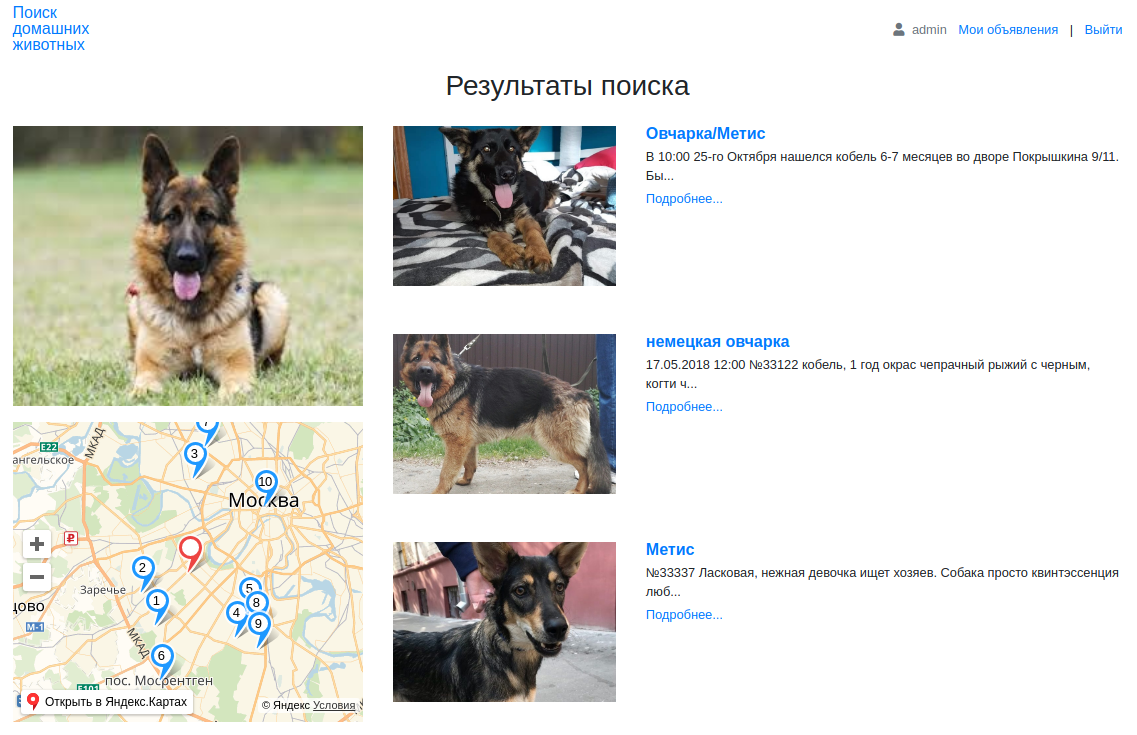

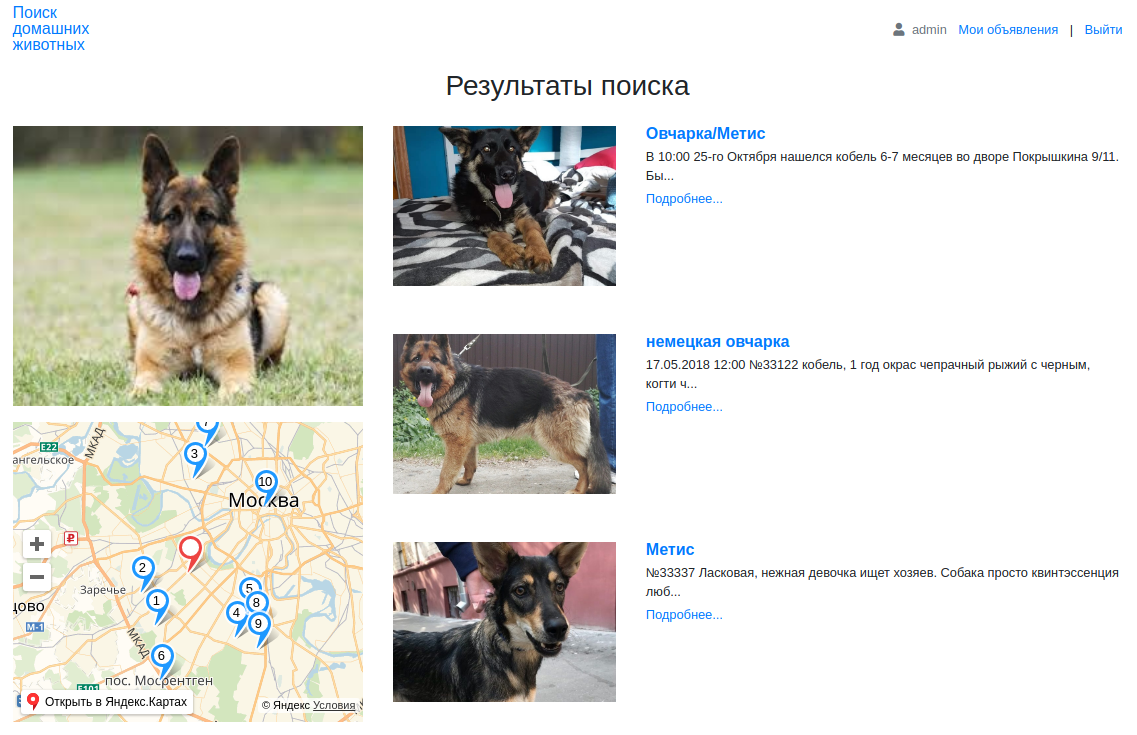

- http://petsiai.ru - a working prototype with a web interface for uploading a photo of an animal and displaying similar ones (the prototype will be available within a few days after publication);

- Automated pipeline for the collection and processing of information;

- A regularly updated database of animals (about 11,000 dogs and 6,000 cats).

Under the hood

Development is done in Python. We use the following technology stack:

- Docker, Gitlab CI / CD for application deployment;

- Google Kubernetes Engine for hosting our services and applications;

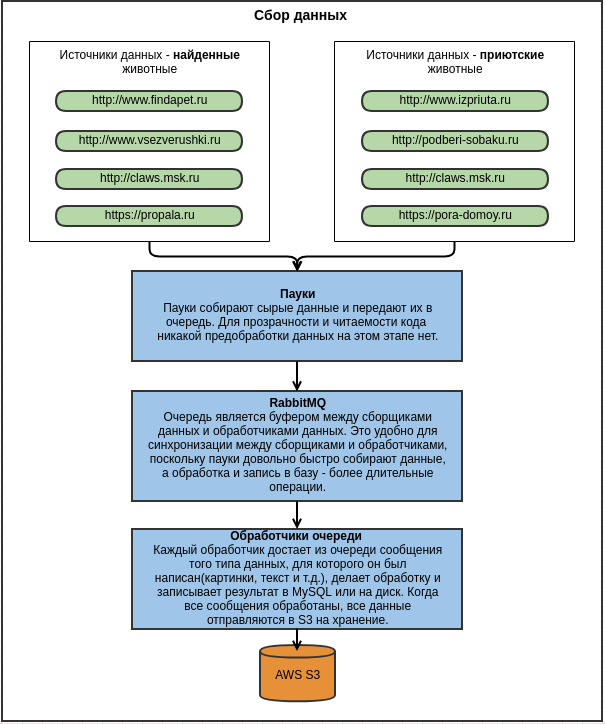

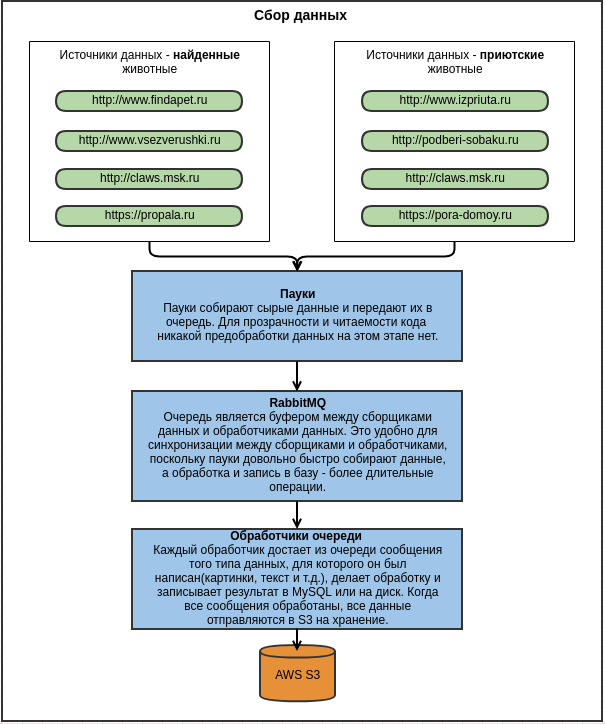

- Scrapy, RabbitMQ for data collection;

- Sklearn, keras for ML;

- Django, Flask, Bootstrap for the site;

- Elasticsearch for text search.

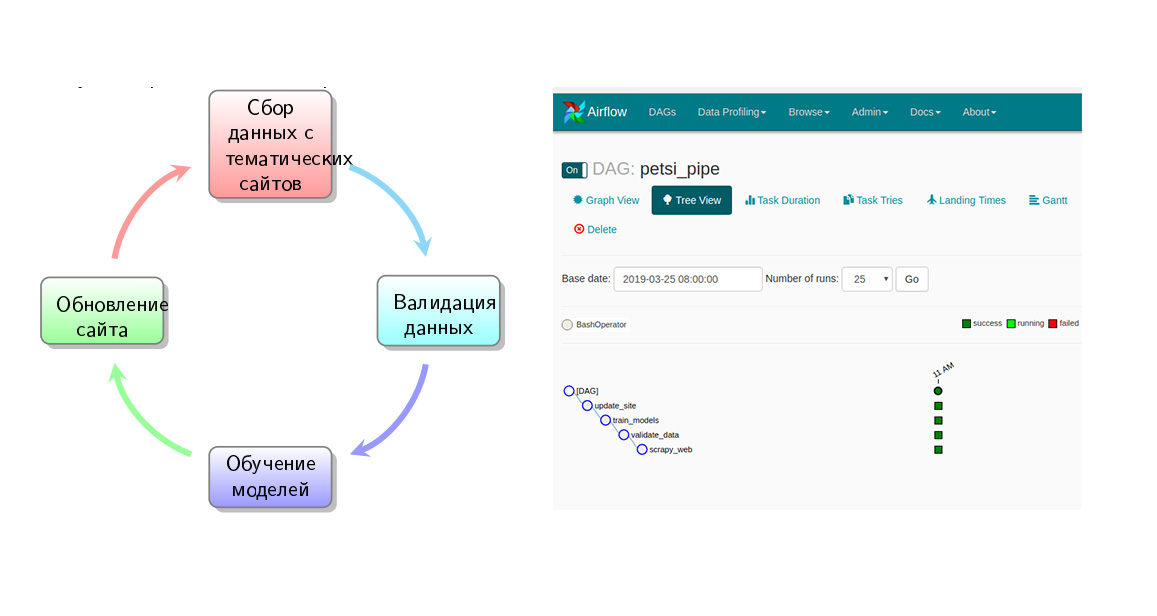

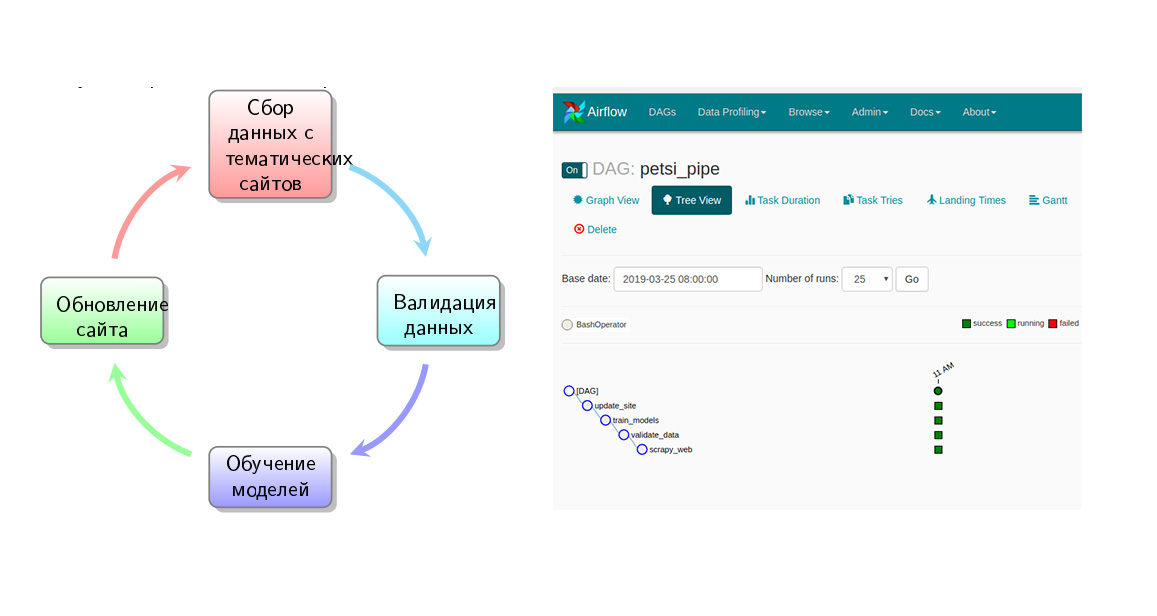

We use Airflow to synchronize all stages of our workflow.

Data collection is multistage. First, the spiders collect information and push it into a queue in raw form. On the other side of the queue, dedicated handlers convert the data into the required form (for example, turning text into an address) and add it to the DBMS.
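The two-stage flow above can be sketched roughly as follows. This is only an illustration under loud assumptions: the standard-library `queue.Queue` stands in for RabbitMQ, a plain list stands in for the DBMS, and `PetRecord`, `spider`, `handler`, and the toy `extract_address` rule are hypothetical names invented for the example.

```python
import queue
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PetRecord:
    species: str
    address: Optional[str]


def spider(raw_queue: "queue.Queue[dict]", pages: List[dict]) -> None:
    """Stage 1: push scraped items into the queue unchanged."""
    for page in pages:
        raw_queue.put(page)


def extract_address(text: str) -> Optional[str]:
    """Toy address extraction: take whatever follows the word 'near'."""
    marker = "near "
    pos = text.find(marker)
    if pos == -1:
        return None
    return text[pos + len(marker):].strip(" .")


def handler(raw_queue: "queue.Queue[dict]", database: List[PetRecord]) -> None:
    """Stage 2: drain the queue, normalize each item, and store it."""
    while True:
        try:
            item = raw_queue.get_nowait()
        except queue.Empty:
            break
        record = PetRecord(
            species=item["species"].lower(),
            address=extract_address(item["text"]),
        )
        database.append(record)


raw: "queue.Queue[dict]" = queue.Queue()
db: List[PetRecord] = []

spider(raw, [
    {"species": "Dog", "text": "Found a red dog near Lenina St 5."},
    {"species": "CAT", "text": "Grey cat seen yesterday"},
])
handler(raw, db)
print(db)
```

Keeping the spiders free of any parsing logic means a failed conversion never loses the raw item; it can be re-queued and handled again once the handler is fixed.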

The collected data is validated and passed on to model training. A dedicated service written for the site loads the new data and models and triggers recalculation of the search results for each user. The whole cycle takes about 8 hours.
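The order of the stages in this cycle can be expressed as a dependency graph. The sketch below is not an Airflow DAG: it uses the standard-library `graphlib` (Python 3.9+) as a stand-in, and the stage names and no-op tasks are hypothetical, chosen only to mirror the steps described above.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

# Hypothetical stage names; each maps to the set of stages it depends on.
STAGES: Dict[str, Set[str]] = {
    "collect": set(),
    "normalize": {"collect"},
    "validate": {"normalize"},
    "train_model": {"validate"},
    "reload_site_service": {"train_model"},
    "recompute_search_results": {"reload_site_service"},
}


def run_cycle(
    stages: Dict[str, Set[str]],
    tasks: Dict[str, Callable[[], None]],
) -> List[str]:
    """Run every task once, never before the stages it depends on."""
    executed: List[str] = []
    for name in TopologicalSorter(stages).static_order():
        tasks[name]()
        executed.append(name)
    return executed


log: List[str] = []
# Placeholder tasks that only record their own name.
tasks = {name: (lambda n=name: log.append(n)) for name in STAGES}

order = run_cycle(STAGES, tasks)
print(" -> ".join(order))
```

A scheduler such as Airflow adds what this sketch leaves out: retries, scheduling, and monitoring of each stage.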

How we use machine learning and data analysis:

- Determining the animal's sex from text;

- Identifying the breed from text;

- Extracting addresses from messages;

- Image segmentation;

- Converting images to vectors and ANN.
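To give a feel for the first item in the list, here is a deliberately naive baseline for guessing an animal's sex from the text of an ad. It is a rule-based stand-in, not our model: the keyword sets and the `guess_sex` function are assumptions made for illustration, and a trained text classifier would replace them in practice.

```python
import re
from typing import Optional

# Hypothetical keyword lists; a real system would learn these from data.
MALE_WORDS = {"male", "boy", "he", "him", "his"}
FEMALE_WORDS = {"female", "girl", "she", "her", "hers"}


def guess_sex(text: str) -> Optional[str]:
    """Return 'male', 'female', or None when the text gives no clear signal."""
    tokens = re.findall(r"[a-z]+", text.lower())  # whole words only
    male = sum(token in MALE_WORDS for token in tokens)
    female = sum(token in FEMALE_WORDS for token in tokens)
    if male == female:
        return None
    return "male" if male > female else "female"


print(guess_sex("Found a dog, she is very friendly, her collar is red"))
print(guess_sex("Lost boy, he answers to Rex"))
print(guess_sex("Grey cat near the park"))
```

A baseline like this is mainly useful as a sanity check: a learned model that cannot beat it on labeled ads probably has a data problem.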

Immediate plans

- Prove that the project works and reunite the first owner with a lost pet;

- Develop cooperation with shelters and simplify the matching of pets with new owners;

- Cooperate with other resources and make the service as user-friendly as possible.

Join us!

For further development we need:

- Fighters for the frontend and backend armies;

- ML specialists;

- Data engineers and system administrators to keep the pipeline running;

- DevOps engineers to deploy applications on k8s and help administer it;

- Experts at working with spiders (Scrapy);

- Journalists to popularize the project;

- Python programmers.

Why you might want this:

- Social responsibility, if that interests you. Maybe you like doing good?

- A real project where you can apply your cool skills or level them up;

- You're a bit odd and go wherever you're invited. That's a fine option too;

- You have searched for a pet yourself and know what it's like;

- Or you are the one who can't just walk past what you see on the street but doesn't know what to do about it.

We have a plan. We need people on the team.

Send a private message or fill in the form and join!

Instead of a conclusion

Finally, we prepared a selection of meme dogs and look-alike dogs from shelters that we found using our algorithms.

Source: https://habr.com/ru/post/459988/
