Parsing tasks from the Hydra conference - load balancing and in-memory storage

A few days ago there was a Hydra conference . The guys from the JUG.ru Group invited dream speakers (Leslie Lamport! Cliff Click! Martin Kleppmann!) And dedicated two days to distributed systems and computing. The contour was one of the three partners of the conference. We talked on the stand, talked about our distributed storage, played bingo, solved puzzles.

This is a post with an analysis of tasks on the Contour stand from the author of their text. Who was on the Hydra is your reason to remember pleasant impressions, who was not - a chance to stretch your brains with a big O- notation.

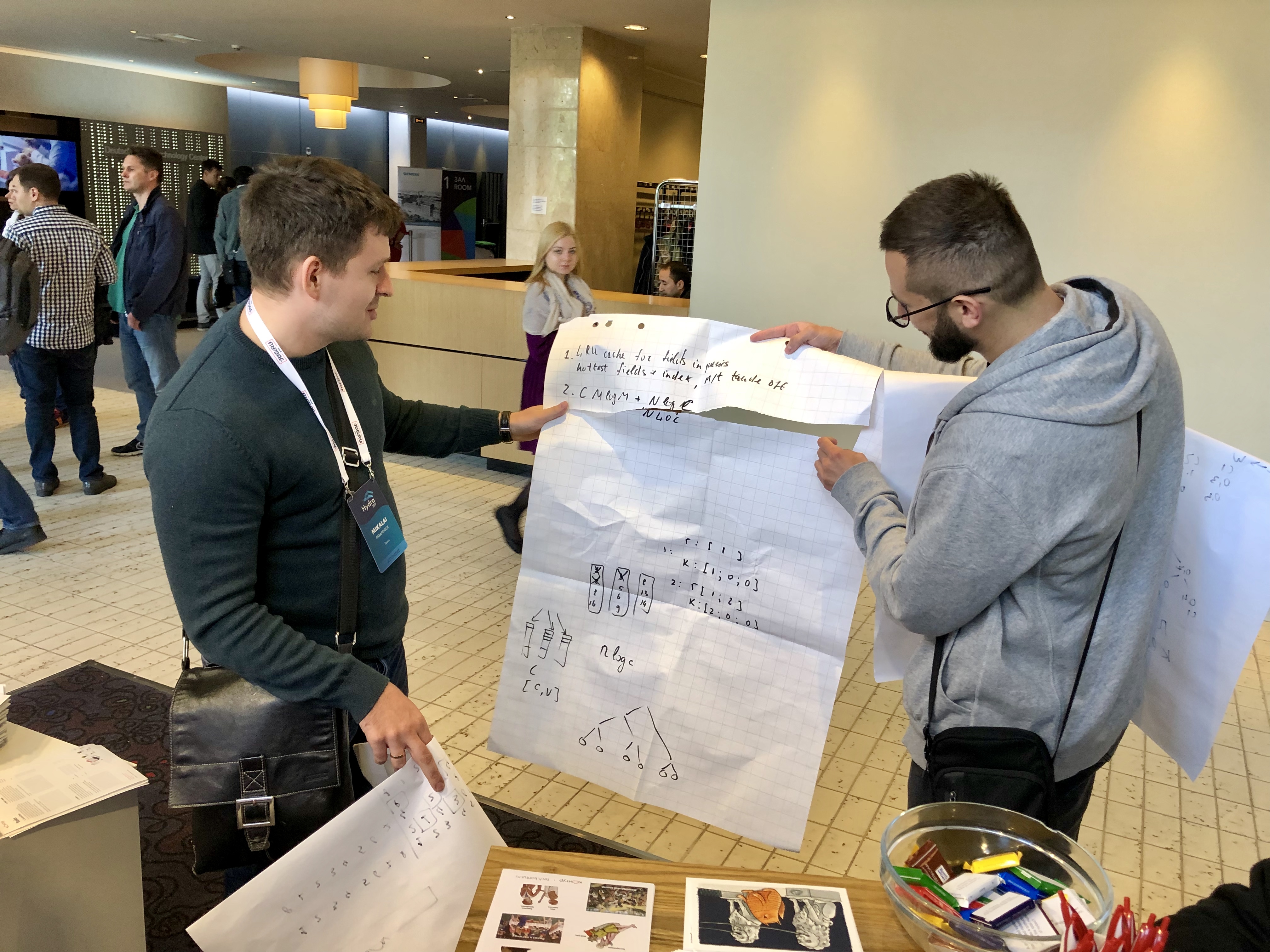

There were even participants who disassembled a flipchart on the slides to record their decision. I am not joking - they handed over for checking just such a stack of paper:

In total there were three tasks:

- about choosing replicas on weights for load balancing

- about sorting query results to in-memory database

- on state transfer in a distributed system with ring topology

Task 1. ClusterClient

It was necessary to propose an algorithm for effectively choosing K from N weighted replicas of the distributed system:

There is a massively distributed cluster of N nodes. Metadata associated with nodes (eg, their latencies, 4xx / 5xx response rates, etc.). If you are not selected, you must be able to choose the right way to choose a node.

Propose an algorithm to select nodes efficiently. Estimate its computational complexity using big O notation.

Because in such a way the participants of the conference struggled with them and because English was the official language of Hydra. Tasks looked like this:

Take a paper and a pencil, think, do not rush to immediately open spoilers :)

Beginning at 5:53, just 4 minutes:

But how the guys with the flipchart pitch their decision:

There is such a solution on the surface: sum up the weights of all the replicas, generate a random number from 0 to the sum of all weights, then choose an i-replica such that the sum of the replica weights from 0 to (i-1) -th is less than the random number, and the sum of the replica weights from 0 to i-th is greater than him. So it turns out to choose one replica, and to select the next one, you need to repeat the whole procedure, without considering the selected replica. With this algorithm, the complexity of choosing one replica is O (N), the complexity of choosing K replicas is O (N · K) ~ O (N 2 ).

Quadratic complexity is bad, but it can be improved. For this, we construct a segment tree for the weights sums. You will get a tree with a depth of lg N, in the leaves of which there will be replica weights, and in the rest of the nodes - partial sums, up to the sum of all weights in the root of the tree. Next, we generate a random number from 0 to the sum of all weights, find the i-th replica, remove it from the tree and repeat the procedure to find the remaining replicas. With this algorithm, the complexity of building a tree is O (N), the complexity of finding the i-th replica and removing it from the tree is O (lg N), the complexity of choosing K replicas is O (N + K lg N) ~ O (N lg N) .

Linear-logarithmic complexity is more pleasant than quadratic, especially for large K.

This algorithm is implemented in the code of the ClusterClient library from the Vostok project. (There the tree is built as O (N lg N), but this does not affect the final complexity of the algorithm.)

Task 2. Zebra

It was necessary to propose an algorithm for efficient sorting of documents in memory by an arbitrary non-indexed field:

Your team is tasked with in-memory document database. If necessary, you can select the number of fields for a sample of the number of M (usually N <100 << M). A bit less common workload after skipping top documents (S ~ N).

Propose an algorithm to execute such queries efficiently. Estimate of computational complexity of scenarios.

Beginning at 34:50, just 6 minutes:

Surface solution: sort all documents (for example, using quicksort ), then take N + S documents. In this case, the complexity of sorting is on average O (M lg M), at worst - O (M 2 ).

It is obvious that sorting all M documents in order to take only a small part from them is inefficient. In order not to sort all documents, the quickselect algorithm is suitable , which will select the N + S documents you need (they can be sorted by any algorithm). In this case, the complexity will decrease to O (M) on average, and the worst case will remain the same.

However, you can make it even more efficient - use the binary heap streaming algorithm. In this case, the first N + S documents are added to the min- or max-heap (depending on the sorting direction), and then each next document is compared with the root of the tree, which contains the minimum or maximum document at the current time, and if necessary is added to the tree . In this case, the complexity in the worst case, when you have to constantly rebuild the tree - O (M lg M), the complexity on average - O (M), as when using quickselect.

However, heap streaming is more efficient due to the fact that in practice most of the documents can be discarded without rebuilding the heap, after a single comparison with its root element. Such sorting is implemented in the Zebra document in-memory database developed and used in the Contour.

Task 3. State swaps

It was necessary to propose the most efficient algorithm for the state shift:

Your team has been tied up with a cluster of N nodes. The i-th node's state should not be transferred to the first node. It is supported by the two states exchange atom states atomically. It is known that a state swap takes M milliseconds. For every moment.

')

How long does it take for all the nodes in a cluster?

Surface solution: exchange the states of the first and second element, then the first and third, then the first and fourth, and so on. After each exchange, the state of one element will be in the desired position. You have to do O (N) permutations and spend O (N · M) time.

Linear time is long, so you can exchange the states of elements in pairs: the first with the second, the third with the fourth, and so on. After each exchange, the state of each second element will be in the desired position. You have to do O (lg N) permutations and spend O (M lg N) time.

However, the shift can be made even more efficient - not in a linear, but in a constant time. To do this, the first step is to exchange the state of the first element with the last, the second with the last but one, and so on. The state of the last element will be in the desired position. And now you need to exchange the state of the second element with the last, third with the last but one and so on. After this round of exchanges, the status of all elements will be in the desired position. A total of O (2M) ~ O (1) permutations will be done.

Such a decision would not surprise a mathematician who still remembers that a turn is a composition of two axial symmetries. By the way, it is trivially generalized for a shift not by one, but by K <N positions. (Write in the comments exactly how.)

Do you like puzzles? Know other solutions? Share in the comments.

And here are some useful links at last:

- learn more about infrastructure development in Contour

- look at the records of internal batches with reports on distributed systems

- watch the cycle of video lectures “ Unusual algorithms for ordinary people ”

- subscribe to our channel in Telegram

Source: https://habr.com/ru/post/459958/

All Articles