Deep Learning: An Overview

Abstract. Deep learning is an advanced area of machine learning (ML) research. It is based on artificial neural networks with several hidden layers. The deep learning methodology applies nonlinear transformations and high-level model abstractions to large databases. Recent advances in implementing deep learning architectures in numerous areas have already made a significant contribution to the development of artificial intelligence. This article presents a state-of-the-art survey of the contributions and new applications of deep learning. The overview below shows, in chronological order, how and in which of the most significant applications deep learning algorithms have been used. In addition, it presents the benefits of the deep learning methodology, with its multi-layered hierarchy and nonlinear operations, and compares them with more traditional algorithms in conventional applications. A review of recent advances in the field further reveals the general concepts, ever-growing benefits and popularity of deep learning.

1. Introduction

Artificial intelligence (AI), the intelligence exhibited by machines, is an effective approach to understanding human learning and reasoning [1]. In 1950, the "Turing Test" was proposed as a satisfactory explanation of how a computer could reproduce human cognitive reasoning [2]. As a field of study, AI is divided into more specific subdomains. For example, natural language processing (NLP) [3] can improve the quality of writing in various applications [4,17]. The most classic subfield of NLP is machine translation, that is, translation between languages. Machine translation algorithms have contributed to the emergence of various applications that take grammatical structure and spelling errors into account. Moreover, general vocabulary and vocabulary related to the topic of the material are automatically used as the main source when the computer suggests changes to the author or editor [5]. Fig. 1 shows in detail how AI encompasses seven areas of computer science.

Recently, machine learning and data mining have come into the spotlight and have become the most popular topics in the research community. Together, these research areas explore the many possibilities for characterizing databases [9]. Over the years, databases have been collected for statistical purposes. Statistical curves can describe the past and the present in order to predict future behavior. Over the past decades, however, only classical methods and algorithms were used to process this data, while optimizing these algorithms could form the basis for effective self-learning [19]. An improved decision-making process can be built on existing values, multiple criteria and advanced statistical methods. One of the most important applications of this optimization is therefore medicine, where symptoms, causes and medical decisions form large databases that can be used to determine the best treatment [11].

Fig. 1. Research in the field of artificial intelligence (AI) Source: [1].

Since ML covers a wide range of research, many approaches have already been developed. Clustering, Bayesian networks, deep learning and decision tree analysis are only some of them. The following review focuses mainly on deep learning, its basic concepts, and its proven and modern applications in various fields. In addition, it presents several figures reflecting the rapid growth in recent years of deep learning publications in scientific databases.

2. Theoretical foundations

The concept of deep learning (DL) first appeared in 2006 as a new area of research in machine learning. At first it was known as hierarchical learning [2], and it typically covered many research areas related to pattern recognition. Deep learning mainly relies on two key factors: nonlinear processing in several layers or stages, and supervised or unsupervised learning [4]. Nonlinear multi-layer processing refers to an algorithm in which the current layer takes the output of the previous layer as its input. A hierarchy is established between the layers to rank the importance of the data whose usefulness is to be determined. Supervised and unsupervised learning, on the other hand, is tied to the class labels of the target: their presence implies a supervised system, and their absence an unsupervised one.
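The layer-by-layer processing described above can be illustrated with a minimal sketch. It is not taken from the reviewed papers: the function names, the `tanh` nonlinearity and the hand-picked weights are assumptions chosen only to show how each layer consumes the output of the previous one.

```python
import math


def dense_layer(inputs, weights, biases):
    """One layer: a weighted sum of the inputs followed by a tanh nonlinearity."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the output of each layer into the next one."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical, hand-picked weights: 2 inputs -> 3 hidden units -> 1 output.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]
output = forward([1.0, 2.0], layers)
```

In a supervised setting the weights would be adjusted so that `output` matches a known class label; without labels, the same stack of layers would have to be trained on the structure of the inputs alone.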

3. Applications

Deep learning involves layers of abstract analysis and hierarchical methods. Nevertheless, it can be used in numerous real-world applications. One example comes from digital image processing: black-and-white images used to be colorized manually by users who had to choose each color based on their own judgment. With a deep learning algorithm, colorization can be performed automatically by a computer [10]. In the same way, sound can be added to silent videos of drumming using recurrent neural networks (RNNs), which are part of the deep learning methods [18].

Deep learning can be seen as a method for improving results and optimizing processing time in several computational processes. In the field of natural language processing, deep learning methods have been applied to generate captions for images [20] and to generate handwritten text [6]. The applications below are grouped into areas such as digital image processing, medicine, and biometrics.

3.1 Image Processing

Before deep learning was formally established as a new research approach, some applications were implemented within the pattern recognition concept through layered processing. In 2003, an interesting example was developed using particle filtering and the Bayesian belief propagation algorithm. The basic concept of this application is that a person can recognize another person's face by seeing only half of the face image [14], so a computer can reconstruct the face image from a cropped image.

Later, in 2006, a greedy algorithm and a hierarchy were combined in an application capable of processing handwritten digits [7]. Recent studies have applied deep learning as the primary tool for digital image processing. For example, using convolutional neural networks (CNNs) for iris recognition may be more effective than using conventional sensors. The accuracy of a CNN can reach 99.35% [16].

Mobile location recognition now allows a user to find out a specific address from an image. The SSPDH (Supervised Semantics-Preserving Deep Hashing) algorithm was a significant improvement over VHB (Visual Hash Bit) and SSFS (Space-Saliency Fingerprint Selection). SSPDH is reported to be as much as 70% more accurate [15].

Finally, another notable application of deep learning in digital image processing is face recognition. Google, Facebook and Microsoft each have their own deep learning face recognition models [8]. Recently, identification based on a face image has evolved into automatic recognition that uses age and gender as initial parameters. Sighthound Inc., for example, tested a deep convolutional neural network algorithm that can recognize not only age and gender, but even emotions [3]. In addition, a reliable system was developed that accurately determines a person's age and gender from a single image by applying a deep multi-task learning architecture [21].

3.2 Medicine

Digital image processing is undoubtedly an important research area where deep learning can be applied. Clinical applications have recently been tested in the same way. For example, a comparison between shallow learning and deep learning in neural networks showed that deep learning performs better at predicting diseases. Magnetic resonance imaging (MRI) scans [22] of the human brain were processed to predict possible Alzheimer's disease [3]. Despite the rapid success of this procedure, some problems should be seriously considered for future applications. One limitation is that training depends on high-quality data. The volume, quality and complexity of the data are challenging aspects, but the integration of heterogeneous data types is a promising aspect of the deep learning architecture [17, 23].

Optical coherence tomography (OCT) is another example where deep learning methods show significant results. Traditionally, the images are processed using manually designed convolution matrices [12]. Unfortunately, the lack of training sets limits the deep learning approach. Within a few years, however, the introduction of improved training sets is expected to allow effective prediction of retinal pathologies and to reduce the cost of OCT technology [24].
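To make the idea of a manually designed convolution matrix concrete, here is a minimal sketch. The kernel values and the tiny test image are illustrative assumptions, not data from the cited OCT studies; a CNN differs in that it learns such kernels from training data instead of having them written by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image without padding.

    As in most CNN libraries, the kernel is not flipped, so strictly
    speaking this is a cross-correlation.
    """
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kernel_h)
                for j in range(kernel_w)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hand-crafted kernel that responds to dark-to-bright vertical edges.
vertical_edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

# Hypothetical 4x5 image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]

response = convolve2d(image, vertical_edge_kernel)
# response == [[0, 27, 27], [0, 27, 27]]: strong where the edge lies under the kernel.
```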

3.3 Biometrics

In 2009, an automatic speech recognition application was used to reduce the phone error rate (PER) using two different deep belief network architectures [18]. In 2012, the CNN method [25] was applied within a hybrid neural network-hidden Markov model (NN-HMM). As a result, a PER of 20.07% was achieved, which is better than that of the previously used 3-layer neural network baseline [26]. Smartphones and the resolution of their cameras have also been tested for iris recognition. With mobile phones from various manufacturers, the accuracy of iris recognition can reach up to 87% [22,28].
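For readers unfamiliar with the metric, the Phone Error Rate is commonly computed as the edit distance (substitutions, deletions and insertions) between the recognized phone sequence and the reference sequence, divided by the length of the reference. The sketch below follows that common definition; the function names and the example phone sequences are hypothetical and are not taken from the cited experiments.

```python
def edit_distance(reference, hypothesis):
    """Levenshtein distance between two sequences of phone labels."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_phone in enumerate(reference, start=1):
        current = [i]
        for j, hyp_phone in enumerate(hypothesis, start=1):
            substitution = previous[j - 1] + (ref_phone != hyp_phone)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1]


def phone_error_rate(reference, hypothesis):
    """Edit distance divided by the number of reference phones."""
    if not reference:
        raise ValueError("reference sequence must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


# Hypothetical example: one substitution and one deletion over four phones.
reference = ["k", "ae", "t", "s"]
hypothesis = ["k", "ah", "t"]
per = phone_error_rate(reference, hypothesis)  # 0.5, i.e. 50%
```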

In security, and especially in access control, deep learning is used in conjunction with biometric characteristics. DL was used to accelerate the development and optimization of FaceSentinel face recognition devices. According to the manufacturer, its devices could be extended from one-to-one to one-to-many identification within nine months [27]. Without DL, this engine enhancement could have taken 10 man-years, so DL accelerated the production and launch of the equipment. These devices are used at London Heathrow Airport, and can also be used for time and attendance tracking and in the banking sector [3, 29].

4. Overview

Table 1 summarizes several deep learning applications implemented in previous years. It mainly covers speech recognition and image processing. This review covers only some of the long list of applications.

Table 1. Applications of deep learning, 2003–2017

| Year | Application |
|------|-------------|
| 2003 | Hierarchical Bayesian inference in the visual cortex |
| 2006 | Digit classification |
| 2006 | Deep belief network for phone recognition |
| 2012 | Speech recognition from multiple sources |
| 2015 | Iris recognition using smartphone cameras |
| 2016 | Mastering the game of Go with deep neural networks and tree search |
| 2017 | Iris sensor model identification |

4.1 Analysis of publications per year

Fig. 1 shows the number of publications on deep learning in the ScienceDirect database per year from 2006 to June 2017. The steady increase in the number of publications appears to follow exponential growth.

Fig. 2 shows the total number of publications on deep learning in Springer per year from January 2006 to June 2017. In 2016 there was a sudden increase to 706 publications, which indicates that deep learning is indeed a focus of modern research.

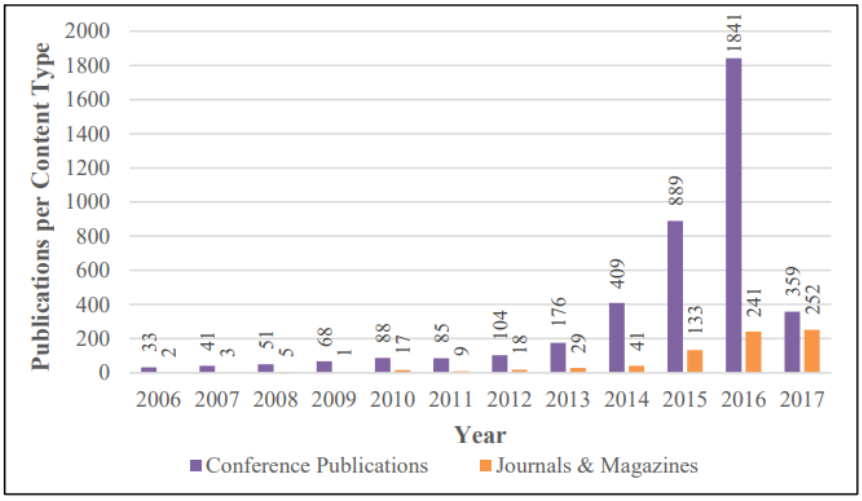

Fig. 3 shows the number of publications in IEEE conferences and journals from January 2006 to June 2017. It is noteworthy that the number of publications has increased significantly since 2015. From 2015 to 2016, the increase is more than 200%.

Fig. 1. Growth in the number of publications on deep learning in the ScienceDirect database (January 2006 - June 2017).

Fig. 2. Growth in the number of publications on deep learning in the Springer database (January 2006 - June 2017).

Fig. 3. Growth in the number of publications on deep learning in the IEEE database (January 2006 - June 2017).

5. Conclusions

Deep learning is a rapidly growing application of machine learning. The numerous applications described above demonstrate its rapid development in just a few years. The use of these algorithms in different areas shows their versatility. The analysis of publications carried out in this study clearly demonstrates the relevance of this technology and illustrates the growth of deep learning and the trends for future research in this area.

In addition, it is important to note that the hierarchy of layers and supervision in learning are key factors in developing a successful deep learning application. The hierarchy is important for appropriate data classification, while supervision takes into account the importance of the database itself as part of the process. The core value of deep learning lies in optimizing existing machine learning applications through its innovative hierarchical processing. Deep learning can provide effective results in digital image processing and speech recognition. The reduction in error rates (10 to 20%) clearly confirms the improvement over existing and proven methods.

Now and in the future, deep learning can be a useful security tool that combines face and speech recognition. In addition, digital image processing is a research area that can be applied in many other fields. For this reason, and having proved to deliver real optimization, deep learning is a modern and interesting subject in the development of artificial intelligence.

References

- Abdel, O.: Applying convolutional neural networks concepts to a hybrid NN-HMM model for speech recognition. Acoustics, Speech and Signal Processing 7, 4277-4280 (2012).

- Mosavi, A., Varkonyi-Koczy, A. R.: Integration of Machine Learning and Optimization for Robot Learning. Advances in Intelligent Systems and Computing 519, 349-355 (2017).

- Bannister, A.: Biometrics and AI: how FaceSentinel evolves 13 times faster thanks to deep learning (2016).

- Bengio, Y.: Learning deep architectures for AI. Foundations and Trends in Machine Learning 2, 1-127 (2009).

- Mosavi, A., Varkonyi-Koczy, A. R., Fullsack, M.: Combined of Decision-Making. MCDM (2015).

- Deng, L., Yu, D.: Deep learning: methods. Foundations and Trends in Signal Processing 7, 197-387 (2014).

- Goel, B.: Developments in The Field of Natural Language Processing. International Journal of Advanced Research in Computer Science 8, (2017).

- Vaezipour, A., Mosavi, A., Seigerroth, U.: Machine learning, 26th European Conference on Operational Research, Rome (2013).

- Hinton GE, Simon O, Yee-Whye TA. Neural Computation 18, 1527-1554 (2006).

- Hisham, A., Harin, S.: Deep Learning - The New Kid in Artificial Intelligence. (2017).

- Kim, I. W., Oh, M.: Deep learning: from chemoinformatics to precision medicine. Journal of Pharmaceutical Investigation: 1-7 (2017).

- Mosavi, A., Vaezipour, A.: Developing Effective Tools for Predictive Analytics and Informed Decisions. Technical Report. University of Tallinn (2013).

- Mosavi, A., Bathla, Y., Varkonyi-Koczy, A. R.: Predicting the Future Using Web Survey Advances in Intelligent Systems and Computing (2017).

- Mosavi, A., Vaezipour, A.: Reactive Search Optimization; Application to Multiobjective Optimization Problems. Applied Mathematics 3, 1572-1582 (2012).

- Lee, J. G.: Deep Learning in Medical Imaging: General Overview. Korean Journal of Radiology 18 (4), 570-584 (2017).

- Lee, T., David, M.: Hierarchical Bayesian inference in the visual cortex. JOSA 20, 1434-1448 (2003).

- Liu, W.: Deep learning hashing for mobile visual search. EURASIP Journal on Image and Video Processing 17, (2017).

- Marra, F.: A Deep Learning Approach for Iris Sensor Identification. Pattern Recognition Letters (2017).

- Miotto, R., et al.: Deep learning for healthcare: review, opportunities and challenges. Briefings in Bioinformatics (2017).

- Mohamed, A.: Deep belief networks for phone recognition. NIPS workshop on deep learning for speech recognition and related applications: 1, 635-645 (2009).

- Moor, J.: The Turing Test: The Elusive Standard of Artificial Intelligence. Springer Science & Business Media (2003).

- Vaezipour, A., Mosavi, A., Seigerroth, U.: Visual analytics and informed decisions in health and life sciences, International CAE Conference, Verona, Italy (2013).

- Raja, K. B., Raghavendra, R., Vemuri, V. K., Busch, C.: Smartphone based visible iris recognition using deep sparse filtering. Pattern Recognition Letters 57, 33-42 (2015).

- Safdar, S., Zafar, S., Zafar, N., Khan, N. F.: for heart disease diagnosis: a review. Artificial Intelligence Review: 1-17 (2017).

- Mosavi, A., Varkonyi, A.: Learning in Robotics. Learning 157, (2017).

- Xing, J., Li, K., Hu, W., Yuan, C., Ling, H., et al.: accuracy estimation from a single image. Pattern Recognition (2017).

- Mosavi, A., Rabczuk, T.: Learning and Intelligent Optimization for Computational Materials Design Innovation, Learning and Intelligent Optimization, Springer-Verlag, (2017).

- Vaezipour, A., et al.: Visual analytics for informed decisions, International CAE Conference, Verona, Italy, (2013).

- Dehghan, A.: DAGER: Deep Age, Gender and Emotion Recognition Using Convolutional Neural Network 3, 735-748 (2017).

- Mosavi, A.: predictive decision model, 2015, https://doi.org/10.13140/RG.2.2.21094.630472

- Vaezipour, A., et al.: Visual analytics and informed decisions. Paper in Proceedings of the International CAE Conference, Verona, Italy. (2013).

- Vaezipour, A.: Visual analytics for informed decisions, CAE Conference, Italy, (2013).

- Vaezipour, A.: Machine learning integrated optimization for decision making. 26th European Conference on Operational Research, Rome (2013).

- Vaezipour, A.: Visual Analytics for Multi-Criteria Decision Analysis, in CA International Conference, Verona, Italy (2013).

- Mosavi, A., Vaezipour, A.: Developing Effective Tools for Predictive Analytics and Informed Decisions. Technical Report. (2013). https://doi.org/10.13140/RG.2.2.23902.84800

- Mosavi, A., Varkonyi-Koczy, A. R.: Integration of Machine Learning and Optimization for Robot Learning. Advances in Intelligent Systems and Computing 519, 349-355 (2017).

- Mosavi, A., Varkonyi, A.: Learning in Robotics. Learning, 157, (2017).

- Mosavi, A.: Decision-making software architecture; the visualization and data mining assisted approach. International Journal of Information and Computer Science 3, 12-26 (2014).

- Mosavi, A.: The large scale system of multiple criteria decision making; pre-processing. Large Scale Complex Systems Theory and Applications 9, 354-359 (2010).

- Esmaeili, M., Mosavi, A.: Variable reduction for multiobjective optimization using data mining techniques. Computer Engineering and Technology 5, 325-333 (2010).

- Mosavi, A.: Data mining for decision making in engineering optimal design. Journal of AI and Data Mining 2, 7-14 (2014).

- Mosavi, A., Vaezipour, A.: Visual Analytics, Obuda University, Budapest, (2015).

- Mosavi, A., Vaezipour, A.: Reactive Search Optimization; Application to Multiobjective Optimization Problems. Applied Mathematics 3, 1572-1582 (2012).

- Mosavi, A., Varkonyi-Koczy, A. R., Fullsack, M.: Combination of Machine Learning and Optimization for Automated Decision-Making. MCDM (2015).

- Mosavi, A., Delavar, A.: Business Modeling, Obuda University, Budapest, (2016).

- Mosavi, A.: Application of data mining in multiobjective optimization problems. International Journal for Simulation and Multidisciplinary Design Optimization, 5, (2014).

- Mosavi, A., Rabczuk, T.: Learning and Intelligent Optimization for Material Design, Theoretical Computer Science and General Issues, LION11 (2017).

- Mosavi, A.: Visual Analytics, Obuda University, 2016.

- Mosavi, A.: Predictive decision making, Tech Rep 2015. doi: 10.13140/RG.2.2.16061.46561

- Mosavi, A.: Predictive Decision Making, Predictive Decision Model, Tech. Report. (2015). https://doi.org/10.13140/RG.2.2.21094.63047

- Mosavi, A., Lopez, A., Varkonyi-Koczy, A.: Industrial Applications of Big Data: State of Art Survey, Advances in Intelligent Systems and Computing, (2017).

- Mosavi, A., Rabczuk, T., Varkonyi-Koczy, A.: Reviewing the Novel Machine Learning Tools for Materials Design, Advances in Intelligent Systems and Computing, (2017).

- Mousavi, S., Mosavi, A., Varkonyi-Koczy, A. R.: A load balancing algorithm for resource cloud computing, Advances in Intelligent Systems and Computing, (2017).

- Baranyai, M., Mosavi, A., Vajda, I., Varkonyi-Koczy, A. R.: Optimal Design of Electrical Machines: State of the Art Survey, Advances in Intelligent Systems and Computing, (2017).

- Mosavi, A., Benkreif, R., Varkonyi-Koczy, A.: Comparison of Euler-Bernoulli and Timoshenko and Computing, (2017).

- Mosavi, A., Rituraj, R., Varkonyi-Koczy, A. R.: Reviewing the Multiobjective Optimization Package of modeFRONTIER in Energy Sector, Advances in Intelligent Systems and Computing, (2017).

- Mosavi, A., Bathla, Y., Varkonyi-Koczy, A. R.: Predicting the Future Using Web Knowledge: State of the Art Survey, Advances in Intelligent Systems and Computing, (2017).

Source: https://habr.com/ru/post/459785/
