The Artificial Intelligence of GoldenEye 007

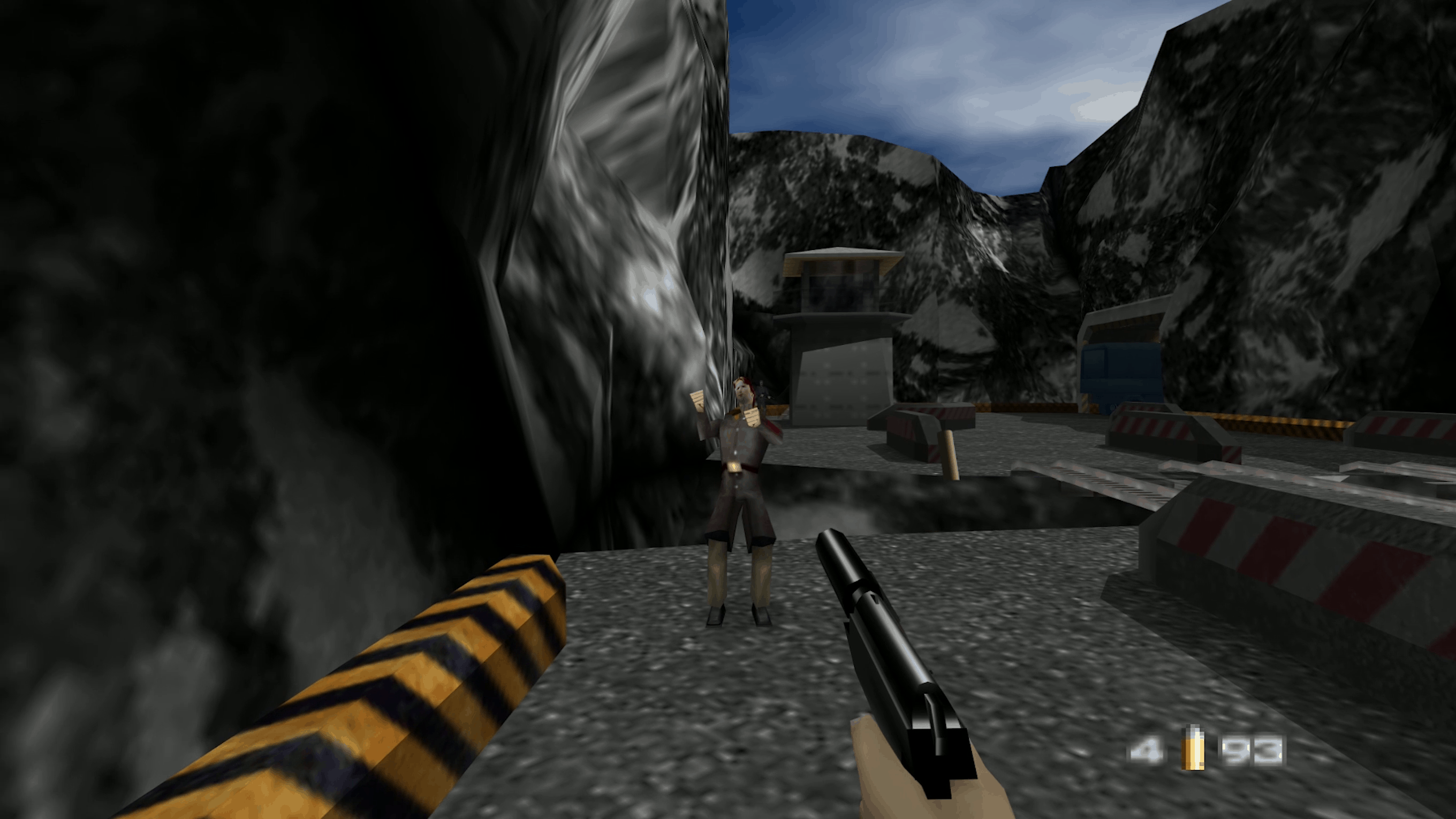

GoldenEye 007 is one of the most important games in history. It shaped the development of a whole generation of console games and paved the way for first-person shooters on the console market. Let's go back more than 20 years to find out how one of the most popular Nintendo 64 games implemented its enemy and friendly AI, from which there is still something to learn today.

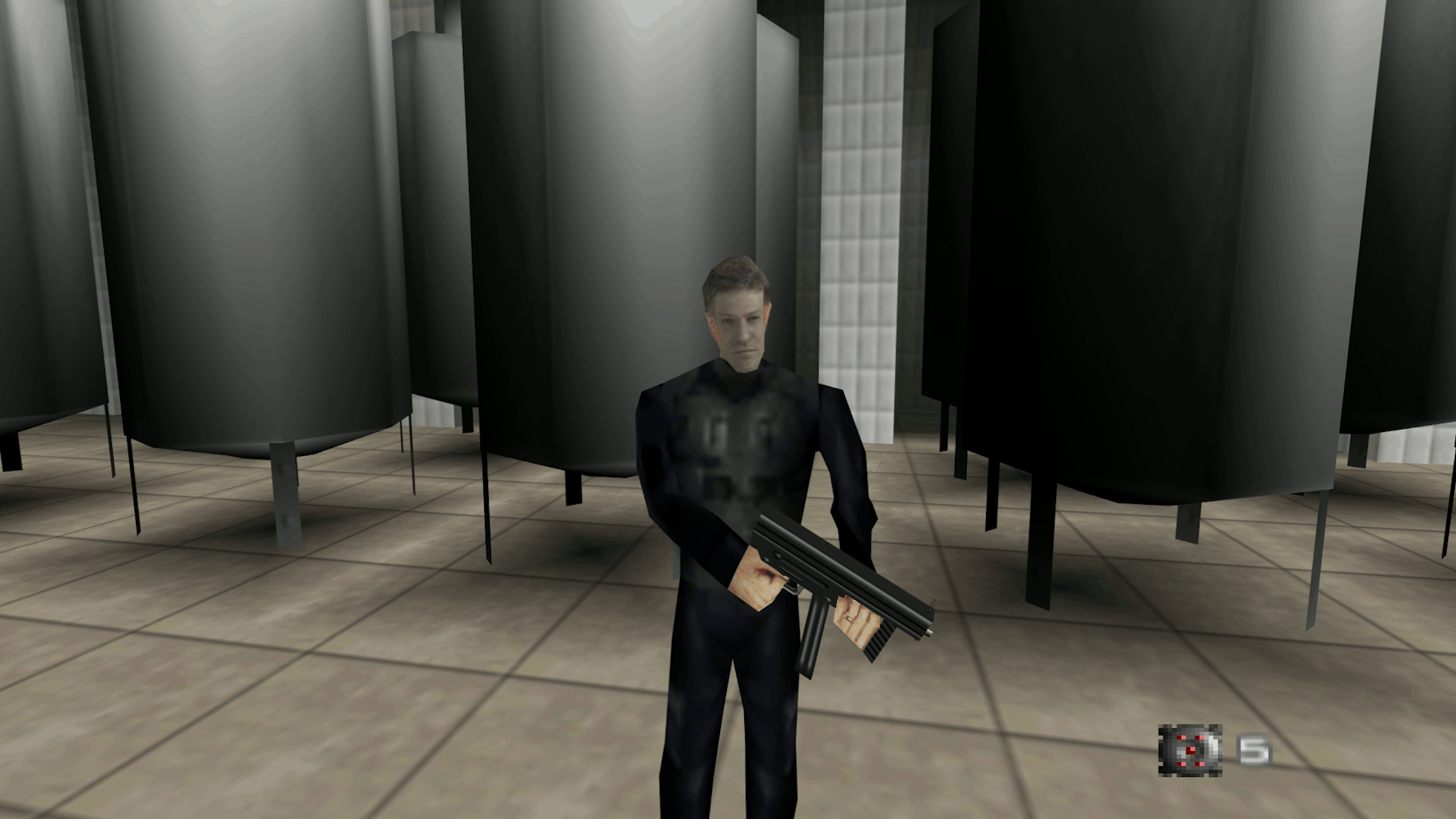

About the game

After its release in 1997, GoldenEye 007 not only defined its generation but also surpassed all expectations. Rare, Nintendo, and even MGM, the owner of the Bond franchise, did not have much faith in the game. Released two years after the film and a year after the console reached the market, it seemed doomed to fail, yet it became the third best-selling game of the platform's entire life (eight million copies), behind only Super Mario 64 and Mario Kart 64. On top of that, in 1998 it earned Rare a BAFTA award and the title of developer of the year.

The game left a huge legacy: it set the standard for what to expect from later generations of first-person shooters, especially in AI behavior: characters with patrol patterns, enemies calling for reinforcements, civilians fleeing in fear, smooth navigation and pathfinding, a rich set of animations, emergent behaviors arising during play, and much more. It not only set the standard for its generation but also influenced the games that surpassed it: Half-Life, Crysis, Far Cry, and many others.

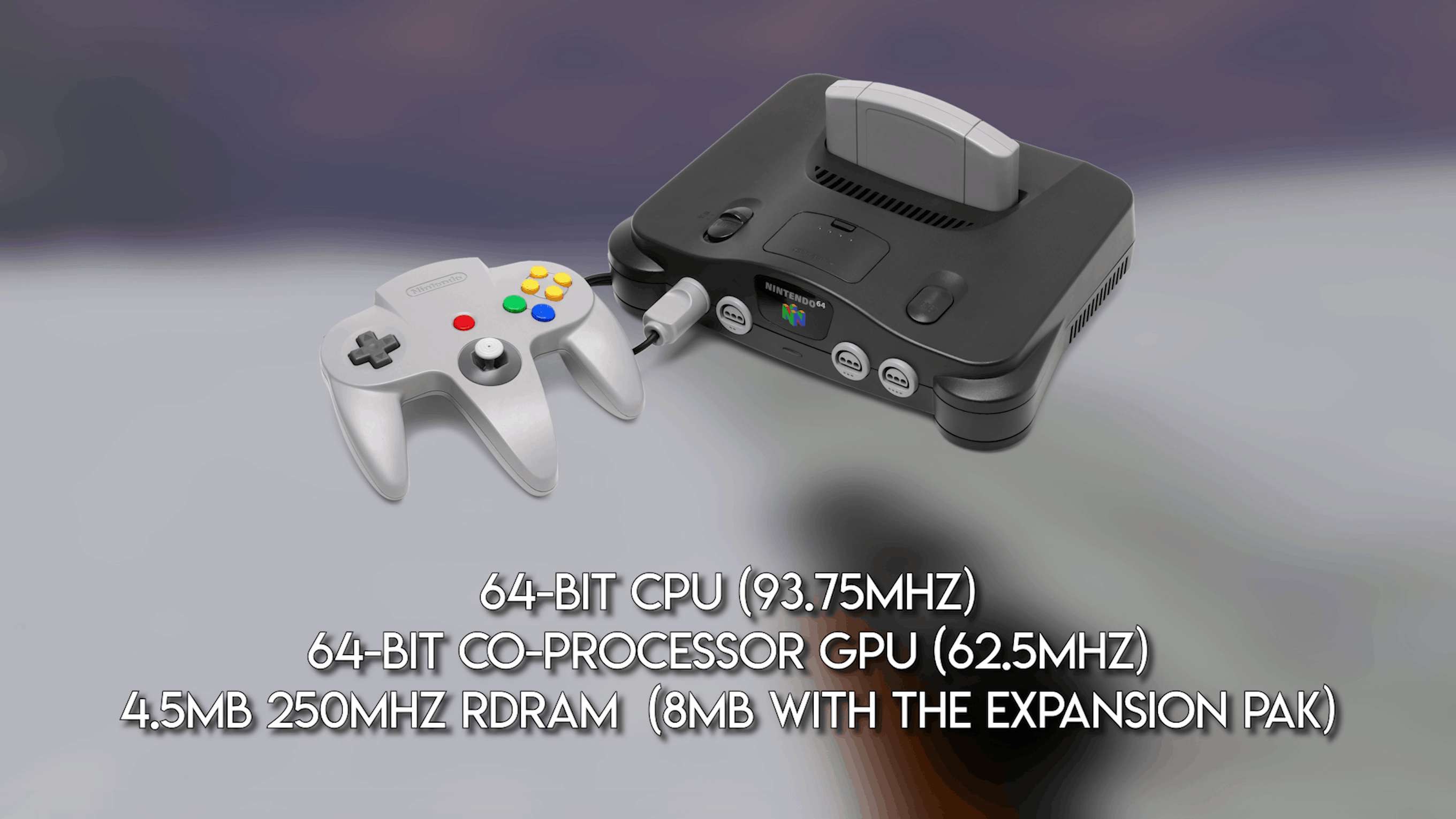

I’m interested not only in how the artificial intelligence worked, but also in how on earth the developers managed to make it work. I have covered many AI techniques before, including finite state machines, navigation meshes, behavior trees, planning technologies, and machine learning, but at the time of GoldenEye’s release these were not well-established practices in the games industry. In addition, the Nintendo 64 is almost 25 years old, and compared with modern machines it has minimal processor and memory resources. How do you create AI and gameplay systems that run efficiently on hardware with such serious limitations?


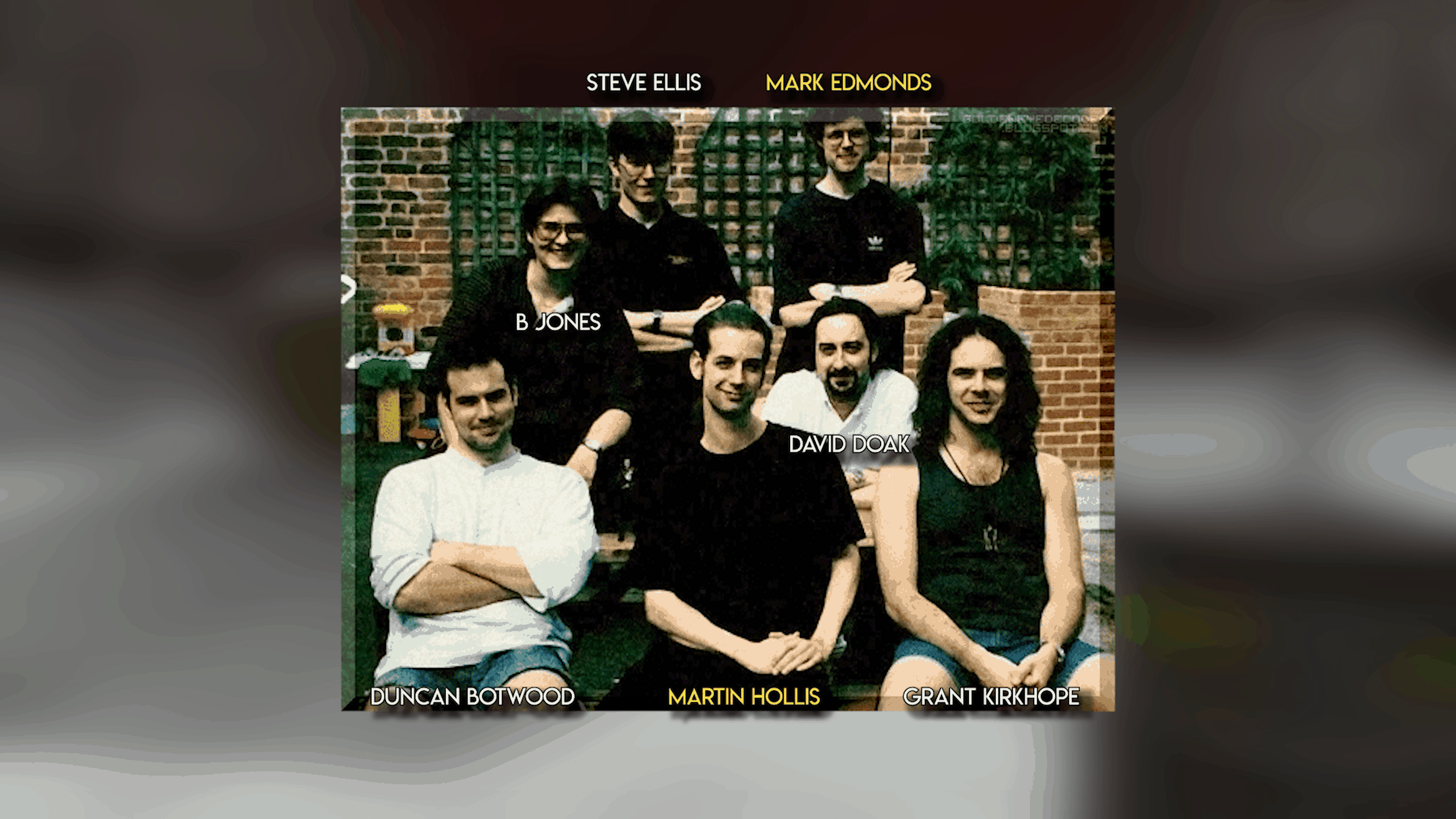

To find out the truth, I contacted the best possible secret informant: Dr. David Doak. David played an important role in the development teams of both GoldenEye 007 and its spiritual successor, Perfect Dark. During the interview we discussed NPC intelligence, alarm behavior, sensor systems, navigation tools, performance balancing, and more. So let's work out how it all fit together.

Building architecture

GoldenEye became what it is largely thanks to two people: producer and game director Martin Hollis and gameplay and engine programmer Mark Edmonds. Initially, Hollis was inspired by games like Virtua Cop, where enemies ran into the camera frame, shot at the player, and then ran away, hid, or reacted dynamically to the player's hits. However, Hollis wanted to create a more engaging and reactive AI that surpassed the standard set in 1993 by DOOM.

“It was important for us to show the AI to the player. There is no point in complex artificial intelligence if the player does not notice it. Your NPCs can talk insightfully about the meaning of life, but the player will not notice this if all he does in the game is poke his head around the corner and fill the enemies with lead. That is, the intelligence should be obvious. The AI must demonstrate the game mechanics. The AI should show the level structure. And all this really should bring something new to the gameplay itself.”

[Martin Hollis, European Developers Forum, 2004.]

This approach led to guards and patrols that forced the player to take a more tactical approach. Sight and hearing sensors allowed the AI to react to the player's behavior, or to fail to notice a player who was sneaking. In addition, friendly and civilian characters reacted to the player's presence by helping or fleeing.

When David Doak joined the project, most of the basic engine components for 3D movement, rendering, and simple AI behaviors had already been created by Edmonds. However, most of the AI behaviors and other core gameplay systems appeared during the final two years of development. The game became less focused on the player: the AI gained patrol patterns, transitions to an alarm state, the ability to use control terminals and toilets, and much more.

To achieve this, Mark Edmonds built an entire scripting system inside the C codebase, which was constantly extended and updated while Doak and other team members experimented with ideas. Quite often a new idea was pitched to Edmonds, who assessed whether it was feasible and added it to the engine within a day. The scripting system let developers chain many pre-built actions into sequences of intelligent behavior that depended on a very specific context. Each behavior ran in its own thread, gave up its resources as soon as the next “atomic” action began to run, and later checked whether it could be updated again. These scripted behaviors were used not only for friendly and enemy characters, but also for systems such as timed doors and gates, and for the cinematic cutscenes at the beginning and end of each level that took control of the player and other characters in the world.
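The idea of chaining atomic actions that hand control back to the engine can be sketched with Python generators. This is only an illustration, not Rare's implementation (the original was written in C): the action names `walk_to` and `play_animation`, the dictionary-based guard, and the per-frame scheduler `tick_all` are all assumptions made for the example.

```python
from typing import Callable, Dict, Generator, List

Script = Generator[None, None, None]

# Hypothetical atomic actions. Each one yields once for every frame it
# still needs, handing control back to the scheduler in between.
def walk_to(guard: Dict[str, str], pad: str, frames: int) -> Script:
    for _ in range(frames):
        yield
    guard["pad"] = pad  # the guard has arrived

def play_animation(guard: Dict[str, str], name: str, frames: int) -> Script:
    guard["anim"] = name
    for _ in range(frames):
        yield

def run_script(guard: Dict[str, str],
               steps: List[Callable[[Dict[str, str]], Script]]) -> Script:
    """Chain atomic actions into a single scripted behavior."""
    for step in steps:
        yield from step(guard)

def tick_all(scripts: List[Script]) -> List[Script]:
    """Advance every running script by one frame and drop finished ones."""
    still_running = []
    for script in scripts:
        try:
            next(script)
            still_running.append(script)
        except StopIteration:
            pass  # script finished, nothing left to schedule
    return still_running

# Usage: a guard walks to a terminal and then uses it.
guard = {"pad": "door", "anim": "idle"}
scripts = [run_script(guard, [
    lambda g: walk_to(g, "terminal", frames=2),
    lambda g: play_animation(g, "use_terminal", frames=1),
])]
frames = 0
while scripts:
    scripts = tick_all(scripts)
    frames += 1
```

The same scheduler could drive doors, gates, or cutscenes, since a script only needs to be something that can be resumed once per frame.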

These AI behaviors were reverse engineered in the GoldenEye Editor, originally developed by modder Mitchell "SubDrag" Kleiman, where they became editable “action blocks” from which you can build your own behaviors. Enemy guards can react to a wide range of sensory signals: being shot, spotting an enemy, seeing another guard killed within their field of view, and hearing shots fired nearby. In addition, an NPC could decide to surrender; this depended partly on chance, but much more on whether the player was aiming at them. And if the AI knew that the player was no longer aiming at it, its behavior changed. As explained below, the vision and hearing tests required for these behaviors were heavily restricted and simplified, yet they produced impressive results on the N64's weak hardware.

Depending on the conditions, the guards could perform many different actions, including sidestepping, rolling to the side, firing from a crouch, firing while walking or running, and even throwing grenades. These actions depended heavily on the player's relative position and their current behavior.

In essence, this was a fairly simple finite state machine, in which the AI stayed in a given behavior state until in-game events forced it to move to the next one. The same principle was later used in Half-Life, which came out in 1998, a year after GoldenEye, and the developers at Rare were aware of the impact their game had on Valve's highly popular shooter:

“What I remember most is meeting the guys from Valve at the British industry trade show ECTS in 1998. They joked that GoldenEye had made them redo a bunch of things in Half-Life. They decided to do it right.”

David Doak, GamesRadar, 2018.
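A finite state machine of the kind described in this section can be written as a simple transition table. The sketch below is a generic illustration: the state names and event names are assumptions chosen to match the behaviors mentioned above, not identifiers from the game.

```python
from enum import Enum, auto

class State(Enum):
    PATROL = auto()
    INVESTIGATE = auto()
    ATTACK = auto()
    SURRENDER = auto()

# (current state, event) -> next state. Event names are hypothetical.
TRANSITIONS = {
    (State.PATROL, "heard_shot"): State.INVESTIGATE,
    (State.PATROL, "saw_player"): State.ATTACK,
    (State.INVESTIGATE, "saw_player"): State.ATTACK,
    (State.INVESTIGATE, "nothing_found"): State.PATROL,
    (State.ATTACK, "aimed_at"): State.SURRENDER,
    (State.SURRENDER, "no_longer_aimed_at"): State.ATTACK,
}

def step(state: State, event: str) -> State:
    """Return the next state, staying put if the event is not relevant."""
    return TRANSITIONS.get((state, event), state)

# Usage: feed a guard a short sequence of in-game events.
state = State.PATROL
for event in ["heard_shot", "saw_player", "aimed_at", "no_longer_aimed_at"]:
    state = step(state, event)
```

Keeping the transitions in a table makes it cheap to evaluate and easy to extend, which suits hardware where every per-frame check has to be inexpensive.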

Performing AI

The two most important elements of the GoldenEye engine for controlling AI were the camera and something called the “STAN system”. To simplify rendering, even levels as large and open as the Severnaya surface were divided into “rooms”, which are smaller blocks. This helped maintain the desired level of performance, because only the rooms inside the camera's view frustum had to be rendered. As a side effect, the AI usually did not run its basic behaviors until its character had been rendered by the camera. This reduced computational cost and at the same time opened up interesting possibilities, which I discuss below.

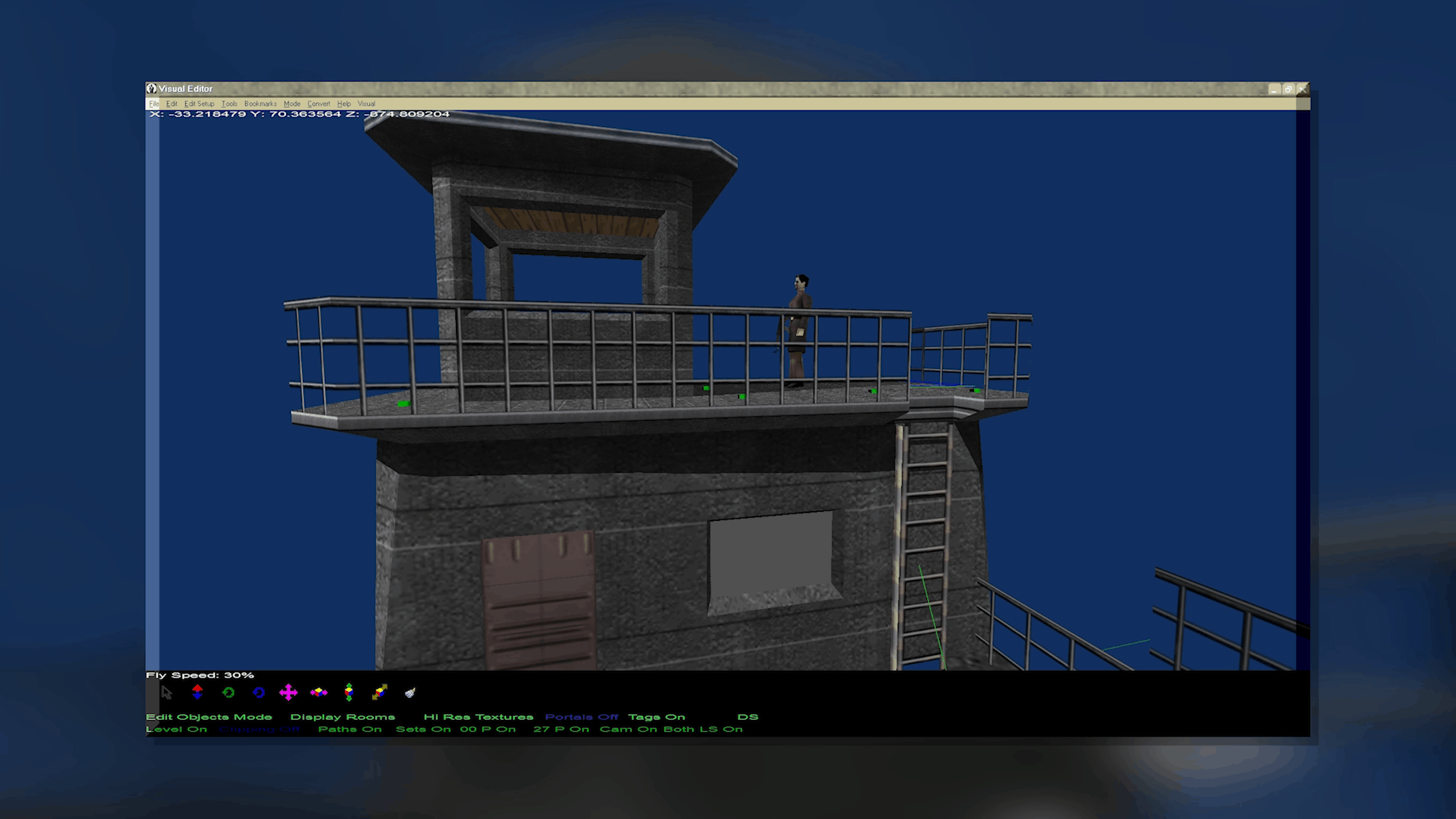

But the most important system tied to rooms and rendering behavior was STAN (simply short for “stand”). STAN objects, developed by Martin Hollis, are polygonal meshes within a particular room that are marked for use as STANs. Each of these objects “knew” which room or corridor it was in. If an AI character was standing on a given STAN, it could see the player only if the STAN the player was standing on was in the same room or an adjacent one. The system performed a simple check to see whether two STANs could “see” each other. This made visibility tests much cheaper than 3D ray casting, which would have demanded far more from the hardware. The system worked surprisingly well, but it broke down in some specific cases. One example is the guards on the Dam, who could not see the player until he approached their patrol path: the STAN at the top of the stairs could only see the STAN at the bottom, so the player could do anything nearby and go unnoticed. A second example is the spiral path in the Caverns, where NPCs can see the path in front of them but not the other side of the chasm.
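The room-based visibility test described above might look roughly like the following. This is a minimal sketch under stated assumptions: the `Stan` type, the `ADJACENT_ROOMS` table, and the room names are invented for the example, and the real game may have stored visibility per STAN instead of per room.

```python
from dataclasses import dataclass
from typing import Dict, Set

@dataclass(frozen=True)
class Stan:
    name: str
    room: str  # every STAN "knows" which room it belongs to

# Hypothetical room adjacency for a tiny level.
ADJACENT_ROOMS: Dict[str, Set[str]] = {
    "corridor": {"guard_room"},
    "guard_room": {"corridor", "vault"},
    "vault": {"guard_room"},
}

def stans_can_see(a: Stan, b: Stan) -> bool:
    """Cheap visibility test: same room or directly adjacent rooms."""
    return a.room == b.room or b.room in ADJACENT_ROOMS.get(a.room, set())

# Usage: a guard in the corridor and a player who moves between rooms.
guard_stan = Stan("s1", "corridor")
player_near = Stan("s7", "guard_room")
player_far = Stan("s9", "vault")
```

The check is a set lookup, not a geometric query, which also shows why edge cases appear: two positions can be physically in view of each other and still fail the test if their rooms are not linked.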

Once all the STANs on a map were in place, a navigation system could be built on top of their polygons. As I mentioned earlier in AI 101, modern games commonly use navigation meshes, which form a complete polygonal surface defining where a character can move on the map. Navigation meshes in their modern form appeared only in 1997, so for GoldenEye the developers took the STAN system and layered objects called PADs on top of it. PADs sat on STANs but were also linked to one another, essentially forming a navigation graph. Characters knew which room they were in and could search for a PAD in the destination room when reacting to a nearby disturbance, or move across the STANs within the current room to a point of interest.
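Searching a graph of linked PADs can be illustrated with a breadth-first search. The article does not say which search algorithm the game used, so breadth-first search is an assumption here, as are the PAD names and the `PAD_LINKS` table.

```python
from collections import deque
from typing import Dict, List, Optional

# Hypothetical PAD graph: each PAD lists the PADs it is linked to.
PAD_LINKS: Dict[str, List[str]] = {
    "A": ["B"],
    "B": ["A", "C", "D"],
    "C": ["B", "E"],
    "D": ["B"],
    "E": ["C"],
    "F": [],  # an unconnected PAD
}

def find_pad_route(start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search over PAD links; returns the PADs to visit."""
    came_from: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        pad = queue.popleft()
        if pad == goal:
            route = []
            current: Optional[str] = pad
            while current is not None:  # walk back to the start
                route.append(current)
                current = came_from[current]
            return route[::-1]
        for neighbor in PAD_LINKS.get(pad, []):
            if neighbor not in came_from:
                came_from[neighbor] = pad
                queue.append(neighbor)
    return None  # no chain of links reaches the goal

route = find_pad_route("A", "E")
```

Because the graph has only as many nodes as the designers placed PADs, a search like this stays small compared with pathfinding over every polygon.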

All of this was complemented by hearing sensors, which performed a simple proximity check on characters within a certain radius of the sound source. They also took into account the type of weapon and its rate of fire. The silenced PP7 attracted no attention, the standard PP7 was loud, the KF7 Soviet was even louder, and sustained fire increased the radius up to a maximum level that only the rocket launcher and the tank gun exceeded.
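A radius-based hearing check like the one described can be sketched as follows. All numbers are invented for illustration; only their ordering (silenced PP7, then PP7, then KF7 Soviet, then the capped burst radius, then rocket launcher and tank gun) follows the text. The function names and the linear growth per shot are also assumptions.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical base radii in arbitrary units.
BASE_RADIUS = {
    "pp7_silenced": 0.0,
    "pp7": 10.0,
    "kf7_soviet": 15.0,
    "rocket_launcher": 40.0,
    "tank_gun": 40.0,
}
EXPLOSIVE = {"rocket_launcher", "tank_gun"}
BURST_GROWTH = 5.0  # assumed extra radius per additional shot
BURST_CAP = 30.0    # assumed maximum radius for gunfire

def noise_radius(weapon: str, shots_in_burst: int = 1) -> float:
    """Radius within which guards hear the shot."""
    base = BASE_RADIUS[weapon]
    if base == 0.0 or weapon in EXPLOSIVE:
        return base
    return min(base + BURST_GROWTH * (shots_in_burst - 1), BURST_CAP)

def guards_alerted(guards: Dict[str, Tuple[float, float]],
                   source: Tuple[float, float],
                   weapon: str, shots_in_burst: int = 1) -> List[str]:
    """Names of guards within earshot of a shot fired at `source`."""
    radius = noise_radius(weapon, shots_in_burst)
    return [name for name, pos in guards.items()
            if radius > 0 and math.dist(pos, source) <= radius]

guards = {"near": (3.0, 4.0), "mid": (12.0, 0.0), "far": (0.0, 25.0)}
origin = (0.0, 0.0)
```

With these example values, a single PP7 shot reaches only the nearest guard, while a four-shot KF7 burst hits the cap and reaches all three.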

Adaptive change

All these tools and AI systems are very impressive, and they show how deliberately the developers looked for smart, efficient ways to build the specific elements they wanted in the game. But GoldenEye also contains many separate AI behaviors that were made almost individually for each level. NPCs did not take cover behind obstacles, so levels such as Silo and Train needed unique scripts that made characters stand next to certain PADs and react according to whether they were crouching or standing upright.

NPCs did not know where other characters were on the map, so on the levels where the player has to protect Natalia Simonova, the developers had not only to give her more movement but also to create special behaviors so that enemies would target the player as well as her. To implement characters such as the scientists, who freeze on the spot when a weapon is pointed at them, or Boris Grishenko, who does what the player orders as long as the player keeps him in sight, other behaviors that worked in a more specific context were needed. Many of the main story characters needed their own AI behaviors written for each level. For example, Alec Trevelyan has different behaviors on each of the six levels he appears in: Facility, Statue, Train, Control, Caverns, and Cradle.

Level design and pacing problems also had to be solved. The Severnaya exterior levels proved difficult to balance in terms of AI character placement, so the developers wrote level scripts that spawn enemies outside the fog radius and send them running straight at the player. If the enemies ended up too far away, they were simply switched off and could be reactivated later.

My favorite feature of GoldenEye is how it punishes the player for loud, aggressive weapon use if he does not get through the level quickly enough. Remember that NPCs do not activate their AI behavior until they have been rendered? If an NPC heard a sound but had not yet been rendered, it spawned a clone of itself to go and investigate the source, and it kept spawning clones until either the disturbance died down or the camera rendered the character. This is especially noticeable on levels like Archives, where the player can easily get trapped in the interrogation room at the start of the level by the constant stream of clones.

To improve the overall pace of the gameplay, and to compensate for the Nintendo 64 controller's lack of precision, the transitions and durations of the animations were carefully tuned through playtesting, giving players more time to prioritize their enemies using the automatic or precise aiming mechanics.
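The clone-spawning rule can be expressed as a small per-update check. This is a rough sketch of the rule as the paragraph above describes it; the `Guard` type, the `on_noise_tick` function, and the idea of one clone per update are assumptions, since the article does not give the spawn rate or any limits.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Guard:
    name: str
    rendered: bool = False  # has the camera rendered this guard yet?
    clones_spawned: int = 0

def on_noise_tick(guard: Guard, noise_active: bool, spawned: List[str]) -> bool:
    """One update for a guard that has heard a noise.

    An unrendered guard cannot run its own behavior, so it sends a clone
    to investigate. Spawning stops once the disturbance dies down or the
    camera renders the guard.
    """
    if not noise_active or guard.rendered:
        return False
    guard.clones_spawned += 1
    spawned.append(f"{guard.name}_clone_{guard.clones_spawned}")
    return True

# Usage: three noisy updates, then the player finally sees the guard.
guard = Guard("archives_guard")
spawned: List[str] = []
for _ in range(3):
    on_noise_tick(guard, noise_active=True, spawned=spawned)
guard.rendered = True
spawned_after_render = on_noise_tick(guard, noise_active=True, spawned=spawned)
```

A rule like this explains the trap in Archives: as long as the player keeps firing and never brings the original guard into view, neither stop condition is met.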

In closing

After GoldenEye was released, its rendering and AI systems served the games that followed it well. Rare's spiritual successor, Perfect Dark, used the same systems, partly extended with new features. As mentioned above, NPCs in GoldenEye could take cover only through specially scripted behavior, but in Perfect Dark enemies could compute cover positions themselves, including dynamic cover created by anti-gravity objects. In addition, thanks to experiments with vertex-shaded lighting, the vision sensors could have their sight reduced. The same approach was later used by Free Radical Design for its TimeSplitters franchise, since the studio was founded by several members of the GoldenEye development team. In that series, the STAN/PAD system was extended to better support vertical level architecture.

Although it is now more than twenty years old, GoldenEye set standards that were used in Half-Life and that the creators of many other shooter franchises still try to reproduce today. I hope that after reading this article you appreciate the depth of the systems used in the game and the level of commitment and skill required to create a project that ultimately had such a great influence.

Source: https://habr.com/ru/post/459765/
