Serverless serverless rendering

The author of the material, the translation of which we publish, is one of the founders of the Webiny project - serverless CMS, based on React, GraphQL and Node.js. He says support for a multi-tenant serverless cloud platform is a matter with special challenges. Already written many articles in which we are talking about the standard techniques for optimizing web projects. Among them are server rendering, the use of advanced web application development technologies, various ways to improve application assemblies, and much more. This article, on the one hand, is similar to others, and on the other, it is different from them. The fact is that it is dedicated to the optimization of projects working in a serverless environment.

In order to take measurements that will help identify project problems, we will use webpagetest.org . With the help of this resource, we will execute queries and collect information on the execution time of various operations. This will allow us to better understand what users see and feel while working with the project.

We are especially interested in the “First view” indicator, that is, how much time it takes to load a site from a user who visits it for the first time. This is a very important indicator. The fact is that the browser cache can hide many bottlenecks of web projects.

')

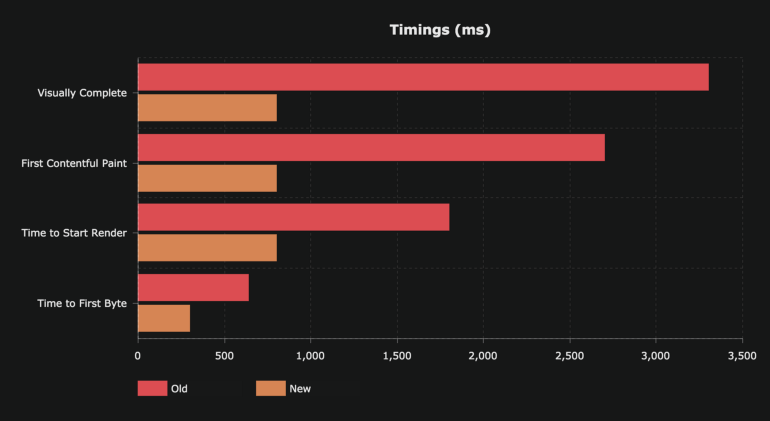

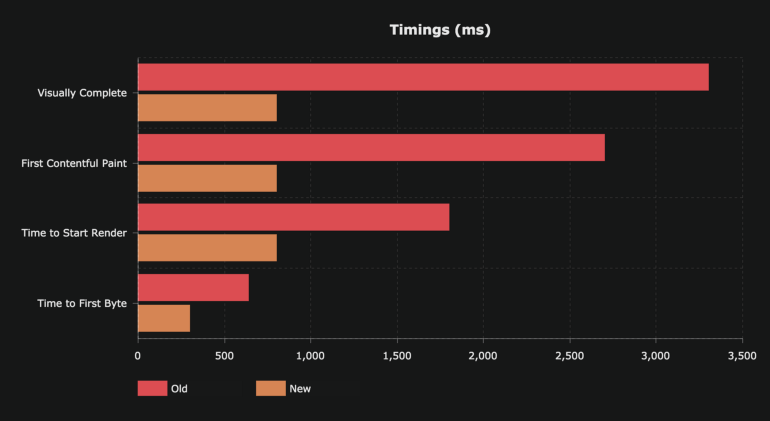

Take a look at the following chart.

Analysis of old and new indicators of the web project

Here, the most important indicator can be considered “Time to Start Render” - the time before the start of rendering. If you look at this indicator, you can see that only in order to start rendering the page, in the old version of the project it took almost 2 seconds. The reason for this lies in the very essence of single-page applications (Single Page Application, SPA). In order to display the page of such an application on the screen, you first need to download the bulk JS-bundle (this stage of loading the page is marked in the following figure as 1). Then this bundle needs to be processed in the main thread (2). And only after that something can appear in the browser window.

(1) Download js-bundle. (2) Waiting for the main thread to handle the bundle.

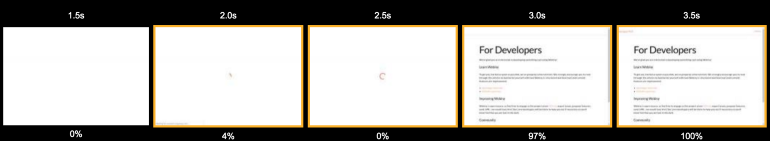

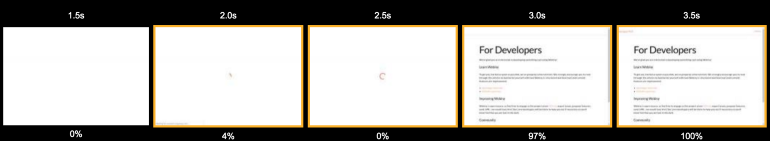

However, this is only part of the overall picture. After the main thread processes the JS bundle, it executes several requests to the Gateway API. At this stage of the page processing, the user sees a rotating loading indicator. The spectacle is not the most pleasant. In this case, the user has not yet seen any page content. Here is a storyboard of the page loading process.

Loading page

All this suggests that the user who has visited such a site experiences not particularly pleasant feelings from working with him. Namely, he is forced to look at a blank page for 2 seconds, and then another second - at the download indicator. This second is added to the page preparation time due to the fact that after loading and processing the JS bundle, API requests are executed. These requests are necessary in order to load the data and, as a result, display the finished page.

Loading page

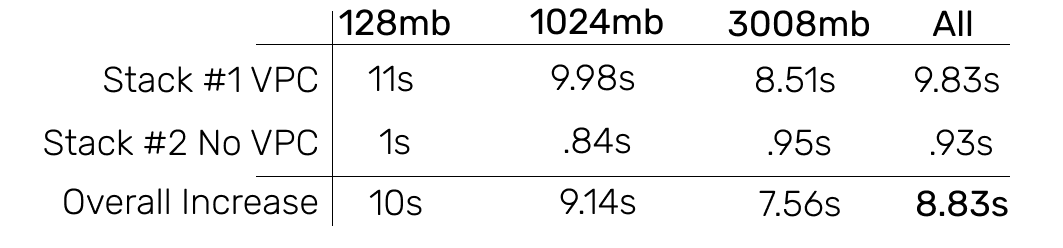

If the project were hosted on a regular VPS, then the time spent on executing these requests to the API would be mostly predictable. However, projects operating in a serverless environment are affected by the infamous cold-start problem. In the case of the Webiny cloud platform, the situation is even worse. AWS Lambda features are part of the Virtual Private Cloud (Virtual Private Cloud) VPC. This means that for each new instance of such a function, you need to initialize ENI (Elastic Network Interface, flexible network interface). This significantly increases the cold start time of functions.

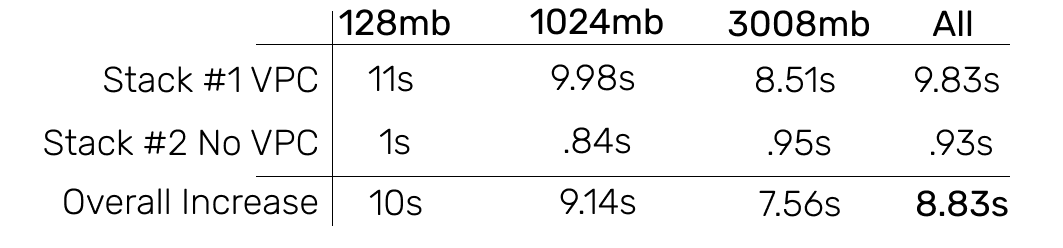

Here are some temporary metrics regarding the loading of AWS Lambda features inside the VPC and outside the VPC.

Load analysis of AWS Lambda functions inside the VPC and outside the VPC (image taken from here )

From this we can conclude that in the case when the function is launched inside the VPC, this gives a 10-fold increase in the cold start time.

In addition, there must be taken into account and another factor - the delay of network data transmission. Their duration is already included at the time that is required to execute requests to the API. Requests are initiated by the browser. Therefore, it turns out that by the time the API responds to these requests, the time it takes for the request to go from the browser to the API, and the time it takes for the response to get from the API to the browser, is added. These delays occur during the execution of each request.

Based on the above analysis, we formulated several tasks that we needed to solve in order to optimize the project. Here they are:

Here are some approaches to solving the problems that we considered:

We finally chose the third item on this list. We reasoned like this: “What if we don’t need API requests at all? What if we can do without a JS-bundle at all? This would allow us to solve all the problems of the project. ”

The first idea that seemed interesting to us was to create an HTML snapshot of the rendered page and transfer this snapshot to users.

Webiny Cloud is a serverless infrastructure based on AWS Lambda that supports Webiny sites. Our system can detect bots. When it turns out that the request is executed by the bot, this request is redirected to the Puppeteer instance, which renders the page using Chrome without a user interface. The bot sends the ready HTML code of the page. This was done mainly for SEO reasons, due to the fact that many bots do not know how to perform JavaScript. We decided to use the same approach for the preparation of pages intended for ordinary users.

This approach shows itself well in environments that do not have JavaScript support. However, if you try to give pre-rendered pages to a client whose browser supports JS, the page is displayed, but then, after downloading the JS files, the React components simply do not know where to mount them. This results in a whole heap of error messages in the console. As a result, this decision did not suit us.

The strength of server-side rendering (SSR, Server Side Rendering) is that all requests to the API are performed within the local network. Since they are processed by a certain system or function running inside the VPC, they are not typical of delays that occur when executing requests from the browser to the backend of the resource. Although in this scenario, the problem of “cold start” remains.

An additional advantage of using SSR is that we transfer the following HTML-version of the page to the client, which, after working with JS files, does not cause any problems with mounting the React components.

And finally, we do not need a very large JS bundle. We, in addition, can, to display the page, do without appealing to the API. A bundle can be loaded asynchronously and it will not block the main thread.

In general, we can say that server rendering, it seems, should have solved most of our problems.

Here is how the analysis of the site after the application of server rendering.

Site performance after using server rendering

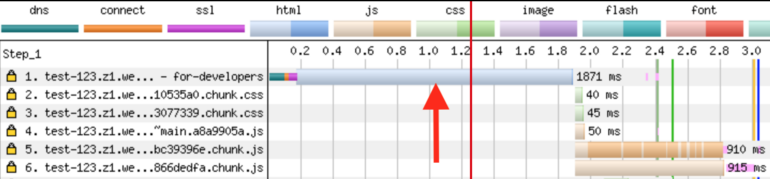

Now API requests are not executed, and the page can be seen before the big JS bundle is loaded. But if you look at the first request, you can see that it takes almost 2 seconds to get the document from the server. Let's talk about it.

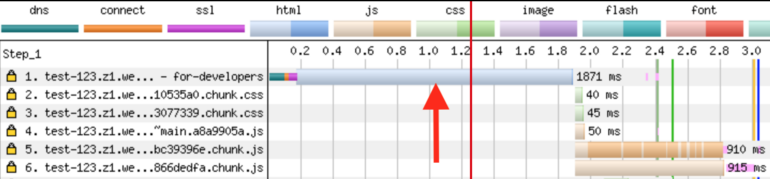

Here we discuss the TTFB (Time To First Byte, time to first byte). Here are the details of the first request.

First Request Details

To handle this first request, we need to do the following: run the Node.js server, perform server-side rendering, make requests to the API and execute the JS code, then return the final result to the client. The problem here is that all this, on average, takes 1-2 seconds.

Our server, which performs server rendering, needs to do all this work, and only after that it can send the first byte of the response to the client. This leads to the fact that the browser has to wait a very long time for the receipt of the response to the request. As a result, it turns out that now for the output of the page you need to produce almost the same amount of work as before. The only difference is that this work is not done on the client side, but on the server, in the server rendering process.

Here you may have a question about the word "server". We're talking all this time about the serverless system. Where did this "server" come from? We certainly tried to perform server-side rendering in AWS Lambda functions. But it turned out that this is a very resource-intensive process (in particular, it was necessary to greatly increase the amount of memory in order to get more processor resources). In addition, here the problem of the “cold start”, about which we have already spoken, is added. As a result, the ideal solution then was to use a Node.js server, which would load the site materials and render them server-side rendering.

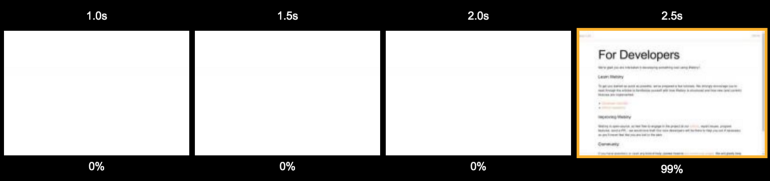

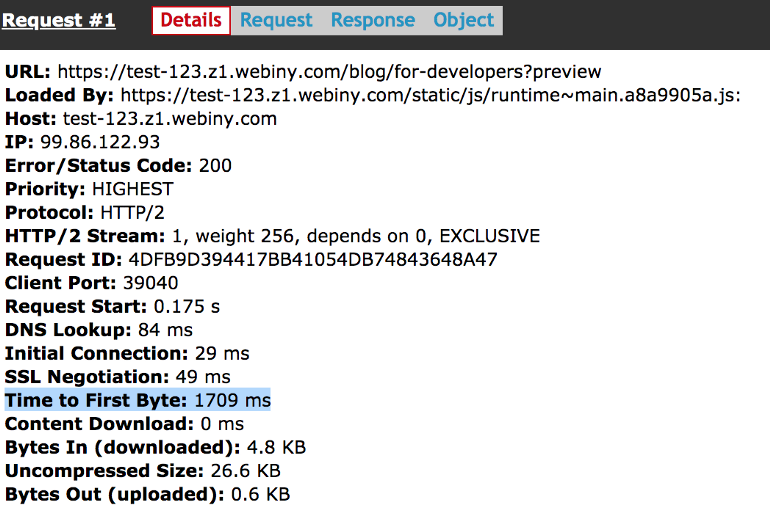

Returning to the implications of using server rendering. Take a look at the next storyboard. It is easy to notice that it is not particularly different from the one that was obtained in the study of the project, which was rendered on the client.

Page load when using server rendering

The user is forced to look at a blank page within 2.5 seconds. It is sad.

Although, looking at these results, one might think that we have achieved absolutely nothing, this is actually not the case. We had an HTML snapshot of the page containing everything needed. This shot was ready to work with React. At the same time during the processing of the page on the client did not need to perform any requests to the API. All necessary data has already been embedded in HTML.

The only problem was that creating this HTML image took too much time. At this point, we could either invest more time in optimizing server rendering, or just cache its results and give our customers a snapshot of a page from something like Redis caches. We did just that.

After a user visits the Webiny site, we first check the centralized Redis cache to see if there is an HTML snapshot of the page. If so, we give the user a cached page. On average, this reduced the TTFB to 200-400 ms. It was after the introduction of the cache, we began to notice significant improvements in the performance of the project.

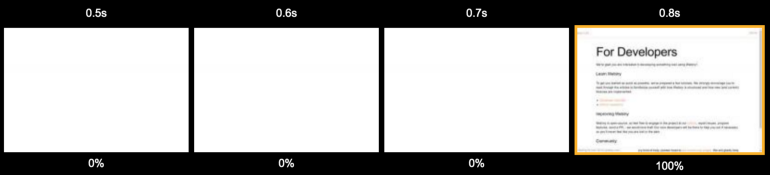

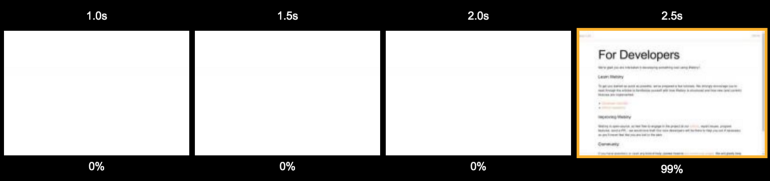

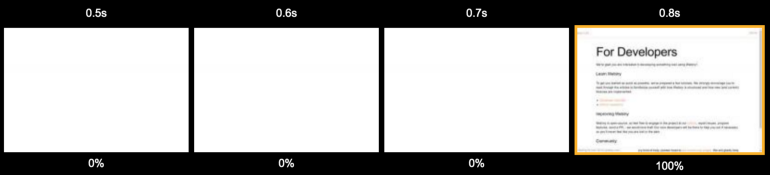

Page load when using server rendering and cache

Even a user who visits a site for the first time sees the contents of a page in less than a second.

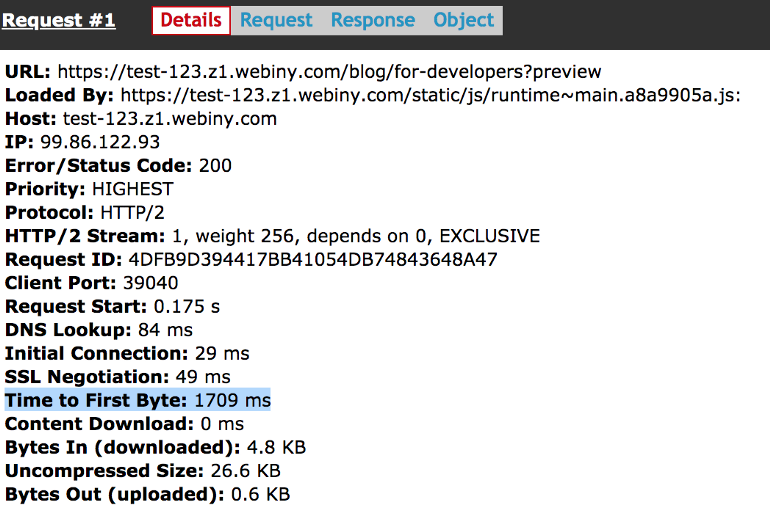

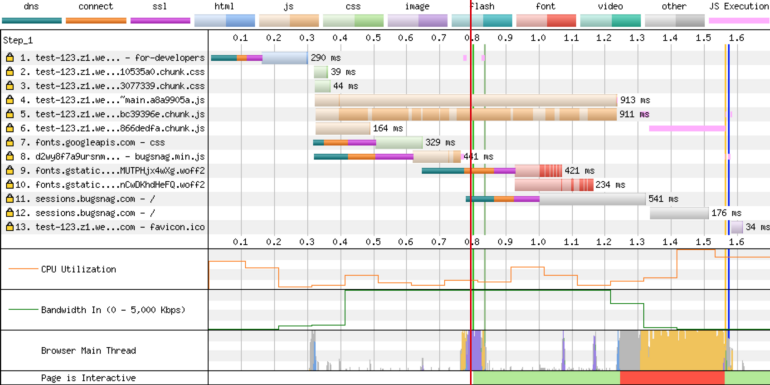

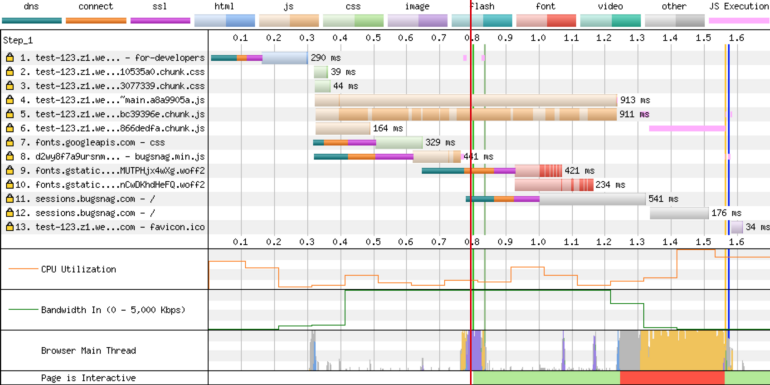

Let's look at how the waterfall chart now looks.

Site performance after server-side rendering and caching

The red line indicates a time stamp of 800 ms. This is where the content of the page is fully loaded. In addition, here you can see that the JS-bundles are loaded at about 1.3 s. But this does not affect the time it takes the user to see the page. At the same time to display the page does not need to perform requests to the API and load the main thread.

It will draw attention to the fact that temporary indicators relating to the download of the JS-bundle, the execution of requests to the API, the execution of operations in the main thread, still play an important role in preparing the page for work. These time and resources are required to make the page “interactive”. But this does not play any role, firstly, for the bots of search engines, and secondly - for the formation of the user experience of "fast page load."

Suppose a page is “dynamic”. For example, it displays in the header a link to access a user account in the event that the user who views the page is logged in. After performing server rendering, a general-purpose page will arrive in the browser. That is, one that is displayed to users who are not logged in. The title of this page will change to reflect the fact that the user has logged in to the system, only after the JS bundle has been loaded and API calls have been made. Here we are dealing with the TTI (Time To Interactive indicator, time to first interactivity).

After a few weeks, we discovered that our proxy server does not close the connection to the client where it is needed, if the server rendering was started as a background process. The correction of just one line of code led to the fact that the TTFB indicator was able to be reduced to the level of 50-90 ms. As a result, the site now began to appear in the browser after about 600 ms.

However, we faced another problem ...

"In computer science, there are only two complex things: cache invalidation and entity naming."

Phil carlton

Cache disability is, indeed, a very difficult task. How to solve it? First, you can frequently update the cache by setting a very short storage time for cached objects (TTL, Time To Live, time to live). This will sometimes cause pages to load slower than usual. Secondly, you can create a mechanism for invalidation of the cache, based on certain events.

In our case, this problem was solved with the use of a very small TTL indicator of 30 seconds. But we also realized the possibility of providing customers with outdated data from the cache. While customers are receiving similar data, the cache is updated in the background. Because of this, we got rid of problems, such as delays and cold starts, which are characteristic of AWS Lambda functions.

Here is how it works. User visits the Webiny site. We check the HTML cache. If there is a snapshot of the page there - we give it to the user. The age of the picture can be even several days. We, transferring to the user this old snapshot in a few hundred milliseconds, simultaneously launch the task of creating a new snapshot and updating the cache. It usually takes a few seconds to complete this task, since we created a mechanism by which we always have a number of AWS Lambda functions that are already running in advance. Therefore, we do not have to, while creating new images, spend time on cold start functions.

As a result, we always return cached pages to clients, and when the age of cached data reaches 30 seconds, the contents of the cache are updated.

Caching is definitely the area in which we can still improve on something. For example, we are considering the possibility of automatically updating the cache when a user publishes a page. However, this cache update mechanism is also not perfect.

For example, suppose that the resource’s home page displays the three most recent blog posts. If the cache is updated when a new page is published, then, from a technical point of view, after publication, only the cache for this new page will be generated. The cache for the home page will be outdated.

We are still looking for ways to improve the caching system of our project. But so far, the emphasis has been on getting to grips with existing performance problems. We believe that we have done a fairly good job in terms of solving these problems.

Initially, we used client rendering. Then, on average, the user could see the page in 3.3 seconds. Now this figure has decreased to about 600 ms. It is also important that we now do without the loading indicator.

To achieve this result, we are allowed, basically, the use of server rendering. But without a good caching system, it turns out that the calculations are simply transferred from the client to the server. And this leads to the fact that the time required for the user to see the page does not change much.

The use of server rendering has another positive quality not mentioned earlier. The point is that it makes it easier to view pages on weak mobile devices. The speed of preparing the page for viewing on such devices depends on the modest capabilities of their processors. Server rendering allows you to remove part of the load from them. It should be noted that we have not conducted a special study of this issue, but the system that we have should contribute to the improvement in the field of viewing the site on phones and tablets.

In general, we can say that the implementation of server rendering is not an easy task. And the fact that we use a serverless environment only complicates this task. The solution to our problems required changes to the code, additional infrastructure. We needed to create a well thought out caching mechanism. But in return we got a lot of good. The most important thing is that the pages of our site are now loaded and ready for work much faster than before. We believe our users will like it.

Dear readers! Do you use caching and server rendering technologies to optimize your projects?

Training

In order to take measurements that will help identify project problems, we will use webpagetest.org . With the help of this resource, we will execute queries and collect information on the execution time of various operations. This will allow us to better understand what users see and feel while working with the project.

We are especially interested in the “First view” indicator, that is, how much time it takes to load a site from a user who visits it for the first time. This is a very important indicator. The fact is that the browser cache can hide many bottlenecks of web projects.

')

Indicators reflecting the features of the site loading - identification of problems

Take a look at the following chart.

Analysis of old and new indicators of the web project

Here, the most important indicator can be considered “Time to Start Render” - the time before the start of rendering. If you look at this indicator, you can see that only in order to start rendering the page, in the old version of the project it took almost 2 seconds. The reason for this lies in the very essence of single-page applications (Single Page Application, SPA). In order to display the page of such an application on the screen, you first need to download the bulk JS-bundle (this stage of loading the page is marked in the following figure as 1). Then this bundle needs to be processed in the main thread (2). And only after that something can appear in the browser window.

(1) Download js-bundle. (2) Waiting for the main thread to handle the bundle.

However, this is only part of the overall picture. After the main thread processes the JS bundle, it executes several requests to the Gateway API. At this stage of the page processing, the user sees a rotating loading indicator. The spectacle is not the most pleasant. In this case, the user has not yet seen any page content. Here is a storyboard of the page loading process.

Loading page

All this suggests that the user who has visited such a site experiences not particularly pleasant feelings from working with him. Namely, he is forced to look at a blank page for 2 seconds, and then another second - at the download indicator. This second is added to the page preparation time due to the fact that after loading and processing the JS bundle, API requests are executed. These requests are necessary in order to load the data and, as a result, display the finished page.

Loading page

If the project were hosted on a regular VPS, then the time spent on executing these requests to the API would be mostly predictable. However, projects operating in a serverless environment are affected by the infamous cold-start problem. In the case of the Webiny cloud platform, the situation is even worse. AWS Lambda features are part of the Virtual Private Cloud (Virtual Private Cloud) VPC. This means that for each new instance of such a function, you need to initialize ENI (Elastic Network Interface, flexible network interface). This significantly increases the cold start time of functions.

Here are some temporary metrics regarding the loading of AWS Lambda features inside the VPC and outside the VPC.

Load analysis of AWS Lambda functions inside the VPC and outside the VPC (image taken from here )

From this we can conclude that in the case when the function is launched inside the VPC, this gives a 10-fold increase in the cold start time.

In addition, there must be taken into account and another factor - the delay of network data transmission. Their duration is already included at the time that is required to execute requests to the API. Requests are initiated by the browser. Therefore, it turns out that by the time the API responds to these requests, the time it takes for the request to go from the browser to the API, and the time it takes for the response to get from the API to the browser, is added. These delays occur during the execution of each request.

Optimization tasks

Based on the above analysis, we formulated several tasks that we needed to solve in order to optimize the project. Here they are:

- Improving the speed of executing requests to the API or reducing the number of requests to the API that block rendering.

- Reducing the size of a JS bundle or translating this bundle into resources that are not necessary for page rendering.

- Unlock the main thread.

Approaches to solving problems

Here are some approaches to solving the problems that we considered:

- Optimization of the code in the calculation of the acceleration of its implementation. This approach requires a lot of effort, it is high cost. The benefits that can be obtained from this optimization are questionable.

- Increase the amount of RAM available to AWS Lambda features. Make it easy, the cost of such a solution is somewhere between medium and high. Only small positive effects can be expected from the application of this solution.

- Apply some other way to solve the problem. True, at that moment we did not yet know what this method was.

We finally chose the third item on this list. We reasoned like this: “What if we don’t need API requests at all? What if we can do without a JS-bundle at all? This would allow us to solve all the problems of the project. ”

The first idea that seemed interesting to us was to create an HTML snapshot of the rendered page and transfer this snapshot to users.

Unsuccessful attempt

Webiny Cloud is a serverless infrastructure based on AWS Lambda that supports Webiny sites. Our system can detect bots. When it turns out that the request is executed by the bot, this request is redirected to the Puppeteer instance, which renders the page using Chrome without a user interface. The bot sends the ready HTML code of the page. This was done mainly for SEO reasons, due to the fact that many bots do not know how to perform JavaScript. We decided to use the same approach for the preparation of pages intended for ordinary users.

This approach shows itself well in environments that do not have JavaScript support. However, if you try to give pre-rendered pages to a client whose browser supports JS, the page is displayed, but then, after downloading the JS files, the React components simply do not know where to mount them. This results in a whole heap of error messages in the console. As a result, this decision did not suit us.

Introduction to SSR

The strength of server-side rendering (SSR, Server Side Rendering) is that all requests to the API are performed within the local network. Since they are processed by a certain system or function running inside the VPC, they are not typical of delays that occur when executing requests from the browser to the backend of the resource. Although in this scenario, the problem of “cold start” remains.

An additional advantage of using SSR is that we transfer the following HTML-version of the page to the client, which, after working with JS files, does not cause any problems with mounting the React components.

And finally, we do not need a very large JS bundle. We, in addition, can, to display the page, do without appealing to the API. A bundle can be loaded asynchronously and it will not block the main thread.

In general, we can say that server rendering, it seems, should have solved most of our problems.

Here is how the analysis of the site after the application of server rendering.

Site performance after using server rendering

Now API requests are not executed, and the page can be seen before the big JS bundle is loaded. But if you look at the first request, you can see that it takes almost 2 seconds to get the document from the server. Let's talk about it.

Problem with TTFB

Here we discuss the TTFB (Time To First Byte, time to first byte). Here are the details of the first request.

First Request Details

To handle this first request, we need to do the following: run the Node.js server, perform server-side rendering, make requests to the API and execute the JS code, then return the final result to the client. The problem here is that all this, on average, takes 1-2 seconds.

Our server, which performs server rendering, needs to do all this work, and only after that it can send the first byte of the response to the client. This leads to the fact that the browser has to wait a very long time for the receipt of the response to the request. As a result, it turns out that now for the output of the page you need to produce almost the same amount of work as before. The only difference is that this work is not done on the client side, but on the server, in the server rendering process.

Here you may have a question about the word "server". We're talking all this time about the serverless system. Where did this "server" come from? We certainly tried to perform server-side rendering in AWS Lambda functions. But it turned out that this is a very resource-intensive process (in particular, it was necessary to greatly increase the amount of memory in order to get more processor resources). In addition, here the problem of the “cold start”, about which we have already spoken, is added. As a result, the ideal solution then was to use a Node.js server, which would load the site materials and render them server-side rendering.

Returning to the implications of using server rendering. Take a look at the next storyboard. It is easy to notice that it is not particularly different from the one that was obtained in the study of the project, which was rendered on the client.

Page load when using server rendering

The user is forced to look at a blank page within 2.5 seconds. It is sad.

Although, looking at these results, one might think that we have achieved absolutely nothing, this is actually not the case. We had an HTML snapshot of the page containing everything needed. This shot was ready to work with React. At the same time during the processing of the page on the client did not need to perform any requests to the API. All necessary data has already been embedded in HTML.

The only problem was that creating this HTML image took too much time. At this point, we could either invest more time in optimizing server rendering, or just cache its results and give our customers a snapshot of a page from something like Redis caches. We did just that.

Caching server rendering results

After a user visits the Webiny site, we first check the centralized Redis cache to see if there is an HTML snapshot of the page. If so, we give the user a cached page. On average, this reduced the TTFB to 200-400 ms. It was after the introduction of the cache, we began to notice significant improvements in the performance of the project.

Page load when using server rendering and cache

Even a user who visits a site for the first time sees the contents of a page in less than a second.

Let's look at how the waterfall chart now looks.

Site performance after server-side rendering and caching

The red line indicates a time stamp of 800 ms. This is where the content of the page is fully loaded. In addition, here you can see that the JS-bundles are loaded at about 1.3 s. But this does not affect the time it takes the user to see the page. At the same time to display the page does not need to perform requests to the API and load the main thread.

It will draw attention to the fact that temporary indicators relating to the download of the JS-bundle, the execution of requests to the API, the execution of operations in the main thread, still play an important role in preparing the page for work. These time and resources are required to make the page “interactive”. But this does not play any role, firstly, for the bots of search engines, and secondly - for the formation of the user experience of "fast page load."

Suppose a page is “dynamic”. For example, it displays in the header a link to access a user account in the event that the user who views the page is logged in. After performing server rendering, a general-purpose page will arrive in the browser. That is, one that is displayed to users who are not logged in. The title of this page will change to reflect the fact that the user has logged in to the system, only after the JS bundle has been loaded and API calls have been made. Here we are dealing with the TTI (Time To Interactive indicator, time to first interactivity).

After a few weeks, we discovered that our proxy server does not close the connection to the client where it is needed, if the server rendering was started as a background process. The correction of just one line of code led to the fact that the TTFB indicator was able to be reduced to the level of 50-90 ms. As a result, the site now began to appear in the browser after about 600 ms.

However, we faced another problem ...

Cache invalidation problem

"In computer science, there are only two complex things: cache invalidation and entity naming."

Phil carlton

Cache disability is, indeed, a very difficult task. How to solve it? First, you can frequently update the cache by setting a very short storage time for cached objects (TTL, Time To Live, time to live). This will sometimes cause pages to load slower than usual. Secondly, you can create a mechanism for invalidation of the cache, based on certain events.

In our case, this problem was solved with the use of a very small TTL indicator of 30 seconds. But we also realized the possibility of providing customers with outdated data from the cache. While customers are receiving similar data, the cache is updated in the background. Because of this, we got rid of problems, such as delays and cold starts, which are characteristic of AWS Lambda functions.

Here is how it works. User visits the Webiny site. We check the HTML cache. If there is a snapshot of the page there - we give it to the user. The age of the picture can be even several days. We, transferring to the user this old snapshot in a few hundred milliseconds, simultaneously launch the task of creating a new snapshot and updating the cache. It usually takes a few seconds to complete this task, since we created a mechanism by which we always have a number of AWS Lambda functions that are already running in advance. Therefore, we do not have to, while creating new images, spend time on cold start functions.

As a result, we always return cached pages to clients, and when the age of cached data reaches 30 seconds, the contents of the cache are updated.

Caching is definitely the area in which we can still improve on something. For example, we are considering the possibility of automatically updating the cache when a user publishes a page. However, this cache update mechanism is also not perfect.

For example, suppose that the resource’s home page displays the three most recent blog posts. If the cache is updated when a new page is published, then, from a technical point of view, after publication, only the cache for this new page will be generated. The cache for the home page will be outdated.

We are still looking for ways to improve the caching system of our project. But so far, the emphasis has been on getting to grips with existing performance problems. We believe that we have done a fairly good job in terms of solving these problems.

Results

Initially, we used client rendering. Then, on average, the user could see the page in 3.3 seconds. Now this figure has decreased to about 600 ms. It is also important that we now do without the loading indicator.

To achieve this result, we are allowed, basically, the use of server rendering. But without a good caching system, it turns out that the calculations are simply transferred from the client to the server. And this leads to the fact that the time required for the user to see the page does not change much.

The use of server rendering has another positive quality not mentioned earlier. The point is that it makes it easier to view pages on weak mobile devices. The speed of preparing the page for viewing on such devices depends on the modest capabilities of their processors. Server rendering allows you to remove part of the load from them. It should be noted that we have not conducted a special study of this issue, but the system that we have should contribute to the improvement in the field of viewing the site on phones and tablets.

In general, we can say that the implementation of server rendering is not an easy task. And the fact that we use a serverless environment only complicates this task. The solution to our problems required changes to the code, additional infrastructure. We needed to create a well thought out caching mechanism. But in return we got a lot of good. The most important thing is that the pages of our site are now loaded and ready for work much faster than before. We believe our users will like it.

Dear readers! Do you use caching and server rendering technologies to optimize your projects?

Source: https://habr.com/ru/post/459306/

All Articles