The cost of JavaScript in 2019

Over the past few years, what is known as the “cost of JavaScript” has changed significantly for the better, thanks to browsers parsing and compiling scripts faster. Now, in 2019, the main components of the load that JavaScript puts on a system are script download time and script execution time.

The user's interaction with the site may be temporarily disrupted if the browser is busy executing JavaScript code. As a result, we can say that optimization of bottlenecks associated with loading and executing scripts can have a strong positive impact on the performance of sites.

What does the above mean for web developers? The point here is that the resources spent on parsing (parsing, parsing) and compiling scripts are not as serious as they used to be. Therefore, when analyzing and optimizing JavaScript bundles, developers should heed the following three recommendations:

')

Why in modern conditions it is important to optimize the load time and run scripts? Script loading time is extremely important in situations where sites are operated via slow networks. Despite the fact that 4G (and even 5G) networks are increasingly spreading in the world, the NetworkInformation.effectiveType property in many cases of using mobile Internet connections shows indicators that are at the level of 3G networks or even at lower levels.

The time it takes to execute a JS code is important for mobile devices with slow processors. Due to the fact that various CPUs and GPUs are used in mobile devices, due to the fact that when devices overheat, for the sake of their protection, the performance of their components decreases, a serious gap between the performance of expensive and cheap phones and tablets can be observed. This greatly affects the performance of the JavaScript code, since the ability to execute such a code by some device is limited by the processor's capabilities of this device.

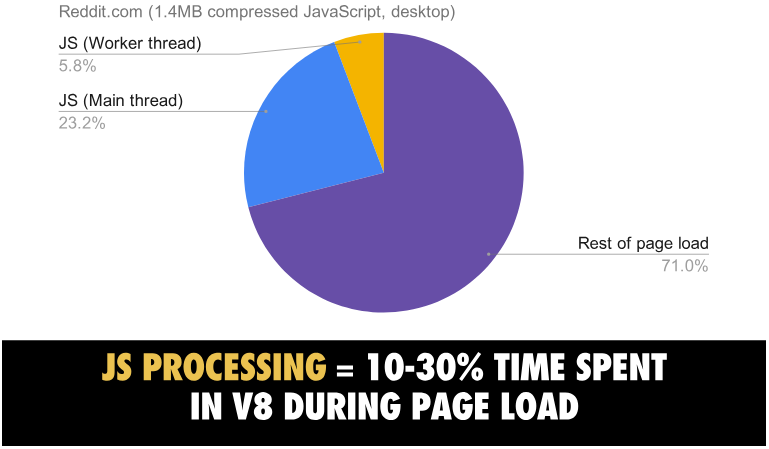

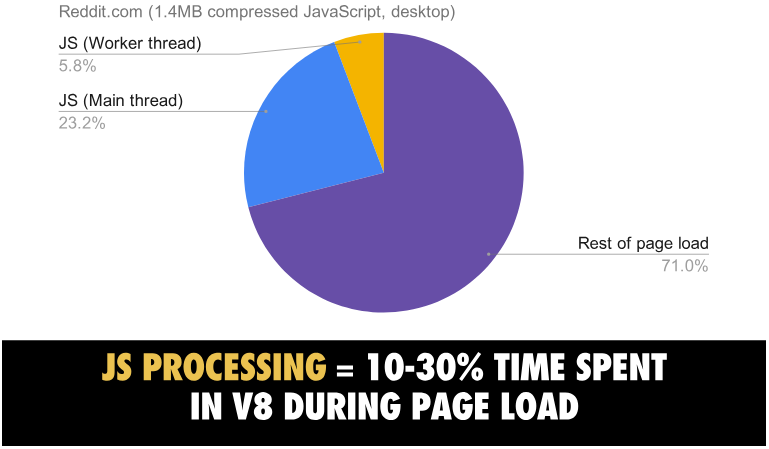

In fact, if you analyze the total time spent on loading and preparing a page in a browser like Chrome, about 30% of this time can be spent on JS code execution. Below is a load analysis of a very typical web page (reddit.com) on a high-performance desktop.

In the process of loading the page about 10-30% of the time is spent on code execution by means of V8

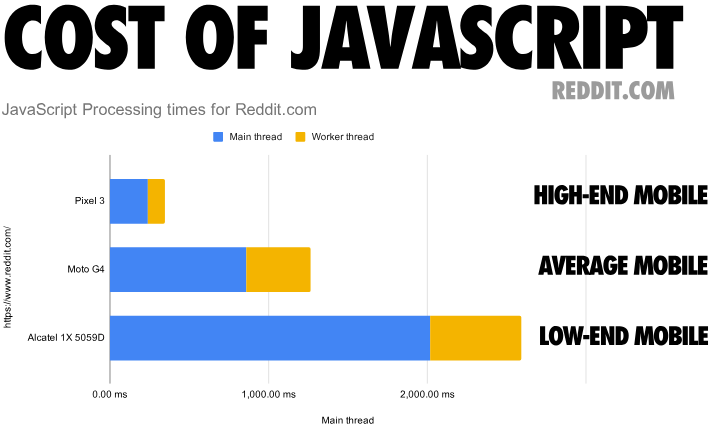

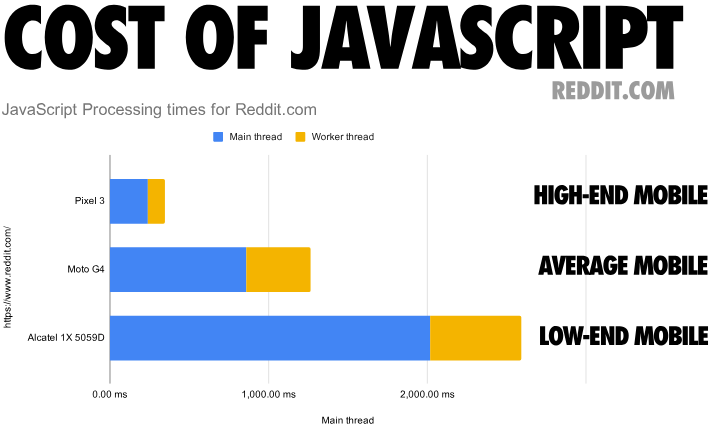

If we talk about mobile devices, then on an average phone (Moto G4) to execute the JS code reddit.com takes 3-4 times longer than on a high-level device (Pixel 3). On a weak device (Alcatel 1X worth less than $ 100) it takes at least 6 times more time to solve the same problem than on something like Pixel 3.

The time required to process the JS code on mobile devices of different classes

Please note that the mobile and desktop versions of reddit.com are different. Therefore, you can not compare the results of mobile devices and, say, MacBook Pro.

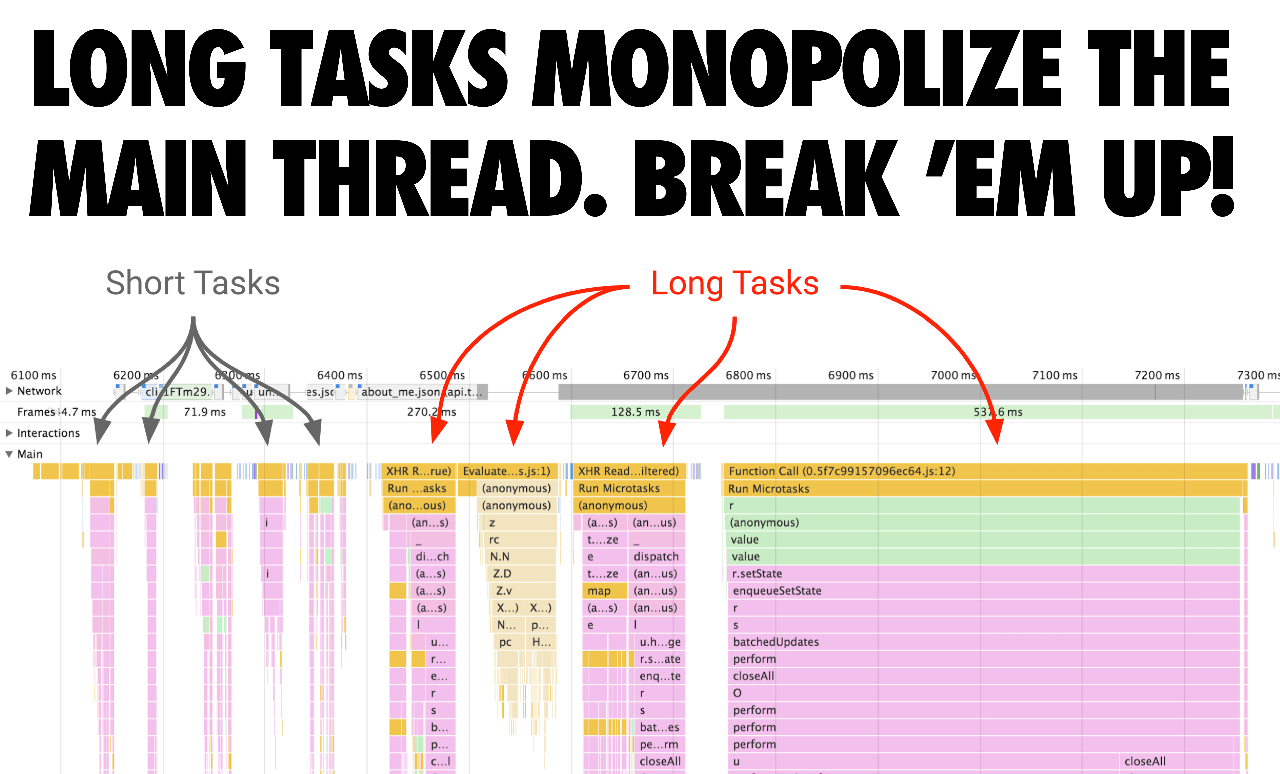

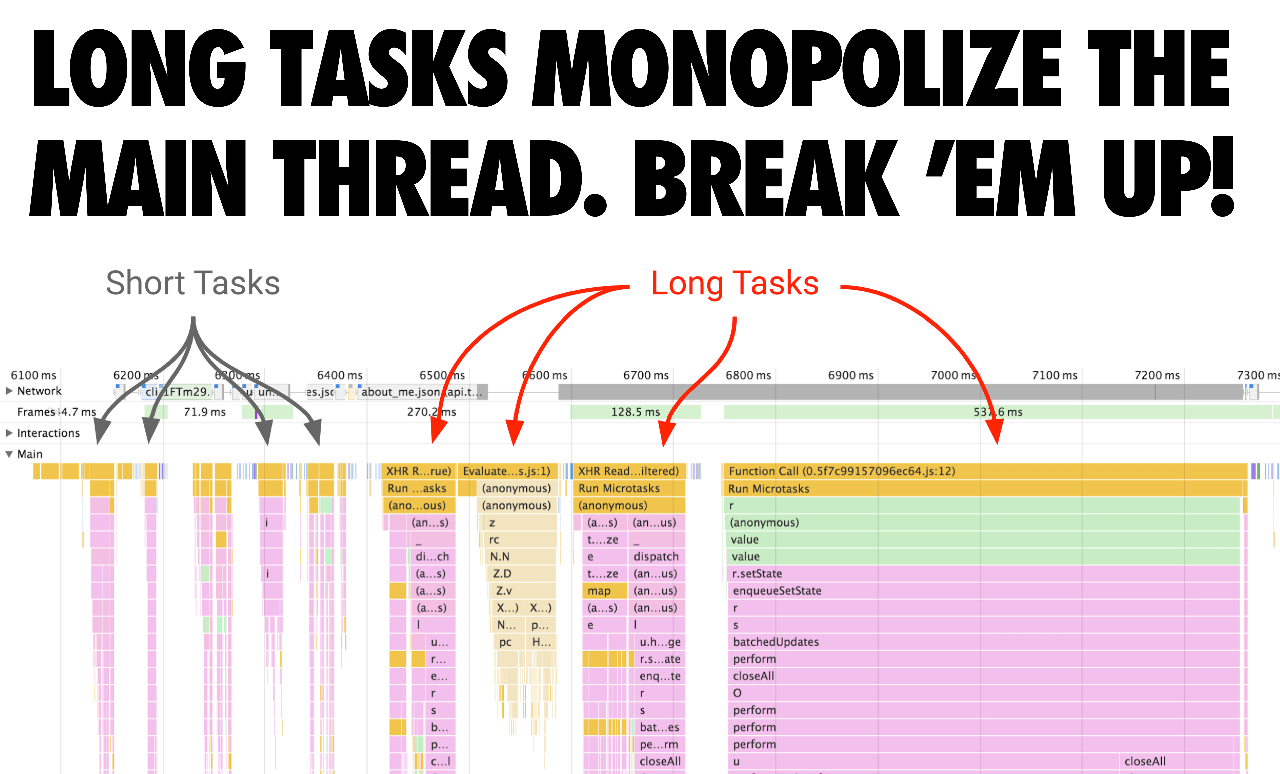

When you are trying to optimize the execution time of the JavaScript code - pay attention to long-term tasks that can permanently capture the UI stream. These tasks can interfere with the performance of other, extremely important tasks, even when externally the page looks completely ready for work. Long tasks should be broken down into smaller tasks. Dividing the code into parts and controlling the order of loading of these parts can ensure that the pages will come to an interactive state more quickly. This, hopefully, will result in users having less inconvenience in interacting with the pages.

Long tasks capture the main stream. They should be broken apart

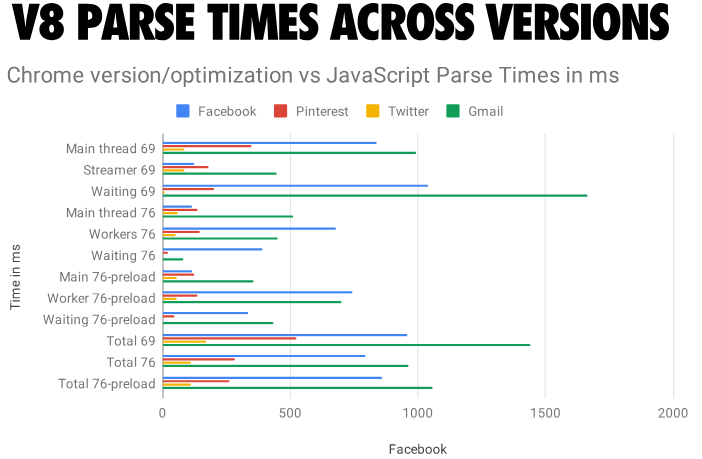

The speed of parsing the source JS code in V8, since the time of Chrome 60, has increased 2 times. At the same time, parsing and compiling now make a smaller contribution to the “JavaScript price”. This is due to other work on optimizing Chrome, leading to the parallelization of these tasks.

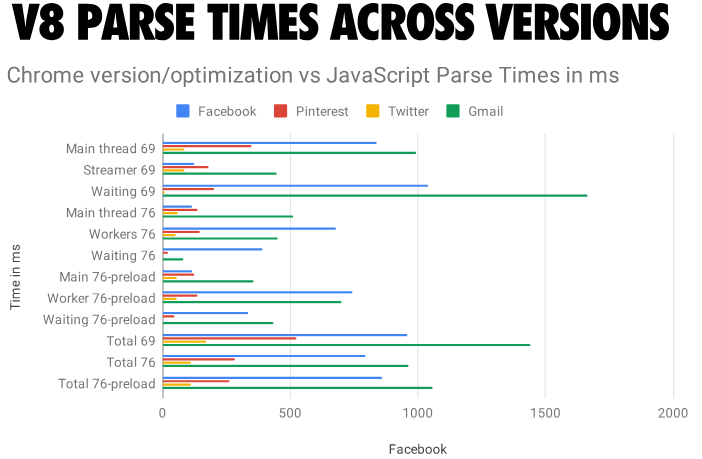

In V8, the amount of work on parsing and compiling code produced in the main stream is reduced by an average of 40%. For example, for Facebook, the improvement of this indicator was 46%, for Pinterest - 62%. The highest score of 81% was obtained for YouTube. Such results are possible due to the fact that parsing and compilation are moved to a separate stream. And this is in addition to the already existing improvements concerning the streaming solution of the same tasks outside the main stream.

JS parsing time in different versions of Chrome

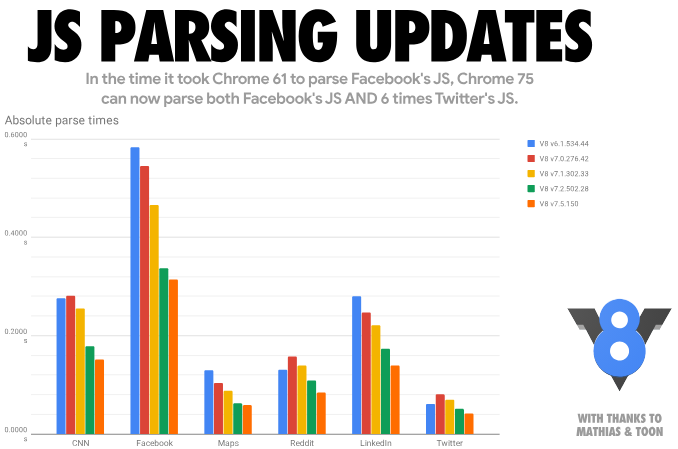

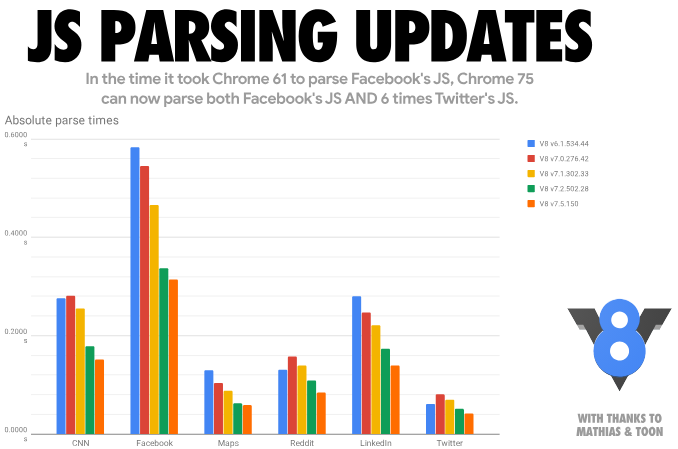

You can also visualize how the V8 optimizations produced in different versions of Chrome affect the processor time required to process the code. During the same time that Chrome 61 needed to parse the JS-code of Facebook, Chrome 75 can now parse the JS-code of Facebook and, in addition, parse the Twitter code 6 times.

In the time that Chrome 61 needed to process the JS-code of Facebook, Chrome 75 can process both the Facebook code and the six-fold amount of Twitter code

Let's talk about how these improvements were achieved. In a nutshell, the scripting resources can be parsed and compiled streaming in the workflow. This means the following:

If you talk about it all in a little more detail, then the point here is as follows. In much older versions of Chrome, the script needed to download the entire one before it could be parsed. This approach is simple and understandable, but when it is used, processor resources are not efficiently used. Chrome, between versions 41 and 68, starts parsing in asynchronous mode, immediately after the script has started loading, performing this task in a separate thread.

Scripts come to the browser fragments. V8 starts streaming data after it has at least 30 KB of code.

In Chrome 71, we moved to a task-based system. Here the scheduler can simultaneously start several sessions of asynchronous / delayed script processing. Due to this change, the load created by parsing to the main thread has decreased by about 20%. This resulted in an estimated 2% improvement in TTI / FIDs from real sites.

Chrome 71 uses a task-based code processing system. With this approach, the scheduler can process several asynchronous / deferred scripts simultaneously.

In Chrome 72, we did stream processing in the basic way of script parsing. Now even ordinary synchronous scripts are processed this way (although this does not apply to built-in scripts). In addition, we have stopped canceling parsing operations based on tasks, in case the main thread needs parsed code. This is done because it leads to the need to re-perform some part of the work already done.

In the previous version of Chrome, there was support for streaming parsing and streaming code compilation. Then the script, loaded from the network, had to first get into the main stream, and then redirected to the stream processing system scripts.

This often led to the fact that the stream parser had to wait for data that is already downloaded from the network but not yet redirected by the main stream for stream processing. This happened because the main thread could be busy with some other tasks (such as parsing HTML, creating page layout or executing JS code).

Now we are experimenting to start parsing the code when preloading pages. Previously, the implementation of such a mechanism was hampered by the need to use the resources of the main stream to transfer jobs to the stream parser. Details about parsing JS-code that runs "instantly" can be found here .

In addition to the above, it can be noted that in the developer’s tools there used to be one problem . It consisted in the fact that information about the task of parsing was derived as if they completely blocked the main stream. However, the parser performed operations blocking the main thread only when it needed new data. Since we have moved from a single-stream use scheme for stream processing to a stream processing scheme, this has become quite obvious. This is what you could see in Chrome 69.

The problem in the developer tools, because of which information about parsing scripts were displayed as if they completely blocked the main thread

Here you can see that the Parse Script task takes 1.08 seconds. But parsing javascript is actually not so slow! Most of this time, nothing useful is done except for waiting for data from the main stream.

In Chrome 76, you can see a completely different picture.

In Chrome 76, the parsing procedure is divided into many small tasks.

In general, it can be noted that the Performance tab of the developer tools is great for seeing the overall picture of what is happening on the page. In order to get more detailed information reflecting V8 features, such as parsing time and compile time, you can use the Chrome Tracing tool with RCS (Runtime Call Stats) support. In the received RCS data, you can find the indicators Parse-Background and Compile-Background. They are able to report how much time it took to parse and compile the JS code outside the main thread. The Parse and Compile values indicate how much time was spent on the corresponding actions in the main thread.

Analyzing RCS data using Google Tracing

Let's look at a few examples of how stream processing scripts influenced the viewing of real sites.

▍Reddit

View reddit.com on the MacBook Pro. Time to parse and compile JS code spent in the main and in the workflow

Reddit.com has several JS-bundles, each of which exceeds 100 Kb in size. They are wrapped with external functions, which results in large volumes of lazy compilation in the main thread. The time required for processing scripts in the main thread is crucial in the above scheme. This is due to the fact that a large load on the main thread can increase the time it takes for a page to go online. When processing the reddit.com site code, most of the time is spent in the main thread, and the resources of the working / background stream are used to a minimum.

It would be possible to optimize this site by dividing some large bundles into parts (about 50 Kb each) and doing without wrapping the code in a function. This would maximize parallel processing of scripts. As a result, bundles could be simultaneously parsed and compiled in streaming mode. This would reduce the load on the main stream when preparing the page for work.

▍Facebook

View facebook.com site on macbook pro. Time to parse and compile JS code spent in the main and in the workflow

We can also consider a site like facebook.com, which uses about 6 MB of compressed JS code. This code is loaded using approximately 292 requests. Some of them are asynchronous, some are aimed at preloading data, some have low priority. Most Facebook scripts are small and narrow. This can well affect the parallel processing of data by means of background / workflows. The fact is that many small scripts can be simultaneously parsed and compiled by means of stream processing scripts.

Please note that your site is probably different from Facebook. You probably do not have applications that are kept open for a long time (like the one that is the Facebook site or the Gmail interface), and when working with which, it can be justified to load such serious volumes of scripts with a desktop browser. But despite this, we can give a general recommendation that is valid for any projects. It lies in the fact that the application code should be broken into bundles of a modest size, and the fact that you need to download these bundles only when they are needed.

Although most of the work on parsing and compiling JS code can be performed using streaming tools in a background thread, some operations still require a main stream. When the main thread is busy with something, the page cannot respond to user input. Therefore, it is recommended to pay attention to the impact on the UX sites have loading and executing JS-code.

Consider that now not all JavaScript engines and browsers implement stream processing of scripts and optimize their loading. But despite this, we hope that the general optimization principles outlined above can improve the user experience of working with sites viewed in any of the existing browsers.

Parsing JSON code can be much more efficient than parsing JavaScript code. The point is that the JSON grammar is much simpler than the JavaScript grammar. This knowledge can be applied in order to improve the speed of preparing for web applications that use large configuration objects (such as Redux repositories), whose structure resembles JSON code. As a result, it turns out that instead of presenting data in the form of object literals embedded in the code, you can represent them as strings of JSON objects and parse these objects during program execution.

The first approach, using JS objects, looks like this:

The second approach, using JSON strings, involves the use of such constructs:

Since JSON strings must be processed only once, the approach that uses

Using object literals as repositories for large amounts of data carries another threat. The idea is that there is a risk that such literals can be processed twice:

It is impossible to get rid of the first pass of processing object literals. But, fortunately, the second pass can be avoided by placing object literals at the top level or inside PIFE .

Optimize the performance of the site for those cases when users visit them more than once, thanks to the caching capabilities of the code and byte-code. When a script is requested from the server for the first time, Chrome downloads it and sends it to V8 for compilation. The browser also saves the file of this script in its disk cache. When the second request is made to download the same JS file, Chrome takes it from the browser cache and passes the V8 again for compilation. This time, however, the compiled code is serialized and attached to the cached script file as metadata.

The work of the code caching system in V8

When a script is requested for the third time, Chrome takes the file and its metadata from the cache, and then passes the V8 and both. V8 deserializes metadata and, as a result, may skip the compilation step. Code caching is triggered if visits are made to the site within 72 hours. Chrome, moreover, uses the strategy of greedy caching code when a service worker is used to cache scripts. Details about caching code can be read here .

In 2019, the main performance bottlenecks of web pages are loading and executing scripts. In order to improve the situation - strive to use synchronous (embedded) scripts of small sizes, which are necessary for organizing user interaction with the part of the page that is visible to him immediately after loading. The scripts used to serve other parts of the pages are recommended to be loaded in deferred mode. Break large bundles into small pieces. This will facilitate the implementation of the strategy for working with code, the application of which will only load code when it is needed, and only where it is needed. This will allow the maximum use of V8 features aimed at parallel code processing.

If you are developing mobile projects, then you should strive to ensure that they use as little as possible JS-code. This recommendation stems from the fact that mobile devices typically operate on fairly slow networks. Such devices, in addition, may be limited in terms of available RAM and processor resources available to them. Try to find a balance between the time required to prepare scripts uploaded from the network and using the cache. This will maximize the amount of work involved in parsing and compiling code that runs outside the main thread.

Dear readers! Do you optimize your web projects taking into account the peculiarities of processing JS-code with modern browsers?

A user's interaction with a site can be temporarily blocked while the browser is busy executing JavaScript. Optimizing the bottlenecks in script loading and execution can therefore have a strong positive impact on site performance.

General recommendations for website optimization

What does this mean for web developers? Parsing and compiling scripts is no longer as expensive as it used to be. Therefore, when analyzing and optimizing JavaScript bundles, developers should follow these three recommendations:

- Reduce the time required to download scripts.
  - Keep your JS bundles small. This is especially important for sites designed for mobile devices. Small bundles improve download time, lower memory usage, and reduce CPU load.
  - Avoid shipping the entire project as a single large bundle. If a bundle exceeds roughly 50-100 KB, split it into separate, smaller chunks. Thanks to HTTP/2 multiplexing, multiple requests and responses can be in flight at the same time, which reduces the overhead of the additional requests.
  - On mobile projects, ship as little code as possible, both because of low data rates on mobile networks and to keep memory usage down.
- Reduce the time required to execute scripts.
  - Avoid long tasks that keep the main thread busy for a long time and delay the moment when users can interact with the page. Today, executing scripts after they have been downloaded is the dominant part of the cost of JavaScript.
- Do not inline large scripts into pages.
  - A good rule of thumb: if a script is larger than 1 KB, avoid inlining it. One reason is that 1 KB is the threshold above which Chrome starts code caching for external scripts. Also keep in mind that inline scripts are still parsed and compiled on the main thread.
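The size thresholds above are easy to check automatically as part of a build. The sketch below is a hypothetical helper, not part of any real bundler: it assumes your build already produces a list of `{ name, bytes, inline }` records, treats 1 KB as 1024 bytes, and uses 100 KB (the upper end of the 50-100 KB guideline) as the bundle limit.

```javascript
// Thresholds from the recommendations above. Treating 1 KB as 1024 bytes
// is an assumption of this sketch.
const KB = 1024;
const MAX_BUNDLE_BYTES = 100 * KB; // upper end of the 50-100 KB guideline
const MAX_INLINE_BYTES = 1 * KB;   // larger scripts should be external files

// Hypothetical helper: `assets` is a list of { name, bytes, inline } records
// produced by your own build tooling. Returns one warning per violation.
function checkScriptBudget(assets) {
  const warnings = [];
  for (const { name, bytes, inline } of assets) {
    if (inline && bytes > MAX_INLINE_BYTES) {
      warnings.push(`${name}: inline script is ${bytes} B, move it to an external file`);
    } else if (!inline && bytes > MAX_BUNDLE_BYTES) {
      warnings.push(`${name}: bundle is ${bytes} B, consider splitting it`);
    }
  }
  return warnings;
}

const report = checkScriptBudget([
  { name: "main.js", bytes: 180 * KB, inline: false },
  { name: "vendor.js", bytes: 40 * KB, inline: false },
  { name: "boot", bytes: 3 * KB, inline: true },
]);
console.log(report.join("\n"));
```

In a real pipeline you would probably fail the build (or at least print a prominent warning) when the list is non-empty.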

Why is loading and executing scripts so important?

Why is it important to optimize script download and execution time today? Download time is critical when sites are used over slow networks. Even though 4G (and even 5G) networks are spreading around the world, for many mobile connections the NetworkInformation.effectiveType property reports values at the level of 3G or even lower.
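Where the Network Information API is available, a page can read `navigator.connection.effectiveType` and postpone optional downloads on slow connections. The following is a minimal sketch under assumptions: the function name is hypothetical, which connection types count as "slow" is a judgment call, and the API is not supported in every browser, so the fallback branch matters.

```javascript
// effectiveType values treated as "slow" here; where to draw the line
// is a judgment call, not something the API prescribes.
const SLOW_TYPES = new Set(["slow-2g", "2g", "3g"]);

// Hypothetical helper: decide whether to fetch an optional, heavy bundle now.
// `connection` is expected to look like navigator.connection, which is
// undefined in browsers without the Network Information API.
function shouldPrefetchHeavyBundle(connection) {
  if (!connection || typeof connection.effectiveType !== "string") {
    return true; // API unavailable: keep the default behaviour
  }
  if (connection.saveData) {
    return false; // the user has asked to reduce data usage
  }
  return !SLOW_TYPES.has(connection.effectiveType);
}

// In a browser: shouldPrefetchHeavyBundle(navigator.connection)
console.log(shouldPrefetchHeavyBundle({ effectiveType: "3g" }));
```

Falling back to `true` when the API is missing keeps behaviour unchanged in unsupported browsers; a more conservative site could choose the opposite default.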

The time it takes to execute a JS code is important for mobile devices with slow processors. Due to the fact that various CPUs and GPUs are used in mobile devices, due to the fact that when devices overheat, for the sake of their protection, the performance of their components decreases, a serious gap between the performance of expensive and cheap phones and tablets can be observed. This greatly affects the performance of the JavaScript code, since the ability to execute such a code by some device is limited by the processor's capabilities of this device.

In fact, if you analyze the total time spent on loading and preparing a page in a browser like Chrome, about 30% of this time can be spent on JS code execution. Below is a load analysis of a very typical web page (reddit.com) on a high-performance desktop.

In the process of loading the page about 10-30% of the time is spent on code execution by means of V8

If we talk about mobile devices, then on an average phone (Moto G4) to execute the JS code reddit.com takes 3-4 times longer than on a high-level device (Pixel 3). On a weak device (Alcatel 1X worth less than $ 100) it takes at least 6 times more time to solve the same problem than on something like Pixel 3.

The time required to process the JS code on mobile devices of different classes

Please note that the mobile and desktop versions of reddit.com are different. Therefore, you can not compare the results of mobile devices and, say, MacBook Pro.

When you are trying to optimize the execution time of the JavaScript code - pay attention to long-term tasks that can permanently capture the UI stream. These tasks can interfere with the performance of other, extremely important tasks, even when externally the page looks completely ready for work. Long tasks should be broken down into smaller tasks. Dividing the code into parts and controlling the order of loading of these parts can ensure that the pages will come to an interactive state more quickly. This, hopefully, will result in users having less inconvenience in interacting with the pages.

Long tasks capture the main stream. They should be broken apart
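One common way to break up a long task is to process work in chunks and yield to the event loop between them, so the browser gets a chance to handle input and render in between. The sketch below assumes the work is a list of independent items; `processInChunks` and the default chunk size of 100 are hypothetical, and `setTimeout(..., 0)` is used simply because it is available in every environment.

```javascript
// Hypothetical helper: resolve on a later macrotask, ending the current task
// so that other queued work (e.g. input handling) can run before we continue.
function yieldToEventLoop() {
  return new Promise((resolve) => setTimeout(resolve, 0));
}

// Hypothetical helper: process `items` in chunks instead of one long task.
// The default chunk size is arbitrary; tune it by measuring on real devices.
async function processInChunks(items, processItem, chunkSize = 100) {
  const results = [];
  for (let start = 0; start < items.length; start += chunkSize) {
    for (const item of items.slice(start, start + chunkSize)) {
      results.push(processItem(item));
    }
    if (start + chunkSize < items.length) {
      await yieldToEventLoop(); // end the current task before the next chunk
    }
  }
  return results;
}

processInChunks([...Array(1000).keys()], (n) => n * n).then((squares) => {
  console.log(squares.length); // 1000
});
```

Smaller chunks keep each task short but add scheduling overhead; larger chunks do the opposite, which is why the size is left as a parameter.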

How to improve V8 affect the acceleration of parsing and compiling scripts?

The speed of parsing the source JS code in V8, since the time of Chrome 60, has increased 2 times. At the same time, parsing and compiling now make a smaller contribution to the “JavaScript price”. This is due to other work on optimizing Chrome, leading to the parallelization of these tasks.

In V8, the amount of work on parsing and compiling code produced in the main stream is reduced by an average of 40%. For example, for Facebook, the improvement of this indicator was 46%, for Pinterest - 62%. The highest score of 81% was obtained for YouTube. Such results are possible due to the fact that parsing and compilation are moved to a separate stream. And this is in addition to the already existing improvements concerning the streaming solution of the same tasks outside the main stream.

JS parsing time in different versions of Chrome

You can also visualize how the V8 optimizations produced in different versions of Chrome affect the processor time required to process the code. During the same time that Chrome 61 needed to parse the JS-code of Facebook, Chrome 75 can now parse the JS-code of Facebook and, in addition, parse the Twitter code 6 times.

In the time that Chrome 61 needed to process the JS-code of Facebook, Chrome 75 can process both the Facebook code and the six-fold amount of Twitter code

How were these improvements achieved? In short, script resources can be stream-parsed and compiled on a worker thread. This means that:

- V8 can parse and compile JS code without blocking the main thread.
- Streaming starts as soon as the full HTML parser encounters a `<script>` tag. For parser-blocking scripts, the HTML parser waits; for async scripts, it keeps going.
- For most real-world network connection speeds, V8 parses code faster than it can be downloaded. As a result, V8 finishes parsing and compiling a few milliseconds after the last bytes of the script have arrived.

In more detail: in much older versions of Chrome, a script had to be downloaded in its entirety before parsing could begin. This approach is simple, but it does not use the CPU efficiently. Between versions 41 and 68, Chrome started parsing async and deferred scripts on a separate thread as soon as they began downloading.

Scripts arrive in the browser in chunks. V8 starts streaming once it has received at least 30 KB of code.
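To make the 30 KB threshold concrete, here is a toy model of a script source that buffers incoming network chunks and only starts handing them to a (background) parser once 30 KB have arrived. It is an illustration under assumptions, not V8's implementation: the class and callback names are hypothetical, and what happens to scripts that never reach the threshold is simplified to a single flush when the download finishes.

```javascript
// Threshold from the text above; everything else is a hypothetical toy model.
const STREAMING_THRESHOLD_BYTES = 30 * 1024;

class ToyStreamingSource {
  constructor(onStart, onChunk) {
    this.onStart = onStart;   // called once, when streaming begins
    this.onChunk = onChunk;   // receives chunks for (background) parsing
    this.pending = [];        // chunks held back until the threshold is met
    this.pendingBytes = 0;
    this.streaming = false;
  }

  // Called for every network chunk (a Uint8Array) as it arrives.
  push(chunk) {
    if (this.streaming) {
      this.onChunk(chunk); // already streaming: forward immediately
      return;
    }
    this.pending.push(chunk);
    this.pendingBytes += chunk.byteLength;
    if (this.pendingBytes >= STREAMING_THRESHOLD_BYTES) {
      this.streaming = true;
      this.onStart();
      this.flush();
    }
  }

  // Called when the download completes; hands over anything still held back.
  finish() {
    this.flush();
  }

  flush() {
    for (const chunk of this.pending) this.onChunk(chunk);
    this.pending = [];
    this.pendingBytes = 0;
  }
}

const log = [];
const source = new ToyStreamingSource(
  () => log.push("start"),
  (chunk) => log.push(chunk.byteLength),
);
for (let i = 0; i < 4; i++) source.push(new Uint8Array(10 * 1024));
source.finish();
console.log(log.join(", ")); // start, 10240, 10240, 10240, 10240
```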

In Chrome 71, we moved to a task-based system in which the scheduler can run several async/deferred script-processing jobs at once. Thanks to this change, the parsing load on the main thread dropped by about 20%, which yielded an estimated 2% improvement in TTI (Time to Interactive) and FID (First Input Delay) on real sites.

Chrome 71 uses a task-based code processing system. With this approach, the scheduler can process several async/deferred scripts at the same time.
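The idea of a scheduler that works on several scripts at once can be illustrated with a small concurrency-limited task runner. This is a hypothetical sketch, not Chrome's scheduler: each task stands in for "parse and compile one async/deferred script", and `limit` stands in for the number of worker threads available.

```javascript
// Toy scheduler: run at most `limit` tasks at once, keeping results in order.
// Each task is a function returning a promise; here it stands in for
// "parse and compile one async/deferred script". Not Chrome's implementation.
async function runTasks(tasks, limit) {
  const results = new Array(tasks.length);
  let next = 0;

  async function worker() {
    while (next < tasks.length) {
      const index = next++; // claim the next unstarted task
      results[index] = await tasks[index]();
    }
  }

  const workerCount = Math.max(1, Math.min(limit, tasks.length));
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}

// Usage with fake "parse" jobs of different durations (in ms):
const fakeParse = (name, ms) => () =>
  new Promise((resolve) => setTimeout(() => resolve(`${name} parsed`), ms));

runTasks([fakeParse("a.js", 30), fakeParse("b.js", 10), fakeParse("c.js", 10)], 2)
  .then((results) => console.log(results));
```

While the slow `a.js` job is running, the second slot works through `b.js` and `c.js`, which is the benefit of not processing scripts strictly one after another.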

In Chrome 72, we made streaming the main way scripts are parsed: now even regular synchronous scripts are streamed (although this does not apply to inline scripts). We also stopped cancelling task-based parsing when the main thread needs the parsed code, because cancelling meant redoing part of the work that had already been done.

Previous versions of Chrome supported streaming parsing and compilation, but script data coming from the network first had to reach the main thread, which then forwarded it to the streamer.

As a result, the streaming parser often had to wait for data that had already been downloaded but not yet forwarded by the main thread. This happened because the main thread could be busy with other work (such as parsing HTML, doing layout, or executing JS code).

We are now experimenting with starting parsing during preloading. Previously, this was hampered by the need to use main-thread resources to hand jobs over to the streaming parser. Details about parsing JS code "instantly" can be found here.

How have these improvements changed what you see in the developer tools?

In addition, there used to be a problem in the developer tools: parsing tasks were displayed as if they completely blocked the main thread. However, the parser blocked the main thread only when it was waiting for new data. Once we moved from a single streaming thread to streaming tasks, this became quite obvious. Here is what you could see in Chrome 69.

The problem in the developer tools that made script parsing look as if it completely blocked the main thread

Here you can see that the Parse Script task takes 1.08 seconds. But parsing JavaScript is not actually that slow! Most of that time is spent doing nothing except waiting for data to arrive via the main thread.

Chrome 76 shows a completely different picture.

In Chrome 76, parsing is split into many small tasks

In general, the Performance tab of the developer tools is great for getting an overall picture of what is happening on the page. For more detailed, V8-specific information such as parse and compile times, you can use Chrome Tracing with Runtime Call Stats (RCS). In the RCS data, the Parse-Background and Compile-Background groups show how much time was spent parsing and compiling JS code off the main thread, while the Parse and Compile groups show how much time the corresponding work took on the main thread.

Analyzing RCS data using Google Tracing
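Once you have read the per-group totals off the RCS view, a quick calculation shows how much of the parse/compile work ran off the main thread. The input shape below is hypothetical (a plain object you fill in by hand, not a Chrome export format); the group names are the ones mentioned in the text, and the numbers in the usage example are made up.

```javascript
// Hypothetical input: per-group RCS totals in milliseconds, copied by hand.
// Group names follow the text; missing groups count as zero.
function offMainThreadShare(stats) {
  const background = (stats["Parse-Background"] ?? 0) + (stats["Compile-Background"] ?? 0);
  const mainThread = (stats["Parse"] ?? 0) + (stats["Compile"] ?? 0);
  const total = background + mainThread;
  return total === 0 ? 0 : background / total;
}

// Made-up numbers, for illustration only.
const share = offMainThreadShare({
  "Parse-Background": 120,
  "Compile-Background": 60,
  Parse: 30,
  Compile: 30,
});
// prints "75% of parse/compile time was off the main thread"
console.log(`${Math.round(share * 100)}% of parse/compile time was off the main thread`);
```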

How did the changes affect real sites?

Let's look at a few examples of how script streaming has affected the loading of real sites.

▍Reddit

Viewing reddit.com on a MacBook Pro: time spent parsing and compiling JS code on the main thread and on worker threads

Reddit.com has several JS bundles, each larger than 100 KB. They are wrapped in outer functions, which causes a lot of lazy compilation on the main thread. In this scheme, main-thread processing time is what matters most, because a heavily loaded main thread can delay the moment when the page becomes interactive. For reddit.com, most of the time is spent on the main thread, and the worker/background threads are barely used.

The site could be optimized by splitting some of the large bundles into smaller ones (about 50 KB each) and not wrapping the code in a function. This would maximize parallelism: the bundles could then be stream-parsed and compiled at the same time, reducing main-thread load while the page is being prepared.
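Splitting a large bundle into parts of about 50 KB can be sketched as a greedy packing of modules in their original order. This is a simplified illustration with hypothetical module names and sizes; real bundlers such as webpack or Rollup use far more elaborate splitting heuristics (shared dependencies, long-term caching, and so on).

```javascript
// Hypothetical sketch: greedily pack modules, in their original order, into
// chunks of at most `maxBytes`. A module larger than the limit gets its own chunk.
function splitIntoChunks(modules, maxBytes = 50 * 1024) {
  const chunks = [];
  let current = [];
  let currentBytes = 0;
  for (const mod of modules) {
    if (current.length > 0 && currentBytes + mod.bytes > maxBytes) {
      chunks.push(current); // close the current chunk before it overflows
      current = [];
      currentBytes = 0;
    }
    current.push(mod.name);
    currentBytes += mod.bytes;
  }
  if (current.length > 0) chunks.push(current);
  return chunks;
}

const chunks = splitIntoChunks([
  { name: "router", bytes: 30 * 1024 },
  { name: "store", bytes: 15 * 1024 },
  { name: "charts", bytes: 10 * 1024 },
  { name: "editor", bytes: 60 * 1024 },
  { name: "i18n", bytes: 5 * 1024 },
]);
console.log(JSON.stringify(chunks)); // [["router","store"],["charts"],["editor"],["i18n"]]
```

Several chunks of this size can then be downloaded in parallel over HTTP/2 and, per the text, stream-parsed and compiled at the same time.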

▍Facebook

Viewing facebook.com on a MacBook Pro: time spent parsing and compiling JS code on the main thread and on worker threads

We can also look at a site like facebook.com, which uses about 6 MB of compressed JS code loaded through roughly 292 requests. Some of them are async, some are preloads, and some have low priority. Most of Facebook's scripts are small and granular, which works well for parallel processing on background/worker threads: many small scripts can be stream-parsed and compiled at the same time.

Note that your site is probably different from Facebook. You probably don't have long-lived applications (like Facebook or the Gmail interface) for which shipping this much script to a desktop browser can be justified. Still, one general recommendation holds for any project: split your application code into modestly sized bundles and load them only when they are needed.
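Loading bundles only when they are needed is usually done with dynamic `import()`. The hypothetical `lazy` helper below wraps a loader function so that the code is requested on first use and only once, even if several callers ask for it at the same time; after a failed download it allows a retry. The module path and export in the usage comment are made up.

```javascript
// Hypothetical helper: wrap a loader (typically `() => import("./feature.js")`)
// so the code is fetched on first use, and only once even if several callers
// ask for it concurrently. A failed load is forgotten so it can be retried.
function lazy(loader) {
  let pending = null;
  return function load() {
    if (pending === null) {
      pending = loader().catch((error) => {
        pending = null; // allow a later retry after a failed download
        throw error;
      });
    }
    return pending;
  };
}

// Browser usage (the module path and its export are hypothetical):
// const loadEditor = lazy(() => import("./editor.js"));
// editButton.addEventListener("click", async () => {
//   const { openEditor } = await loadEditor();
//   openEditor();
// });
```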

Although most of the work of parsing and compiling JS code can be done via streaming on a background thread, some operations still have to run on the main thread. While the main thread is busy, the page cannot respond to user input. So keep an eye on how loading and executing JS code affects your site's UX.

Keep in mind that not all JavaScript engines and browsers currently implement script streaming and loading optimizations. Still, we hope the general optimization principles outlined above can improve the user experience of sites in any browser.

The cost of parsing JSON

Parsing JSON can be much more efficient than parsing JavaScript, because the JSON grammar is much simpler than the JavaScript grammar. You can use this to speed up the startup of web applications that ship large JSON-like configuration objects (such as Redux stores): instead of embedding the data in the code as object literals, represent it as a JSON string and parse it at run time.

The first approach, using JS objects, looks like this:

```javascript
const data = { foo: 42, bar: 1337 };
```

The second approach, using JSON strings, looks like this:

```javascript
const data = JSON.parse('{"foo":42,"bar":1337}');
```

As long as the JSON string is evaluated only once, the JSON.parse approach is much faster than a JavaScript object literal, especially on cold page loads. Using JSON strings is recommended for objects of 10 KB or larger. However, as with any performance advice, don't follow it blindly: before using this technique in production, measure its real impact on your project.

Using object literals to store large amounts of data carries another risk: such literals may be processed twice:

- The first pass happens when the literal is preparsed.
- The second pass happens when the literal is lazily parsed.

The first pass cannot be avoided. Fortunately, the second pass can be avoided by placing object literals at the top level or inside a PIFE.
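The JSON.parse form does not have to be written by hand; it can be generated at build time from the original object. The helper below is a hypothetical sketch: the nested `JSON.stringify` calls first produce the JSON text and then turn that text into a correctly escaped JavaScript string literal. Only JSON-compatible values survive the round trip (no functions, `undefined`, Dates, Maps, and so on), and, as noted above, it is worth doing only for large objects (10 KB or more) after measuring.

```javascript
// Hypothetical build-time helper: emit source code that embeds `value`
// as a JSON string passed to JSON.parse instead of as an object literal.
function emitJsonParseModule(name, value) {
  // Inner stringify: the JSON text. Outer stringify: a safely escaped
  // JavaScript string literal containing that text.
  const literal = JSON.stringify(JSON.stringify(value));
  return `const ${name} = JSON.parse(${literal});`;
}

console.log(emitJsonParseModule("data", { foo: 42, bar: 1337 }));
// const data = JSON.parse("{\"foo\":42,\"bar\":1337}");
```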

What about parsing and compiling code on repeat visits?

V8's code and bytecode caching can improve a site's performance for users who visit it more than once. When a script is requested from the server for the first time, Chrome downloads it and hands it to V8 for compilation. The browser also stores the script file in its disk cache. When the same JS file is requested a second time, Chrome takes it from the browser cache and hands it to V8 to compile again. This time, however, the compiled code is serialized and attached to the cached script file as metadata.

How code caching works in V8

When the script is requested a third time, Chrome takes both the file and its metadata from the cache and hands them to V8. V8 deserializes the metadata and can therefore skip compilation. Code caching kicks in if the repeat visits happen within 72 hours. In addition, Chrome uses eager code caching when a service worker is used to cache scripts. Details about code caching can be found here.
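The three-visit sequence above can be summarized as a small state machine. This is a simplified, hypothetical model: the 72-hour window comes from the text, but treating a late repeat visit as a fresh "first" visit is an assumption of this sketch, and the service-worker (eager caching) case is ignored.

```javascript
// Simplified, hypothetical model of V8 code caching across visits.
// The 72-hour window comes from the text; the data structures are made up,
// and the service-worker (eager caching) case is ignored.
const CODE_CACHE_WINDOW_MS = 72 * 60 * 60 * 1000;

function createScriptCache() {
  const entries = new Map(); // url -> { firstSeen, hasCodeCache }

  return function request(url, now) {
    const entry = entries.get(url);
    if (!entry) {
      entries.set(url, { firstSeen: now, hasCodeCache: false });
      return "download + compile; script stored in disk cache";
    }
    if (entry.hasCodeCache) {
      return "deserialize cached code; compilation skipped";
    }
    if (now - entry.firstSeen <= CODE_CACHE_WINDOW_MS) {
      entry.hasCodeCache = true;
      return "compile again; serialized code attached as metadata";
    }
    // Assumption of this sketch: a late repeat visit restarts the window.
    entry.firstSeen = now;
    return "compile again; too late for code caching";
  };
}

const HOUR = 60 * 60 * 1000;
const request = createScriptCache();
console.log(request("/app.js", 0));
console.log(request("/app.js", 10 * HOUR));
console.log(request("/app.js", 20 * HOUR));
```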

Summary

In 2019, the main performance bottlenecks of web pages are script download and execution. To improve the situation, use small synchronous (inline) scripts only for the interactivity users need in the part of the page that is visible immediately after loading. Load the scripts that serve other parts of the page in deferred mode. Split large bundles into small pieces. This makes it easier to load code only when and where it is needed, and lets you make the most of V8's parallel code processing.

If you are developing mobile projects, aim to ship as little JS code as possible. Mobile devices typically operate on fairly slow networks and may also be limited in available RAM and CPU resources. Try to balance the time needed to download and prepare scripts from the network against cache usage; this maximizes the amount of parsing and compiling done off the main thread.

Dear readers! When optimizing your web projects, do you take into account how modern browsers process JS code?

Source: https://habr.com/ru/post/459296/
