Running with prostheses: next-gen simulation of human movement with muscles, bones and neural networks

Researchers at Seoul National University have published a study on simulating the movement of bipedal characters driven by joint mechanics and muscle contractions, controlled by a neural network trained with Deep Reinforcement Learning. Below is a translation of the author's overview.

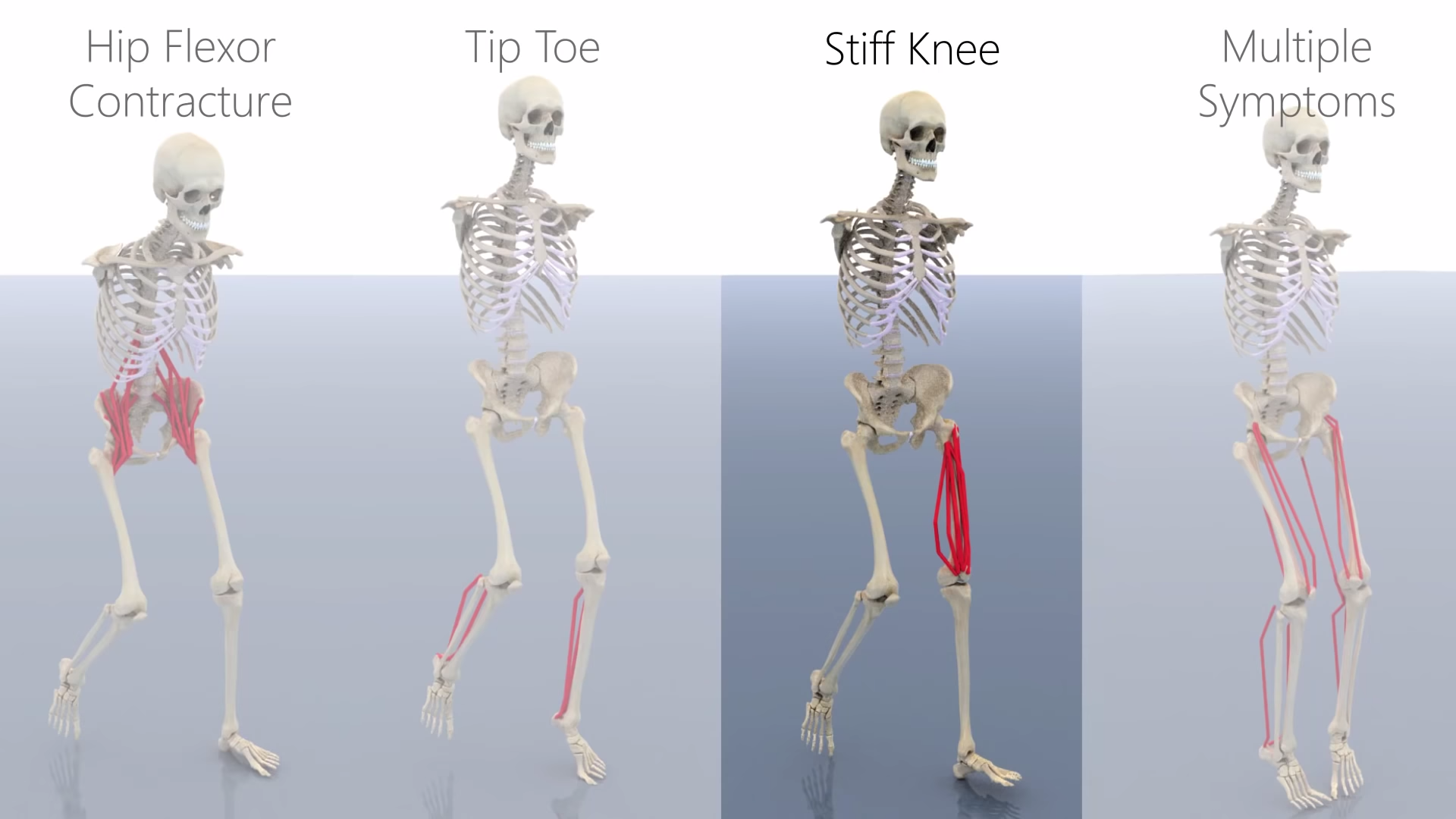

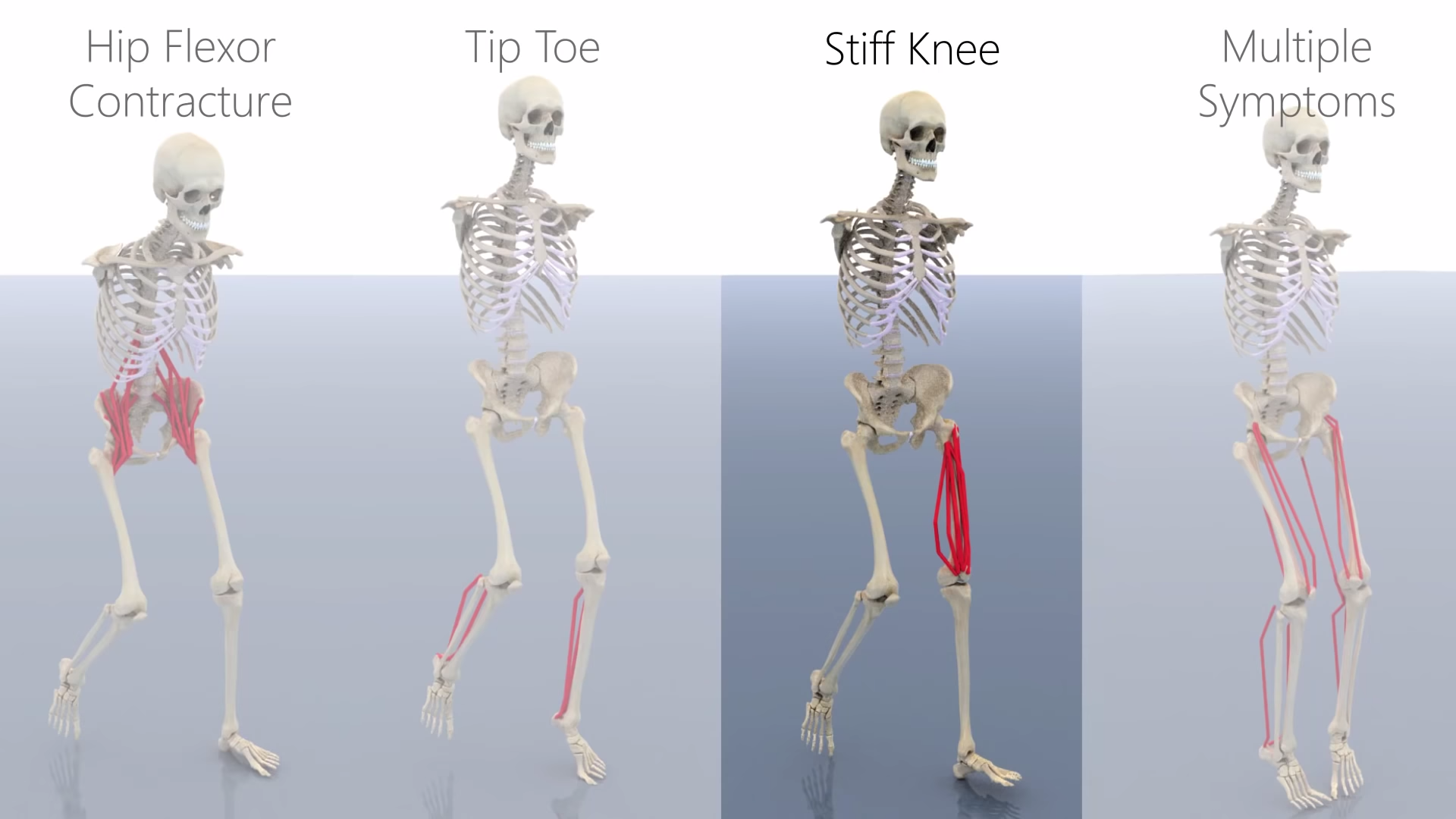

My name is Jehee Lee. I am a professor at Seoul National University and a computer graphics researcher with over 25 years of experience. I study new ways of understanding, representing and simulating human and animal movement.

Physics-based simulation of bipedal character movement has been a well-known problem in robotics and computer graphics since the mid-1980s. In the 1990s, most bipedal controllers were based on a simplified dynamic model (for example, an inverted pendulum), which made it possible to use a balance strategy that can be derived as a closed-form equation. Since 2007, controllers that use full-body dynamics have appeared, and progress in the area has been rapid. Notably, optimal control theory and stochastic optimization methods such as CMA-ES were the main tools for keeping simulated bipeds balanced.


Gradually, researchers built more detailed models of the human body. In 1990, the inverted pendulum model had fewer than five degrees of freedom. In 2007, the dynamic model was a 2D figure driven by motors at the joints, with dozens of degrees of freedom. In 2009-2010, full 3D models with 100 degrees of freedom appeared.

In 2012-2014, controllers for muscle-driven biomechanical models appeared. At every time step, the controller sends a stimulation signal to each individual muscle. Muscle contraction pulls on the attached bones and sets them in motion. In our work, we used 326 muscles to move the model, covering all the major muscles of the human body except some small ones.

Difficulty in controlling the movement of a two-legged character

The number of degrees of freedom of the dynamic system has grown rapidly since 2007. Previous approaches to controller design suffered from the “curse of dimensionality”: the required computing resources (time and memory) grow exponentially with the number of degrees of freedom.
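To make the exponential growth concrete, here is a minimal sketch that counts the cells of a uniform grid laid over the state space. The resolution of 10 bins per degree of freedom and the function name `grid_cells` are illustrative assumptions, not figures or code from the study.

```python
def grid_cells(dof: int, bins_per_dof: int = 10) -> int:
    """Number of grid cells when every degree of freedom is split into equal bins."""
    return bins_per_dof ** dof


# Degrees of freedom mentioned in the text: inverted pendulum, 2D figure, full 3D model.
for dof in (5, 30, 100):
    print(f"{dof:>3} DoF -> {grid_cells(dof):.3e} cells")
```

Even at this coarse resolution, a 100-DoF model would need far more cells than any tabular method could store, which is why a compact function approximator is attractive.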

We used Deep Reinforcement Learning to address the complexity of the musculoskeletal model and the scalability of bipedal control. Neural networks can efficiently represent and store high-dimensional control policies (a policy is a function that maps states to actions) and explore unseen states and actions.

New approach

The main improvement is in how we handle muscle activation across the whole body. We built a hierarchical network whose upper level learns to imitate joint movement at a low frame rate (30 Hz), while its lower level learns to stimulate the muscles at a high frequency (1500 Hz).
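The two update rates can be pictured as a nested loop: one upper-level decision per 30 Hz frame, followed by 50 lower-level muscle updates at 1500 Hz. The sketch below only illustrates that timing structure. The random linear "policies", the toy sizes, and the toy state update are all assumptions standing in for the trained networks and the physics simulator, which the text does not detail.

```python
import numpy as np

CONTROL_HZ = 30                       # upper level rate (from the text)
MUSCLE_HZ = 1500                      # lower level rate (from the text)
SUBSTEPS = MUSCLE_HZ // CONTROL_HZ    # 50 muscle updates per control frame

N_JOINT_DOF = 4                       # toy sizes, assumed for illustration
N_MUSCLES = 6

rng = np.random.default_rng(0)
W_upper = rng.normal(scale=0.1, size=(N_JOINT_DOF, N_JOINT_DOF))
W_lower = rng.normal(scale=0.1, size=(N_MUSCLES, 2 * N_JOINT_DOF))


def upper_policy(joint_state):
    """Hypothetical stand-in for the upper level: joint state -> joint targets."""
    return np.tanh(W_upper @ joint_state)


def lower_policy(joint_state, joint_target):
    """Hypothetical stand-in for the lower level: activations in [0, 1] per muscle."""
    x = np.concatenate([joint_state, joint_target])
    return 1.0 / (1.0 + np.exp(-(W_lower @ x)))


def simulate(seconds, joint_state):
    """Run the two-rate loop and count how often each level is queried."""
    calls = {"upper": 0, "lower": 0}
    for _ in range(int(seconds * CONTROL_HZ)):
        target = upper_policy(joint_state)            # once per 30 Hz frame
        calls["upper"] += 1
        for _ in range(SUBSTEPS):
            activations = lower_policy(joint_state, target)   # 1500 Hz
            calls["lower"] += 1
            # Toy update, NOT muscle physics: drift toward the target,
            # scaled by the mean activation.
            joint_state = joint_state + (target - joint_state) * activations.mean() / MUSCLE_HZ
    return joint_state, calls


final_state, calls = simulate(1.0, np.ones(N_JOINT_DOF))
print(calls)
```

Splitting the loop this way lets the expensive high-rate part stay small and specialized while the low-rate part reasons about whole-body motion.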

Simulating the dynamics of muscle contraction requires greater accuracy than simulating the skeleton. Our hierarchical structure reconciles this mismatch in requirements.

What we have achieved

It is gratifying to see our algorithm work on a wide range of human movements. We still do not know how wide that range really is, and we are trying to find its limits. So far we have not reached them, because we are constrained by computing resources.

The new approach yields better results every time we invest more resources (mainly processor cores). A welcome property is that Deep Reinforcement Learning incurs its heavy computational cost only during the training phase. Once the high-dimensional control policy has been learned, simulation and control are fast. Musculoskeletal simulation will soon run in real time in interactive applications such as games.

We use the Hill muscle model, which is the de facto standard in biomechanics. Our algorithm is very flexible, so any dynamic muscle contraction model can be plugged in. Using a high-precision muscle model makes it possible to generate human movement under various conditions, including pathologies, prostheses, and so on.
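For readers unfamiliar with Hill-type models, the sketch below shows the common textbook structure: muscle force is the maximum isometric force times an activation-scaled active term plus a passive elastic term. The specific curve shapes and constants (`width`, `k`, `strain_at_f0`, `shape`, the lengthening cap) are illustrative assumptions and are not taken from the study.

```python
import math


def active_force_length(l_norm, width=0.45):
    """Bell-shaped curve peaking at the optimal fiber length (l_norm = 1)."""
    return math.exp(-((l_norm - 1.0) / width) ** 2)


def passive_force_length(l_norm, k=4.0, strain_at_f0=0.6):
    """Passive elastic force: zero up to optimal length, exponential beyond it."""
    if l_norm <= 1.0:
        return 0.0
    return (math.exp(k * (l_norm - 1.0) / strain_at_f0) - 1.0) / (math.exp(k) - 1.0)


def force_velocity(v_norm, shape=0.25):
    """Hill's hyperbola for shortening (v_norm < 0, with -1 = max shortening speed).

    Lengthening (v_norm > 0) uses a crude linear rise capped at 1.5 (assumed).
    """
    if v_norm <= -1.0:
        return 0.0
    if v_norm <= 0.0:
        return (1.0 + v_norm) / (1.0 - v_norm / shape)
    return min(1.0 + v_norm, 1.5)


def muscle_force(activation, l_norm, v_norm, f_max):
    """Hill-type force: f_max * (a * f_L * f_V + f_passive)."""
    active = activation * active_force_length(l_norm) * force_velocity(v_norm)
    return f_max * (active + passive_force_length(l_norm))


# Fully activated muscle at optimal length, held isometrically.
print(muscle_force(activation=1.0, l_norm=1.0, v_norm=0.0, f_max=1000.0))
```

Because the controller only sets `activation`, swapping in a different contraction model means replacing these curve functions while the rest of the pipeline stays the same.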

Rectus femoris muscle. 3D surface mesh (left). Approximation with waypoints (center). Waypoint coordinates approximated with LBS (linear blend skinning) as the knee bends (right).
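Linear blend skinning places a point by taking a weighted average of where each nearby bone would carry it. The following 2D sketch, with a fixed thigh and a shank rotating about the knee, is an assumed toy setup to show the idea; the weights, coordinates, and function names are hypothetical and not from the project.

```python
import numpy as np


def rotation_about(pivot, angle):
    """3x3 homogeneous 2D transform rotating by `angle` radians around `pivot`."""
    c, s = np.cos(angle), np.sin(angle)
    R = np.array([[c, -s], [s, c]])
    T = np.eye(3)
    T[:2, :2] = R
    T[:2, 2] = pivot - R @ pivot
    return T


def lbs(point, weights, transforms):
    """Linear blend skinning: weighted sum of the point as moved by each bone.

    Weights are expected to sum to 1.
    """
    p = np.append(point, 1.0)  # homogeneous coordinates
    blended = sum(w * (T @ p) for w, T in zip(weights, transforms))
    return blended[:2]


knee = np.zeros(2)
thigh = np.eye(3)                         # thigh stays fixed
shank = rotation_about(knee, np.pi / 2)   # knee bends by 90 degrees

# A waypoint just below the knee, influenced equally by both bones.
waypoint = np.array([0.0, -0.1])
print(lbs(waypoint, [0.5, 0.5], [thigh, shank]))
```

Blending keeps the waypoint between the two rigid positions, so the approximated muscle path bends smoothly around the joint instead of jumping with one bone.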

Using Deep Reinforcement Learning (DRL)

We share the same fundamental idea with DeepMind's locomotion research, which is based on a stick-and-motor model. Surprisingly, a standard DRL algorithm works well with the stick-and-motor model but does not work well with muscle-driven biomechanical models.

At the most recent NeurIPS conference, in 2018, an AI for Prosthetics challenge was held. The competition model has only just over 20 muscles, yet even the winning result does not look very good.

This example shows how difficult it is to train muscle-driven models. Our hierarchical model is a breakthrough that makes it possible to apply DRL to a biomechanical human model with a large number of muscles.

Project in PDF.

Project on GitHub.

Research by: Jehee Lee, Seunghwan Lee, Kyoungmin Lee and Moonseok Park.

Source: https://habr.com/ru/post/459208/
