Methods for Point Segmentation in Point Clouds

Introduction

Some time ago I needed to segment points in point clouds (data obtained from lidars).

Sample data and the problem to be solved:

My search for a general review of existing methods was unsuccessful, so I had to collect the information myself. Here is the result: a collection of the most important and interesting (in my opinion) papers of the past few years. All the models considered solve the point cloud segmentation problem, i.e. they determine which class each point belongs to.

This article will be useful to those who are well acquainted with neural networks and want to understand how to apply them to unstructured data (for example, graphs).

Existing datasets

The following datasets on this topic are publicly available:

- Stanford Large-Scale 3D Indoor Spaces Dataset (S3DIS) - annotated indoor scenes

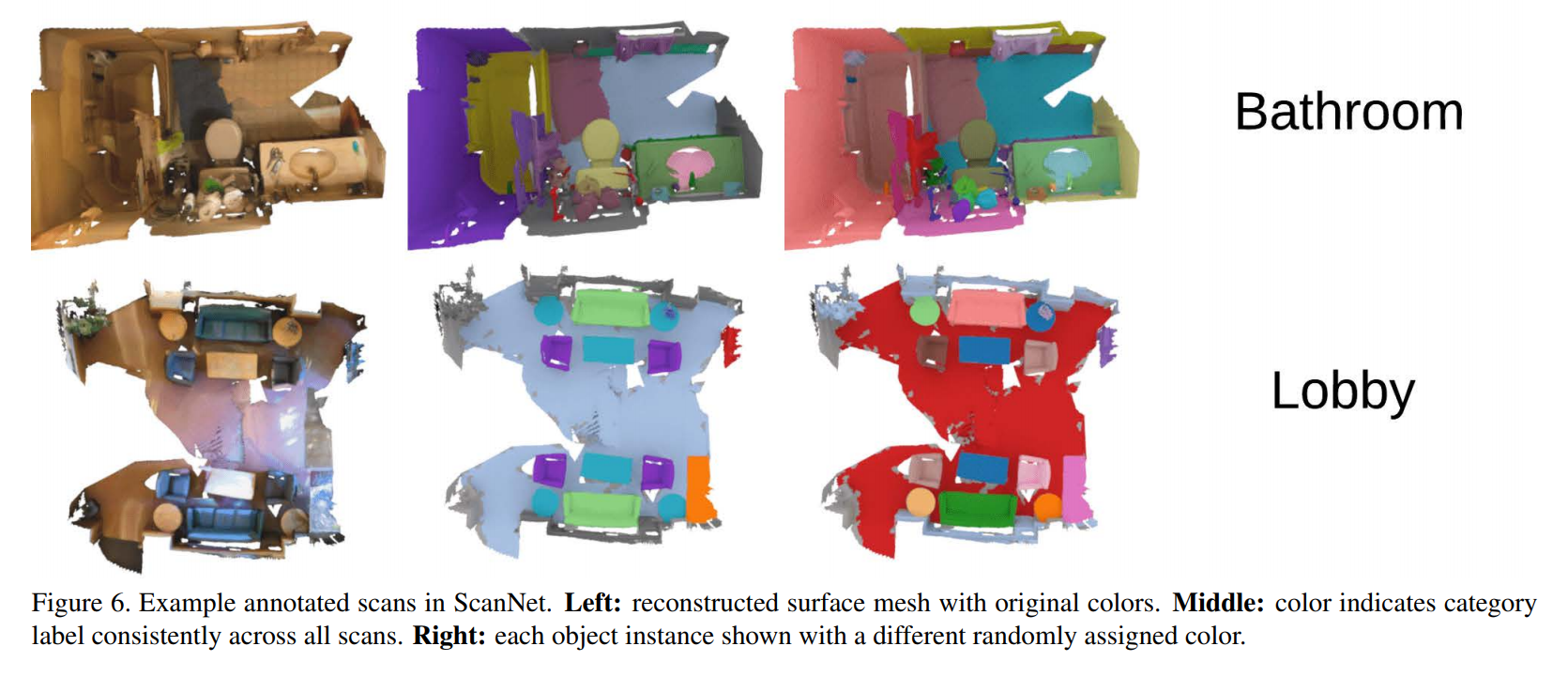

- ScanNet - annotated indoor scenes

- NYUV2 - annotated indoor scenes

- ShapeNet - objects of different shapes

- ModelNet40 - objects of different shapes

- SHREC15 - different poses of animals and humans

Features of working with Point Clouds

Neural networks came to this area only recently, and standard architectures such as fully connected and convolutional networks cannot be applied to this task directly. Why?

Because the order of points does not matter here. An object is a set of points, and the order in which they are listed is irrelevant. In an image every pixel has its own fixed place, whereas the points of a cloud can be freely shuffled and the object stays the same. The output of standard neural networks, on the contrary, depends on the order of the input data: if you shuffle the pixels of an image, you get a different object.
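To make the order argument concrete, here is a small NumPy sketch with random, untrained weights (the sizes and names are illustrative assumptions). A dense layer on the flattened cloud ties each weight to a fixed input position, so shuffling the points generally changes its output. Applying one shared layer to every point and then taking the maximum over points, the trick PointNet relies on, gives exactly the same result for any order.

```python
import numpy as np

rng = np.random.default_rng(0)

# A toy "point cloud": 5 points with (x, y, z) coordinates.
points = rng.normal(size=(5, 3))
# The same object with its points listed in a different order.
shuffled = points[[4, 2, 0, 3, 1]]

w_shared = rng.normal(size=(3, 8))    # one layer applied to every point
w_dense = rng.normal(size=(15, 8))    # a dense layer on the flattened cloud

def flatten_then_dense(cloud):
    # Fully connected approach: each weight is tied to a fixed input position,
    # so the output depends on the order in which points are listed.
    return np.maximum(cloud.reshape(-1) @ w_dense, 0.0)

def shared_then_max(cloud):
    # PointNet-style approach: the same layer for every point, then the max
    # over points, a symmetric function that ignores their order.
    per_point = np.maximum(cloud @ w_shared, 0.0)
    return per_point.max(axis=0)

print(np.allclose(flatten_then_dense(points), flatten_then_dense(shuffled)))  # order-dependent
print(np.allclose(shared_then_max(points), shared_then_max(shuffled)))        # True
```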

Now let's see how neural networks are adapted to solve this problem.

Most Important Articles

There are not many basic architectures in this area. If you are going to work with graphs or unstructured data, you need to be aware of the following models:

- PointNet

- PointNet++

- DGCNN

Let's consider them in more detail.

- PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation

Pioneers in working with unstructured data.

- how they solve it: the paper describes two models, one for point segmentation and one for object classification. The common part consists of the following blocks:

- a network that determines a transformation (a change of the coordinate system), which is then applied to all points

- a transformation applied to each point separately (an ordinary perceptron with the same weights for every point)

- max pooling, which combines information from different points and creates a global feature vector for the entire object.

- After that, the two models diverge:

- classification model: the global feature vector is fed into a fully connected layer that determines the class of the entire point cloud

- segmentation model: the global feature vector, together with the features computed for each point, is fed into a fully connected layer that determines the class of each point.

- code

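The blocks listed above can be sketched as a forward pass in NumPy. This is a minimal illustration with untrained random weights, not the authors' code: the layer sizes (32, 64), the helper names `shared_mlp` and `input_transform`, and the tiny network standing in for the transformation predictor are all my assumptions. The real PointNet also has a second transform in feature space, batch normalization and deeper layers, which are omitted here.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def shared_mlp(x, weights):
    # The same weight matrices are applied to every point (row) independently:
    # the "ordinary perceptron applied to each point" block.
    for w in weights:
        x = relu(x @ w)
    return x

def input_transform(points, tnet_weights, tnet_out):
    # Hypothetical stand-in for the transformation network: a tiny PointNet that
    # predicts a 3x3 matrix from the whole cloud. Adding the identity makes it
    # start close to "no transformation".
    global_feat = shared_mlp(points, tnet_weights).max(axis=0)
    matrix = (global_feat @ tnet_out).reshape(3, 3) + np.eye(3)
    return points @ matrix

def pointnet_segmentation(points, params):
    x = input_transform(points, params["tnet"], params["tnet_out"])
    point_feat = shared_mlp(x, params["mlp"])            # (N, 64) per-point features
    global_feat = point_feat.max(axis=0)                 # (64,) max pooling over points
    # Segmentation head: each point sees its own features plus the global vector.
    combined = np.hstack([point_feat, np.broadcast_to(global_feat, point_feat.shape)])
    logits = combined @ params["seg_head"]               # (N, num_classes)
    return logits.argmax(axis=1)                         # one class per point

def pointnet_classification(points, params):
    x = input_transform(points, params["tnet"], params["tnet_out"])
    global_feat = shared_mlp(x, params["mlp"]).max(axis=0)
    return int((global_feat @ params["cls_head"]).argmax())  # one class per cloud

rng = np.random.default_rng(0)
num_classes = 4
params = {
    "tnet": [rng.normal(size=(3, 32))],
    "tnet_out": rng.normal(size=(32, 9)) * 0.01,
    "mlp": [rng.normal(size=(3, 32)), rng.normal(size=(32, 64))],
    "seg_head": rng.normal(size=(128, num_classes)),
    "cls_head": rng.normal(size=(64, num_classes)),
}
cloud = rng.normal(size=(100, 3))
print(pointnet_segmentation(cloud, params).shape)  # (100,)
print(pointnet_classification(cloud, params))      # a single class index
```

Because the only operation that mixes points is the max, shuffling the input cloud simply shuffles the per-point labels in the same way.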

- PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space

By the same Stanford team that described PointNet.

- how they solve it: PointNet is applied recursively to smaller subsets of points, by analogy with convolutional networks. That is, the space is divided into cubes, PointNet is applied to each of them, and then larger regions are formed from these cubes. This lets the network capture local features that the previous version was losing.

- code
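In the PointNet++ paper the "cubes" are, more precisely, local regions: region centers are chosen by farthest point sampling, each center collects its neighbors with a ball query (all points within a radius), and a small PointNet is run on each region in coordinates relative to its center. Below is a NumPy sketch of one such "set abstraction" level, assuming untrained random weights and illustrative values for the radius, region count and region size. Padding undersized regions by repeating an index is one simple choice, not necessarily the paper's.

```python
import numpy as np

def farthest_point_sampling(points, m):
    # Greedily pick m region centers, each as far as possible from those already chosen.
    chosen = [0]                                       # start from an arbitrary point
    dist = np.linalg.norm(points - points[0], axis=1)
    for _ in range(1, m):
        idx = int(dist.argmax())
        chosen.append(idx)
        dist = np.minimum(dist, np.linalg.norm(points - points[idx], axis=1))
    return np.array(chosen)

def ball_query(points, centers, radius, k):
    # For each center, take up to k points lying within `radius` of it.
    groups = []
    for c in centers:
        inside = np.flatnonzero(np.linalg.norm(points - c, axis=1) <= radius)[:k]
        # Pad small groups by repeating their last index so all have size k.
        groups.append(np.pad(inside, (0, k - len(inside)), mode="edge"))
    return np.stack(groups)                            # (m, k)

def set_abstraction(points, m, radius, k, w):
    centers = points[farthest_point_sampling(points, m)]
    groups = ball_query(points, centers, radius, k)
    local = points[groups] - centers[:, None, :]       # (m, k, 3) coordinates relative to the center
    # Mini-PointNet on each region: shared layer, then max over the region's points.
    features = np.maximum(local @ w, 0.0).max(axis=1)  # (m, C)
    return centers, features

rng = np.random.default_rng(0)
cloud = rng.uniform(size=(500, 3))
centers, feats = set_abstraction(cloud, m=32, radius=0.2, k=16, w=rng.normal(size=(3, 16)))
print(centers.shape, feats.shape)  # (32, 3) (32, 16)
```

A deeper level would call the same procedure again on `centers`, carrying `feats` along as extra per-point input, which is how the hierarchy grows from small regions to larger ones.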

Dynamic Graph CNN for Learning on Point Clouds

how they solve it: a graph is built on the existing points: the vertices are the points, and edges exist only between the current point and its k nearest points. Next, EdgeConv is defined, a special convolution over the edges going out of the current point. The paper proposes several variants of this convolution. The one finally used works as follows: for each point x_i with neighbors x_j, M features are computed as

$$x'_{im} = \max_{j:(i,j)\in\mathcal{E}} \mathrm{ReLU}\left(\theta_m \cdot (x_j - x_i) + \phi_m \cdot x_i\right)$$

The resulting vector is stored as the new embedding of the point. Here both local (x_j - x_i) and global (x_i) coordinates are used as inputs to the convolution.

Once convolution is defined on the graph, a convolutional network is built from it. As in PointNet, the network also computes a transformation and applies it to each point.
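A NumPy sketch of the EdgeConv formula above, assuming random untrained weight matrices `theta` and `phi` of shape (F, M) and k = 20 neighbors (both illustrative choices). Stacking two layers also shows the "dynamic" part of DGCNN: the k-nearest-neighbor graph of the second layer is rebuilt from the learned features rather than from the original xyz coordinates.

```python
import numpy as np

def knn(x, k):
    # Pairwise squared distances between rows of x; a point is not its own neighbor.
    d = ((x[:, None, :] - x[None, :, :]) ** 2).sum(-1)
    np.fill_diagonal(d, np.inf)
    return np.argsort(d, axis=1)[:, :k]                        # (N, k)

def edge_conv(x, k, theta, phi):
    # x: (N, F) point features; theta, phi: (F, M) weight matrices.
    nbrs = knn(x, k)
    x_i = x[:, None, :]                                        # (N, 1, F)
    x_j = x[nbrs]                                              # (N, k, F)
    # ReLU(theta_m . (x_j - x_i) + phi_m . x_i) for every edge and output channel m.
    edge = np.maximum((x_j - x_i) @ theta + x_i @ phi, 0.0)    # (N, k, M)
    return edge.max(axis=1)                                    # max over the neighbors j

rng = np.random.default_rng(0)
pts = rng.normal(size=(200, 3))
theta1, phi1 = rng.normal(size=(3, 64)), rng.normal(size=(3, 64))
h1 = edge_conv(pts, 20, theta1, phi1)
# Second layer: the k-NN graph is rebuilt from the 64-d features of h1.
theta2, phi2 = rng.normal(size=(64, 64)), rng.normal(size=(64, 64))
h2 = edge_conv(h1, 20, theta2, phi2)
print(h1.shape, h2.shape)  # (200, 64) (200, 64)
```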

This paper also gives an excellent overview of other approaches.

Articles based on PointNet and PointNet++:

These papers mostly differ in the loss function, or in the depth and complexity of their blocks.

PointWise: An Unsupervised Point-wise Feature Learning Network

Key feature: unsupervised learning.

- how they solve it: an embedding vector is learned for each point, and the points are then segmented based on these embeddings.

The main premise of the paper: similar parts should have similar embeddings (for example, two different legs of a chair), regardless of how far apart they are. PointNet is used as the base model. The main innovation is the loss function, which consists of two parts: a reconstruction loss and a smoothness loss.

The reconstruction loss uses information about the context of a point. Its task is to make the embeddings of points with the same geometric context similar. To compute it, new points are generated near the selected point from that point's embedding vector. In other words, the descriptor of a point should contain information about the shape of the object around it. The loss then measures how far the generated points fall from the real shape of the object.
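A rough NumPy sketch of this idea, under loudly stated assumptions: the "generator" is a hypothetical linear `decoder` that maps a point's embedding to offsets of a few new points around it, and "falling out of the shape" is approximated by the distance from each generated point to the closest real point. The paper's actual generator and distance may differ; this only illustrates the principle.

```python
import numpy as np

def reconstruction_loss(points, embeddings, decoder, num_generated):
    # points: (N, 3); embeddings: (N, D); decoder: (D, num_generated * 3).
    # Hypothetical decoder: one linear map from a point's embedding to the
    # offsets of `num_generated` new points placed around that point.
    n = points.shape[0]
    offsets = (embeddings @ decoder).reshape(n, num_generated, 3)
    generated = (points[:, None, :] + offsets).reshape(-1, 3)   # (N * G, 3)
    # How far each generated point falls from the real shape, approximated
    # by the distance to the closest real point of the cloud.
    d = np.linalg.norm(generated[:, None, :] - points[None, :, :], axis=-1)
    return float(d.min(axis=1).mean())

rng = np.random.default_rng(0)
pts = rng.normal(size=(50, 3))
emb = rng.normal(size=(50, 8))
decoder = rng.normal(size=(8, 4 * 3)) * 0.1
print(reconstruction_loss(pts, emb, decoder, num_generated=4))
```

With an all-zero decoder every generated point coincides with its source point and the loss is exactly 0; any points generated off the surface increase it.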

The smoothness loss makes embeddings similar for points lying close to each other and dissimilar for distant points. The most elegant part here is how proximity is measured: not simply as the norm between two points in Euclidean space, but as the distance traveled through the points of the object. For each point, one point is selected from among its k nearest points and one from among its k farthest.

The embedding of the current point should be closer to the embedding of the near point than to that of the far point by at least a certain margin.
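Below is a sketch of this loss in NumPy. Two parts are my assumptions, not details from the paper: "distance through the points of the object" is approximated by shortest paths in a k-nearest-neighbor graph (computed with a simple Floyd-Warshall, fine for small clouds), and the margin condition is written as a standard triplet hinge. The example points lie on a half circle, where the path along the points between the two ends is about pi while the straight line is 2.

```python
import numpy as np

def geodesic_distances(points, k):
    # k-NN graph with Euclidean edge lengths, then all-pairs shortest paths,
    # so that distances follow the points of the object instead of straight lines.
    n = points.shape[0]
    eucl = np.linalg.norm(points[:, None] - points[None], axis=-1)
    graph = np.full((n, n), np.inf)
    nbrs = np.argsort(eucl, axis=1)[:, 1:k + 1]      # column 0 is the point itself
    rows = np.repeat(np.arange(n), k)
    graph[rows, nbrs.ravel()] = eucl[rows, nbrs.ravel()]
    graph = np.minimum(graph, graph.T)               # make the graph undirected
    np.fill_diagonal(graph, 0.0)
    for m in range(n):                               # Floyd-Warshall relaxation
        graph = np.minimum(graph, graph[:, m:m + 1] + graph[m:m + 1, :])
    return graph

def smoothness_loss(embeddings, geo, k, margin, rng):
    # For each anchor: a positive among its k geodesically nearest points and a
    # negative among its k farthest; the positive must be closer by `margin`.
    order = np.argsort(geo, axis=1)
    losses = []
    for i in range(embeddings.shape[0]):
        pos = rng.choice(order[i, 1:k + 1])
        neg = rng.choice(order[i, -k:])
        d_pos = np.linalg.norm(embeddings[i] - embeddings[pos])
        d_neg = np.linalg.norm(embeddings[i] - embeddings[neg])
        losses.append(max(0.0, d_pos - d_neg + margin))
    return float(np.mean(losses))

t = np.linspace(0, np.pi, 60)
cloud = np.stack([np.cos(t), np.sin(t), np.zeros_like(t)], axis=1)   # a half circle
geo = geodesic_distances(cloud, k=4)
print(round(float(geo[0, -1]), 2), round(float(np.linalg.norm(cloud[0] - cloud[-1])), 2))  # 3.14 2.0

rng = np.random.default_rng(0)
emb = rng.normal(size=(60, 4))
print(smoothness_loss(emb, geo, k=5, margin=0.5, rng=rng))
```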


SGPN: Similarity Group Proposal Network for 3D Point Cloud Instance Segmentation

- how they solve it: as in PointWise, the most interesting part here is the loss function. PointNet++ is used as the base: first, a feature vector is computed for each point, as in PointNet++.

Then, from these features, three matrices are computed: similarity, confidence and segmentation.

The training loss is the sum of the three losses computed on the corresponding matrices: L = L1 + L2 + L3

Let N be the number of points.

The similarity matrix is square, of size N × N. The element at the intersection of row i and column j indicates whether points i and j belong to the same object. Points belonging to the same object should have similar feature vectors. In the ground-truth matrix each element takes one of three values: points i and j belong to the same object; they belong to the same class of objects but to different objects (both are chairs, but different chairs); or they belong to objects of different classes.
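One way to turn these three cases into a loss is sketched below in NumPy: the predicted matrix holds distances between feature vectors, same-object pairs are pulled together, and the two "different" cases are pushed apart with hinge margins, the different-class pairs further than the same-class ones. The exact form, the margins `k1`, `k2` and the weight `alpha` are assumptions for illustration; check the SGPN paper for the values it actually uses.

```python
import numpy as np

SAME_OBJECT, SAME_CLASS, DIFFERENT_CLASS = 0, 1, 2

def similarity_targets(instance, semantic):
    # Ground-truth N x N matrix with one of the three relations for each pair.
    same_obj = instance[:, None] == instance[None, :]
    same_cls = semantic[:, None] == semantic[None, :]
    return np.where(same_obj, SAME_OBJECT,
                    np.where(same_cls, SAME_CLASS, DIFFERENT_CLASS))

def similarity_loss(features, targets, k1=1.0, k2=2.0, alpha=2.0):
    # Predicted matrix: pairwise distances between point feature vectors.
    s = np.linalg.norm(features[:, None, :] - features[None, :, :], axis=-1)
    loss = np.where(
        targets == SAME_OBJECT, s,                          # pull together
        np.where(targets == SAME_CLASS,
                 alpha * np.maximum(0.0, k1 - s),           # push apart by k1
                 np.maximum(0.0, k2 - s)))                  # push apart by k2
    return float(loss.mean())

instance = np.array([0, 0, 1, 2])        # two chairs and a table, say
semantic = np.array([0, 0, 0, 1])        # class 0 = chair, class 1 = table
targets = similarity_targets(instance, semantic)
good = np.array([[0.0, 0.0], [0.0, 0.0], [5.0, 0.0], [0.0, 5.0]])
print(similarity_loss(good, targets))    # 0.0
```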

The confidence matrix is a vector of length N. For each point, the intersection over union (IoU) is computed between the set of points that our algorithm assigns to the same object as this point and the set of points that actually belong to the same object as this point. The loss is simply the L2 norm of the difference between the true and the predicted vectors. In other words, the network learns to predict how confident it is in the group of points proposed for each point.
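The targets for this vector can be computed as in the NumPy sketch below. As an assumption for the example, the group proposed for point i is taken to be all points whose entry in row i of the predicted similarity (distance) matrix is below a `threshold`; the loss is the L2 norm described above.

```python
import numpy as np

def confidence_targets(similarity, instance, threshold):
    # Row i of `proposals`: the group our model assigns to point i.
    proposals = similarity < threshold
    # Row i of `truth`: the points that really share point i's object.
    truth = instance[:, None] == instance[None, :]
    inter = (proposals & truth).sum(axis=1)
    union = (proposals | truth).sum(axis=1)   # never 0: point i is always in its own object
    return inter / union                       # IoU for every point, shape (N,)

def confidence_loss(predicted, targets):
    # L2 norm between predicted confidences and the IoU targets.
    return float(np.linalg.norm(predicted - targets))

inst = np.array([0, 0, 1])
f = np.array([0.0, 0.0, 0.5])                  # point 2 is too close to the others
sim = np.abs(f[:, None] - f[None, :])
print(confidence_targets(sim, inst, threshold=1.0))  # approximately [0.667 0.667 0.333]
```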

The segmentation matrix has size N × (number of classes). Its loss is the usual cross-entropy for multi-class classification.

- code


- Know What Your Neighbors Do: 3D Semantic Segmentation of Point Clouds

- how they solve it: first, features are computed in a longer and more complicated way than in PointNet, with a number of residual connections and sums, but overall it is the same idea. A small difference: they compute features for each point in both global and local coordinates.

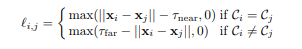

The main difference here is again the loss function. Instead of the standard cross-entropy, it is the sum of two losses, both defined in the learned feature space:

- pairwise distance loss: points from the same object must be closer to each other than τ_near, and points from different objects must be farther apart than τ_far.

- centroid loss: points from the same object should be close to each other, i.e. to the centroid of their object.

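The two losses can be sketched in NumPy as hinge terms on feature distances. The averaging, the plain (non-squared) hinges and the threshold values are my assumptions for illustration; the paper's exact formulation may differ.

```python
import numpy as np

def pairwise_distance_loss(features, instance, tau_near, tau_far):
    d = np.linalg.norm(features[:, None] - features[None], axis=-1)
    same = instance[:, None] == instance[None, :]
    pull = np.maximum(0.0, d - tau_near)[same]     # same object: closer than tau_near
    push = np.maximum(0.0, tau_far - d)[~same]     # different objects: farther than tau_far
    return float((pull.sum() + push.sum()) / d.size)

def centroid_loss(features, instance):
    # Distance of every point's features to the mean features of its object.
    loss = 0.0
    for obj in np.unique(instance):
        members = features[instance == obj]
        loss += np.linalg.norm(members - members.mean(axis=0), axis=1).sum()
    return float(loss / len(features))

feats = np.array([[0.0, 0.0], [0.1, 0.0], [3.0, 3.0], [3.0, 3.1]])
inst = np.array([0, 0, 1, 1])
total = pairwise_distance_loss(feats, inst, tau_near=0.5, tau_far=2.0) + centroid_loss(feats, inst)
print(total)
```

Here the two objects are already well separated, so only the small spread inside each object contributes (through the centroid loss).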

DGCNN based articles:

DGCNN was published only recently (2018), so there are few papers based on this architecture yet. I want to draw your attention to one of them:

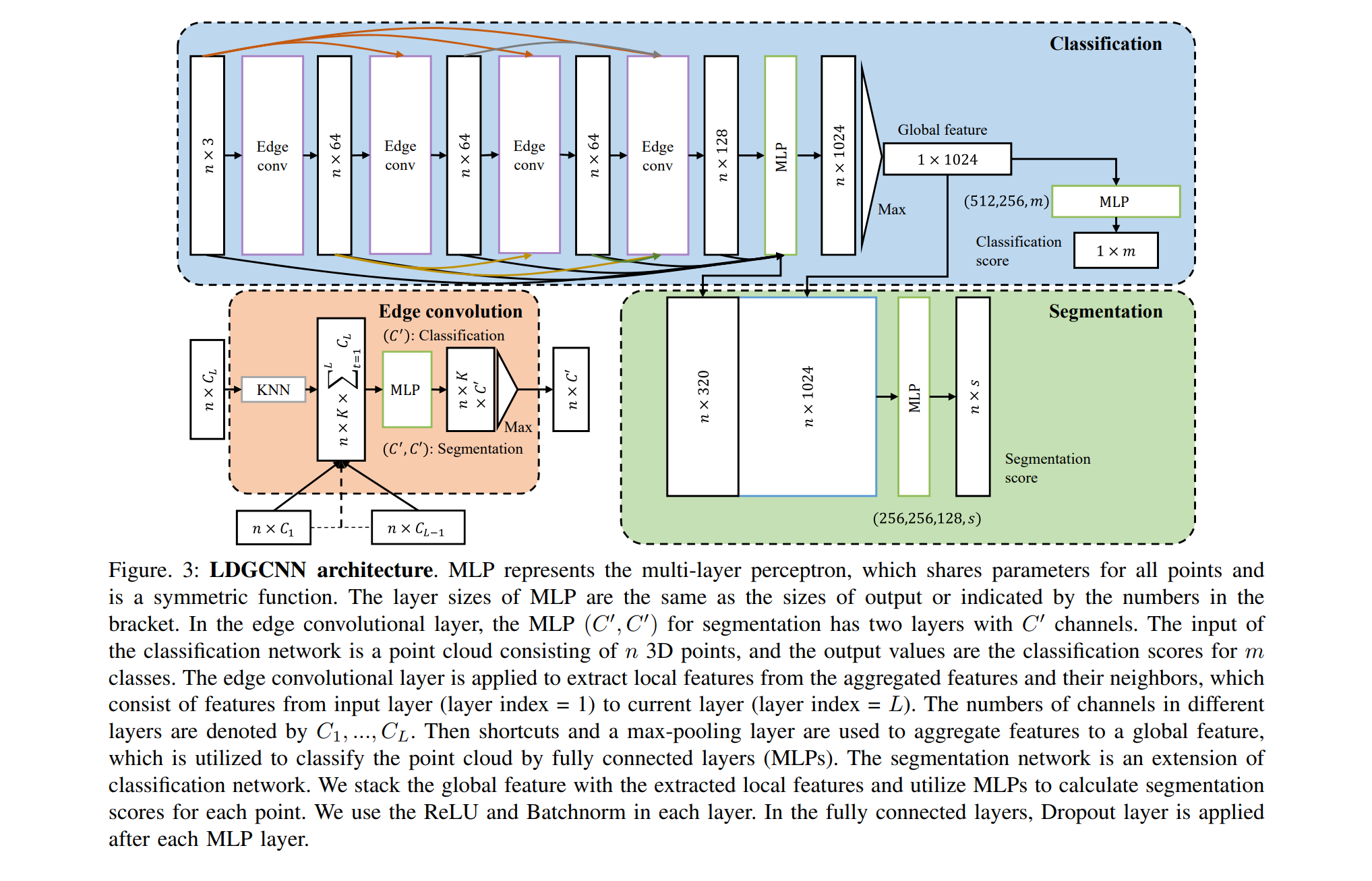

- Linked Dynamic Graph CNN: Learning on Point Cloud via Linking Hierarchical Features

- how they solve it: they made the original architecture more complex by adding residual connections to it.

Conclusion

This was a brief overview of modern methods for solving classification and segmentation problems on point clouds. There are two main models (PointNet++ and DGCNN) whose modifications are now used to solve these problems. Most often, the modifications change the loss function and make the architectures more complex by adding layers and connections.


Source: https://habr.com/ru/post/459088/
