Attention for dummies and implementation in Keras

About articles on artificial intelligence in Russian

Although the Attention mechanism is well described in English-language literature, I have yet to find a decent description of it in Russian. There are plenty of Russian-language articles on artificial intelligence (AI), but the ones I found cover only the simplest models, such as convolutional and generative networks. On the latest advances in AI, there are very few articles in Russian.

The lack of Russian-language articles on recent developments became a problem for me when I was getting into the field and studying its current state. I know English well and read articles on AI in English. However, when a new AI concept or principle comes out, understanding it in a non-native language can be slow and painful. Even if you know English, grasping a complex new subject in a non-native language takes much more time and effort. After reading a description, you ask yourself: what percentage did I actually understand? Had there been an article in Russian, I would have understood 100% after the first reading. That is what happened with generative networks, for which there is a magnificent series of articles: after reading them, everything became clear. But in the world of neural networks many approaches are described only in English, and you have to spend days working through them.

I plan to write articles in my native language from time to time, bringing this area of knowledge into our language. As you know, the best way to understand a topic is to explain it to someone. So who better than me to start a series of articles on the most modern, complex and advanced AI architectures? By the end of the article I will understand the approach 100% myself, and it will be useful to anyone who reads it to improve their own understanding (by the way, I love Gesser, but **Blanche de Bruxelles** is better).


When you get to grips with a subject, you can distinguish 4 levels of understanding:

- you understand the principle and the inputs and outputs of the algorithm/layer

- you understand how it is assembled and, in general terms, how it works

- you understand all of the above, as well as the structure of each network layer (for example, in the VAE model you understood the principle and also the essence of the reparameterization trick)

- you understand everything, including each layer, and how it all learns, so you can select hyperparameters for your own task instead of copying ready-made solutions.

With new architectures, moving from level 1 to level 4 is often hard: the authors tend to describe important details superficially (did they understand them themselves?). Or your brain lacks the necessary mathematical constructs, so even after reading the description it does not get decoded and turned into skills. This happens if, in your student years, you slept through the very calculus lecture where the necessary mathematical apparatus was taught, after an all-night student party. And this is exactly where you need articles in your own language that reveal the nuances and subtleties of each operation.

Attention concept and application

Above is a scheme of the levels of understanding. To take Attention apart, we will start with the first level. Before describing the inputs and outputs, let us analyze the essence: which basic concepts, understandable even to a child, this concept rests on. In this article we will use the English term Attention, because that is also the name under which it is called in the Keras library (strictly speaking, it is not implemented in Keras itself and an additional module is required, but more on that below). To read further, you should be familiar with Python and the Keras library, because source code is provided.

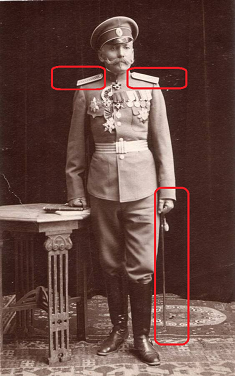

The word Attention means exactly what it says, and the term captures the essence of the approach well. If you are a motorist and a photo shows a traffic police general, you intuitively treat him as important, regardless of the context of the photo. You will probably take a closer look at the general: you strain your eyes and study his epaulets to see exactly how many stars he has. If the general is not very high-ranking, you ignore him; otherwise, you take him into account as a key factor in your decisions. This is how our brain works. In Russian culture, generations have trained us to pay attention to high ranks, and our brain automatically gives such objects high priority.

Attention is a way to tell the network what it should pay more attention to, that is, to report the probability of a particular outcome depending on the state of the neurons and the input data. The Attention layer implemented in Keras learns from the training set which factors deserve attention, namely those that reduce the network's error. Important factors are identified by backpropagation, just as it is done for convolutional networks.

During training, Attention shows its probabilistic nature. The mechanism itself forms a matrix of importance weights. If we did not train Attention, we could set the importance empirically, for example (a general is more important than a warrant officer). But when we train the network on data, importance becomes a function of the probability of a particular outcome given the data fed into the network. For example, if we lived in Tsarist Russia and met a general, the probability of being made to run the gauntlet would be high. One could establish this through several face-to-face meetings, collecting statistics. After that, our brain assigns an appropriate weight to the fact of meeting such a person and puts markers on epaulets and stripes. Note that the assigned marker is not a probability: meeting a general today would have completely different consequences for you than back then, and besides, the weight can be greater than one. But a weight can be converted into a probability by normalizing it.
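
To make "a weight can be converted into a probability" concrete, here is a minimal sketch of two common ways to do it. The numbers are hypothetical importance weights (general, warrant officer, passer-by) chosen only for illustration; note that they are larger than one. Plain division by the sum only works for non-negative weights, while softmax (exponentiate, then divide by the sum) accepts any real scores, which is why attention layers usually rely on it.

```python
import math


def normalize(weights):
    """Divide each non-negative weight by the total so the results sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]


def softmax(scores):
    """Exponentiate arbitrary real scores, then normalize them to sum to 1."""
    m = max(scores)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw importance weights: general, warrant officer, passer-by
raw = [6.0, 1.5, 0.5]
probs_by_sum = normalize(raw)   # simple proportional normalization
probs_by_softmax = softmax(raw) # sharper: the largest weight dominates more
```

Both variants preserve the ranking of the weights; softmax additionally exaggerates the gap between the most important item and the rest.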

The probabilistic nature of the Attention mechanism during training shows up in machine translation. For example, suppose we tell the network that, when translating from Russian into English, the Russian word «любовь» is translated as love in 90% of cases, as sex in 9% of cases, and as something else in 1% of cases. The network then keeps several candidate translations in view at once, which improves the quality of training. When translating, we tell the network: "When translating the word «любовь», pay special attention to the English word love, and also check whether it could be sex."

The Attention approach is used for text as well as for audio and time series. Recurrent neural networks (RNN, LSTM, GRU) are widely used for text processing. Attention can either complement them or replace them, moving the network to simpler and faster architectures.

One of the best-known applications of Attention is to abandon the recurrent network altogether and switch to a fully connected model. Recurrent networks have a number of drawbacks: they are hard to parallelize on a GPU, because each time step depends on the result of the previous one, and they overfit quickly. With the Attention mechanism, we can build a network that learns sequences on top of a fully connected architecture, train it efficiently on a GPU, and use dropout.

Attention is also widely used to improve the performance of recurrent networks, for example in machine translation. This applies to the encoder-decoder approach, which is quite common in modern AI (for example, in variational autoencoders): adding an Attention layer between the encoder and the decoder noticeably improves the network's results.
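
The following sketch shows what an Attention layer between an encoder and a decoder computes for a single decoder step: a score for every encoder output, a softmax over those scores, and a context vector that is the weighted sum of the encoder outputs. As a simplifying assumption, the score is a plain dot product between the decoder state and each encoder output; real layers often use a learned score function instead (for example, additive Bahdanau-style scoring). All numbers are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)  # for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def attend(decoder_state, encoder_outputs):
    """Return (weights, context) for one decoder step.

    Scores are dot products (an assumption for illustration)."""
    scores = [dot(decoder_state, h) for h in encoder_outputs]
    weights = softmax(scores)  # one probability per source time step
    dim = len(encoder_outputs[0])
    # Context vector: attention-weighted sum of the encoder outputs
    context = [sum(w * h[i] for w, h in zip(weights, encoder_outputs))
               for i in range(dim)]
    return weights, context


# Hypothetical encoder outputs: 3 source time steps with 2 features each
encoder_outputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [2.0, 0.0]
weights, context = attend(decoder_state, encoder_outputs)
```

Here the first and third source steps align with the decoder state, so they receive equal and larger weights, and the context vector is built mostly from them. Repeating this for every decoder step yields the attention matrix that libraries can visualize.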

In this article I do not present specific network architectures that use Attention; that will be the subject of a separate piece. Listing all the possible uses of Attention also deserves an article of its own.

Implementing Attention in Keras out of the box

When you are getting to grips with an approach, it is very useful to learn its basic principle. But full understanding often comes only after looking at the technical implementation: you see the data flows that make up the operation, and it becomes clear what exactly is being computed. But first you need to get it running and write an "Attention hello world".

At the time of writing, the Attention mechanism is not implemented in Keras itself (newer TensorFlow releases do ship a built-in `tf.keras.layers.Attention` layer). But there are already third-party implementations, for example attention-keras, which can be installed from GitHub. With it, your code becomes extremely simple:

```python
# encoder_outputs / decoder_outputs: the full output sequences of the encoder
# and decoder RNNs (typically with return_sequences=True), defined elsewhere
from attention_keras.layers.attention import AttentionLayer

attn_layer = AttentionLayer(name='attention_layer')
attn_out, attn_states = attn_layer([encoder_outputs, decoder_outputs])
```

This implementation supports visualization of the Attention weights. After training Attention, you can obtain a matrix that shows what, in the network's opinion, is especially important; it looks something like this (picture from the attention-keras library's GitHub page).

In principle, you do not need anything else: include this code in your network as one of its layers and enjoy training your network. Any network or algorithm is first designed at the conceptual level (as is a database, by the way), after which the design is refined into logical and physical representations before implementation. For neural networks, this design method has not been developed yet (oh yes, that will be the topic of my next article). You don't understand how convolutional layers work inside? The principle is described, and you use them anyway.

Low-level implementation of Attention in Keras

To finally understand the topic, below we analyze in detail how Attention is implemented under the hood. The concept is fine, but how exactly does it work, and why is the result exactly as described?

The simplest implementation of the Attention mechanism in Keras takes only 3 lines:

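```python
from keras.layers import Input, Dense, Multiply

input_dims = 32  # number of input features (example value)
inputs = Input(shape=(input_dims,))
# One softmax weight per input feature
attention_probs = Dense(input_dims, activation='softmax', name='attention_probs')(inputs)
# Element-wise product: re-weight each input feature by its attention probability
attention_mul = Multiply(name='attention_mul')([inputs, attention_probs])
```

The first line declares an Input layer, followed by a fully connected layer with a softmax activation and as many neurons as there are elements in the input. The third line multiplies the output of the fully connected layer element-wise by the input data. (The original Keras 1 code used `merge(..., mode='mul')`; that function was deprecated in Keras 2 and later removed, so the `Multiply` layer is used instead.)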
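
To see what these three lines compute for one input vector, here is a pure-Python sketch of the same forward pass: a Dense layer (`x @ kernel + bias`), a softmax, and an element-wise product. The kernel and bias values are hypothetical and hand-picked; in the real model they are learned by backpropagation.

```python
import math


def softmax(scores):
    m = max(scores)  # for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dense(x, kernel, bias):
    """y_j = sum_i x_i * kernel[i][j] + bias[j], the formula of a Dense layer."""
    return [sum(x[i] * kernel[i][j] for i in range(len(x))) + bias[j]
            for j in range(len(bias))]


def simple_attention(x, kernel, bias):
    probs = softmax(dense(x, kernel, bias))         # attention_probs
    out = [xi * pi for xi, pi in zip(x, probs)]     # attention_mul
    return out, probs


# Hypothetical weights for 3 input features: only the third output neuron
# responds to the input, so the third feature gets almost all the attention
x = [1.0, 2.0, 3.0]
kernel = [[0.0, 0.0, 1.0],
          [0.0, 0.0, 1.0],
          [0.0, 0.0, 1.0]]
bias = [0.0, 0.0, 0.0]
out, probs = simple_attention(x, kernel, bias)
```

The attention probabilities sum to 1, so the layer can only redistribute emphasis among the features: the third feature passes through almost unchanged while the others are suppressed.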

Below is a complete Attention class that implements a somewhat more complex self-attention mechanism and can be used as a full-fledged layer in a model; the class inherits from the Keras Layer class.

```python
# Written for Keras 2.x
from keras import backend as K
from keras import initializers, regularizers, constraints
from keras.layers import Layer


class Attention(Layer):
    def __init__(self, step_dim,
                 W_regularizer=None, b_regularizer=None,
                 W_constraint=None, b_constraint=None,
                 bias=True, **kwargs):
        self.supports_masking = True
        self.init = initializers.get('glorot_uniform')
        self.W_regularizer = regularizers.get(W_regularizer)
        self.b_regularizer = regularizers.get(b_regularizer)
        self.W_constraint = constraints.get(W_constraint)
        self.b_constraint = constraints.get(b_constraint)
        self.bias = bias
        self.step_dim = step_dim   # number of time steps in the input sequence
        self.features_dim = 0      # set in build()
        super(Attention, self).__init__(**kwargs)

    def build(self, input_shape):
        # Expects input of shape (batch, step_dim, features_dim)
        assert len(input_shape) == 3
        # One scoring weight per feature; shape is passed by keyword, because
        # in Keras 2.2+ the first positional argument of add_weight is the name
        self.W = self.add_weight(shape=(input_shape[-1],),
                                 initializer=self.init,
                                 name='{}_W'.format(self.name),
                                 regularizer=self.W_regularizer,
                                 constraint=self.W_constraint)
        self.features_dim = input_shape[-1]
        if self.bias:
            # One bias per time step
            self.b = self.add_weight(shape=(input_shape[1],),
                                     initializer='zero',
                                     name='{}_b'.format(self.name),
                                     regularizer=self.b_regularizer,
                                     constraint=self.b_constraint)
        else:
            self.b = None
        self.built = True

    def compute_mask(self, inputs, input_mask=None):
        # The output is one vector per sample, so no mask is propagated further
        return None

    def call(self, x, mask=None):
        features_dim = self.features_dim
        step_dim = self.step_dim
        # Score of each time step: e_t = x_t . W, shape (batch, step_dim)
        eij = K.reshape(K.dot(K.reshape(x, (-1, features_dim)),
                              K.reshape(self.W, (features_dim, 1))),
                        (-1, step_dim))
        if self.bias:
            eij += self.b
        eij = K.tanh(eij)
        a = K.exp(eij)
        if mask is not None:
            # Zero out the weights of padded time steps
            a *= K.cast(mask, K.floatx())
        # Normalize so that the weights of each sample sum to 1
        a /= K.cast(K.sum(a, axis=1, keepdims=True) + K.epsilon(), K.floatx())
        a = K.expand_dims(a)                  # (batch, step_dim, 1)
        weighted_input = x * a
        return K.sum(weighted_input, axis=1)  # (batch, features_dim)

    def compute_output_shape(self, input_shape):
        return input_shape[0], self.features_dim
```

Here we see roughly the same thing that was implemented above with a Keras fully connected layer, only done at a lower level with somewhat deeper logic. The layer creates a trainable weight vector (self.W), which is then multiplied via a dot product (K.dot) with the input vectors.
The logic built into this version is a bit more complicated: the product of the input vectors and the weights self.W gets a bias added (if the bias parameter is enabled), then passes through a hyperbolic tangent, exponentiation, a mask (if one is given) and normalization, after which the input vectors are weighted by the result. I have no description of the logic behind this example; I reproduce the operations by reading the code. By the way, please write in the comments if you recognize some higher-level mathematical function in this logic.
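
To follow these operations step by step without Keras, here is a pure-Python mirror of `call()` for a single sample. It is a sketch, not the layer itself: batching is dropped, and the inputs are hypothetical. One observation that falls out of the code: exponentiation followed by division by the sum is exactly a softmax over the time steps, so the layer computes `softmax(tanh(x_t . W + b_t))` and then a weighted average of the time steps.

```python
import math


def attention_pool(x, w, b=None, mask=None, eps=1e-7):
    """Pure-Python mirror of Attention.call() for ONE sample.

    x: list of step_dim vectors, each with features_dim numbers
    w: list of features_dim weights (plays the role of self.W)
    b: optional list of step_dim biases (plays the role of self.b)
    mask: optional list of 0/1 flags, one per time step
    Returns (weights, pooled_vector)."""
    # e_t = x_t . W (+ b_t), then squashed by tanh into [-1, 1]
    e = [sum(xi * wi for xi, wi in zip(x_t, w)) for x_t in x]
    if b is not None:
        e = [e_t + b_t for e_t, b_t in zip(e, b)]
    e = [math.tanh(e_t) for e_t in e]
    a = [math.exp(e_t) for e_t in e]
    if mask is not None:
        a = [a_t * m_t for a_t, m_t in zip(a, mask)]
    total = sum(a) + eps  # eps plays the role of K.epsilon() (default 1e-7)
    a = [a_t / total for a_t in a]
    # Weighted sum over time steps -> one vector of features_dim numbers
    pooled = [sum(a_t * x_t[j] for a_t, x_t in zip(a, x)) for j in range(len(w))]
    return a, pooled


# Hypothetical sequence: 3 time steps, 2 features each
x = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
w = [1.0, -1.0]
weights, pooled = attention_pool(x, w)
```

Because tanh bounds every score to [-1, 1], the largest unmasked weight can be at most e^2 (about 7.4) times the smallest, so this layer produces fairly soft attention distributions.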

The class has a "bias" parameter. If it is enabled, then after the dot product with self.W, the resulting vector is shifted by the vector of trainable parameters self.b (one value per time step). This makes it possible not only to determine the "weight" of our attention function, but also to shift the attention level by a fixed amount. An example from life: we are afraid of ghosts but have never met one, so we correct our fear by -100 points. That is, only if the fear exceeds 100 points do we decide to protect ourselves from ghosts: call the ghost hunters, buy scaring devices, and so on.
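
The following sketch isolates the effect of the bias. Since `self.b` has one entry per time step, a learned negative bias lowers the attention weight of that particular position even when its score is the same as everyone else's. The scores and bias values are hypothetical; the formula follows the class above (tanh, then exp, then normalization).

```python
import math


def step_weights(scores, bias):
    """Attention weights as in the class: normalized exp(tanh(score + bias))."""
    exps = [math.exp(math.tanh(s + b)) for s, b in zip(scores, bias)]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical x_t . W values: all three steps look equally important
scores = [0.5, 0.5, 0.5]
no_bias = step_weights(scores, [0.0, 0.0, 0.0])
# A learned negative bias on the last step: the "fear correction" for that position
shifted = step_weights(scores, [0.0, 0.0, -2.0])
```

Without the bias all three steps get a weight of 1/3; with a bias of -2.0 on the last step, its weight drops and the other two steps share the freed-up attention.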

Conclusion

The Attention mechanism has variations. The simplest version of Attention, implemented in the class above, is often called self-attention, although in the stricter sense used in Transformer models, self-attention lets every time step attend to every other step, whereas this class pools the whole sequence into a single vector. Self-attention is a mechanism for processing sequential data that takes into account the context of each time step. It is most often used for textual information. A self-attention implementation can be used out of the box by importing the keras-self-attention library. There are other variations of Attention as well: while studying the English-language materials, I counted more than 5 of them.
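
For contrast with the pooling class above, here is a minimal sketch of self-attention in the stricter sense: every position of the sequence computes weights over all positions of the same sequence and gets its own output vector. As a simplifying assumption, it uses scaled dot products of the raw input vectors; real self-attention layers first project the inputs into learned query, key and value spaces. The sequence values are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)  # for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(seq):
    """Each position attends to every position of the same sequence.

    Simplified sketch: no learned query/key/value projections."""
    d = len(seq[0])
    outputs, all_weights = [], []
    for query in seq:
        # Scaled dot-product score of this position against every position
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in seq]
        weights = softmax(scores)
        all_weights.append(weights)
        # New representation: weighted sum of all positions
        outputs.append([sum(w * v[j] for w, v in zip(weights, seq)) for j in range(d)])
    return outputs, all_weights


# Hypothetical sequence: 3 time steps with 2 features each
seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(seq)
```

Unlike the Attention class, which returns one vector per sequence, this returns one context-aware vector per time step, and the rows of `weights` form a full steps-by-steps attention matrix.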

While writing even this relatively short article, I studied more than 10 English-language articles. Of course, I could not fit all the material from them into 5 pages; I only made a digest in order to create a "guide for dummies". To cover all the nuances of the Attention mechanism, you would need a 150-200 page book. I really hope I managed to convey the basic essence of this mechanism, so that those who are just starting out in machine learning can understand how it all works.

Sources

- Attention mechanism in Neural Networks with Keras

- Attention in Deep Networks with Keras

- Attention-based Sequence-to-Sequence in Keras

- Text Classification using Attention Mechanism in Keras

- Pervasive Attention: 2D Convolutional Neural Networks for Sequence-to-Sequence Prediction

- How to implement the Attention Layer in Keras?

- Attention? Attention!

- Neural Machine Translation with Attention

Source: https://habr.com/ru/post/458992/
