Benchmarking PostgreSQL with Linux HugePages

The Linux kernel provides a wide range of configuration options that can affect performance. It's all about getting the right configuration for your application and workload. Like any other database, PostgreSQL relies on the Linux kernel being optimally configured. Poorly configured parameters can result in poor performance. Therefore, it is important to benchmark database performance after each tuning session to avoid performance degradation. In one of my previous posts, “Tuning Linux Kernel Parameters for PostgreSQL Optimization,” I described some of the most useful Linux kernel parameters and how they can help you improve database performance. Now I’m going to share my benchmark results after configuring Linux large pages (HugePages) under different PostgreSQL workloads. I ran a comprehensive set of benchmarks for different PostgreSQL load sizes and different numbers of concurrent clients.

Testing machine

- Supermicro server:

- Intel® Xeon® CPU E5-2683 v3 @ 2.00GHz

- 2 sockets / 28 cores / 56 threads

- Memory: 256GB of RAM

- Storage: SAMSUNG SM863 1.9TB Enterprise SSD

- Filesystem: ext4 / xfs

- OS: Ubuntu 16.04.4, kernel 4.13.0-36-generic

- PostgreSQL: version 11

Linux kernel settings

I used the default kernel settings without any optimization or tuning, except for disabling Transparent HugePages. Transparent HugePages are enabled by default and allocate a page size that may not be suitable for database use. Databases generally need fixed-size large pages, which Transparent HugePages do not provide. It is therefore always recommended to disable this feature and use classic large pages instead.
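The article does not show how the Transparent HugePages state was checked. As a minimal sketch, assuming the standard sysfs control file `/sys/kernel/mm/transparent_hugepage/enabled` (some distributions use a different path), the active mode is the one shown in square brackets, for example `always madvise [never]`. The helper names below are hypothetical.

```python
import re
from pathlib import Path

# Assumed location of the THP control file on mainline kernels.
THP_ENABLED = Path("/sys/kernel/mm/transparent_hugepage/enabled")


def active_thp_mode(text):
    """Return the bracketed (active) mode from e.g. 'always madvise [never]'."""
    match = re.search(r"\[(\w+)\]", text)
    if match is None:
        raise ValueError(f"no active mode found in {text!r}")
    return match.group(1)


def read_thp_mode(path=THP_ENABLED):
    """Read the control file and return the active mode, or None if absent."""
    if not path.exists():
        return None
    return active_thp_mode(path.read_text())
```

For the setup described here, `read_thp_mode()` would be expected to return `never`. Changing the mode is normally done by writing `never` to the same file as root, which this sketch deliberately does not do.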

PostgreSQL settings

I used the same PostgreSQL settings for all tests, so that the results reflect different PostgreSQL workloads under different large page settings. Here are the PostgreSQL settings used for all tests:

postgresql.conf

    shared_buffers = '64GB'
    work_mem = '1GB'
    random_page_cost = '1'
    maintenance_work_mem = '2GB'
    synchronous_commit = 'on'
    seq_page_cost = '1'
    max_wal_size = '100GB'
    checkpoint_timeout = '10min'
    checkpoint_completion_target = '0.9'
    autovacuum_vacuum_scale_factor = '0.4'
    effective_cache_size = '200GB'
    min_wal_size = '1GB'
    wal_compression = 'ON'

Testing scheme

The testing scheme plays an important role in benchmarking. Each test was run three times, for 30 minutes per run, and I took the average of the three results. The tests were run with pgbench, the PostgreSQL benchmarking tool. pgbench works with a scale factor, where one unit of scale factor corresponds to approximately 16 MB of data.
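The scale factor arithmetic can be sketched as follows. This is an illustration only: the article does not list the exact scale factors or pgbench command lines used, so the database name `bench_db` and the helper functions are hypothetical, and the 16 MB per scale unit figure is the approximation quoted above. The flags `-i`, `-s`, `-c`, and `-T` are standard pgbench options.

```python
import math

MB_PER_SCALE_UNIT = 16  # approximate figure quoted in the article, not measured here


def scale_for_size_gb(target_gb, mb_per_unit=MB_PER_SCALE_UNIT):
    """Smallest pgbench scale factor giving roughly target_gb of data."""
    return math.ceil(target_gb * 1024 / mb_per_unit)


def pgbench_commands(dbname, target_gb, clients, minutes=30):
    """Build (init, run) argument lists; nothing is executed here."""
    scale = scale_for_size_gb(target_gb)
    # -i initializes the tables, -s sets the scale factor.
    init = ["pgbench", "-i", "-s", str(scale), dbname]
    # -c sets the number of clients, -T the run time in seconds.
    run = ["pgbench", "-c", str(clients), "-T", str(minutes * 60), dbname]
    return init, run


if __name__ == "__main__":
    for size_gb in (48, 96):
        init, run = pgbench_commands("bench_db", size_gb, clients=32)
        print(" ".join(init))
        print(" ".join(run))
```

Under the 16 MB assumption, a 48 GB database corresponds to a scale factor of about 3072 and a 96 GB database to about 6144. The real on-disk size should be checked after initialization.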

Large pages (HugePages)

Linux uses 4 KB memory pages by default, and also offers large pages (HugePages). BSD has Super Pages, while Windows has Large Pages. These tests cover PostgreSQL with Linux large pages only. When an application uses a lot of memory, small pages hurt performance. By setting up large pages, you dedicate memory to the application and thereby reduce the overhead incurred during allocation and swapping; in other words, you gain performance by using large pages.

Here is the large page configuration when using a page size of 1 GB. You can always get this information from /proc.

    $ cat /proc/meminfo | grep -i huge
    AnonHugePages:         0 kB
    ShmemHugePages:        0 kB
    HugePages_Total:     100
    HugePages_Free:       97
    HugePages_Rsvd:       63
    HugePages_Surp:        0
    Hugepagesize:    1048576 kB

For more information on large pages, please read my previous blog post:

https://www.percona.com/blog/2018/08/29/tune-linux-kernel-parameters-for-postgresql-optimization/
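To make the `/proc/meminfo` counters easier to read, here is a small sketch that parses the output quoted in this section and derives a few figures from it. The function names are hypothetical. The interpretation follows the kernel's documented meaning of the fields: `HugePages_Free` still includes pages that are reserved but not yet faulted in, which `HugePages_Rsvd` counts.

```python
SAMPLE = """\
AnonHugePages:         0 kB
ShmemHugePages:        0 kB
HugePages_Total:     100
HugePages_Free:       97
HugePages_Rsvd:       63
HugePages_Surp:        0
Hugepagesize:    1048576 kB
"""


def parse_hugepage_info(text):
    """Collect the huge-page related counters from /proc/meminfo text."""
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep or "huge" not in key.lower():
            continue
        info[key.strip()] = int(rest.split()[0])  # drop the optional 'kB' unit
    return info


def summarize(info):
    """Derive pool size and usage figures from the parsed counters."""
    page_gb = info["Hugepagesize"] / (1024 * 1024)  # kB -> GB
    in_use = info["HugePages_Total"] - info["HugePages_Free"]
    return {
        "page_size_gb": page_gb,
        "pool_gb": info["HugePages_Total"] * page_gb,
        "in_use_pages": in_use,                               # already faulted in
        "committed_pages": in_use + info["HugePages_Rsvd"],   # in use or reserved
        "available_pages": info["HugePages_Free"] - info["HugePages_Rsvd"],
    }


if __name__ == "__main__":
    print(summarize(parse_hugepage_info(SAMPLE)))
```

For the sample above this gives a 100 GB pool of 1 GB pages, of which 66 pages are in use or reserved. That would be consistent with a 64 GB `shared_buffers` plus other shared memory, although the article does not state this explicitly.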

Large pages generally come in two sizes, 2 MB and 1 GB, so it makes sense to use the 1 GB size instead of the much smaller 2 MB size.

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/6/html/performance_tuning_guide/s-memory-transhuge

https://kerneltalks.com/services/what-is-huge-pages-in-linux/
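As a rough illustration of what the page size means for a 64 GB shared buffer, the sketch below counts how many pages of each size are needed to cover it. It is an assumption-laden estimate: PostgreSQL's total shared memory is somewhat larger than `shared_buffers`, so the real number of pages to reserve (for example through the `vm.nr_hugepages` sysctl) should be a little higher. The helper name is hypothetical.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB


def pages_needed(memory_bytes, page_bytes):
    """Number of pages of page_bytes needed to cover memory_bytes (rounded up)."""
    return -(-memory_bytes // page_bytes)  # integer ceiling division


if __name__ == "__main__":
    shared_buffers = 64 * GIB  # value from the postgresql.conf used in the tests
    for label, page in (("4 KB", 4 * KIB), ("2 MB", 2 * MIB), ("1 GB", GIB)):
        print(f"{label:>5} pages: {pages_needed(shared_buffers, page):,}")
```

The same 64 GB takes more than 16 million 4 KB pages, 32,768 pages of 2 MB, or just 64 pages of 1 GB, which is why larger pages reduce the page-table and TLB bookkeeping the kernel and CPU have to do.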

Test results

This test shows the overall effect of different large page sizes. The first set of tests was run with the default Linux page size of 4 KB, without large pages enabled. Note that Transparent HugePages were also disabled and stayed disabled throughout these tests.

The second set of tests was then run with a large page size of 2 MB. Finally, the third set was run with a large page size of 1 GB.

All of these tests were run on PostgreSQL version 11. The sets cover combinations of different database sizes and client counts. The graph below shows the comparative results, with TPS (transactions per second) on the Y axis and database size and number of clients per database size on the X axis.

This graph shows that the performance gain from large pages grows as the number of clients and the database size increase, as long as the database still fits in the pre-allocated shared memory buffer (shared buffer).

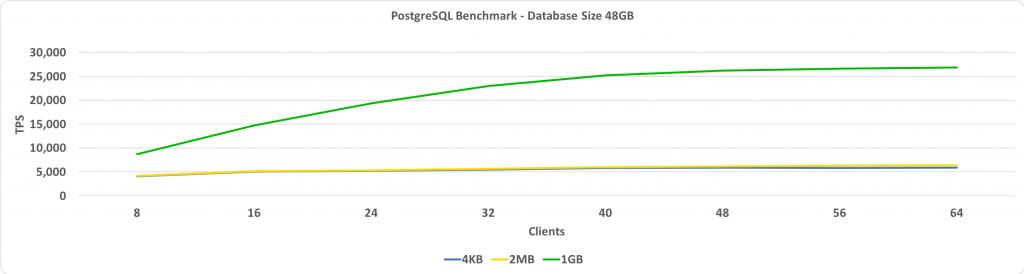

This test shows TPS versus the number of clients. In this case, the database size is 48 GB. TPS is on the Y axis and the number of connected clients is on the X axis. The database is small enough to fit in the shared buffer, which is set to 64 GB.

With 1 GB large pages, the more clients there are, the higher the relative performance gain.

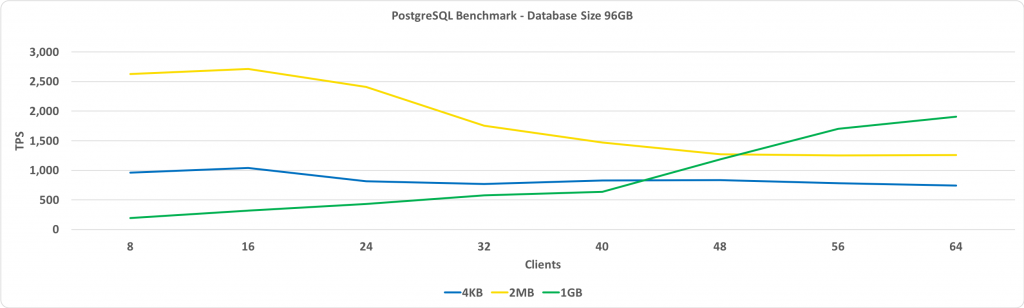

The next graph is the same as the one above, except that the database size is 96 GB. This exceeds the shared buffer, which is set to 64 GB.

The key observation here is that performance with 1 GB large pages improves as the number of clients grows, and it eventually exceeds that of 2 MB large pages or the standard 4 KB page size.

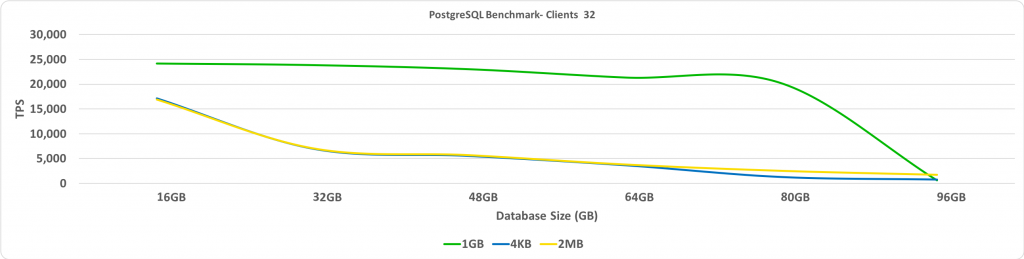

This test shows TPS depending on the size of the database. In this case, the number of connected clients is 32. On the Y axis, we have TPS, and on the X axis, the size of the database.

As expected, once the database outgrows the pre-allocated large pages, performance drops significantly.

Summary

One of my key recommendations is to turn off Transparent HugePages. You will see the greatest performance gain when the database fits in the shared buffer with large pages enabled. Choosing the large page size takes a little trial and error, but it can potentially lead to a significant TPS gain when the database is large yet still small enough to fit in the shared buffer.

Source: https://habr.com/ru/post/458910/
