How we pierced the Great Firewall of China (Part 2)

Hello!

Nikita here again, a system engineer at SEMrush. In this article I continue the story of how we built a solution to get our service, semrush.com, through the Chinese firewall.

In the previous part I covered:

- the problems that arise once the decision “We need to make our service work in China” is made

- what problems the Chinese internet has

- why you need an ICP license

- how and why we decided to check our test benches with Catchpoint

- the results of our first solution, based on the Cloudflare China Network

- how we found a bug in Cloudflare DNS

In my opinion, this part is the most interesting, because it focuses on the specific technical implementation of our test setups. We will begin, or rather continue, with Alibaba Cloud.

Alibaba Cloud

Alibaba Cloud is a fairly large provider with the full set of services it needs to honestly call itself a cloud provider. Conveniently, foreign users can register, and most of the site is translated into English (a luxury for China). The cloud lets you work with many regions around the world, including mainland China and the overseas regions (Hong Kong, Taiwan, etc.).

IPSEC

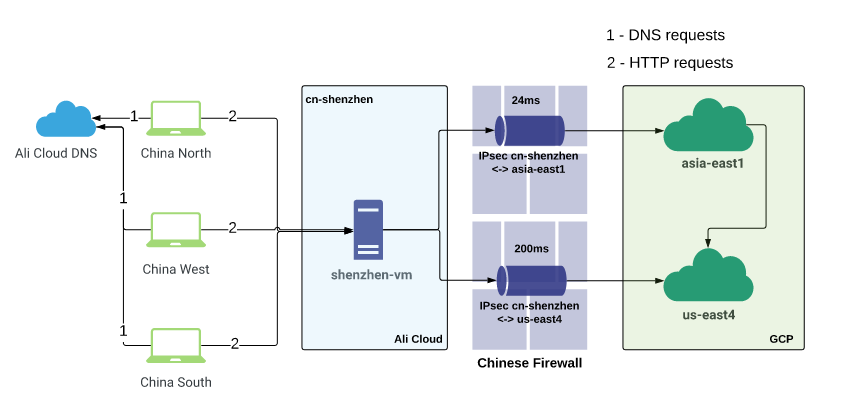

We started with geography. Our test site was in Google Cloud, so we had to “link” Alibaba Cloud with GCP. We opened the list of Google's locations. At the time, Google did not yet have its own data center in Hong Kong.

The nearest region was asia-east1 (Taiwan). On Ali's side, the mainland China region closest to Taiwan is cn-shenzhen (Shenzhen).

Using Terraform, we described and deployed the entire infrastructure in GCP and Ali. A 100 Mbps tunnel between the clouds came up almost instantly. We brought up proxy VMs in Shenzhen and Taiwan. In Shenzhen, user traffic is terminated and proxied through the tunnel to Taiwan. From there it goes directly to the external IP of our service in us-east (US East Coast). Ping between the VMs over the tunnel was 24 ms, which is not bad at all.

At the same time, we placed a test zone in Alibaba Cloud DNS. After we delegated the zone to Ali's name servers, resolution time dropped from 470 ms to 50 ms. Before that, this zone had also been hosted on Cloudflare.

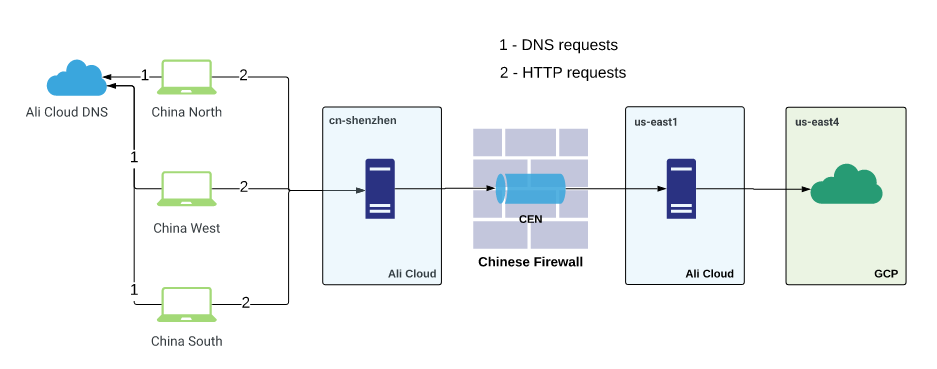

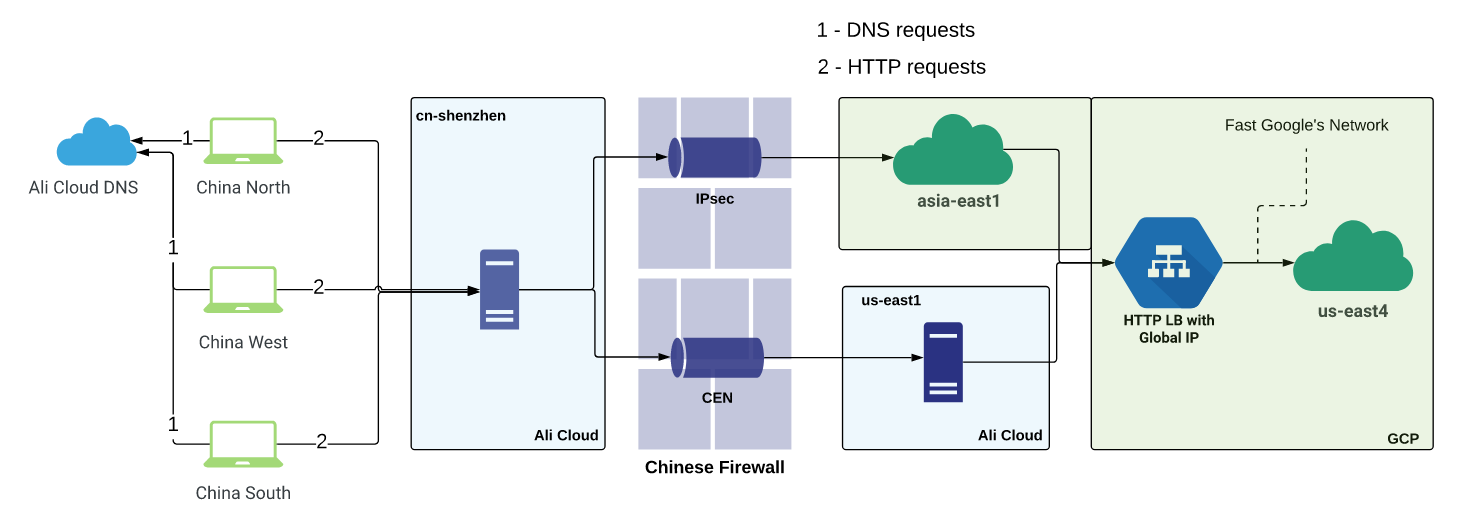

In parallel with the tunnel to asia-east1, we set up another tunnel from Shenzhen straight to us-east4. We created more proxy VMs and started measuring both solutions, routing test traffic with cookies or DNS. The test bench is shown schematically in the figure below.

The tunnel latencies were:

- Ali cn-shenzhen <-> GCP asia-east1: 24 ms

- Ali cn-shenzhen <-> GCP us-east4: 200 ms

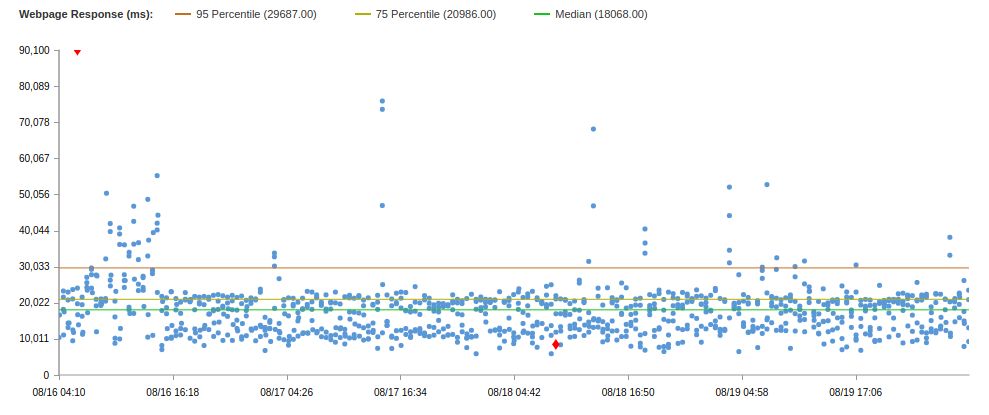
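For illustration only, here is a minimal sketch of how the Shenzhen proxy could pick a tunnel per request by cookie. This is one way to do the cookie-based routing of test traffic mentioned above. The cookie name “bench”, the upstream names and the private addresses are hypothetical, not our production values. The DNS-based variant would instead give each bench its own hostname.

```nginx
# Hypothetical sketch (http context) for the cn-shenzhen proxy VM.
# Pick the tunnel from the "bench" cookie; without the cookie use asia-east1.
map $cookie_bench $bench_upstream {
    default  via_asia_east1;   # tunnel Shenzhen -> GCP asia-east1
    useast4  via_us_east4;     # tunnel Shenzhen -> GCP us-east4
}

# Private IPs of the proxies at the far end of each tunnel (placeholders)
upstream via_asia_east1 { server 10.140.0.2:80; }
upstream via_us_east4   { server 10.150.0.2:80; }

server {
    listen 80;
    server_name .semrushchina.cn;

    location / {
        # The variable's value is matched against the upstream{} names above
        proxy_pass http://$bench_upstream;
        proxy_set_header Host $host;
    }
}
```

When proxy_pass contains a variable, nginx first looks the resulting name up among the defined upstream groups. That is why no resolver directive is needed in this case.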

Catchpoint browser tests reported excellent performance improvements.

Here are the test results for the two solutions:

| Solution | Uptime, % | Median | 75th percentile | 95th percentile |
|---|---|---|---|---|
| Cloudflare | 86.6 | 18s | 30s | 60s |
| IPsec | 99.79 | 18s | 21s | 30s |

The IPsec figures are for the tunnel through asia-east1. Through us-east4 the results were worse and there were more errors, so I won't include them.

This test compared two tunnels: one terminated in the region nearest to China, the other at the final destination. It made one thing clear. You need to “surface” from behind the Chinese firewall as quickly as possible and then use fast networks (CDN providers, cloud providers, etc.). Don't try to cross the firewall and reach your destination in one leap: that is not the fastest way.

Overall, the results are not bad. However, on the same test semrush.com has a median of 8.8s and a 75th percentile of 9.4s.

Before moving on, I'd like to make a small lyrical digression.

Lyrical digression

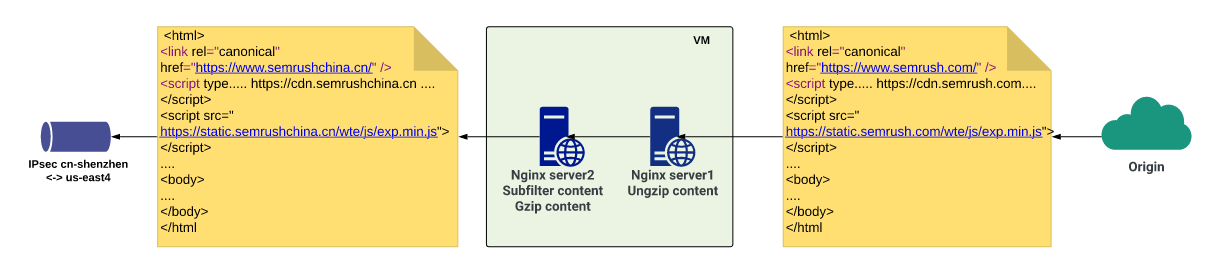

When a user visits www.semrushchina.cn, the name is resolved via the “fast” Chinese DNS servers, and the HTTP request goes through our fast solution. The response comes back along the same path. However, the JS scripts, HTML pages and other elements of the page all reference semrush.com for the additional resources that must be loaded to render it. In other words, the client resolves the “main” A record www.semrushchina.cn and goes through the fast tunnel. It quickly receives a response, an HTML page that says:

- download such-and-such JS from sso.semrush.com,

- fetch CSS files from cdn.semrush.com,

- fetch images from dab.semrush.com,

- and so on.

The browser then goes out to the “external” Internet for these resources. Each time it passes through the firewall, which eats up response time.

The results of the previous test, however, were obtained with no semrush.com resources on the page, only semrushchina.cn. The *.semrushchina.cn names resolve to the address of the VM in Shenzhen, so that traffic then enters the tunnel.

You need to route as much traffic as possible through your fast firewall-crossing solution. Only then can you get acceptable site speed and availability, and honest test results for the solution.

We did this without a single code change on the product teams' side.

Subfilter

The solution came almost as soon as the problem surfaced. We needed a PoC (proof of concept) showing that our firewall-crossing solutions really work well. For that, as much of the site's traffic as possible had to go through the solution. So we used sub_filter in nginx.

Subfilter (ngx_http_sub_module) is a fairly simple nginx module that replaces one string in the response body with another. So we replaced every occurrence of semrush.com with semrushchina.cn in all responses.

And... it didn't work. The backends returned compressed content, so the subfilter could not find the string. We had to add another local server to nginx that decompressed the response and passed it to the next local server. That server replaced the string, compressed the result and handed it to the next proxy server in the chain.

As a result, wherever the client would have received <subdomain>.semrush.com, it received <subdomain>.semrushchina.cn and obediently went through our solution.

However, rewriting the domain in one direction is not enough, because the backends still expect semrush.com in the client's subsequent requests. So the same server that does the replacement also extracts the subdomain from the request with a simple regular expression. It then does proxy_pass with $host set to $subdomain.semrush.com. It may sound convoluted, but it works, and it works well. Individual domains that need different logic simply get their own server blocks with a separate configuration. Below are shortened nginx configs that illustrate the scheme.

The following config handles all requests from China to *.semrushchina.cn:

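```nginx
server {
    listen 80;
    # Capture the subdomain of <subdomain>.semrushchina.cn
    server_name ~^(?<subdomain>[\w\-]+)\.semrushchina\.cn$;

    # Rewrite every occurrence of .semrush.com in response bodies
    sub_filter '.semrush.com' '.semrushchina.cn';
    sub_filter_last_modified on;
    sub_filter_once off;
    sub_filter_types *;

    # Re-compress the rewritten responses for the client
    gzip on;
    gzip_proxied any;
    gzip_types text/plain text/css application/json application/x-javascript text/xml application/xml application/xml+rss text/javascript application/javascript;

    location / {
        # Hand off to the local decompressing server below
        proxy_pass http://127.0.0.1:8083;
        # Ask for an uncompressed body so sub_filter can find the string
        proxy_set_header Accept-Encoding "";
        # Restore the original domain for the backend
        proxy_set_header Host $subdomain.semrush.com;
        proxy_set_header X-Accept-Encoding $http_accept_encoding;
    }
}
```

This config proxies requests to port 8083 on localhost, where the next config is waiting: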

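```nginx
server {
    listen 127.0.0.1:8083;
    server_name *.semrush.com;

    location / {
        resolver 8.8.8.8 ipv6=off;
        # Decompress gzip responses, since the server above did not accept gzip
        gunzip on;
        # $host is <subdomain>.semrush.com, set by the server above
        proxy_pass https://$host;
        # Still request compressed content from the backend
        proxy_set_header Accept-Encoding gzip;
    }
}
```

Again, these configs are trimmed.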

That's all. It may sound complicated in words, but in fact it's as easy as pie :)

End of lyrical digression

For a while we were happy, because the myth about IPsec tunnels constantly dropping didn't seem to hold. But then the tunnels started dropping: several times a day, for several minutes at a time. Not much, but unacceptable for us. Both tunnels terminated on the same router on the Ali side. So we decided it might be a regional problem and that we needed to bring up a backup region.

We brought it up. At first the tunnels dropped at different times, and failover at the nginx upstream level handled that perfectly. But then the tunnels started dropping at about the same time :) and the 502s and 504s were back. Uptime began to degrade, so we started working on an option based on Alibaba CEN (Cloud Enterprise Network).
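Failover at the nginx upstream level can be sketched roughly as below, assuming each tunnel's far end is reachable by a private IP. The addresses, timeouts and max_fails values are illustrative guesses, not the settings we actually ran. The point is the mechanism. Passive health checks mark a dead path as unavailable, and proxy_next_upstream retries the failed request on the other path.

```nginx
# Hypothetical sketch: the exit reached over two tunnels, one per Ali region.
upstream tunnel_exits {
    # Path through the tunnel from cn-shenzhen (placeholder address)
    server 10.140.0.2:80 max_fails=2 fail_timeout=30s;
    # Path through the tunnel from the backup region (placeholder address)
    server 10.140.0.3:80 max_fails=2 fail_timeout=30s;
}

server {
    listen 80;
    server_name .semrushchina.cn;

    location / {
        proxy_pass http://tunnel_exits;
        proxy_set_header Host $host;
        # Give up quickly on a dead tunnel instead of waiting the default 60s
        proxy_connect_timeout 3s;
        # Retry the request on the other path on these failures
        proxy_next_upstream error timeout http_502 http_504;
        proxy_next_upstream_tries 2;
    }
}
```

This only helps while at least one path is alive. When both tunnels drop at once, every server in the group fails and clients see 502/504, which matches the behavior described above.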

CEN

CEN connects VPCs from different regions within Alibaba Cloud, so you can link the private networks of any regions in the cloud to each other. Most importantly, this channel comes with a rather strict SLA and is very stable in both speed and uptime. But it's never that simple:

- it is VERY difficult to get if you are not a Chinese citizen or legal entity,

- you pay for each megabit of bandwidth.

Once we were able to connect Mainland China and Overseas, we created a CEN between two Ali regions: cn-shenzhen and us-east-1 (the closest point to us-east4). In Ali us-east-1 we brought up another VM, which added one more hop.

It turned out like this:

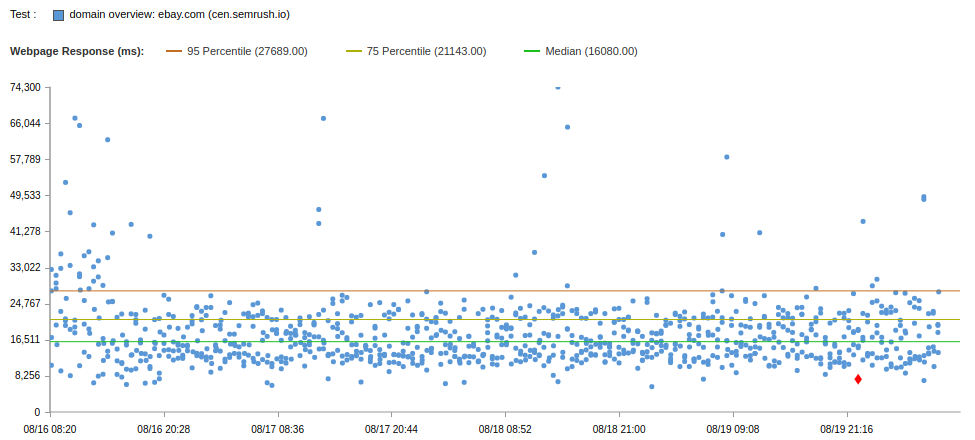

The browser test results:

| Solution | Uptime, % | Median | 75th percentile | 95th percentile |
|---|---|---|---|---|
| Cloudflare | 86.6 | 18s | 30s | 60s |
| IPsec | 99.79 | 18s | 21s | 30s |
| CEN | 99.75 | 16s | 21s | 27s |

Performance is slightly better than with IPsec. But over IPsec you can potentially download at 100 Mbps, whereas CEN gives only 5 Mbps, and at a higher price.

A hybrid suggests itself, right? Combine the speed of IPsec with the stability of CEN.

So we did. Traffic went through IPsec, and through CEN whenever the IPsec tunnel dropped. Uptime became much higher, but the site's load speed still left much to be desired. Then I drew all the schemes we had already used and tested. I decided to add a bit more GCP to the mix, namely GLB.

GLB

GLB is the Global Load Balancer (Google Cloud Load Balancing). It has an important advantage for us: as with a CDN, it uses an anycast IP. Traffic is routed to the Google location closest to the client, so it enters Google's fast network sooner and spends less time on the “regular” Internet.

Without thinking twice, we set up an HTTP(S) load balancer in GCP and put our subfilter VMs behind it as backends.

There were several schemes:

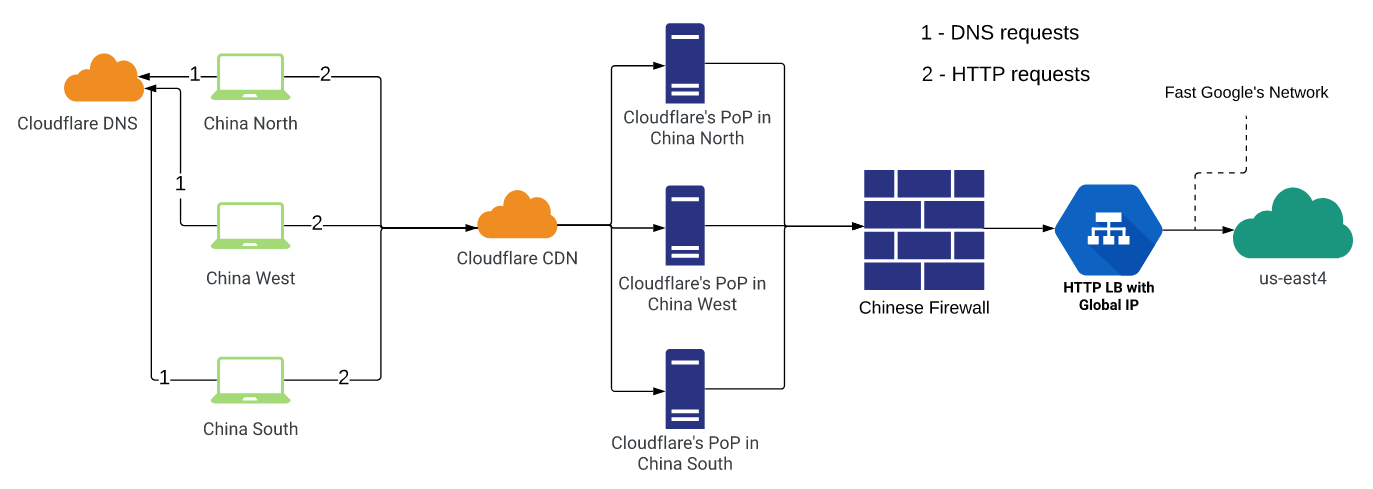

- Use the Cloudflare China Network, but this time specify the GLB global IP as the origin.

- Terminate clients in cn-shenzhen and proxy traffic from there straight to GLB.

- Go from China straight to GLB.

- Terminate clients in cn-shenzhen, proxy from there to asia-east1 via IPsec (or to Ali us-east-1 via CEN), and from there go to GLB (don't worry, a picture and an explanation follow below).

We tested all of these options and a few more hybrid ones:

- Cloudflare + GLB

This scheme did not suit us because of poor uptime and DNS errors. However, the test was run before Cloudflare fixed the bug, so it may be better now (though that does not rule out HTTP timeouts).

- Ali + GLB

This scheme didn't suit us on uptime either. GLB often dropped out of the upstream because connections either could not be established in an acceptable time or timed out. For a server inside China, the GLB address is still outside, that is, behind the Chinese firewall. No magic happened.

- GLB only

This is similar to the previous variant, but without servers in China itself: traffic went straight to GLB (we changed the DNS records). The results were unsatisfactory. For ordinary Chinese users on ordinary ISPs, crossing the firewall is much worse than it is for Ali Cloud.

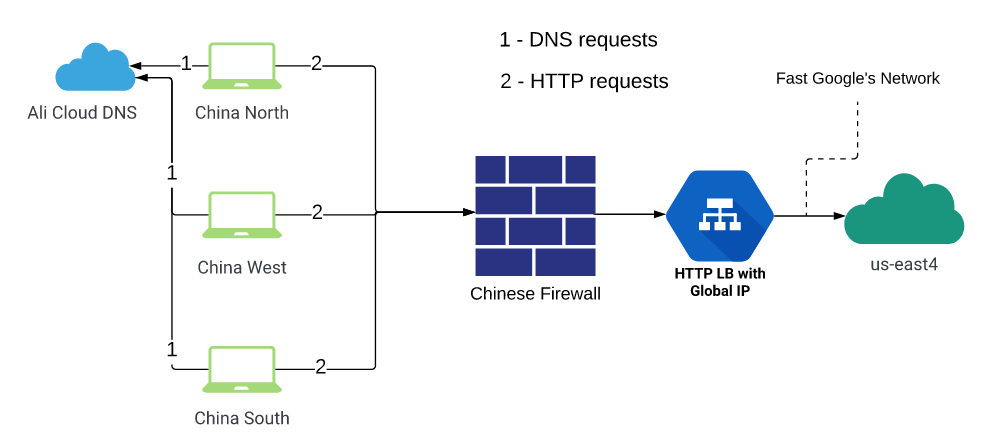

- Shenzhen -> (CEN / IPSEC) -> Proxy -> GLB

Here we decided to combine the best of all the solutions:

- stability and guaranteed SLA from CEN

- high speed from IPSEC

- Google's “fast” network and its anycast.

The scheme works like this: user traffic is terminated on a VM in cn-shenzhen. An nginx upstream is configured there. Some of its servers point to the private IPs of servers at the other end of the IPsec tunnel, others to private server addresses on the other side of CEN. IPsec was set up to the asia-east1 region in GCP, the closest region to China when we built the solution (GCP now also has a presence in Hong Kong). CEN went to the us-east-1 region in Ali Cloud.

From both ends, traffic then went to the GLB anycast IP, that is, to Google's nearest point of presence. It then traveled over Google's network to the us-east4 region in GCP, where the rewriting VMs (sub_filter in nginx) were running.
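Below is a sketch of the exit proxy at the far end of IPsec or CEN, which forwards traffic to the GLB anycast IP. The address 198.51.100.10 is a placeholder from a documentation range that stands in for the real GLB address. The TLS settings are an assumption about how such a hop could be configured, not a copy of our config.

```nginx
# Hypothetical sketch of the exit proxy in GCP asia-east1 or Ali us-east-1.
server {
    listen 80;
    server_name .semrushchina.cn;

    location / {
        # Placeholder for the GLB anycast IP (documentation address range)
        proxy_pass https://198.51.100.10;
        # Keep the client's hostname so the load balancer routes it correctly
        proxy_set_header Host $host;
        # Send SNI so the load balancer can present the matching certificate
        proxy_ssl_server_name on;
        proxy_ssl_name $host;
    }
}
```

Because the address is anycast, the same config works on both exits. Each one reaches its own nearest Google point of presence.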

As we expected, this hybrid let us take advantage of each technology. Normally traffic goes through the fast IPsec tunnel. If problems start, we quickly remove those servers from the upstream for a few minutes and send traffic only through CEN until the tunnel stabilizes.
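One way to express “IPsec first, CEN only when IPsec fails” in stock nginx is the backup flag on the CEN server. Combine it with fail_timeout to keep a failed IPsec server out of rotation for a few minutes. This is a hedged sketch: the addresses and the 120s value are hypothetical, and it shows only the upstream and location parts of the cn-shenzhen config.

```nginx
# Hypothetical sketch of the upstream on the cn-shenzhen VM.
upstream china_exit {
    # Proxies behind the IPsec tunnel (GCP asia-east1): fast path.
    # One failure takes a server out of rotation for 120s.
    server 10.140.0.2:80 max_fails=1 fail_timeout=120s;
    server 10.140.0.3:80 max_fails=1 fail_timeout=120s;

    # Proxy behind CEN (Ali us-east-1): slower and paid per Mbps, but stable.
    # Used only while all servers above are unavailable.
    server 172.16.0.10:80 backup;
}

# Inside the server {} block for .semrushchina.cn:
location / {
    proxy_pass http://china_exit;
    proxy_set_header Host $host;
    proxy_connect_timeout 2s;
    proxy_next_upstream error timeout http_502 http_504;
}
```

With the default round-robin balancing, a server marked backup receives requests only when all primary servers are unavailable. Once fail_timeout expires, nginx tries the IPsec servers again with live traffic. If the tunnel has recovered, traffic returns to it automatically.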

By implementing the fourth option from the list above, we achieved what we wanted and what the business required of us at the time.

Browser test results for the new solution compared with the previous ones:

| Solution | Uptime, % | Median | 75th percentile | 95th percentile |
|---|---|---|---|---|
| Cloudflare | 86.6 | 18s | 30s | 60s |
| IPsec | 99.79 | 18s | 21s | 30s |
| CEN | 99.75 | 16s | 21s | 27s |
| CEN / IPsec + GLB | 99.79 | 13s | 16s | 25s |

CDN

Our solution works well, except that it has no CDN to accelerate traffic at the level of regions and even cities. In theory, a CDN should speed up the site for end users thanks to the provider's fast links. We kept thinking about this. Now the time has come for the next iteration of the project: finding and testing CDN providers in China.

And I will tell you about it in the next, final part :)


Source: https://habr.com/ru/post/458840/
