A failed software deployment caused a Cloudflare service outage

This is a short interim post. It will later be replaced by a full analysis and detailed information about today's incident.

Today, for about 30 minutes, visitors to sites using Cloudflare saw 502 errors caused by a sharp spike in CPU load across our network. The spike was caused by a bad software deployment. We rolled back the change. The service is now operating normally, and all domains using Cloudflare have returned to normal traffic levels.

This was not an attack, and we sincerely apologize for the disruption. Our engineers are already analyzing the failure in detail to determine what we need to change to prevent similar incidents in the future.

Added at 20:09 UTC:

Today at 13:42 UTC, we detected a failure across our network that caused visitors to Cloudflare-proxied domains to see HTTP 502 ("Bad Gateway") errors. The cause was a misconfigured rule in the Cloudflare Web Application Firewall (WAF). It was deployed during a routine rollout of new Cloudflare managed WAF rules.

The new rules were intended to improve blocking of inline JavaScript used in attacks. They were deployed in simulation mode. In this mode, matches are detected and logged, but user traffic is not blocked. This lets us measure the false-positive rate and confirm that the new rules will not cause problems once they are deployed into full production.
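To make "simulation mode" concrete, here is a minimal sketch of a rule engine with a per-rule mode flag. It assumes a hypothetical `Rule` type and `evaluate` function. This post does not describe Cloudflare's internal WAF engine. A rule in simulate mode is evaluated and its matches are logged, but only rules in block mode can stop a request.

```python
import re
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    SIMULATE = "simulate"  # log matches, never block
    BLOCK = "block"        # log matches and block the request


@dataclass
class Rule:
    # Hypothetical rule shape for illustration only.
    rule_id: str
    pattern: re.Pattern
    mode: Mode


def evaluate(rules, payload, log):
    """Run every rule against payload; return True if the request should be blocked.

    Each match is appended to `log` as (rule_id, mode) whatever the rule's mode.
    """
    blocked = False
    for rule in rules:
        # The pattern is executed even for simulated rules: logging a match
        # requires evaluating it.
        if rule.pattern.search(payload):
            log.append((rule.rule_id, rule.mode.value))
            if rule.mode is Mode.BLOCK:
                blocked = True
    return blocked
```

A simulated rule still runs its regular expression against every request. Simulation mode therefore shields traffic from false positives, but not from a rule that is expensive to evaluate.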

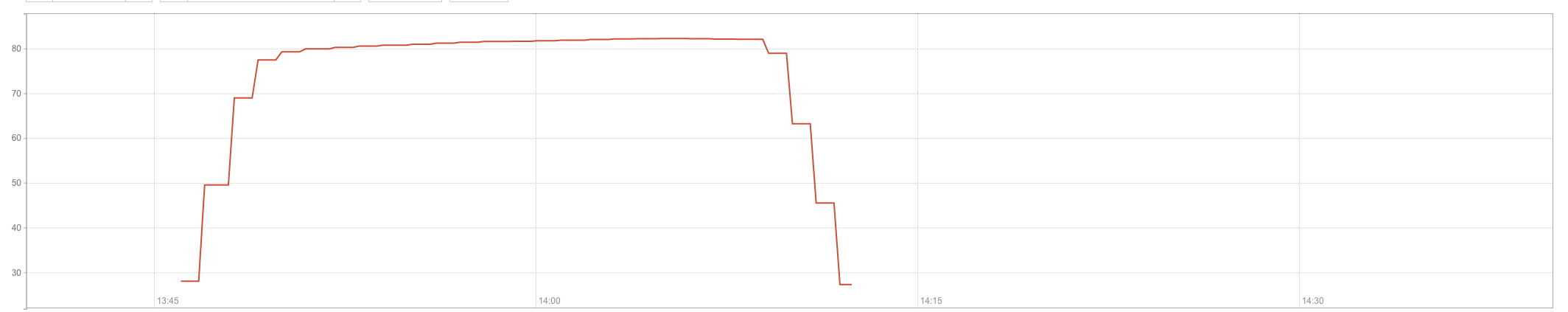

Unfortunately, one of these rules contained a regular expression that led to a jump in CPU utilization of up to 100% on our computers everywhere. It is because of this jump, users of our service witnessed error 502, and traffic fell to 82%.

The graph below shows a jump in CPU utilization in one of our PoPs:

This was the first time we had experienced global CPU exhaustion, and it took us completely by surprise.
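The post does not show the offending regular expression. The sketch below is a generic illustration, not Cloudflare's actual rule. It models how a backtracking regex engine handles a pattern with two adjacent unbounded quantifiers, `.*.*=.*`, on input that contains no `=`. The engine tries every way of splitting the input between the two `.*` groups, at every start offset, before it gives up. The work therefore grows cubically with input length. The function name `backtracking_probes` is hypothetical. Real engines may apply optimizations that change the exact counts.

```python
from typing import Tuple


def backtracking_probes(text: str) -> Tuple[bool, int]:
    """Model an unanchored backtracking search for the pattern `.*.*=.*`.

    Returns (matched, probes). `probes` counts each time the matcher tests
    whether the character at some position is '='.
    """
    n = len(text)
    probes = 0
    # An unanchored search retries the whole pattern at every start offset.
    for start in range(n + 1):
        # First greedy .*: take as much as possible, then give back one char at a time.
        for end1 in range(n, start - 1, -1):
            # Second greedy .*: the same backtracking, beginning where the first stopped.
            for end2 in range(n, end1 - 1, -1):
                probes += 1
                if end2 < n and text[end2] == "=":
                    # The trailing .* matches anything, so the search succeeds here.
                    return True, probes
    return False, probes


if __name__ == "__main__":
    for n in (10, 50, 100):
        print(n, backtracking_probes("x" * n)[1])
```

For a string of n characters with no `=`, the probe count is (n+1)(n+2)(n+3)/6. That gives 286 for n = 10 and 176,851 for n = 100. Run on every request at full traffic, that growth is enough to saturate a CPU. Regex engines that guarantee linear-time matching, such as RE2, do not backtrack and avoid this class of blow-up.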

We deploy software across our network constantly. We have automated systems that run test suites, and a phased deployment procedure designed to prevent incidents like this. Unfortunately, these WAF rules were deployed globally in a single step, which caused today's outage.
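The difference between a phased procedure and a single global push can be sketched as follows. This is a minimal illustration, not Cloudflare's deployment system. The wave list, the `deploy`, `rollback` and `cpu_of` callbacks, and the 80% CPU threshold are all hypothetical. A real pipeline would also let each wave soak for a while before checking its health.

```python
from typing import Callable, List, Sequence


def staged_rollout(
    waves: Sequence[Sequence[str]],
    deploy: Callable[[str], None],
    rollback: Callable[[str], None],
    cpu_of: Callable[[str], float],
    cpu_limit: float = 0.8,  # hypothetical health threshold (fraction of CPU)
) -> bool:
    """Deploy wave by wave, gating each wave on a CPU health check.

    If any PoP in the wave just deployed exceeds cpu_limit, every PoP touched
    so far is rolled back (most recent first) and False is returned.
    Returns True if all waves were deployed.
    """
    deployed: List[str] = []
    for wave in waves:
        for pop in wave:
            deploy(pop)
            deployed.append(pop)
        # Health gate: inspect the wave we just deployed before going further.
        if any(cpu_of(pop) > cpu_limit for pop in wave):
            for pop in reversed(deployed):
                rollback(pop)
            return False
    return True
```

With this gate, a change that pegs CPU as soon as it lands is stopped at the first (canary) wave and rolled back. It never reaches every machine at once.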

At 14:02 UTC we understood what was happening and decided to globally disable the WAF managed rule sets. This immediately brought CPU load back to normal and restored traffic. That happened at 14:09 UTC.

We then reviewed the offending pull request and rolled back the specific rules. We tested the fix to be 100% certain it was correct, and re-enabled the WAF rule sets at 14:52 UTC.

We understand how much harm incidents like this cause our customers. Our testing process fell short this time. We are already working to improve it and to refine our deployment process so that similar errors do not happen again.


Source: https://habr.com/ru/post/458660/
