Depth cameras: a quiet revolution (when robots will see), Part 2

In the first part of this text, we looked at depth cameras based on structured light and on measuring the round-trip delay of light, which mainly use infrared illumination. They work well indoors at distances from 10 centimeters to 10 meters and, most importantly, they are very cheap. Hence the massive current wave of their use in smartphones. But ... as soon as we go outside, the sun, even through clouds, swamps the infrared illumination, and their performance deteriorates dramatically.

As Steve Blank says (on a different occasion, admittedly): “If you want success, leave the building.” Below we discuss depth cameras that work outdoors. Today this topic is driven largely by autonomous cars but, as we will see, not only by them.


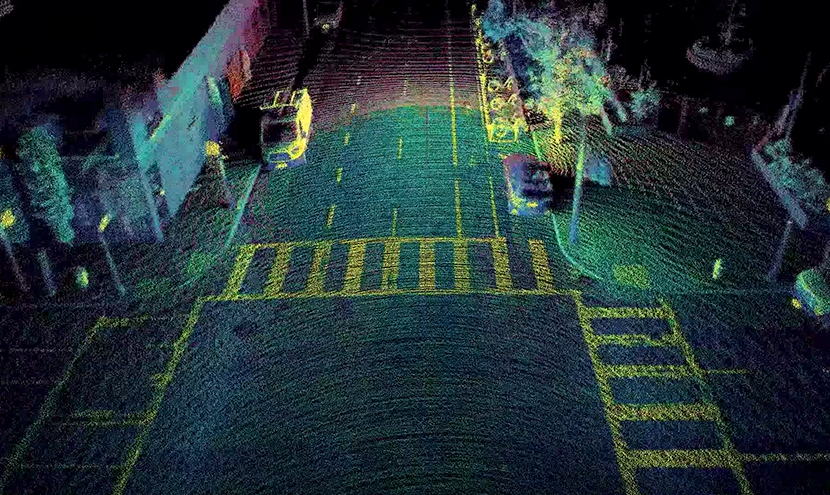

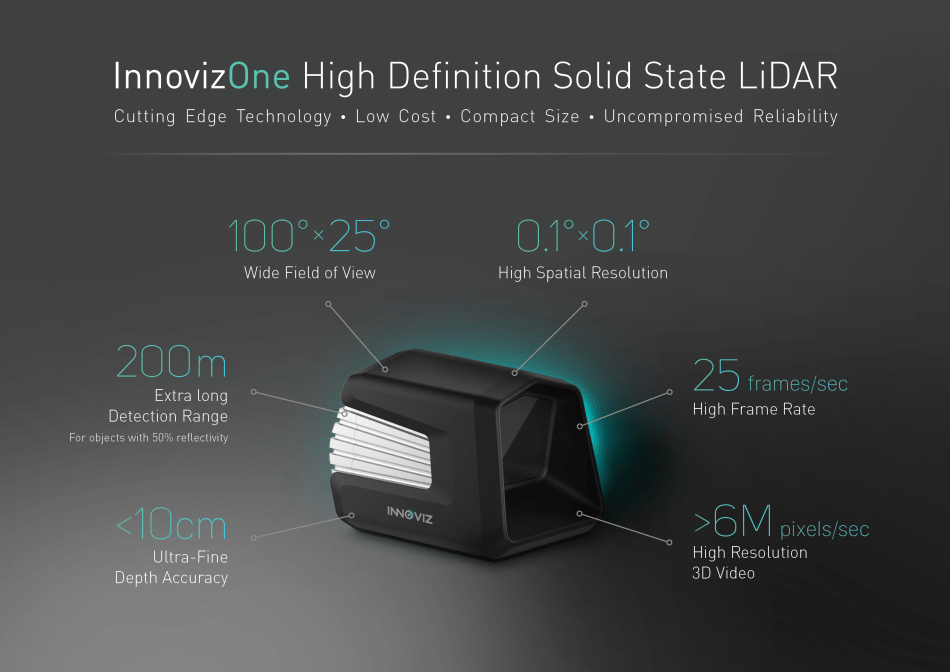

Source: Innoviz Envisions Mass Produced Self-Driving Cars With Solid State LiDAR

So: depth cameras, i.e. devices that shoot video in which each pixel is the distance to an object in the scene, and that work in sunlight!

If you're interested, read on!

Let's start with the eternal classics ...

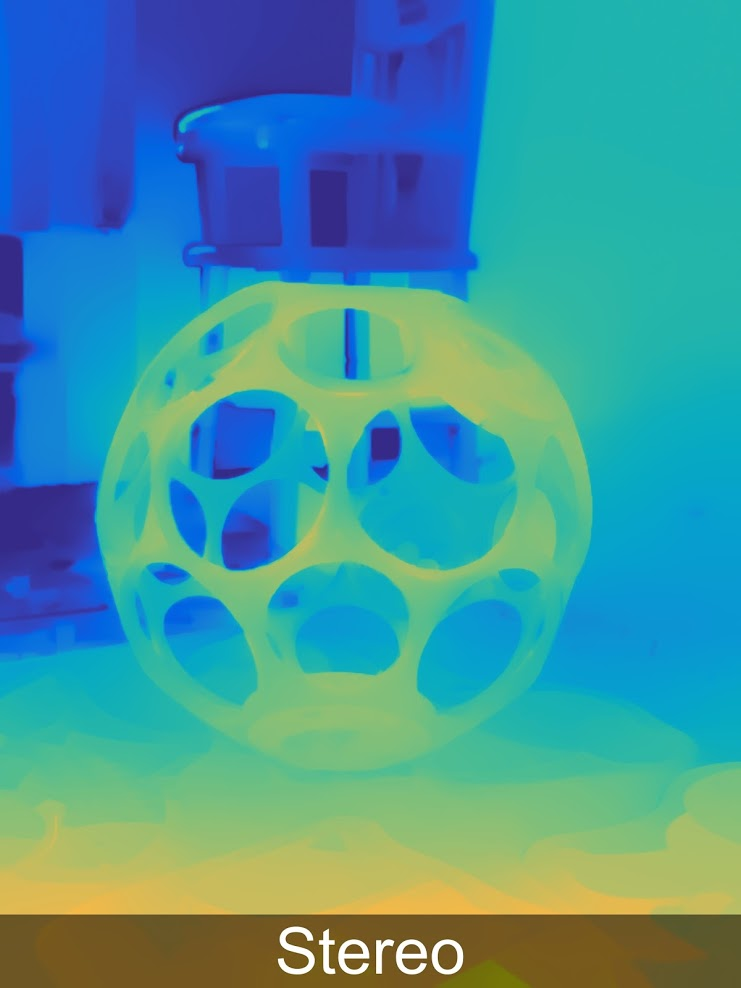

Method 3: Depth from Stereo Camera

Building a depth map from stereo is well known and has been in use for more than 40 years. Below is an example of a compact $450 camera that can be used for gesture control, alongside professional photography, or together with VR headsets:

Source

The main advantage of such cameras is that sunlight not only does not interfere with them but, on the contrary, improves their results. Hence the active use of such cameras for all kinds of outdoor applications; for example, here is a great example of capturing a 3D model of an old fort in three minutes:

ZED outdoor camera example

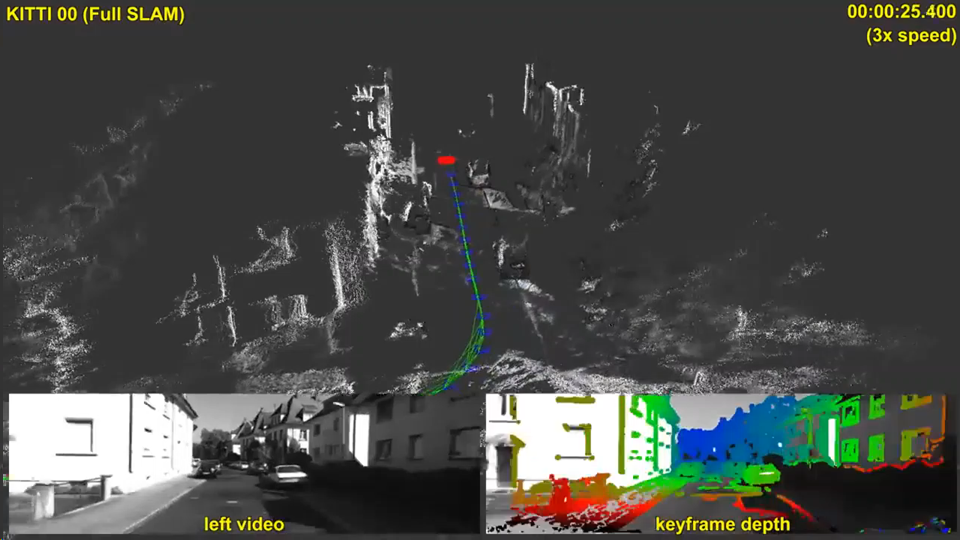

Considerable money for taking depth from stereo to a new level has come, of course, from autonomous cars. Of the 5 methods of building depth video considered here, only two, this one and the next (stereo and plenoptics), are not disturbed by the sun and do not interfere with neighboring cars. At the same time, plenoptics is several times more expensive and less accurate at large distances. Things could turn out in any number of ways and forecasts are hard to make, but in this case it is worth agreeing with Elon Musk: of all 5 methods, stereo has the best prospects. And the current results are quite encouraging:

Source: Large-Scale Direct SLAM with Stereo Cameras

Interestingly, though, it seems that the strongest influence on the development of depth from stereo will come not from self-driving cars (of which only a few are being produced so far) but from devices where stereo depth maps are already being built today, namely ... That's right! Smartphones!

About three years ago, “two-eyed” smartphones boomed, and literally every brand joined in, because photos taken with two cameras differed dramatically in quality from photos taken with one, while the increase in the price of the smartphone was not that significant:

Moreover, last year the process actively went even further: “Do you have 2 cameras in your smartphone? Sucks! I have

Source: Samsung Galaxy A8 & A9

Sony's six-eyed future was mentioned in the first part. In general, multi-eyed smartphones are gaining popularity among manufacturers.

The fundamental reasons for this phenomenon are simple:

- The resolution of phone cameras is growing while the lens stays small. As a result, despite numerous tricks, the noise level rises and quality drops, especially when shooting in the dark.

- At the same time, in the second camera we can remove the so-called Bayer filter from the sensor, i.e. one camera will be black-and-white and the other color. This increases the sensitivity of the black-and-white camera by about 3 times. That is, the sensitivity of the 2 cameras together grows, roughly speaking, not 2 but 4 times (!). There are numerous nuances, but such an increase in sensitivity is clearly visible to the eye.

- Among other things, once we have a stereo pair, we can change the depth of field in software, i.e. blur the background, which benefits many photos significantly (we wrote about this in the second half here). This option quickly became extremely popular in new smartphone models.

- With more cameras, it also becomes possible to use other lenses: wider-angle (short-focus) ones and, conversely, long-focus ones, which noticeably improve quality when zooming in on objects.

- Interestingly, increasing the number of cameras brings us closer to the topic of a sparse light field, which has a host of features and advantages of its own, but that is a separate story.

- Note also that increasing the number of cameras makes it possible to increase resolution using resolution restoration methods.

In general, there are so many advantages that when people become aware of them, they start to wonder why phones have not had at least 2 cameras for a long time already.

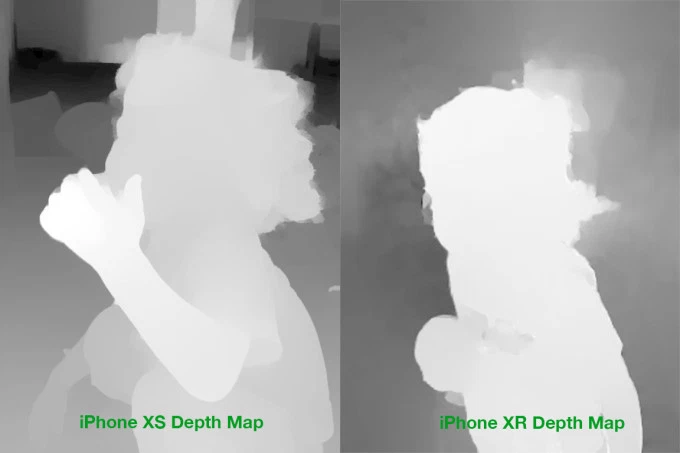

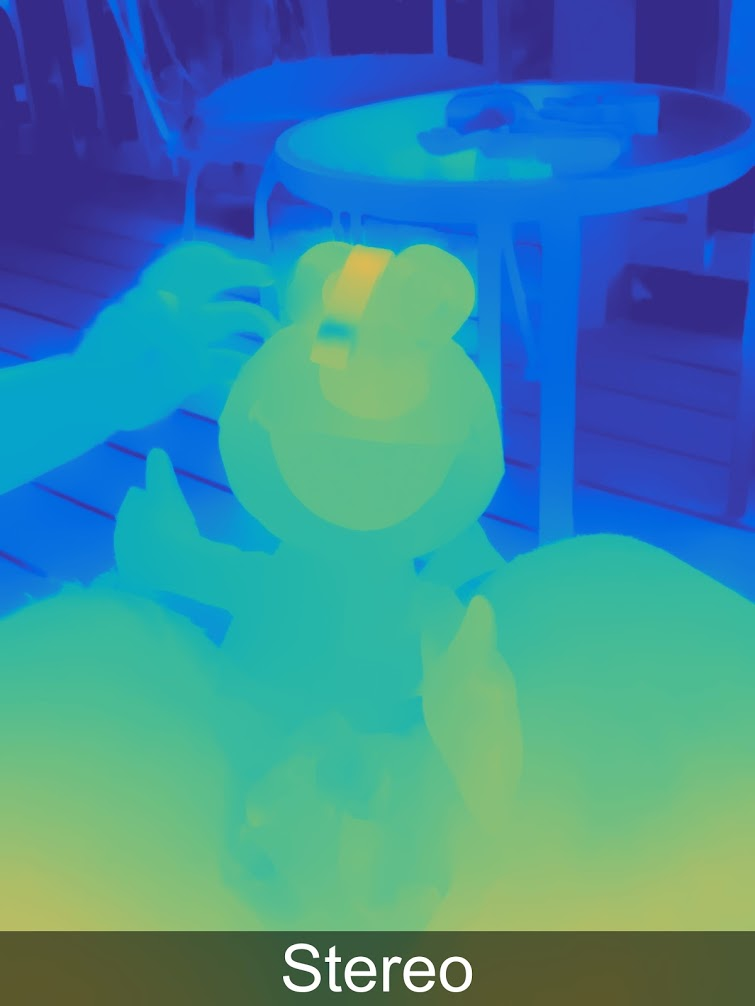

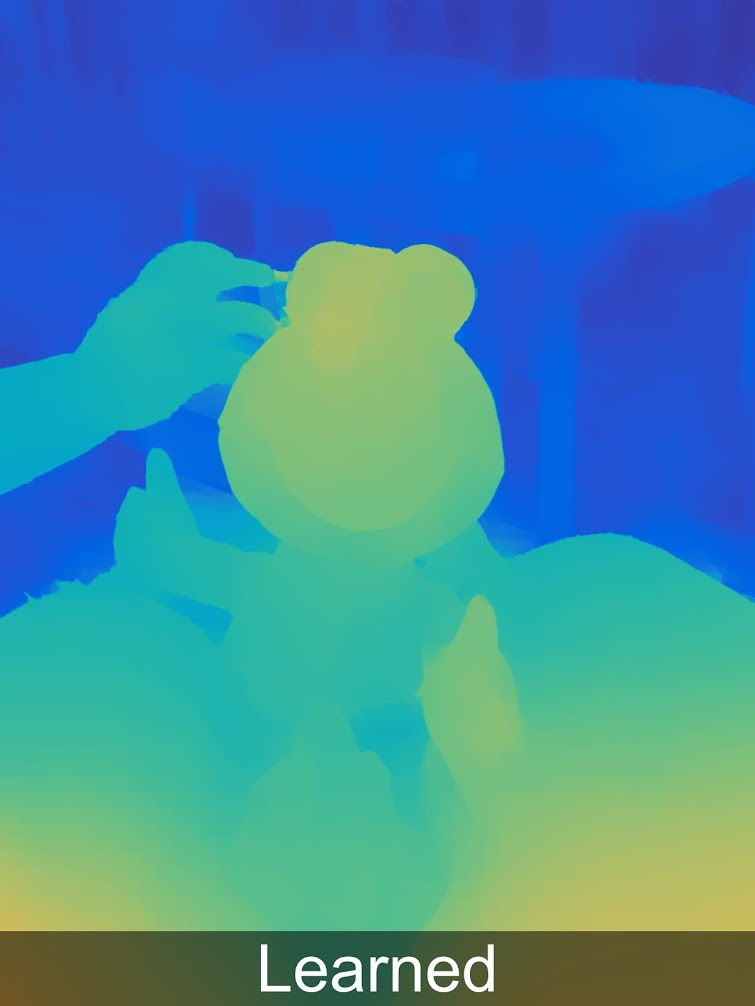

And here it turns out that not everything is so simple. To make meaningful use of additional cameras for improving the image (and not just switch to a camera with a different lens), we must build a so-called disparity map, which converts directly into a depth map. This is a very nontrivial task, and smartphones have only just become powerful enough to solve it. Even now, depth maps are often of rather dubious quality. That is, exact matching of “a pixel in the right image to a pixel in the left image” is still some way off. For example, here are real examples of depth maps for the iPhone:

Source: iPhone XS & XR depth maps comparison

Even by eye, massive problems are clearly visible in the background and at the borders, to say nothing of translucent hair. Hence the numerous problems that arise both when transferring color from the black-and-white camera to the color one and during further processing.

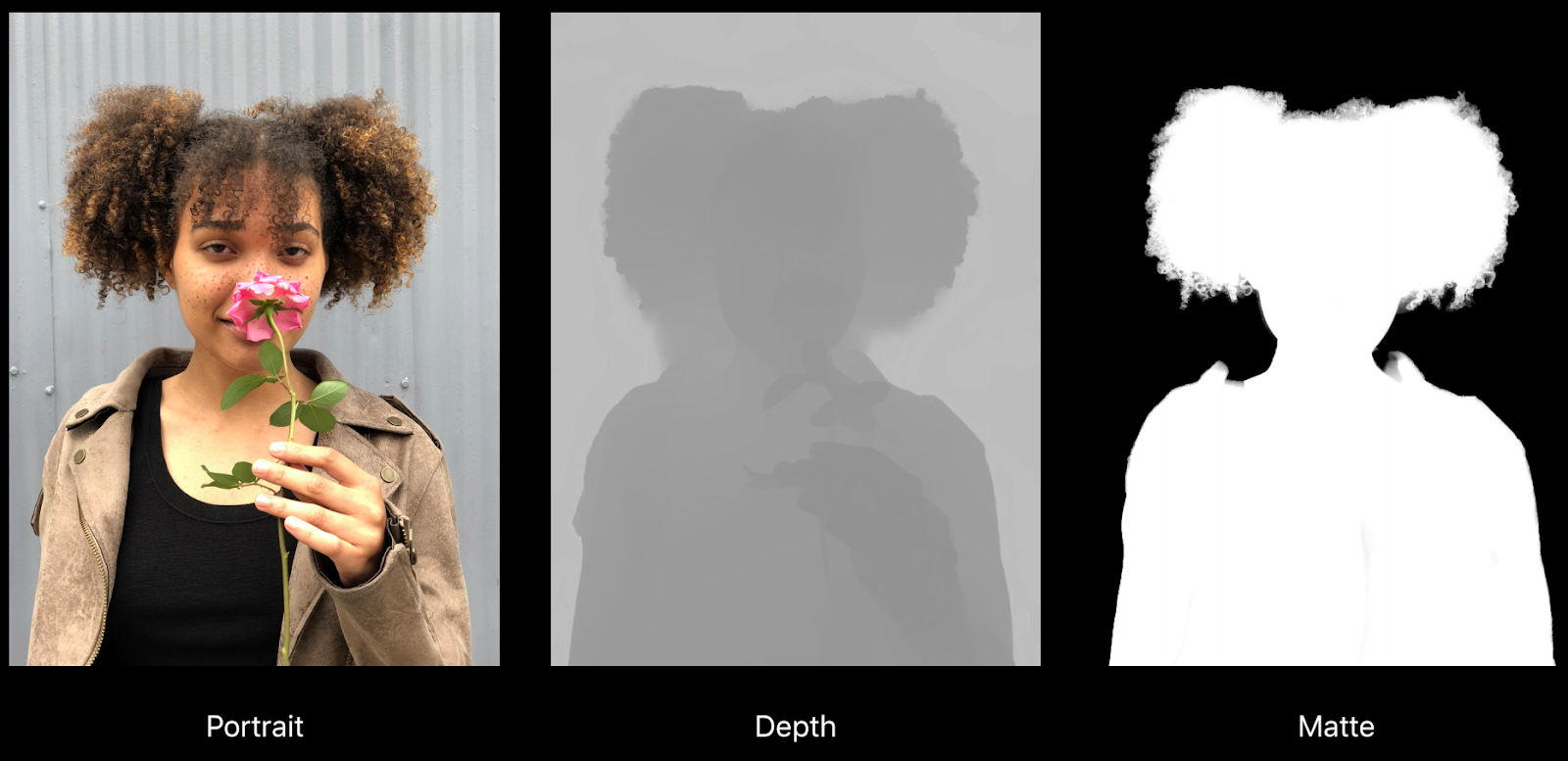

For example, here is a pretty good example from Apple's “Creating Photo and Video Effects Using Depth” talk:

Source: Creating Photo and Video Effects Using Depth, Apple, WWDC18

You can clearly see how the depth fails under the hair, and indeed on any more or less uniform background, but more importantly, in the matting map on the right the collar has ended up in the background (that is, it will be blurred). Another problem is that the real resolution of the depth and matting maps is significantly lower than the image resolution, which also affects quality during processing:

However, these are all growing pains. Whereas 4 years ago there was no talk of any serious video effects because phones simply could not handle them, today video processing with depth is demonstrated on top-end production phones (this was in the same Apple presentation):

Source: Creating Photo and Video Effects Using Depth, Apple, WWDC18

In general, multi-camera phones, and with them depth from stereo on phones, are conquering the masses without a fight:

Source: "found in these your internet"
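To make the step from disparity map to depth map mentioned above more concrete, here is a minimal sketch. It assumes an idealized, rectified stereo pair, for which depth is Z = f * B / d (focal length in pixels times baseline, divided by disparity). The focal length and the 1 cm baseline are made-up illustrative values, not the parameters of any real phone.

```python
import math

def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Depth in meters for one pixel of a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        return math.inf  # no valid match, or a point at infinity
    return focal_px * baseline_m / disparity_px

FOCAL_PX = 1000.0    # assumed focal length, in pixels
BASELINE_M = 0.01    # assumed distance between the two cameras: 1 cm

for d in (10.0, 5.0, 2.0, 1.0):
    z = disparity_to_depth(d, FOCAL_PX, BASELINE_M)
    print(f"disparity {d:4.1f} px -> depth {z:5.2f} m")
```

With these assumed numbers, an error of a single pixel at a disparity of 1 px moves the estimate from 10 m to 5 m, which hints at why a small stereo base makes far-away depth so fragile.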

Key findings:

- Depth from stereo is, in terms of hardware cost, the cheapest way to get depth, because cameras are now cheap and keep getting cheaper quickly. The difficulty is that the subsequent processing is much more resource-intensive than for other methods.

- Mobile phones cannot increase the lens diameter, while resolution is growing rapidly. As a result, using two or more cameras can significantly improve photo quality, reduce noise in low light, and increase resolution. Since today a mobile phone is often chosen for the quality of its camera, this is a very significant plus. Building a depth map comes as an inconspicuous side bonus.

- The main disadvantages of building depth from stereo:

  - As soon as the texture disappears or simply becomes less contrasting, the noise in the depth increases sharply; as a result, on flat single-colored objects the depth is often poorly determined (serious errors are possible).

  - The depth is also poorly determined on thin and small objects (“cut off” fingers or even hands, vanished poles, etc.)


- As soon as hardware becomes powerful enough to build a depth map for video, depth on smartphones will give a powerful boost to the development of AR (at some point the quality of AR applications on all new phone models, including budget ones, will suddenly become noticeably higher, and a new wave will begin). Totally unexpected!
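The texture problem listed among the disadvantages can be shown with a toy example. The sketch below is a deliberately naive one-dimensional block matcher using the sum of absolute differences (SAD); real stereo pipelines are far more elaborate, and the scanlines here are invented numbers. On a textured line the matching cost has one clear minimum, while on a flat line every candidate disparity fits equally well.

```python
def sad_costs(left, right, x, half_window, max_disparity):
    """SAD cost of matching the window around left[x] against the window
    in `right` shifted left by each candidate disparity 0..max_disparity."""
    costs = []
    for d in range(max_disparity + 1):
        cost = 0
        for k in range(-half_window, half_window + 1):
            cost += abs(left[x + k] - right[x + k - d])
        costs.append(cost)
    return costs

def best_disparities(left, right, x, half_window=1, max_disparity=3):
    """All disparities that share the minimal cost (more than one = ambiguous)."""
    costs = sad_costs(left, right, x, half_window, max_disparity)
    best = min(costs)
    return [d for d, c in enumerate(costs) if c == best]

# Textured scanline: the left view is the right view shifted by 2 pixels.
right_textured = [3, 9, 1, 7, 4, 8, 2, 6, 5, 0, 7, 3]
left_textured = [0, 0] + right_textured[:-2]

# Flat scanline: a uniform wall, identical in both views.
right_flat = [5] * 12
left_flat = [5] * 12

print(best_disparities(left_textured, right_textured, x=6))  # [2]
print(best_disparities(left_flat, right_flat, x=6))          # [0, 1, 2, 3]
```

In the flat case the matcher has no basis for preferring any disparity, so whatever it returns is effectively noise.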

The next method is less trivial and less well known, but very cool. Meet:

Method 4: Light Field Camera Depth

The topic of plenoptics (from the Latin plenus, “full”, and the Greek optikos, “visual”), or light fields, is still relatively little known to the general public, although professionals have begun to study it very closely. At many top conferences, separate sections are devoted to Light Field papers (the author was once amazed at the number of Asian researchers at the IEEE International Conference on Multimedia and Expo who work intensively on this topic).

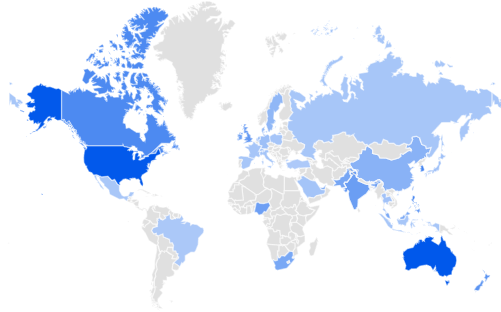

Google Trends says that the US and Australia lead in interest in Light Field, followed by Singapore, Korea, and Great Britain. Russia is in 32nd place ... Let's close the gap with India and South Africa:

Source: Google Trends

Some time ago your humble servant wrote a detailed article on Habr describing how it works and what it offers, so let's go over it briefly.

The main idea is to try to capture at each point not just the light but a two-dimensional array of light rays, making each frame four-dimensional. In practice, this is done using a microlens array:

Source: plenoptic.info (it is recommended to click and see in full resolution)

As a result, we get a lot of new capabilities, but resolution drops seriously. Having solved many complex technical problems, Lytro (bought by Google) drastically raised the resolution: in Lytro Cinema the camera sensor was brought to 755 megapixels of RAW data, and the camera looked as cumbersome as the first television cameras:

Source: NAB: New Chandelier Light

Interestingly, even professionals regularly misjudge the drop in resolution of plenoptic cameras, because they underestimate how well Super Resolution algorithms can work on them, restoring many details remarkably well from the micro-shifts of the image in the light field (note the blurry needles and the moving swing in the background):

Source: Naive, Smart and Super Resolution plenoptic frame recovery from the Adobe Technical Report "Superresolution with Plenoptic Camera 2.0"

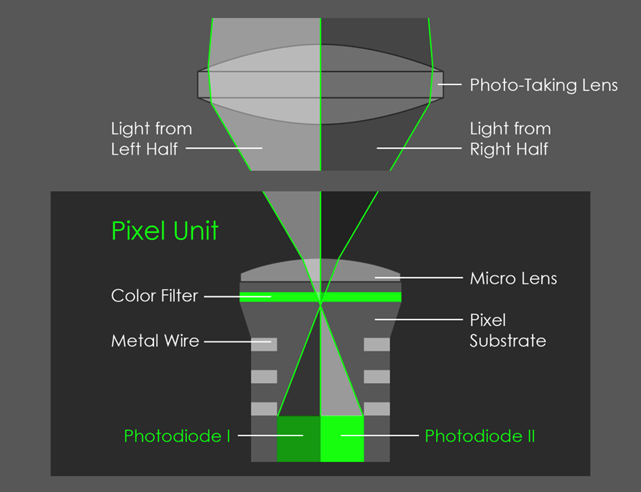

All of this would have remained of relatively theoretical interest if Google had not implemented plenoptics in the Pixel 2 by covering 2 pixels with one lens:

Source: AI Google Blog

As a result, a micro stereo pair was formed, which made it possible to MEASURE depth. To this Google, true to its new traditions, added neural networks, and the result was generally wonderful:

More depth examples in full resolution are in a special gallery.

Interestingly, Google (like Huawei and others) stores the depth in the image itself, so you can extract it from there and look at it:

Source: Google's New Secret Camera App.

And then you can turn the photo into a three-dimensional one:

Source: Google's New Secret Camera App.

You can experiment with this yourself on the website http://depthy.me, where you can upload your photo. Interestingly, the site's source code is available, i.e. you can improve the depth processing; there is plenty of room for this, since only the simplest processing algorithms are used there at the moment.

Key points:

- At one of the Google conferences it was mentioned that the lens might cover not 2 but 4 pixels. This would reduce the direct resolution of the sensor but drastically improve the depth map: first, because stereo pairs would appear in two perpendicular directions, and second, because the stereo base would increase by a factor of 1.4 (the two diagonals). That means, among other things, a marked improvement in depth accuracy at a distance.

- Plenoptics by itself (also known as computational photography) makes it possible to:

  - “Honestly” change the focus and depth of field after shooting, which is the best-known ability of plenoptic sensors.

  - Compute the shape of the aperture.

  - Compute the scene lighting.

  - Shift the shooting point somewhat, including getting a stereo (or multi-angle) frame with a single lens.

  - Compute a higher resolution, because with computationally heavy Super Resolution algorithms you can actually restore the frame.

  - Compute a transparency map for translucent borders.

  - And finally, build a depth map, which is what matters today.


- Potentially, once a phone's main camera can build a good-quality depth map in real time while shooting, this will cause a revolution. It depends largely on the computing power on board (which is exactly what currently prevents building better, higher-resolution depth maps in real time). This is most interesting for AR, of course, but there will also be many new ways to alter photos.
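The factor of 1.4 for the stereo base in the 4-pixel case is just the diagonal of a square, and its effect on accuracy can be estimated with the common first-order approximation for stereo depth error, dZ = Z^2 * dd / (f * B). The sketch below uses that approximation; the focal length, base and disparity error are hypothetical numbers chosen only to show the ratio.

```python
import math

def depth_error(depth_m, focal_px, baseline_m, disparity_error_px):
    """First-order depth uncertainty of a stereo pair: dZ = Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)

# Sub-pixels side by side versus sub-pixels on the diagonal of a 2x2 block.
side = 1.0                          # spacing of neighbouring sub-pixels, arbitrary units
diagonal = math.hypot(side, side)   # spacing along the diagonal
base_gain = diagonal / side         # about 1.414

# Hypothetical values, for illustration only.
FOCAL_PX = 3000.0
BASE_M = 0.001          # assumed 1 mm effective stereo base
DISPARITY_ERR_PX = 0.1
DEPTH_M = 2.0

err_side = depth_error(DEPTH_M, FOCAL_PX, BASE_M, DISPARITY_ERR_PX)
err_diag = depth_error(DEPTH_M, FOCAL_PX, BASE_M * base_gain, DISPARITY_ERR_PX)

print(f"base gain: {base_gain:.3f}")
print(f"depth error shrinks by a factor of {err_side / err_diag:.3f}")
```

Under this approximation the error at a given distance scales inversely with the base, so a base that is about 1.4 times longer gives an error about 1.4 times smaller, whatever the other parameters are.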

And finally, we come to the last depth measurement method in this review.

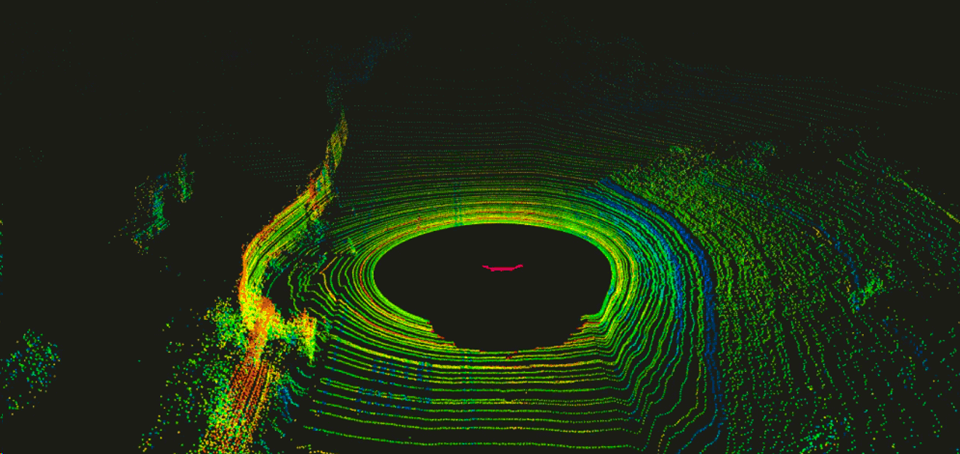

Method 5: Lidar-based cameras

Laser rangefinders in general are firmly established in our lives, are inexpensive, and give high accuracy. The first lidars (from LIDaR - Light Identification Detection and Ranging), built as bundles of such devices rotating around a horizontal axis, were first used by the military and then tested in car autopilots. They performed quite well there, which caused a strong surge of investment in this area. Initially the lidars rotated, producing a picture like this several times a second:

Source: LIDAR: The Key Self-Driving Car Sensor

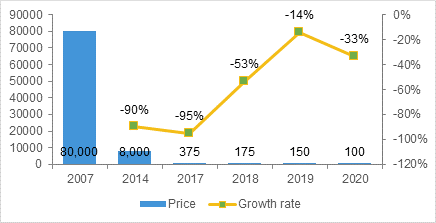

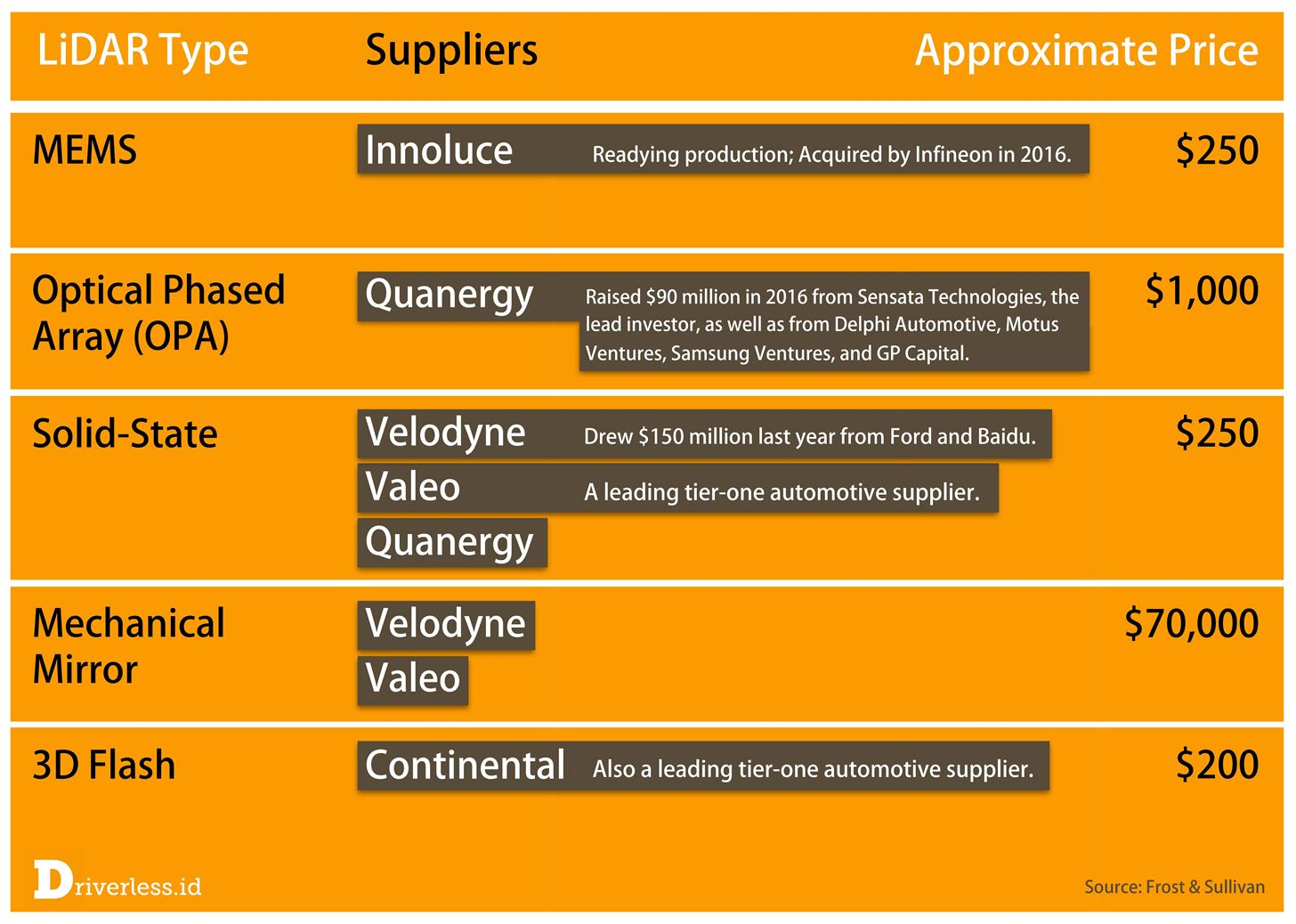

This was inconvenient, unreliable because of the moving parts, and rather expensive. Tony Seba presents interesting data in his lectures on how fast the cost of lidars is falling. Whereas for the first autonomous Google cars the lidars cost 70 thousand dollars (for example, the specialized HDL-64E used on the first cars cost 75 thousand):

Source (this and the next image): From Parking to Parks – Bellevue & the Disruption of Transportation

With mass production of next-generation models, manufacturers are promising to bring the price well below $1000:

One can argue with Tony's example (a startup's promise is not the final price), but it is indisputable that this area is booming: production runs are growing rapidly, completely new products are emerging, and prices are falling across the board. A little later, in 2017, the forecast for falling prices looked like this (and the moment of truth will come when lidars start being installed in cars in large quantities):

Source: LiDAR Completes Sensing Triumvirate

In particular, relatively recently several manufacturers have introduced so-called Solid State Lidars, which have no moving parts at all and therefore show radically higher reliability, especially under vibration, lower cost, and so on. I recommend watching this video, which explains how they work very clearly in 84 seconds:

Source: Solid State Lidar Sensor

What matters for us is that a Solid State Lidar produces a rectangular picture, i.e. in essence it starts working like a “regular” depth camera:

Source: Innoviz Envisions Mass Produced Self-Driving Cars With Solid State LiDAR

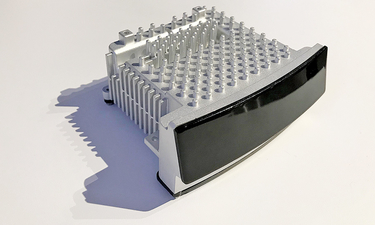

The example above gives video of approximately 1024x256 at 25 FPS with 12 bits per component. Such lidars will be mounted behind the front grille (since the device heats up considerably):

Source: Solid-State LiDAR Magna Electronics
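For a sense of scale, the 1024x256, 25 FPS, 12-bit figures quoted above translate into the following raw data rate. This is back-of-the-envelope arithmetic that assumes a single 12-bit depth component per pixel and no compression or protocol overhead; the actual output format of the device is not stated in the text.

```python
WIDTH, HEIGHT = 1024, 256
FPS = 25
BITS_PER_SAMPLE = 12   # assumption: one 12-bit depth component per pixel

points_per_frame = WIDTH * HEIGHT
points_per_second = points_per_frame * FPS
raw_bits_per_second = points_per_second * BITS_PER_SAMPLE
raw_megabytes_per_second = raw_bits_per_second / 8 / 1e6

print(f"points per frame:  {points_per_frame}")              # 262144
print(f"points per second: {points_per_second}")             # 6553600
print(f"raw data rate:     {raw_megabytes_per_second:.2f} MB/s")  # 9.83 MB/s
```

Roughly 6.5 million depth measurements per second is modest next to an RGB video stream, which is one reason joint processing with a regular camera is attractive.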

As usual, the Chinese, who today rank first in the world in electric car production and are clearly aiming for first place in autonomous cars, are making themselves noticed:

Source: Alibaba, RoboSense launch unmanned vehicle using solid-state LIDAR

In particular, their experiments with non-square depth “pixels” are interesting: with joint processing together with an RGB camera, the resolution can be raised, which makes this a rather interesting compromise (“square” pixels matter, in fact, only to humans):

Source: MEMS Lidar for Driverless Vehicles Takes Another Big Step

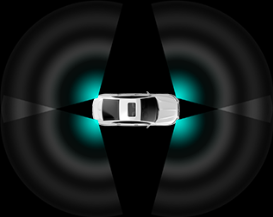

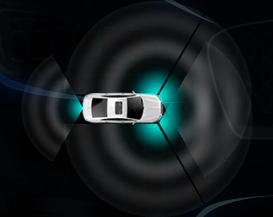

Lidars are mounted in different configurations, depending on the cost of the kit and the power of the on-board system that has to process all this data. The overall characteristics of the autopilot change accordingly. As a result, more expensive cars will cope better with dusty roads and will more easily “dodge” cars entering an intersection from the side, while cheap ones will only help reduce the number of (numerous) stupid accidents in traffic jams:

Source: Description RoboSense RS-LiDAR-M1

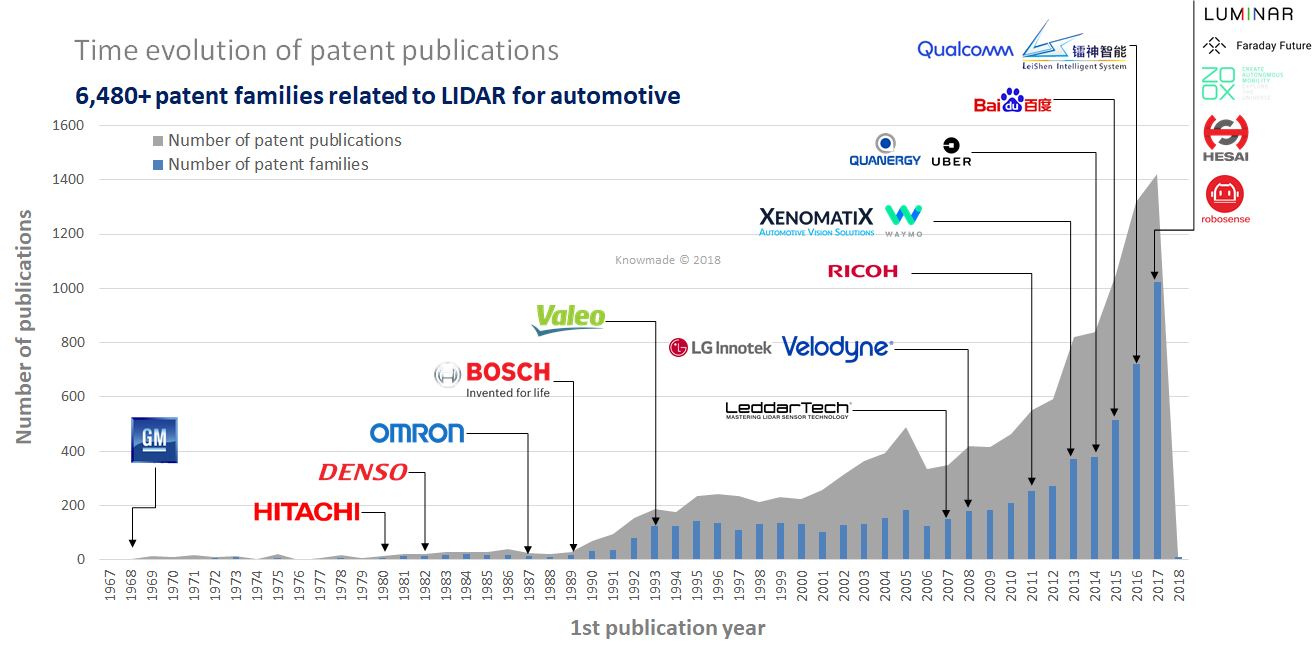

Note that, besides Solid-State, a couple of other directions in lidar development also promise low prices. Predicting anything here is a thankless task, since too much will depend not on the potential engineering characteristics of a technology but, for example, on patents. It is just that several companies are already working on Solid-State, so it looks the most promising. But it would be unfair not to mention the rest:

Source: This 2017 LiDAR Bottleneck Is Causing a Modern-Day Gold Rush

Speaking of lidars as cameras, it is worth mentioning another important feature that is essential when using solid-state lidars. By their nature they work like cameras with the already forgotten rolling shutter, which introduces noticeable distortions when shooting moving objects:

Source: What is the difference between a global shutter and a rolling shutter

Considering that on the road, especially on the autobahn at 150 km/h, everything changes quite quickly, this feature of lidars will strongly distort objects, including those rushing toward us ... including in depth ... Note that the two previous methods have no such problem.

Source: good animation on wikipedia, which shows the distortion of the car

This feature, coupled with the low FPS, requires adapting the processing algorithms, but in any case, thanks to their high accuracy, including at large distances, lidars have no real competitors.
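How large the rolling-shutter distortion can get is easy to estimate. The sketch below takes the 150 km/h autobahn speed and the 25 FPS figure from the lidar example earlier in the text, and assumes, as a worst case, that scanning one frame takes the whole frame period; the real scan time of any particular lidar may be shorter.

```python
def kmh_to_ms(speed_kmh):
    """Convert km/h to m/s."""
    return speed_kmh * 1000.0 / 3600.0

def displacement_during_scan(relative_speed_kmh, scan_time_s):
    """Distance an object moves relative to the sensor while one frame is scanned."""
    return kmh_to_ms(relative_speed_kmh) * scan_time_s

FPS = 25
scan_time_s = 1.0 / FPS   # assumption: the scan spans the whole frame period

# A stationary obstacle seen from a car doing 150 km/h.
static_shift_m = displacement_during_scan(150, scan_time_s)

# An oncoming car, both cars doing 150 km/h (300 km/h closing speed).
oncoming_shift_m = displacement_during_scan(300, scan_time_s)

print(f"stationary object: {static_shift_m:.2f} m per frame")   # 1.67 m
print(f"oncoming car:      {oncoming_shift_m:.2f} m per frame")  # 3.33 m
```

So between the first and the last scanned point of a single frame, the measured depth of the same object can differ by meters, which is what the processing algorithms have to compensate for.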

Interestingly, by their nature lidars work very well on flat areas and worse at borders, while stereo sensors do poorly on flat areas and relatively well at borders. In addition, lidars give a relatively low FPS, while cameras give a much higher one. As a result, they essentially complement each other, which was used, among other things, in the Lytro Cinema cameras (in the photo, at the top of the camera is the illuminated plenoptic lens, giving up to 300 FPS, and below is

Source: Lytro poised to forever change filmmaking: debuts Cinema prototype and short film at NAB

If two depth sensors have already been combined in a movie camera, then in other devices (from smartphones to cars) we can expect widespread adoption of hybrid sensors that provide maximum quality.
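One generic way to exploit such complementary sensors is to weight each depth estimate by how much it can be trusted at a given pixel. The sketch below uses plain inverse-variance weighting; it illustrates the idea only and is not a description of how Lytro Cinema or any other product fuses its sensors. All depth values and variances are invented.

```python
def fuse_depth(z_lidar, var_lidar, z_stereo, var_stereo):
    """Inverse-variance weighted average of two depth estimates for one pixel.

    Returns the fused depth and the variance of the fused estimate.
    """
    w_lidar = 1.0 / var_lidar
    w_stereo = 1.0 / var_stereo
    fused = (w_lidar * z_lidar + w_stereo * z_stereo) / (w_lidar + w_stereo)
    fused_var = 1.0 / (w_lidar + w_stereo)
    return fused, fused_var

# Flat wall: the lidar is confident, stereo is noisy (no texture to match).
flat_z, flat_var = fuse_depth(z_lidar=10.0, var_lidar=0.01,
                              z_stereo=12.0, var_stereo=1.0)

# Object border: the lidar is unreliable, stereo is comparatively good.
edge_z, edge_var = fuse_depth(z_lidar=10.0, var_lidar=1.0,
                              z_stereo=8.0, var_stereo=0.04)

print(f"flat wall: {flat_z:.2f} m (variance {flat_var:.4f})")
print(f"border:    {edge_z:.2f} m (variance {edge_var:.4f})")
```

In each case the result stays close to the sensor that is reliable in that region, and the fused variance is lower than either input variance. The hard part in practice is estimating those per-pixel variances.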

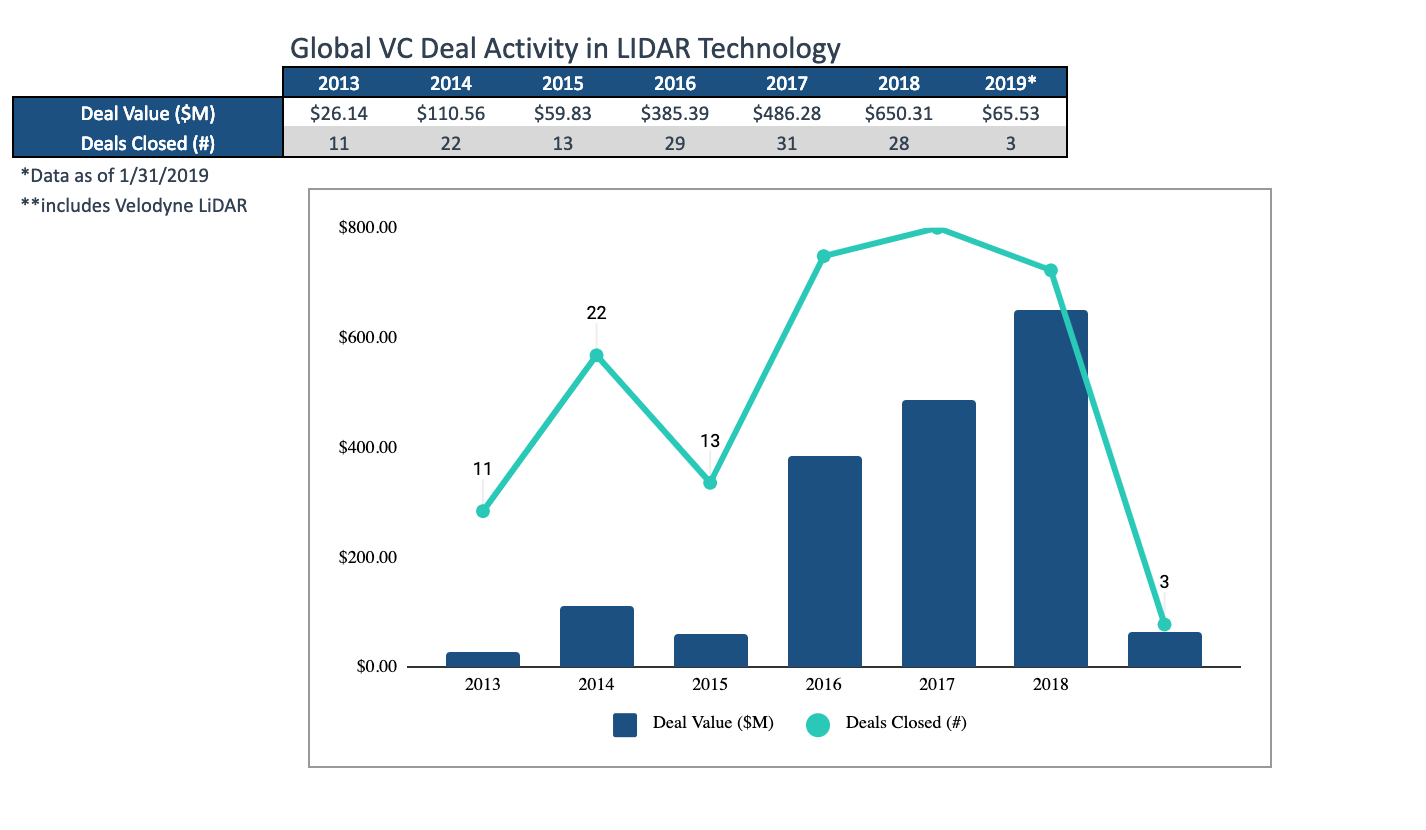

In a sense, lidars are still largely about investments, which over the previous three years amounted to about 1.5 billion dollars (since this market is estimated at 10 billion in 6 years' time, and the graph below clearly shows how the average deal size has grown):

Source: An interesting March review of trends in the lidar market from Techcrunch

The development cycle of an innovative product, even in highly competitive markets, is 1.5-2 years, so we will soon see extremely interesting products. As prototypes, they already exist.

Key points:

- Pros of lidars:

  - the highest accuracy, including the best among all methods at large distances,

  - they work best on flat surfaces, where stereo stops working,

  - they are almost unaffected by sunlight.


- Cons of lidars:

  - high power consumption,

  - relatively low frame rate,

  - rolling shutter and the need to compensate for it during processing,

  - lidars working side by side interfere with each other, which is not so easy to compensate for.


- And nevertheless, we are seeing how, in literally 2 years, a new type of depth camera has appeared with excellent prospects and a huge potential market. In the coming years we can expect a serious drop in prices, including the emergence of small general-purpose lidars for industrial robots.

Processing video with depth

Let's briefly consider why depth is not so easy to process.

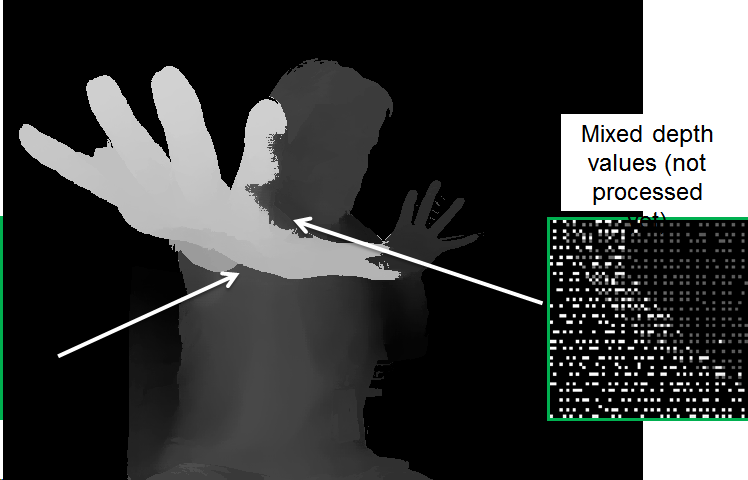

Below is an example of raw depth data from the very nice Intel RealSense line of cameras. Using the fingers in this video, it is relatively easy to see how the camera and the processing algorithms behave:

Source: here and below, the author's materials

The typical problems of depth cameras are clearly visible:

- The data is unstable in value: the pixels are “noisy” in depth.

- Along the borders, over a fairly wide band, the data is also very noisy (for this reason, depth values along borders are often simply masked out so that they do not interfere with further processing).

- Many “wandering” pixels can be seen around the head (apparently the nearby wall behind it, plus the hair, starts to have an effect).

But that is not all. If we take a closer look at the data, we can see that in some places farther data “shows through” nearer data:

This is because, for convenience of processing, the depth image is aligned to the image from the RGB camera, and since the borders are not determined precisely, such “overlaps” have to be handled specially; otherwise problems arise, such as these “holes” in the depth on the arm:

Processing brings many problems of its own, for example:

- "Leaking" of depth from one object to another.

- Instability of depth over time.

- Aliasing (a "staircase" effect), when suppressing noise in depth leads to quantization of the depth values:

Nevertheless, it is clear that the picture has improved greatly. Note that slower algorithms can still significantly improve the quality.
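As a small illustration of dealing with the noisy and “dropped” depth pixels described above, here is a sketch of a per-pixel temporal median that ignores invalid readings. It is one of the simplest possible filters and is not the algorithm used for the results shown here. Treating 0 as the marker for “no depth” and the millimeter values are assumptions made for the example.

```python
from statistics import median

def temporal_median(history, invalid=0):
    """Median of the valid depth readings of one pixel over recent frames.

    Readings equal to `invalid` are skipped; if no valid reading exists,
    `invalid` is returned so the pixel stays marked as unknown.
    """
    valid = [z for z in history if z != invalid]
    return median(valid) if valid else invalid

# Depth of one pixel in millimeters over five frames: one dropped reading (0)
# and one outlier that "leaked" in from the background (1875).
pixel_history_mm = [1502, 1498, 0, 1875, 1501]

print(temporal_median(pixel_history_mm))   # 1501.5
print(temporal_median([0, 0, 0]))          # 0
```

The median suppresses the outlier without averaging it in, at the cost of lag on moving objects, which is one reason real depth video processing has to be considerably smarter.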

In general, depth video processing is a separate, huge topic that few people work on so far, but which is rapidly gaining popularity. The previous market that it completely changed was the conversion of films from 2D to 3D. The results of manually building stereo from 2D video improved so quickly, were so much more predictable, and caused so much less headache and expense that in 3D cinema, after almost 100 years of shooting (!), people fairly quickly stopped shooting in stereo almost entirely and switched to conversion. A separate profession even appeared, the depth artist, and old-school stereographers were shocked (“ho-ow co-ome?”). The key role in this revolution was played by the rapid improvement of video processing algorithms. Perhaps someday I will find the time to write a separate series of articles about this, since I have plenty of material.

Roughly the same can be expected in the near future for the depth cameras of robots, smartphones and autonomous cars.

Wake up! Revolution is coming!

Instead of conclusion

A couple of years ago, your humble servant compiled a comparison of different approaches to obtaining video with depth into a single table. Overall, the situation has not changed since then. The division here is rather arbitrary, since manufacturers are well aware of the weaknesses of their technologies and try to compensate for them (sometimes very successfully). Nevertheless, in general it can be used to compare the different approaches:

Source: materials of the author

Let's run through the table:

- In resolution, depth from stereo is in the lead, but everything depends heavily on the scene (if it is uniform, things go badly).

- In accuracy, lidars are beyond competition; the situation is worse for plenoptics, by the way.

- — «» ToF , .

- FPS ToF , , ( 300 fps). .

- .

- — ToF .

It is important to understand that the situation differs across specific markets. For example, the plenoptic approach looks like it is clearly lagging behind. However, if it turns out to be the best for AR (and this will become clear in the next 3-4 years), it will take its rightful place in every phone and tablet. It is also clear that solid-state lidars look best of all (the “greenest” in the table), but this is also the youngest technology, and that brings its own risks, first of all patent risks (the area has a huge number of fresh patents that will not expire soon):

Source: LiDAR: An Overview of the Patent Landscape

Nevertheless, let's not forget: the market for smartphone depth sensors is projected at $6 billion next year, and it cannot be less than 4, because last year it was already more than 3 billion. This is a huge amount of money that will go not only to returns on investment (for the most successful investors) but also to developing new generations of sensors. Lidars do not show similar growth yet, but by all indications it will begin literally within the next 3 years. And then comes the usual avalanche-like exponential process, which has happened more than once already.

The near future of depth cameras looks extremely interesting!

Stay tuned!

Part 1

Acknowledgments

:

- . .. ,

- Google, Apple Intel , ,

- , , ,

- , , , , !

Source: https://habr.com/ru/post/458458/
