Google open-sources its robots.txt parser

Today, Google announced a draft RFC standard for the Robots Exclusion Protocol (REP) and simultaneously released its robots.txt parser under the Apache License 2.0. Until now there was no official standard for the REP and robots.txt (this was the closest thing to one), which left developers and users free to interpret the protocol in their own way. The company's initiative aims to reduce the differences between implementations.

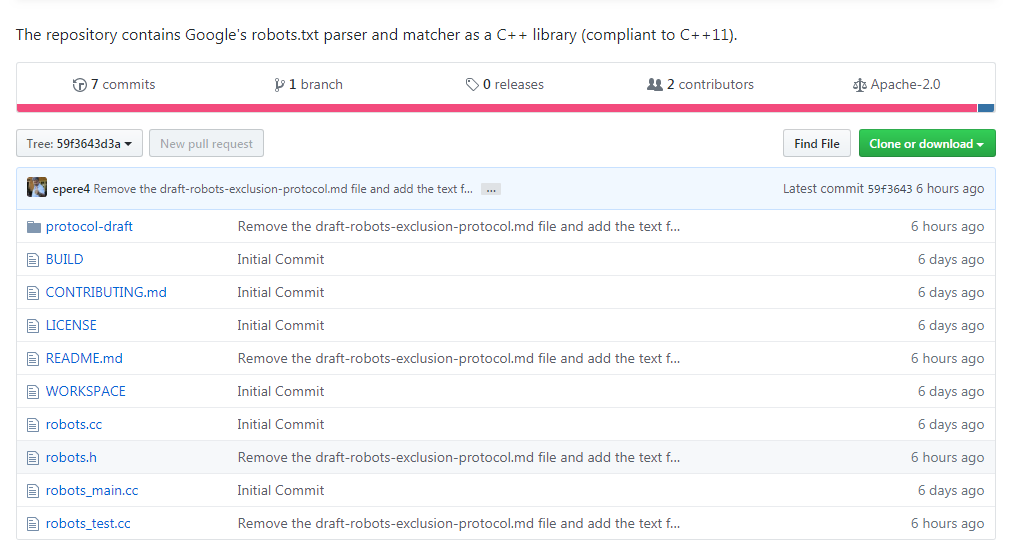

A draft of the standard can be viewed on the IETF website, and the repository is available on GitHub at https://github.com/google/robotstxt.


The parser is the same code that Google uses in its production systems (apart from minor edits, such as cleaned-up header files that are used only inside the company), so robots.txt files are parsed exactly the way Googlebot parses them, including how it handles Unicode characters in patterns. The parser is written in C++ and essentially consists of two files. You will need a C++11-compatible compiler, although the library code dates back to the 1990s, so you will find "raw" pointers and strpbrk in it. Bazel is the recommended build tool (CMake support is planned for the near future).
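To give a feel for the older C style mentioned above, here is a minimal sketch of splitting a robots.txt line into a key and a value with raw pointers and `std::strpbrk`. It is not taken from Google's repository: the names `KeyValue`, `Trim`, and `SplitRobotsLine` are hypothetical, and the handling of `#` comments is an assumption made for illustration.

```cpp
#include <cassert>
#include <cstring>
#include <string>

// Hypothetical result type for this sketch (not part of Google's library).
struct KeyValue {
  std::string key;
  std::string value;
  bool ok;  // false when the line has no ':' before any '#' comment
};

// Trims ASCII spaces and tabs from both ends of the range [begin, end).
static std::string Trim(const char* begin, const char* end) {
  while (begin < end && (*begin == ' ' || *begin == '\t')) ++begin;
  while (end > begin && (end[-1] == ' ' || end[-1] == '\t')) --end;
  return std::string(begin, end);
}

// Splits a line such as "Disallow: /private # note" into key and value.
KeyValue SplitRobotsLine(const char* line) {
  assert(line != nullptr);
  // strpbrk returns a pointer to the first character that belongs to the
  // given set, or nullptr when none of them occurs in the string.
  const char* hit = std::strpbrk(line, ":#");
  if (hit == nullptr || *hit == '#') {
    return KeyValue{"", "", false};  // no separator, or the line is a comment
  }
  const char* sep = hit;
  // The value ends at a comment marker, a line break, or the end of the string.
  const char* stop = std::strpbrk(sep + 1, "#\r\n");
  const char* end = stop ? stop : sep + 1 + std::strlen(sep + 1);
  return KeyValue{Trim(line, sep), Trim(sep + 1, end), true};
}
```

Calling `SplitRobotsLine("Disallow: /private # staging")` yields the key `Disallow` and the value `/private`, while a line that starts with `#` is reported as having no key. Only the first `:` is treated as the separator, so a value such as a full URL keeps its own colons.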

The idea of robots.txt and its standard belongs to Martijn Koster, who created it in 1994. According to legend, the trigger was a search spider written by Charles Stross, which "took down" Koster's server in what amounted to a DoS attack. Others picked up the idea, and it quickly became the de facto standard for those involved in search engine development. But those who wanted to parse robots.txt sometimes had to reverse-engineer Googlebot; among them was the company Blekko, which wrote its own Perl parser for its search engine.

The parser has its amusing moments: for example, look at how much work went into processing disallow.
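One plausible reading of that remark is that the directive name is matched leniently, so that misspelled variants still count. The following is a minimal sketch of such a tolerant matcher, not Google's implementation: the function names are hypothetical, and the list of misspellings is illustrative and should be checked against the repository before relying on it.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>

// Case-insensitive (ASCII) check that `text` starts with `prefix`.
static bool StartsWithIgnoreCase(const char* text, const char* prefix) {
  for (; *prefix != '\0'; ++text, ++prefix) {
    if (*text == '\0') return false;  // text is shorter than prefix
    if (std::tolower(static_cast<unsigned char>(*text)) !=
        std::tolower(static_cast<unsigned char>(*prefix))) {
      return false;
    }
  }
  return true;
}

// Hypothetical tolerant matcher: accepts "disallow" plus a few common
// misspellings. The spelling list is an assumption made for this sketch.
bool LooksLikeDisallow(const char* key) {
  assert(key != nullptr);
  static const char* const kSpellings[] = {
      "disallow", "dissallow", "dissalow", "disalow", "diasllow", "disallaw"};
  const std::size_t count = sizeof(kSpellings) / sizeof(kSpellings[0]);
  for (std::size_t i = 0; i < count; ++i) {
    if (StartsWithIgnoreCase(key, kSpellings[i])) return true;
  }
  return false;
}
```

With this sketch, `LooksLikeDisallow("Dissalow")` is accepted while `LooksLikeDisallow("allow")` is not. Prefix matching keeps the check cheap, at the cost of also accepting keys with trailing junk after the directive name.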

Source: https://habr.com/ru/post/458428/
