Deep ranking for comparing two images

Hi, Habr! Here is my translation of the article “Image Similarity using Deep Ranking” by Akarsh Zingade.

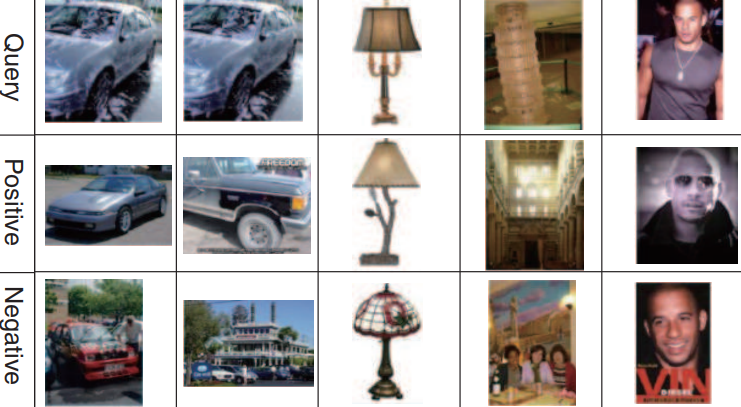

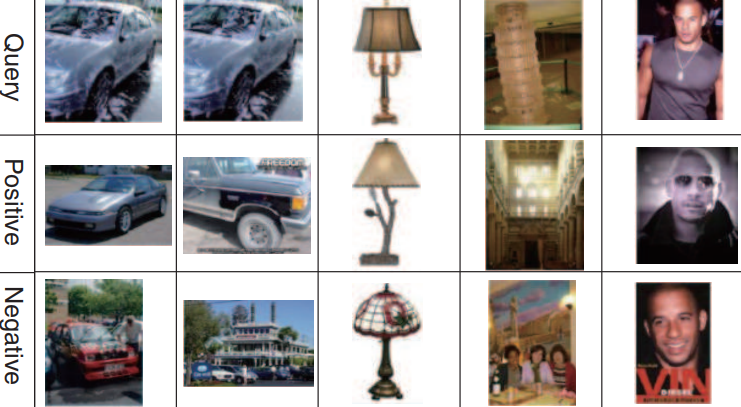

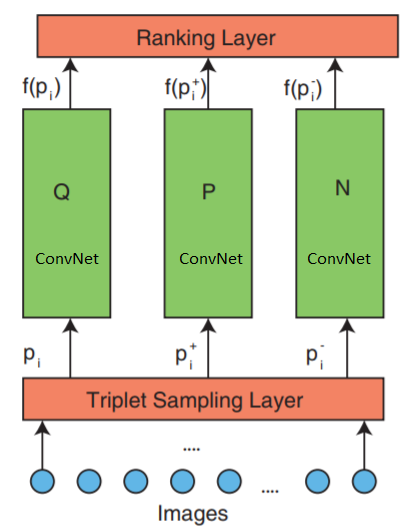

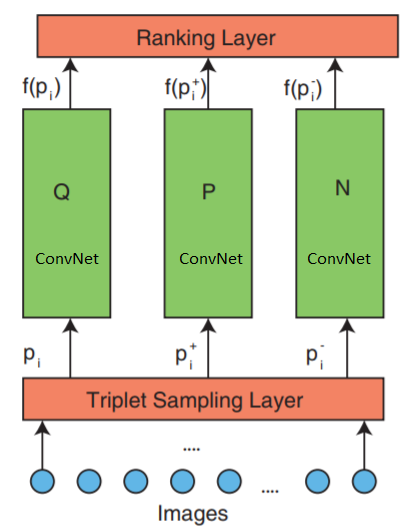

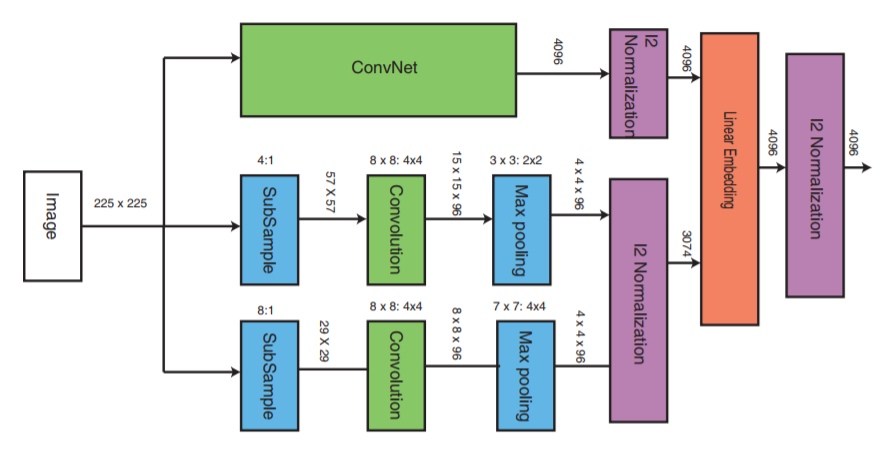

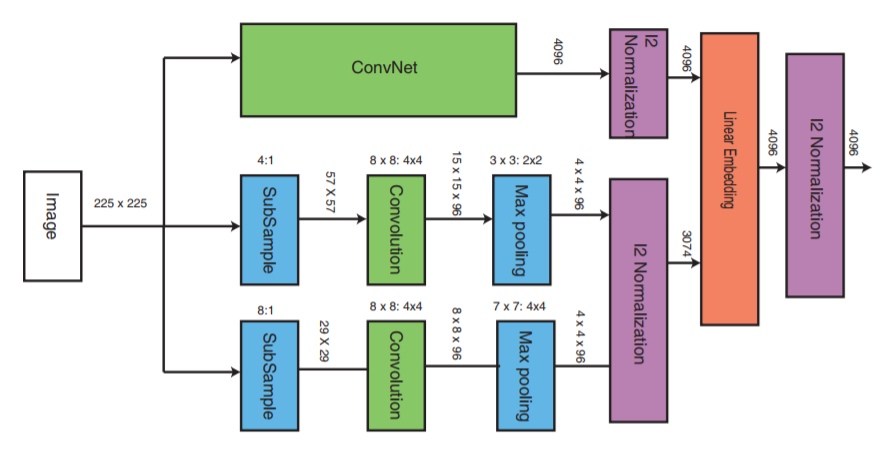

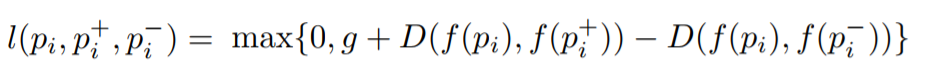

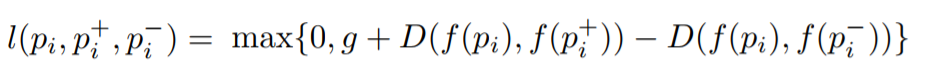

Deep Ranking Algorithm

The notion of "similarity of two images" has no single established definition, so let's define it at least for the purposes of this article.

The similarity of two images is the result of comparing them according to certain criteria. Its quantitative measure expresses the degree of similarity between the intensity patterns of the two images. A similarity measure is applied to features that describe the images. Commonly used measures include the Hamming distance, the Euclidean distance and the Manhattan distance.
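The three distances named above can be illustrated on small feature vectors. This is a minimal sketch in plain Python; the vectors `u` and `v` are made-up values for illustration and do not come from real images.

```python
import math

def hamming(a, b):
    """Number of positions at which two equal-length sequences differ."""
    return sum(x != y for x, y in zip(a, b))

def euclidean(a, b):
    """Straight-line (L2) distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan(a, b):
    """Sum of absolute coordinate differences (L1 distance)."""
    return sum(abs(x - y) for x, y in zip(a, b))

# Hypothetical feature vectors, chosen so the results are easy to check by hand.
u = [1.0, 0.0, 2.0]
v = [1.0, 3.0, 6.0]

print(hamming(u, v))    # 2
print(euclidean(u, v))  # 5.0
print(manhattan(u, v))  # 7.0
```

In practice the Hamming distance is mostly used on binary codes, while the Euclidean and Manhattan distances are used on real-valued features.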

Deep Ranking learns fine-grained image similarity, characterizing the fine-grained similarity relationship between images with a set of triplets.

What is a triplet?

A triplet contains a query image, a positive image and a negative image, where the positive image is more similar to the query image than the negative image is.

An example of a set of triplets:

The first, second and third rows correspond to the query, positive and negative images, respectively. The images in the second row (positive images) are more similar to the query images than those in the third row (negative images).

Deep Ranking Network Architecture

The network consists of three parts: triplet sampling, the ConvNet and the ranking layer.

The network takes image triplets as input. Each triplet contains a query image $p_i$, a positive image $p_i^+$ and a negative image $p_i^-$, which are fed independently into three identical deep neural networks.

The ranking layer at the top evaluates the triplet loss function. The error is then propagated back to the lower layers in order to minimize the loss.

Let's now take a closer look at the middle layer:

The ConvNet can be any deep neural network (this article uses an implementation of the VGG16 convolutional neural network). It contains convolutional layers, max-pooling layers, local normalization layers and fully connected layers.

The other two parts receive down-sampled images and apply a convolution stage followed by max pooling. The outputs of the three parts are then normalized and finally merged by a linear layer, followed by another normalization.
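The merge step can be sketched in plain Python as follows. This only illustrates the order of operations described above (normalize each part, concatenate, apply a linear layer, normalize again). The function names, the tiny vector sizes and the weight matrix are assumptions made for the example, not values from the article.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit Euclidean length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)

def merge_parts(convnet_out, shallow_a, shallow_b, weights):
    """Normalize the three parts, concatenate them, apply a linear layer, normalize again."""
    merged = l2_normalize(convnet_out) + l2_normalize(shallow_a) + l2_normalize(shallow_b)
    # Linear layer without bias: one output value per row of the weight matrix.
    projected = [sum(w * x for w, x in zip(row, merged)) for row in weights]
    return l2_normalize(projected)

# Hypothetical outputs of the three parts (real embeddings are much larger).
convnet_out = [3.0, 4.0]
shallow_a = [0.0, 2.0]
shallow_b = [5.0, 0.0]
# Hypothetical 2x6 weight matrix of the linear layer.
weights = [
    [1.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0, 0.0, 0.0],
]

embedding = merge_parts(convnet_out, shallow_a, shallow_b, weights)
print(len(embedding))
print(math.sqrt(sum(x * x for x in embedding)))
```

Because of the final normalization, every image embedding has unit length, so distances between embeddings are comparable across images.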

Triplet formation

There are several ways to build the triplet file, for example by relying on expert judgment. This article uses the following algorithm:

- Each image in a class serves as a query image.

- Every other image in the same class forms a positive image for that query. The number of positive images per query image can be limited.

- A negative image is randomly selected from any class other than the query image's class.
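The three rules above can be sketched as a small sampling function. This is a minimal illustration, not the article's own code: the function name `sample_triplets`, the `max_positives` parameter, the fixed seed and the file names are all assumptions introduced for the example.

```python
import random

def sample_triplets(images_by_class, max_positives=None, seed=0):
    """Return (query, positive, negative) tuples built from a class -> images mapping."""
    rng = random.Random(seed)
    triplets = []
    for cls, images in images_by_class.items():
        # Candidate negatives: every image that belongs to any other class.
        negatives = [img for other, imgs in images_by_class.items()
                     if other != cls for img in imgs]
        if not negatives:
            continue  # with a single class there is nothing to use as a negative
        for query in images:
            # Every other image of the same class is a positive for this query.
            positives = [img for img in images if img != query]
            if max_positives is not None:
                positives = positives[:max_positives]  # optional cap per query
            for positive in positives:
                triplets.append((query, positive, rng.choice(negatives)))
    return triplets

# Hypothetical dataset: file names grouped by class label.
dataset = {
    "cat": ["cat1.jpg", "cat2.jpg", "cat3.jpg"],
    "dog": ["dog1.jpg", "dog2.jpg"],
}

triplets = sample_triplets(dataset, max_positives=1)
for triplet in triplets:
    print(triplet)
```

Capping the positives by taking the first few is only one possible choice; they could just as well be drawn at random.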

Triplet loss function

The goal is to learn a function that assigns a small distance to similar images and a large distance to dissimilar ones. This can be expressed as:

$$ l(p_i, p_i^+, p_i^-) = \max\{0,\; g + D(f(p_i), f(p_i^+)) - D(f(p_i), f(p_i^-))\} $$

Here $l$ is the hinge loss for a triplet, $g$ is the gap parameter that regulates the margin between the distances of the two image pairs $(p_i, p_i^+)$ and $(p_i, p_i^-)$, $f$ is the embedding function that maps an image to a vector, $p_i$ is the query image, $p_i^+$ is the positive image, $p_i^-$ is the negative image, and $D$ is the Euclidean distance between two points in Euclidean space.
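A small numeric example may help show how the gap parameter behaves. The sketch below assumes the hinge form max(0, g + D(q, p) - D(q, n)), which matches the definitions of l, g, f and D given above. The two-dimensional embeddings are made-up values chosen so that the distances are easy to verify by hand.

```python
import math

def euclidean(a, b):
    """Euclidean distance D between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def triplet_loss(f_q, f_p, f_n, g=1.0):
    """Hinge loss for one triplet of embeddings: max(0, g + D(q, p) - D(q, n))."""
    return max(0.0, g + euclidean(f_q, f_p) - euclidean(f_q, f_n))

# Hypothetical embeddings f(p_i), f(p_i^+), f(p_i^-).
q = [0.0, 0.0]
p = [3.0, 4.0]        # D(q, p) = 5
n_far = [6.0, 8.0]    # D(q, n) = 10: further away than the positive by more than g
n_near = [0.0, 5.5]   # D(q, n) = 5.5: further away, but by less than g

print(triplet_loss(q, p, n_far))   # 0.0
print(triplet_loss(q, p, n_near))  # 0.5
```

The loss is zero only when the negative image is further from the query than the positive image by at least g; otherwise the triplet still contributes to the gradient.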

Deep Ranking Algorithm Implementation

The implementation uses Keras.

Conceptually, three parallel networks process the query, positive and negative images.

There are three main parts to the implementation:

- Implementation of three parallel multiscale neural networks

- Implementation of the loss function

- Triplet generation

Training three parallel deep networks would consume a lot of memory. Instead of three parallel networks that each receive one of the query, positive and negative images, the images are fed sequentially into a single deep neural network. The tensor passed to the loss layer contains one image embedding per row, and each row corresponds to one input image in the batch. Because the query, positive and negative images are passed in sequence, the first row corresponds to the query image, the second to the positive image and the third to the negative image, and this pattern repeats to the end of the batch. In this way the ranking layer receives the embeddings of all images, after which the loss function is computed.
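The row layout described above can be undone with strided slicing. The sketch below uses plain Python lists with placeholder strings instead of real embeddings. The helper name `split_triplets` is an assumption for illustration, and the same `[0::3]` slicing also works on NumPy arrays.

```python
def split_triplets(rows):
    """Split an interleaved batch [q0, p0, n0, q1, p1, n1, ...] into three sequences."""
    if len(rows) % 3 != 0:
        raise ValueError("batch size must be a multiple of 3")
    # Rows 0, 3, 6, ... are queries; 1, 4, 7, ... positives; 2, 5, 8, ... negatives.
    return rows[0::3], rows[1::3], rows[2::3]

# Placeholder rows standing in for the embeddings of two triplets.
batch = ["q0", "p0", "n0", "q1", "p1", "n1"]
queries, positives, negatives = split_triplets(batch)

print(queries)    # ['q0', 'q1']
print(positives)  # ['p0', 'p1']
print(negatives)  # ['n0', 'n1']
```

This also shows why an incomplete triplet at the end of a batch is a problem: the three slices would no longer line up.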

To implement the ranking layer, we need to write a custom loss function that computes the Euclidean distance between the query image and the positive image, as well as the Euclidean distance between the query image and the negative image.

Implementation of the loss calculation function

```python
import tensorflow as tf
from keras import backend as K

batch_size = 24  # assumed value for illustration; must be a multiple of 3

_EPSILON = K.epsilon()

def _loss_tensor(y_true, y_pred):
    # Clip the predicted embeddings to the range [eps, 1 - eps]
    y_pred = K.clip(y_pred, _EPSILON, 1.0 - _EPSILON)
    loss = tf.convert_to_tensor(0, dtype=tf.float32)  # initialise the loss to zero
    g = tf.constant(1.0, shape=[1], dtype=tf.float32)  # gap parameter 'g'
    for i in range(0, batch_size, 3):
        try:
            q_embedding = y_pred[i + 0]  # embedding of the query image
            p_embedding = y_pred[i + 1]  # embedding of the positive image
            n_embedding = y_pred[i + 2]  # embedding of the negative image
            # Euclidean distance between the query and the positive image
            D_q_p = K.sqrt(K.sum((q_embedding - p_embedding) ** 2))
            # Euclidean distance between the query and the negative image
            D_q_n = K.sqrt(K.sum((q_embedding - n_embedding) ** 2))
            loss = loss + g + D_q_p - D_q_n  # accumulate the loss for each triplet
        except Exception:
            continue  # skip a triplet whose rows cannot be indexed
    loss = loss / (batch_size / 3)  # average over the triplets in the batch
    zero = tf.constant(0.0, shape=[1], dtype=tf.float32)
    # Note: the hinge is applied to the batch average, not to each triplet
    return tf.maximum(loss, zero)
```

The batch size must always be a multiple of 3, because a triplet contains three images and the images of each triplet are passed through the deep neural network one after another.

The rest of the code link

Bibliography

[1] Object Recognition from Local Scale-Invariant Features - www.cs.ubc.ca/~lowe/papers/iccv99.pdf

[2] Histograms of Oriented Gradients for Human Detection - courses.engr.illinois.edu/ece420/fa2017/hog_for_human_detection.pdf

[3] Learning Fine-grained Image Similarity with Deep Ranking - static.googleusercontent.com/media/research.google.com/en//pubs/archive/42945.pdf

[4] ImageNet Classification with Deep Convolutional Neural Networks - papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf

[5] Dropout: A Simple Way to Prevent Neural Networks from Overfitting - www.cs.toronto.edu/~hinton/absps/JMLRdropout.pdf

[6] ImageNet: A Large-Scale Hierarchical Image Database - www.image-net.org/papers/imagenet_cvpr09.pdf

[7] Fast Multiresolution Image Querying - grail.cs.washington.edu/projects/query/mrquery.pdf

[8] Large-Scale Image Retrieval with Compressed Fisher Vectors - citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.401.9140&rep=rep1&type=pdf

[9] Beyond Bags of Features: Spatial Pyramid Matching for Recognizing Natural Scene Categories - ieeexplore.ieee.org/document/1641019

[10] Improved Consistent Sampling, Weighted Minhash and L1 Sketching - static.googleusercontent.com/media/research.google.com/en//pubs/archive/36928.pdf

[11] Large Scale Online Learning of Image Similarity Through Ranking - jmlr.csail.mit.edu/papers/volume11/chechik10a/chechik10a.pdf

[12] Learning Fine-grained Image Similarity with Deep Ranking - users.eecs.northwestern.edu/~jwa368/pdfs/deep_ranking.pdf

[13] Image Similarity using Deep Ranking - medium.com/@akarshzingade/image-similarity-using-deep-ranking-c1bd83855978

Source: https://habr.com/ru/post/457928/
