"Tools are not as important as the ability to think about the systems they create." Big interview with Martin Kleppman

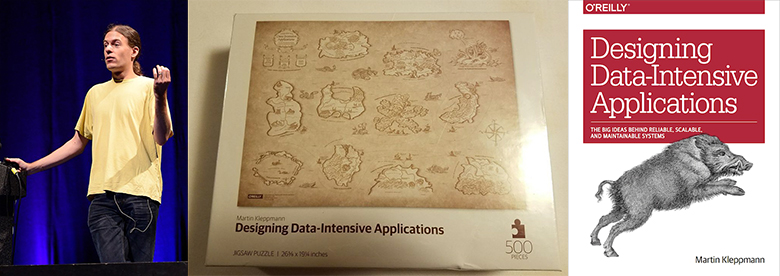

Martin Kleppman (Martin Kleppman) - a researcher at the University of Cambridge, working on CRDT and formal verification of algorithms. His book Designing Data-Intensive Applications , published in 2017, has become a bestseller in the field of data storage and processing.

Kevin Scott (CTO at Microsoft) once said : “This book should be mandatory for development engineers. This is a rare resource that unites theory and practice, helping developers to think more deeply through the design and implementation of infrastructure and data processing systems. ” Jay Kreps, creator of Apache Kafka and CEO Confluent, said something similar.

')

And before doing academic research, Martin worked in the industry and co-founded two successful startups: Rapportive (bought by LinkedIn in 2012) and Go Test It (bought by RedGate).

This habrapos is a detailed interview with Martin. Sample discussion topics:

- The transition from business to academic research;

- Prerequisites for writing Designing Data-Intensive Applications;

- Common sense against artificial hype and advertising tools;

- Unnecessary CAP theorem and other industry errors;

- The usefulness of decentralization;

- Blockchains, Dat, IPFS, Filecoin, WebRTC;

- New CRDT. Formal verification on Isabelle;

- Discussion about event sourcing. Low-level approach. XA transactions;

- Apache Kafka, PostgreSQL, Memcached, Redis, Elasticsearch;

- Using all this in real life;

- The threshold for entering the reports of Martin and the conference Hydra.

The interview was conducted by Vadim Tsesko ( @incubos ) - a leading developer in the Odnoklassniki company Platform team. Vadim’s scientific and engineering interests concern distributed systems and data warehouses, as well as verification of software systems.

From business to academic research

Vadim : I would like to start with a question that is very important for me personally. You founded Go Test It and Rapportive, and for a long time engaged in the development of large systems on LinkedIn, but then you decided to leave the commercial development and do research at the university. Could you tell us what prompted you to this? What are the advantages of working at the university, and what did you have to sacrifice?

Martin : It was a very interesting transition. As I understand it, my decision interests you because quite a few people go to academic research from commercial development, and much more often there is a movement in the opposite direction. This is understandable, since earnings at universities are significantly lower than in business. I am personally attracted to the position of a researcher by the fact that I can decide on which topics I work on, and I make this choice based on what seems to me interesting and important, even if the work on the topic does not promise to give a profit for the next 6 months. Everything that you work for in a company should be sold in one form or another. At the moment I am working on important topics for the future of the Internet and software, but our understanding of them is still not deep enough to create a finished product. So far, we do not even have a general idea of how these technologies should work. Since these studies are fundamental, I decided that they should be better conducted at the university, and not at the company: there is more freedom at the university, there you can do things that will not bring any profit for another 10 years. Planning horizon is much wider.

The book "Designing Data-Intensive Applications"

Vadim : We will definitely return to the topic of research, but for now let's talk about your book, Designing Data-Intensive Applications . In my opinion, this is one of the best guides on modern distributed systems, almost an encyclopedia: it lists all the most significant achievements that exist today.

Martin : Thank you, I am glad that it was useful to you.

Vadim : It is unlikely that our readers are not yet familiar with it, but just in case, let's discuss the most significant achievements in the field of distributed systems about which you write.

Martin : In fact, when writing this book, I did not set a goal to describe certain technologies. Rather, I wanted to make a guide around the world of systems used for storing and processing data. Now there are a huge number of databases, stream processors, batch processing tools, all sorts of replication tools, and the like, so it’s very difficult to compile a general picture of this area. And if you need a database to solve a specific problem, it is difficult to choose one of the many existing ones. Many books written on such systems are simply useless in this case. For example, in the book about Apache Cassandra it may be written about how wonderful Cassandra is, but nothing will be said about tasks for which it is not suitable. Therefore, in my book I try to identify the main questions that need to be asked when writing large systems. Answers to these questions will help determine which technologies are well suited to solve the current problem, and which - not very. The main thing is that there is no technology that could do everything. I try to show the advantages and disadvantages of different technologies in different contexts.

Vadim : Indeed, many technologies have common features and functionality and provide the same data model. At the same time, advertising cannot be trusted, and in order to understand the internal structure of the system, it is necessary to read not only technical reports and documentation, but even source codes.

Common sense against artificial hype and advertising tools

Martin : Moreover, you often have to read between the lines, because the documentation does not say which tasks the database is not suitable for. In fact, any database has its limitations, so you should always know which ones. Often you have to read deployment guides and reconstruct the internal workings of the system.

Vadim : Yes, this is a great example. Do not you think that in this area there is a lack of a common dictionary or a single set of criteria, using which one could compare different solutions for one task? Now for the same things different names are used, and many aspects that should be clearly and clearly stated are not mentioned at all - for example, transactional guarantees.

Martin : Yes, it is. Unfortunately, in our industry very often there is excessive excitement around different tools. Which is understandable, since these tools are created by companies that are interested in promoting their products. Therefore, these companies send people to conferences, and they, in effect, talk about how wonderful these products are. This is disguised as technical reports, but in essence it is an advertisement. As an industry, it would not hurt us to be more honest about the advantages and disadvantages of our products. One of the requirements for this is general terminology; it is impossible to compare things without it. But beyond that, methods of analyzing the advantages and disadvantages of various technologies are needed.

Unnecessary CAP theorem and other industry errors

Vadim : My next question is rather delicate. Could you tell us about any common mistakes in our industry that you encountered during your career? For example, about some overpriced technology or a widely used solution that you should have got rid of a long time ago? Perhaps this is not the best example, but it comes to my mind to use JSON over HTTP / 1.1 instead of gRPC over HTTP / 2. Or maybe you do not share this point of view?

Martin : Most often, when creating systems, in order to achieve something one, you have to sacrifice something else, and here I prefer not to talk about mistakes. In the case of the choice between JSON over HTTP / 1.1 and, say, Protocol Buffers over HTTP / 2, both options are completely justified. If you decide to use Protocol Buffers, you need to define a scheme, and it can be very useful because it helps to determine the exact behavior of the system. But in some situations, such a scheme does not cause anything but irritation, especially in the early stages of development, when data formats change quite often. Again, here you have to sacrifice something to achieve a certain goal, and in some situations this is justified, while in others it is not. Actually, there are not so many solutions that can truly be called wrong. But since we are talking about this, let's talk about the CAP theorem - in my opinion, there is absolutely no benefit from it. When it is used in the design of systems, either there is a misunderstanding of the meaning of the CAP theorem, or with the help of it they substantiate self-evident statements. It uses a very narrowly defined consistency model — linearizability, and a very narrowly defined accessibility model — each replica must be fully accessible, even if it cannot communicate with any other replica. On the one hand, these definitions are quite correct, but, on the other hand, they are too narrow: many applications simply do not need such a definition of consistency or accessibility. And if the application uses a different definition of these words, the CAP theorem is useless for it. So I do not see much sense in its application. By the way, since we started talking about errors in our industry, let's honestly admit that mining cryptocurrency is a completely wasteless use of electricity. I do not understand how to do this seriously.

Vadim : In addition, most data storage technologies are now customizable for a specific task, i.e. You can select the appropriate mode of operation in the presence of failures.

Martin : Exactly. Moreover, much of the technology does not fall under the strict definition of the consistency and accessibility of the CAP theorem, that is, they are not CP, not AP, and CA, but only P. No one will write this directly about their software, but in fact be a completely rational strategy when developing. This is one of the reasons why I believe that CAP in analyzing software has more harm than good: a significant part of the design decisions are not represented in any way, and it can be quite rational decisions, and CAP does not allow them to be described.

The usefulness of decentralization

Vadim : What problems in the development of Data-Intensive Applications are now most acute? What topics are most actively explored? As far as I know, you are a supporter of decentralized computing and decentralized data warehouses.

Martin : Yes. One of the theses that I prove in my research is that at the moment we rely too heavily on servers and centralization. During the first time of the Internet, when it developed from ARPANET, it was designed as the most stable network in which packets can be sent along various routes, and they still all reach the goal. In the event of a nuclear explosion in any city in America, the surviving part of the network would continue to operate, simply alternative routes would be used to bypass the failed sections. It was a cold war generated circuit. But then we decided to place everything in the cloud, so now almost everything somehow passes through one of the AWS centers somewhere in Virginia, in the east of the United States. At a certain point, we abandoned the ideal of decentralized use of various parts of the network and outlined the services on which everything now depends. I consider it important to return again to the decentralized approach, in which more control over the data would belong not to the services, but to the end users.

When it comes to decentralization, it is often understood as things like cryptocurrencies, because they have networks of interacting agents, over which there is no single centralized authority like a bank. But this is not the decentralization that I am talking about, because, in my opinion, cryptocurrencies are also extremely centralized: if you need to execute a deal with Bitcoin, you must do it through the Bitcoin network, so everything is centralized around this network. The network structure is decentralized in the sense that it does not have a single organizing center, but the network as a whole is extremely centralized, since every transaction must be made through this network and nothing else. I believe that this is also a form of centralization. In the case of cryptocurrencies, this is inevitable, since it is necessary to ensure that there are no double costs, and this is hard to achieve without a network, which provides a consensus on which transactions have been made, and the like. But there are many applications that do not require a system like a blockchain, they can work with a much more flexible data model. It is these decentralized systems that interest me the most.

Vadim : If you mentioned blockchain, could you tell us about promising or insufficiently known technologies in the field of decentralized systems? I myself have been playing with IPFS, but you have much more experience in this area.

Martin : Actually, I don’t actively follow such technologies. I read a little about IPFS, but did not use it myself. We worked a little bit with Dat , which, like IPFS , is a decentralized data storage technology. The difference is that the Filecoin cryptocurrency is tied to IPFS, and it is used to pay for data storage, and no blockchain is tied to Dat. Dat only allows you to replicate data to multiple machines on a P2P basis, and for the project we were working on, Dat is great. We wrote software for user collaboration on a document, data, or database, and every change in this data is sent to anyone who has a copy of the data. In such a system, Dat can be used on the P2P principle, so that it can operate at the network level, that is, NAT Traversal and pass through firewalls, which is quite a challenge. On top of this, we wrote a level from CRDT, with the help of which several people could edit a document or a set of data and which made it possible to exchange edits quickly and conveniently. I think a similar system could be written on top of IPFS, while ignoring Filecoin and using only P2P replication.

Vadim : But wouldn't such a system be less responsive, because WebRTC directly connects the nodes to each other, and IPFS is more likely a distributed hash table.

Martin : The thing is, WebRTC is a slightly different stack level. It is intended mainly for video calls - it is likely to be used in the software through which we now communicate. In addition, WebRTC provides a channel through which arbitrary binary data can be sent. But building a replication system on top of it can be difficult. And in the Dat and IPFS for this you do not need to do anything.

You mentioned responsiveness, and this is a really important factor to keep in mind. Suppose we want to make the next Google Docs decentralized. In Google Docs, the unit of change is a separate keystroke, and each new character can be sent in real time to other people who work with the same document. On the one hand, this ensures fast collaboration, on the other hand, this means that when writing a large document, it is necessary to send hundreds of thousands of one-character changes, and many existing technologies do not cope well with the compression of this kind of data. Even if we assume that for each keystroke you need to send just a hundred bytes, then for a document of 100,000 characters you will need to send 10 MB of data, while usually such a document takes no more than several tens of kilobytes. Until some clever compression method has been invented, such data synchronization requires an enormous additional expenditure of resources. In many P2P systems, there is no effective way to create snapshots of state that would allow them to be used for systems like Google Docs. This is exactly the problem I am dealing with now, trying to create an algorithm for more efficient document synchronization for several users. This should be an algorithm that would allow not to store each individual keystroke, because it requires too many resources, and it should provide a more efficient use of the network.

New CRDT, Formal Verification on Isabelle

Vadim : Could you tell us more about this? Did you manage to achieve more than 100 times data compression? Are we talking about new compression techniques or special CRDT?

Martin : Yes. So far we only have a prototype, it is not fully implemented yet. It is necessary to put additional experiments to find out how effective it is in practice. But some of our methods are promising. In my prototype, I managed to reduce the size of one edit from 100 to 1.7 bytes. But, I repeat, this is still only an experimental version, this indicator may change a little. Anyway, there are great opportunities for optimization in this area.

Vadim : So your report at the Hydra conference will be exactly about that?

Martin : Yes. I will have a short introduction on CRDT, collaboration software and some of the problems that arise in this context. Then I will talk about the research that we are doing in this area - they relate to many different problems. On the application side, we have the implementation of these algorithms in JavaScript; based on it, we create functioning programs to better understand the behavior of the algorithms. At the same time, we are also working on formal methods for proving the correctness of these algorithms, because some of them are rather unobvious, and we want to ensure that they always reach a consistent state. Many previously developed algorithms do not provide consistency in some borderline cases. To avoid this, we turned to formal proof methods.

Vadim : Do you use proofs of theorems like Coq or Isabelle for this system?

Martin : Yes, Isabelle .

Editor's note: Martin will read the Isabelle talk at The Strange Loop conference.

Vadim : Are you planning to publish this evidence?

Martin : Yes, we published the first set of evidence a year and a half ago, along with the CRDT framework for testing. We tested three CRDTs using this framework, the most important of which was RGA ( Replicated Growable Array ), CRDT for co-editing text. This algorithm is not too complicated, but rather unobvious, it is not immediately clear at a glance whether it is correct, therefore a formal proof was necessary. We also were engaged in proving the correctness of several existing CRDTs, and the last thing we did in this area was the creation of our CRDTs for new data models.

Vadim : Is the volume of the formal proof larger than the size of the code of the algorithm itself? With this sometimes there are difficulties.

Martin : There are really enough difficulties, we have to work a lot with evidence. I just looked at the code: the description of the algorithm takes about 60 lines, it is quite compact, and the proof exceeds 800 lines. It turns out that the proof is 12 times longer. Unfortunately, this is quite a typical situation. On the other hand, the presence of a formal proof gives us confidence in the correctness of the algorithm. In addition, the work on the proof allowed us to better understand this algorithm. Formalization generally contributes to this. Ultimately, this allows you to create better-quality implementations of algorithms.

Vadim : Tell me, on what audience do you expect your report? What prior knowledge is needed?

Martin : I try to make my reports as accessible as possible, and I try to pull everyone up to the same level. I give a lot of stuff, but I start with pretty simple things. It will be useful for listeners to have some experience with distributed systems: sending data over the network via TCP, a Git device view, and the like. But, by and large, in addition to basic knowledge, nothing is needed. Having them, to understand our work is not so difficult. I explain everything with examples and illustrate them with pictures. I hope that the report will be available to everyone.

Event sourcing, low-level approach, XA transactions

Vadim : I would like to talk about your recent article about handling events online. As far as I understand, you are a supporter of event sourcing. Now this approach is becoming increasingly popular, and programmers are trying to use it everywhere because of the advantages of a globally ordered log of operations. And in what situations is event sourcing not the best approach? I would like to avoid disappointment in this technology due to the fact that people try to apply it everywhere and in some cases it does not work well.

Martin : This problem needs to be discussed at two different levels. Event sourcing in the form in which it was created by Greg Young and others is a data modeling mechanism. If your database becomes too many tables and transactions with these tables and it becomes too unorganized, then event sourcing can help streamline the data model. Events can express directly what happens at the logic level of the application, what action the user takes, how its effects update various tables, and so on. In essence, event sourcing allows you to separate an action (event) from its effects.

I came to the event sourcing from a lower level. I was developing scalable systems using technologies like Apache Kafka. Event sourcing is similar to Apache Kafka, because both are used there. But for event sourcing it is not necessary to use Apache Kafka, it can be done with the help of a regular database or a database specially created for event sourcing. In general, these approaches are similar, but they are not tied to each other, they just have some intersection. A system like Apache Kafka is useful if scaling is needed, if the data flow is so large that it is impossible to process them in a single node database. With an event log like Apache Kafka, this load can be distributed across multiple computers. Apache Kafka is especially useful if you need to integrate several different storage systems. With it, you can update with a single event not only a relational database, but also a full-text search index like Elasticsearch, or a caching system like Memcached or Redis.

As for your initial question, it is difficult for me to say exactly when exactly event sourcing should not be used. As a rule, I prefer to use the most simple approach. If the required data model is perfectly implemented in a relational database with the insertion, update and deletion of rows, then use it. Relational databases are a perfectly acceptable option, they have been serving us well for a long time. But if the data model becomes too complex for a relational database, then you should go to event sourcing. A similar principle should be followed at a lower level: if the size of the data allows you to store them in PostgreSQL on the same computer, then this should be done. If one computer cannot process all the data, then it is necessary to turn to distributed systems like Kafka. That is, I repeat, for each situation, you should choose the most simple approach for it.

Vadim : This is great advice. In addition, most application systems are constantly evolving and the direction of their development is not always known in advance, so you never know in advance what requests, patterns and data streams will appear in them.

Martin : Yes, and in this respect relational databases are especially useful, because as a rule they now have JSON support (for example, PostgreSQL supports it well) and with it they are especially flexible. If you need to support new queries, you can simply create the missing indexes. You can change the data schema and migrate the database. If the data size is not too large and it is not too complex, everything will work fine.

Vadim : I have one more question regarding event sourcing. You mentioned an interesting example in which events from one queue are sent to multiple recipients. Suppose we create a new document (say, an announcement), and several systems receive events about it: a search engine based on Elasticsearch, which allows you to find this announcement; a caching system that places it in a key-value cache based on memcached; and a database that stores it in a table. These systems operate simultaneously and in parallel.

Martin : So you want to know what to do if some of these event recipients are already updated and others are not yet?

Vadim : Yes. And in this situation, a user comes to the site, enters a search query and sees that, say, an apartment is available in this area, but after clicking on the ad receives the code 404, because the database has not yet managed to receive the event and the required document is not yet in it.

Martin : It really is a major difficulty. Ideally, causal consitency should be maintained for these systems. That is, if one system contains some necessary data, then they will also be in other systems. Unfortunately, to achieve this for several different storage systems is very difficult: no matter which approach or system is used to send updates to various systems, in the end, there can always be problems with concurrency. Even if you write to both systems at the same time, there may be a slight delay in the network, due to which the processing of one of the recording actions will occur a little sooner or later. When reading from both systems, inconsistencies can be detected. There are research projects that are trying to achieve this kind of causal consistency, but it is difficult to achieve it simply by using Elasticsearch or Memcached. The problem is that for the right solution you need to have a snapshot of both the search index, the cache, and the database. If we work only with a relational database, then we have a snapshot of isolation: this means that reading from the database is performed as if you have a copy of the entire database. And all requests will return data at the time of the snapshot. That is, even if the data at the time of reading has changed, the old data will still be presented, because they are part of a consistent snapshot. In the case under discussion with Memcached and Elasticsearch, the problem can be solved with a consistent snapshot of the two systems. But, unfortunately, neither Memcached, nor Redis, nor Elasticsearch provide an efficient mechanism for creating such snapshots that could be coordinated for several data storage systems. Each system operates independently and, as a rule, simply gives the last value of each key. There is usually no opportunity to get an earlier, but consistent data version. So I can not recommend an optimal solution for this problem. I'm afraid that somehow I will have to change the storage system code. You need a mechanism for creating snapshots, which would be fast enough so that they can be constantly used - such images may be needed several times a second, and not once a day. So far, existing solutions do not allow creating snapshots for multiple storage systems. In general, this is a very interesting research topic. I hope that someone will take care of it, but so far I have not seen a satisfactory solution to this problem.

Vadim : Yes, you need some single distributed Multiversion Concurrency Control .

Martin : , . XA-, , , . , , . , , . XA . , , . .

: , - .

: , . , , , . , . - , . , . - , : , , , . . , .

: , . , . - , , , - .

: . . , , . , .

: , . event sourcing. , , , . , . , , , . , , , , , . ?

: , . , , . , , , , . . , . , , .

: , , , .

: . , . , . , , , . (, - ), . . - , . — , . , , , , 100%, .

: . .

: .

: , , . , — , . .

Hydra 2019,

: , Hydra? , , , .

: , , , , « » « ». . . , , - ; , , , , .

: , , , ? . , , ?

: , . , . , . - . , - , - . , . : , . — , — . , , , .

: . ? , - , , ?

: . . , . , , , . - — . , , . : . , , Slack , . , , . , , , .

: .

: , .

: , !

, . — , . - . , «Syncing data across user devices for distributed collaboration» Hydra 2019, 11-12 2019 -. Tickets can be purchased on the official website .

Source: https://habr.com/ru/post/457736/

All Articles