Depth cameras: a silent revolution (when robots will see), Part 1

Recently I described how robots will soon think MUCH better (a post about hardware acceleration of neural networks). Today we look at why robots will soon see MUCH better too. In a number of situations, much better than a human.

This post is about depth cameras, which shoot video where each pixel stores not a color but the distance to the object at that point. Such cameras have existed for more than 20 years, but in recent years their development has accelerated many times over, and we can already speak of a revolution. A multi-pronged one, too. Rapid development is under way in the following areas:

- Structured Light cameras, where a projector (often infrared) casts a known pattern and a camera captures that pattern;

- Time of Flight cameras, which measure the delay of reflected light;

- Depth from Stereo cameras, the classic and perhaps best-known approach, which builds depth from a stereo pair;

- Light Field cameras, also known as plenoptic cameras, which were covered in a separate detailed post;

- And, finally, Lidar-based cameras, especially the new Solid State Lidars, which last about 100 times longer than conventional lidars and produce a familiar rectangular image.

If you are curious how all this looks, how the different approaches compare, and where they are used today and will be used tomorrow, read on!

So! Let us examine the main directions in the development of depth cameras, or rather the different principles of depth measurement, with their pros and cons.


Method 1: Structured Light Cameras

Let's start with one of the simplest, oldest and relatively cheapest ways to measure depth: structured light. This method appeared essentially as soon as digital cameras did, i.e. more than 40 years ago, and became much simpler a little later, with the advent of digital projectors.

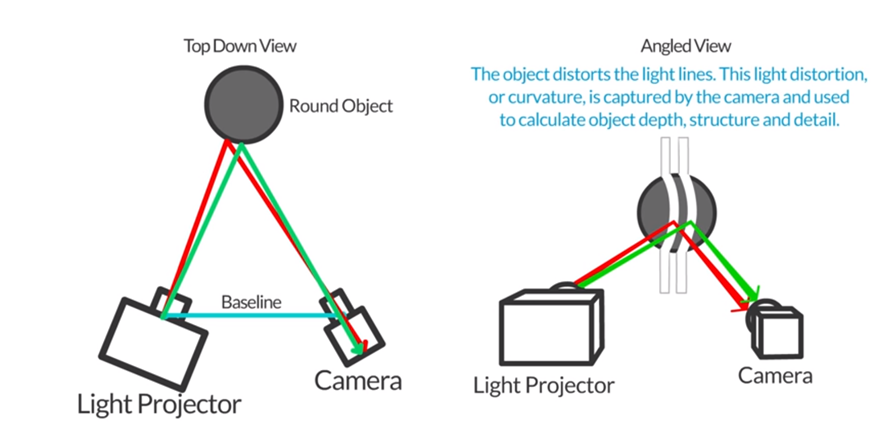

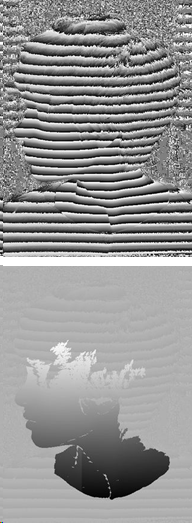

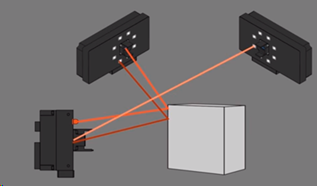

The basic idea is extremely simple. Set up a projector that creates, for example, horizontal (and then vertical) stripes, and next to it a camera that shoots the scene with the stripes, as shown in this figure:

Source: Autodesk: Structured Light 3D Scanning

Since the camera and the projector are displaced relative to each other, the stripes in the image are also shifted, by an amount that depends on the distance to the object. By measuring this shift we can calculate the distance to the object:

Source: http://www.vision-systems.com/
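The relation between stripe shift and distance can be sketched with the usual triangulation formula. This is only an illustration under simplifying assumptions: an ideal, already calibrated projector and camera with parallel optical axes, a focal length expressed in pixels, and a shift measured relative to where the stripe would appear for an infinitely distant surface. The function name and all numbers are made up for the example.

```python
def depth_from_shift(shift_px, focal_px, baseline_m):
    """Distance to the surface in meters from the observed stripe shift in pixels.

    Assumes an idealized, calibrated projector-camera pair (hypothetical setup):
    depth = focal_length * baseline / shift.
    """
    if shift_px <= 0:
        raise ValueError("shift must be positive")
    return focal_px * baseline_m / shift_px


# Made-up numbers: 600 px focal length, 7.5 cm between projector and camera.
for shift in (60.0, 30.0, 15.0):
    distance = depth_from_shift(shift, focal_px=600.0, baseline_m=0.075)
    print(f"shift {shift:5.1f} px -> {distance:.2f} m")
```

Note that the shift shrinks as the object moves away, so a one-pixel measurement error costs much more accuracy at long range than at short range.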

In fact, with the cheapest projector (prices start at 3,000 rubles) and a smartphone, you can measure the depth of static scenes in a dark room:

Source: Autodesk: Structured Light 3D Scanning

Of course, this means solving a whole bunch of problems: calibrating the projector, calibrating the phone’s camera, detecting the stripe shift and so on. But all of these are well within the reach of even advanced high school students who are learning to program.

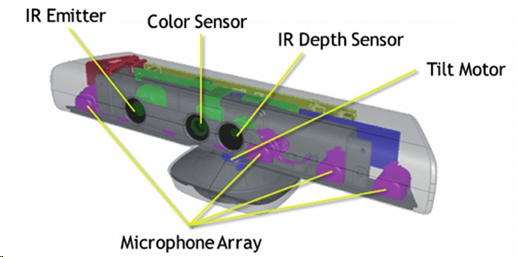

This depth measurement principle became most widely known in 2010, when Microsoft released the MS Kinect depth sensor for $150, which was revolutionarily cheap at the time.

Source: Partially Occluded Object Reconstruction using Multiple Kinect Sensors

In addition to measuring depth with an IR projector and an IR camera, Kinect also shot normal RGB video, had four microphones with noise reduction, and could adjust itself to a person's height by automatically tilting up or down. Data processing was built right into the device, so it delivered a finished depth map to the console:

Source: Implementation of natural user interface buttons using Kinect

A total of about 35 million devices were sold, making Kinect the first mass-market depth camera in history. Depth cameras did exist before it, of course, but they usually sold in the hundreds at most and cost at least an order of magnitude more. So this was a revolution, and it brought large investments into the field.

An important reason for the success was that by the time Microsoft launched Kinect for the Xbox 360, there were already a few games that actively used it as a sensor. The takeoff was swift:

Moreover, Kinect even made it into the Guinness Book of Records as the fastest-selling gadget in history. True, Apple soon pushed Microsoft out of that spot, but still. For a new experimental sensor, a mere add-on to the main device, becoming the fastest-selling electronic device in history is simply a great achievement:

When lecturing, I like to ask the audience where all these millions of buyers came from. Who were all these people?

As a rule, no one guesses, but sometimes, especially when the audience is older and more experienced, someone gives the correct answer: sales were driven by American parents, who were delighted to see that their children could play on the console while jumping in front of the TV instead of sitting on the couch. It was a breakthrough! Millions of moms and dads rushed to order the device for their children.

In general, when it comes to gesture recognition, people tend to naively believe that data from a 2D camera is enough. After all, they have seen so many beautiful demos! Reality is much harsher. The accuracy of gesture recognition from a 2D video stream and from a depth camera differs by an order of magnitude. With a depth camera, or rather with an RGB camera combined with a depth camera (the latter is important), gestures can be recognized far more accurately and at lower cost (even in a dark room), and this is what brought success to the first mass-market depth camera.

A lot was written about Kinect on Habr in its day, so here is only a very brief description of how it works.

An infrared projector casts a pseudo-random pattern of dots into the scene, and the displacement of those dots determines the depth at a given pixel:

Source: Depth Sensing Planar Structures: Detection of Office Furniture Configurations
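Why a pseudo-random pattern helps can be shown with a toy one-dimensional search: a small window of random dots is practically unique, so its displacement can be found by comparing it with the stored reference pattern. This is a simplified sketch and not Kinect's actual algorithm; the function name, window size and dot density are assumptions made for the illustration.

```python
import numpy as np


def find_shift(reference_row, observed_row, x, half_window=32, max_shift=20):
    """Return the shift (pixels) that best aligns the window around x with the reference.

    Uses the sum of squared differences over a 1D window (toy example).
    """
    patch = observed_row[x - half_window: x + half_window + 1]
    best_shift, best_cost = 0, float("inf")
    for s in range(max_shift + 1):
        start = x - s - half_window
        if start < 0:
            break  # the candidate window would leave the image
        candidate = reference_row[start: start + patch.size]
        cost = float(np.sum((patch - candidate) ** 2))
        if cost < best_cost:
            best_cost, best_shift = cost, s
    return best_shift


rng = np.random.default_rng(42)
reference = (rng.random(400) < 0.2).astype(np.float64)  # pseudo-random dot row
observed = np.roll(reference, 7)                          # the scene shifts the dots by 7 px
print(find_shift(reference, observed, x=200))
```

Once the shift is known for a pixel, it can be converted to a distance by triangulation, exactly as with stripes.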

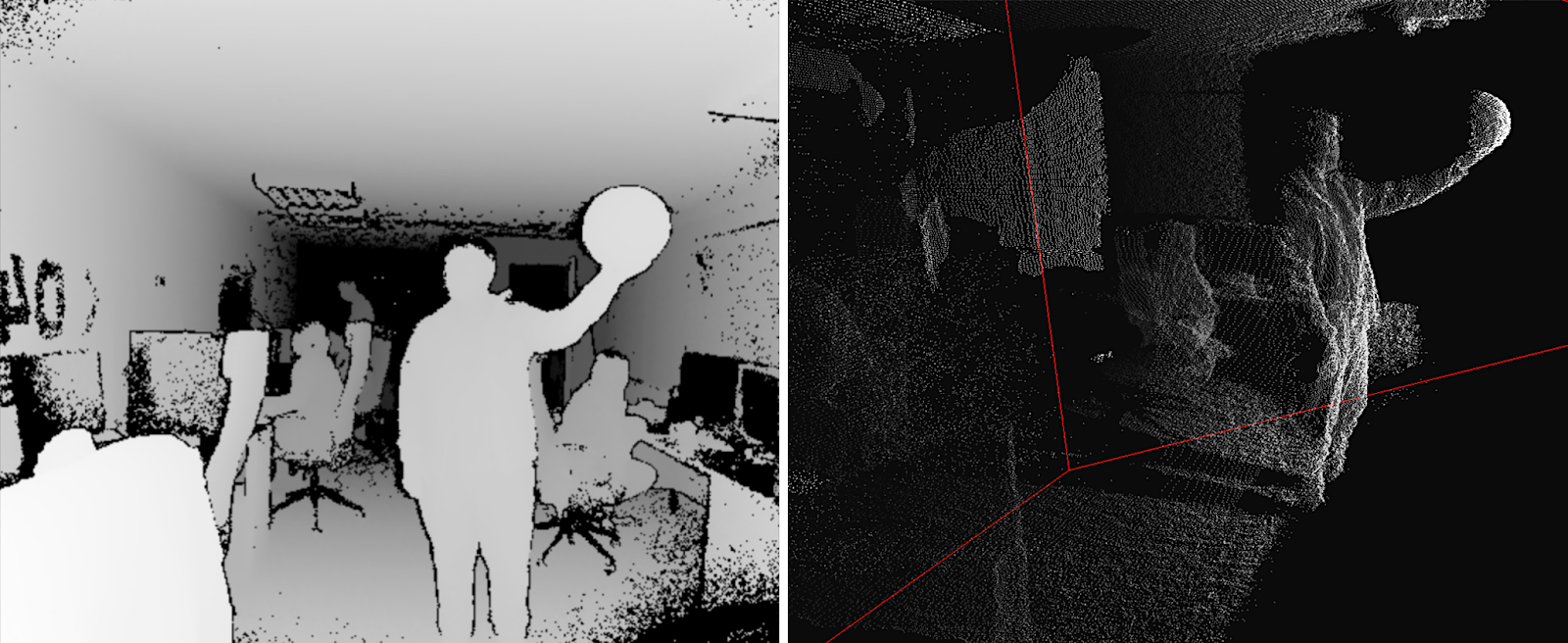

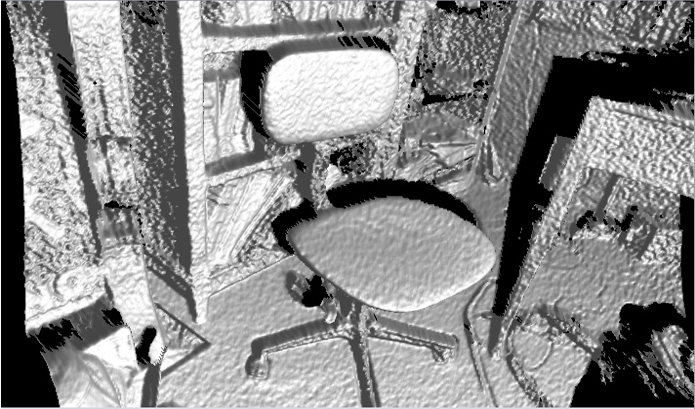

The resolution of the camera is stated as 640x480, but in reality it is somewhere around 320x240 with fairly strong filtering, and on real examples the picture looks like this (that is, pretty scary):

Source: Partially Occluded Object Reconstruction using Multiple Kinect Sensors

The “shadows” of objects are clearly visible, since the camera and the projector are spaced quite far apart. You can see that the depth is estimated from the shifts of several projector dots. In addition, there is (heavy) filtering over immediate neighbors, but the depth map is still quite noisy, especially at object boundaries. This leads to quite noticeable noise on the surface of the reconstructed objects, which has to be smoothed further, and non-trivially:

Source: J4K Java Library for the Microsoft's Kinect SDK

Nevertheless, it costs only $150 (today it is $69, although it is better to spend closer to $200, of course), and you “see” depth! There really are a lot of commercial products.

By the way, in February of this year the new Azure Kinect was announced:

Source: Microsoft announces Azure Kinect, available for pre-order now

Deliveries to developers in the US and China should begin on June 27, i.e. literally right now. Besides noticeably better RGB resolution and a better depth camera (a ToF camera; 1024x1024 at 15 FPS and 512x512 at 30 FPS are promised, and the higher quality is clearly visible in the demo), it promises out-of-the-box support for several devices working together, better behavior in sunlight, and an error of less than 1 cm at a distance of 4 meters and 1-2 mm at distances under 1 meter. This sounds EXTREMELY interesting, so we wait:

Source: Introducing Azure Kinect DK

The next mass-market product with a structured light depth camera was not a game console but... (drum roll) that's right, the iPhone X!

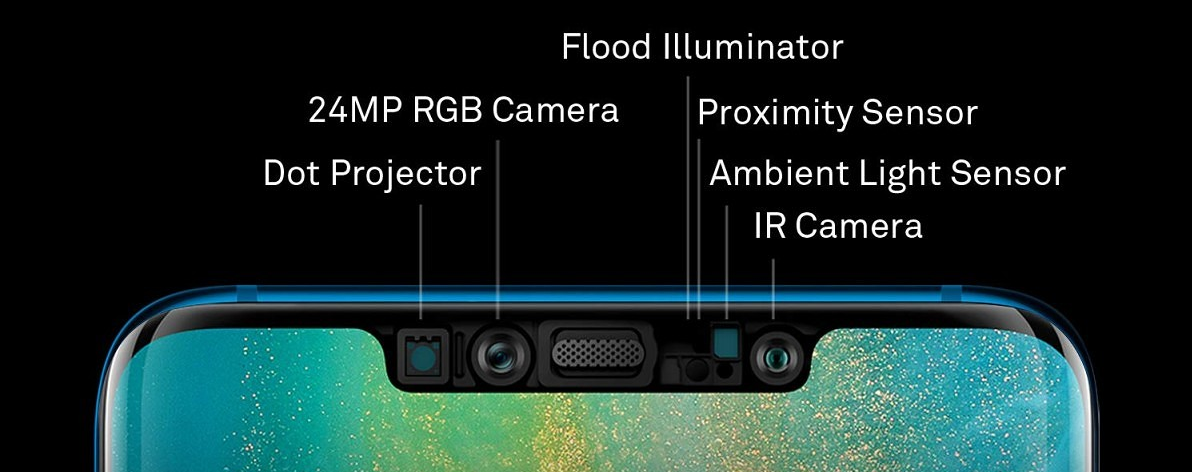

Its Face ID technology is a typical depth camera with a typical infrared dot projector and an infrared camera (by the way, now you understand why they sit at the edges of the “notch”, as far apart as possible: this is the stereo base):

The resolution of the depth map is even lower than Kinect's: about 150x200. Clearly, if you say “Our resolution is about 150x200 pixels, or 0.03 megapixels”, people will respond briefly and succinctly: “Sucks!” But if you say “Dot projector: more than 30,000 invisible dots are projected onto your face”, people will say: “Wow, 30 thousand invisible dots, cool!” Some will even ask whether the invisible dots give you freckles. And the idea will reach the masses! So the second option was the far-sighted choice for advertising. The resolution is low for three reasons: first, the need for miniaturization; second, power consumption; and third, price.
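The arithmetic behind the two ways of quoting the same number is easy to check. The sketch below simply multiplies out the resolutions mentioned in this article; the helper name is made up for the example.

```python
def megapixels(width, height):
    """Number of samples in a width x height depth map, in megapixels."""
    return width * height / 1_000_000


# Resolutions quoted in the text (the values are approximate).
resolutions = {
    "Kinect, stated": (640, 480),
    "Kinect, effective": (320, 240),
    "iPhone X depth map": (150, 200),
}
for name, (w, h) in resolutions.items():
    print(f"{name}: {w * h} points = {megapixels(w, h):.4f} MP")
```

So “30,000 dots” and “0.03 megapixels” describe the same amount of data.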

Nevertheless, this is another structured light depth camera that has gone into production in millions of units and has already been copied by other smartphone makers, for example (surprise, surprise!) Huawei (which overtook Apple in smartphone sales last year). Huawei simply has the camera on the right and the projector on the left, but also, of course, at the edges of the “notch”:

Source: Huawei Mate 20 Pro unlock

In this case 300,000 dots are claimed, i.e. 10 times more than Apple's, and the front camera is better.

At the same time, it is clear why this technology ended up in phones for face identification. First, the detector can no longer be fooled by showing it a photo of the person (or a video on a tablet). Second, a face changes greatly when the lighting changes, but its shape does not, which, together with the data from the RGB camera, makes it possible to identify a person more precisely:

Source: photo of the same person from TI materials

Obviously, an infrared sensor has inherent problems. First, the sun easily outshines our relatively weak projector, so these cameras do not work outdoors. Even in the shade, if a nearby white wall is lit by the sun, you may have big problems with Face ID. Kinect's noise level also goes off the scale, even when the sun is covered by clouds:

Source: this and the next two pictures - Basler AG materials

Another big problem is glare and reflections. Since infrared light is reflected too, shooting an expensive stainless steel kettle, a varnished table or a glass ceiling with a Kinect will be problematic:

And finally, two cameras shooting the same object can interfere with each other. Interestingly, with structured light you can make the projector flicker and work out which dots are ours and which are not, but that is a separate and rather complicated story:

Now you know how to break Face ID...

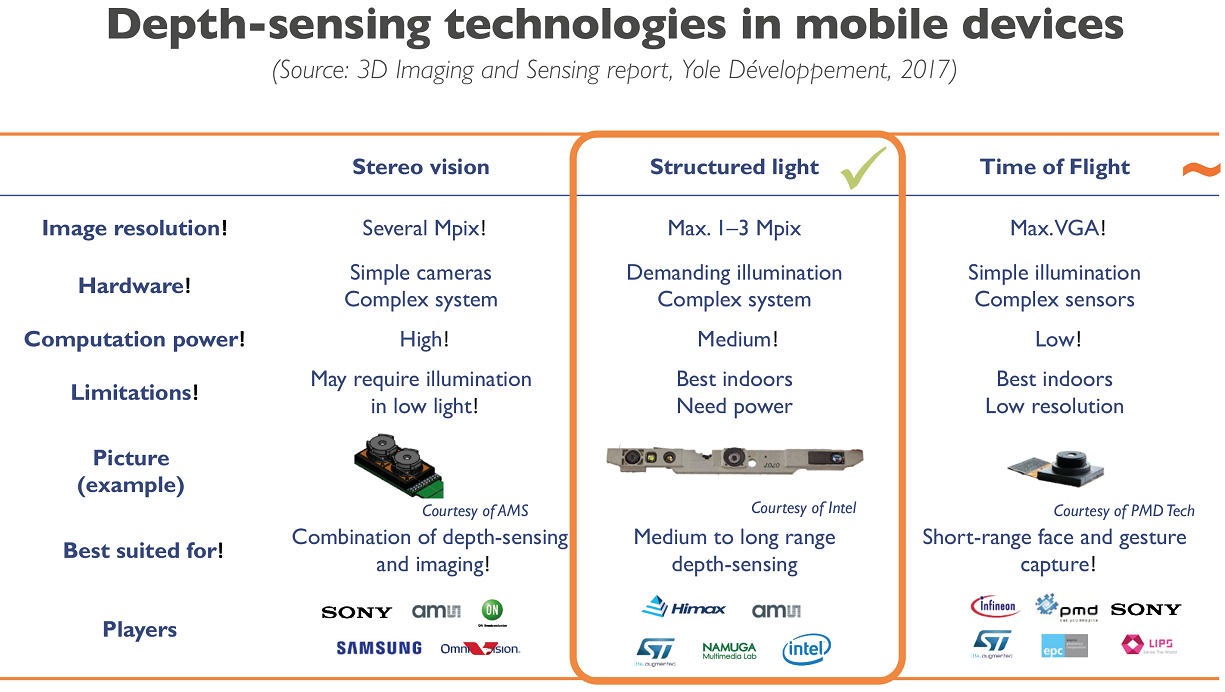

However, for mobile devices, structured light looks like the most reasonable compromise today:

Source: Smartphone Companies Scrambling to Match Apple 3D Camera Performance and Cost

For structured light, an ordinary sensor is so cheap that using it is more than justified in most cases. This has given rise to a large number of startups operating by the formula: cheap sensor + complex software = quite an acceptable result.

For example, our former graduate student Maxim Fedyukov, who has been working on 3D reconstruction since 2004, founded Texel, whose main product is a platform with 4 Kinect cameras and software that turns a person into a potential monument in 30 seconds. Or into a desktop statuette, depending on how much money you have. Or you can simply send out pictures of your 3D model, cheap and cheerful (for some reason this is so far the most popular use case). They now ship their platforms and software abroad, from the UK to Australia:

Source: Creating a 3D human model in 30 seconds

I cannot pose as gracefully as a ballerina, so I just thoughtfully watch the fin of a shark swimming by:

Source: the author's materials

In general, a new type of sensor has given rise to new art projects. This winter I saw a rather curious VR film shot with Kinect. Below is an interesting visualization of a dance, also made with Kinect (it looks like 4 cameras were used). Unlike in the previous example, the noise was not fought but rather used to add some amusing character:

Source: A Dance Performance Captured With A Kinect Sensor And Visualized With 3D Software

What trends can be observed in this area:

- As is well known, the digital sensors of modern cameras are sensitive to infrared radiation, so special blocking filters have to be used to keep infrared noise from spoiling the picture (there is even a whole genre of artistic infrared photography, including shooting with the filter removed from the sensor). This means that the huge amounts of money invested in miniaturizing sensors, increasing their resolution and making them cheaper also benefit sensors that can be used as infrared ones (with a special filter).

- Similarly, algorithms for processing depth maps are improving rapidly, including so-called cross-filtering methods, where data from an RGB sensor combined with noisy depth data yields very good depth video. Neural network approaches make it possible to dramatically speed up obtaining a good result.

- All the top companies are working in this area, especially smartphone manufacturers.
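The cross-filtering idea from the list above can be illustrated with a joint (cross) bilateral filter: depth values are averaged over a neighborhood, but the weights come from the clean guide image, so smoothing does not leak across object edges. This is a minimal NumPy sketch under stated assumptions (a grayscale guide in the range 0 to 1, aligned pixel-to-pixel with the depth map); the function name and parameter values are chosen for the example and do not reproduce any particular product's pipeline.

```python
import numpy as np


def joint_bilateral_filter(depth, guide, radius=2, sigma_space=2.0, sigma_color=0.1):
    """Smooth `depth` with weights taken from `guide` (same shape, values in [0, 1])."""
    depth = np.asarray(depth, dtype=np.float64)
    guide = np.asarray(guide, dtype=np.float64)
    h, w = depth.shape
    pad_d = np.pad(depth, radius, mode="edge")
    pad_g = np.pad(guide, radius, mode="edge")
    acc = np.zeros_like(depth)
    norm = np.zeros_like(depth)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Neighbor values at offset (dy, dx) for every pixel at once.
            d_shift = pad_d[radius + dy: radius + dy + h, radius + dx: radius + dx + w]
            g_shift = pad_g[radius + dy: radius + dy + h, radius + dx: radius + dx + w]
            w_space = np.exp(-(dx * dx + dy * dy) / (2.0 * sigma_space ** 2))
            # Neighbors that look different in the guide image get almost no weight.
            w_color = np.exp(-((g_shift - guide) ** 2) / (2.0 * sigma_color ** 2))
            weight = w_space * w_color
            acc += weight * d_shift
            norm += weight
    return acc / norm


# Synthetic scene: near surface (1 m) on the left, far surface (2 m) on the right.
rng = np.random.default_rng(0)
guide = np.zeros((32, 32))
guide[:, 16:] = 1.0
clean = np.where(guide > 0.5, 2.0, 1.0)
noisy = clean + rng.normal(0.0, 0.05, size=clean.shape)
smooth = joint_bilateral_filter(noisy, guide)
print(noisy[:, :16].std(), smooth[:, :16].std())
```

On this toy scene the noise inside each surface drops while the step between the two surfaces stays sharp, which is exactly what a plain blur cannot do.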

Consequently:

- We can expect a dramatic increase in the resolution and accuracy of Structured Light depth cameras over the next 5 years.

- The power consumption of mobile sensors will keep falling (though more slowly), which will make it easier to use next-generation sensors in smartphones, tablets and other mobile devices.

In any case, what we are seeing now is the infancy of the technology: the first mass-market products, on which the production and use of a new, unusual type of data (video with depth) are only just being worked out.

Method 2: Time of Flight Camera

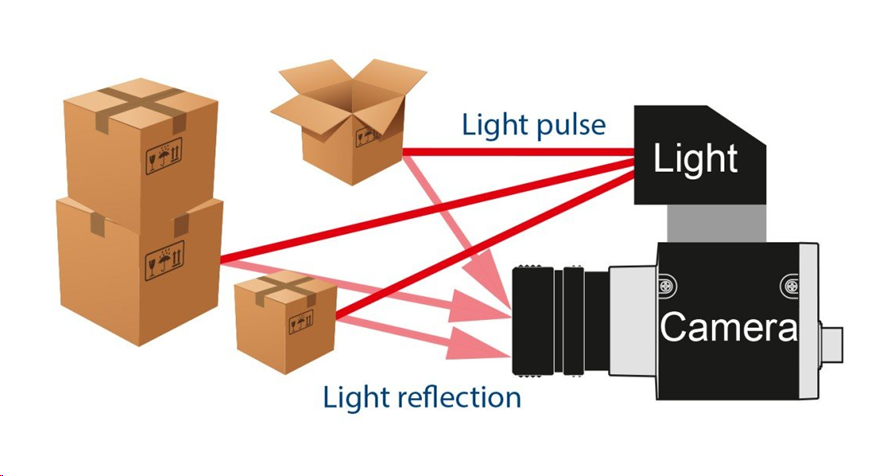

The next way to get depth is more interesting. It is based on measuring the round-trip delay of light (ToF, Time-of-Flight). As you know, modern processors are fast, and the speed of light is low. In one clock cycle of a 3 GHz processor, light manages to travel only 10 centimeters. Or 10 cycles per meter. That is plenty of time, as anyone who has done low-level optimization knows. So we install a pulsed light source and a special camera:

Source: The Basler Time-of-Flight (ToF) Camera
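The “10 centimeters per clock cycle” figure can be checked in a couple of lines. The function name is made up for this sketch; the only inputs are the speed of light and the 3 GHz clock rate from the text.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def light_travel_per_cycle(clock_hz):
    """Distance in meters that light covers during one clock cycle."""
    return C / clock_hz


per_cycle_m = light_travel_per_cycle(3e9)
cycles_per_meter = 1.0 / per_cycle_m
print(f"{per_cycle_m * 100:.1f} cm per cycle, {cycles_per_meter:.1f} cycles per meter")
```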

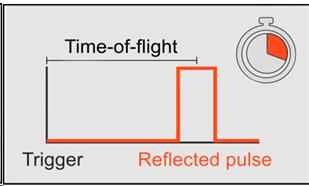

In essence, we need to measure the delay with which the light returns to each pixel:

Source: The Basler Time-of-Flight (ToF) Camera
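Converting a measured delay into a distance is a one-line formula: the light travels to the object and back, so the distance is half of the speed of light multiplied by the delay. The sketch below also shows the reverse direction, which gives a feel for the timing precision required. Function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def distance_from_delay(round_trip_s):
    """Distance to the object in meters for a given round-trip delay in seconds."""
    return C * round_trip_s / 2.0


def delay_for_distance(distance_m):
    """Round-trip delay in seconds for an object at the given distance."""
    return 2.0 * distance_m / C


print(f"20 ns round trip -> {distance_from_delay(20e-9):.3f} m")   # about 3 m
print(f"1 cm of depth    -> {delay_for_distance(0.01) * 1e12:.1f} ps")  # about 67 ps
```

Resolving one centimeter of depth directly would mean timing light to within tens of picoseconds, which is why practical sensors measure the delay indirectly.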

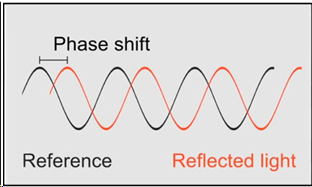

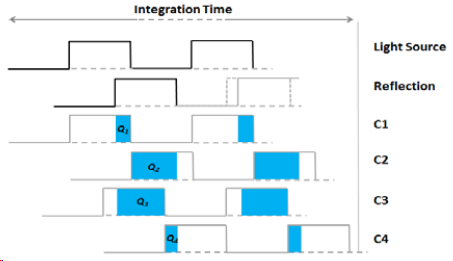

Or, if we have several sensors with different charge accumulation windows, then, knowing each sensor's time shift relative to the source and the flash brightness it captured, we can calculate the delay and hence the distance to the object. Increasing the number of sensors increases the accuracy:

Source: Larry Li "Time-of-Flight Camera - An Introduction"
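One common way to turn accumulated charges into a distance is the simplest pulsed scheme with two accumulation windows: the first window is open while the pulse is being emitted, the second opens right after it, and the later the reflection arrives, the more of its charge lands in the second window. The sketch below assumes exactly this idealized two-window scheme with no ambient light; function names and numbers are illustrative, and real sensors may use more windows or continuous-wave modulation.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def simulate_charges(distance_m, pulse_s, brightness=1.0):
    """Charges collected in two back-to-back windows for an ideal rectangular pulse."""
    delay = 2.0 * distance_m / C
    if not 0.0 <= delay <= pulse_s:
        raise ValueError("distance is outside the range covered by this pulse length")
    q1 = brightness * (pulse_s - delay)  # part of the echo inside the first window
    q2 = brightness * delay              # part that spills into the second window
    return q1, q2


def distance_from_charges(q1, q2, pulse_s):
    """Distance from the charge ratio; the unknown brightness cancels out."""
    return 0.5 * C * pulse_s * q2 / (q1 + q2)


pulse = 50e-9  # 50 ns pulse, covers roughly 7.5 m
for brightness in (1.0, 0.3):  # bright and dark objects give the same answer
    q1, q2 = simulate_charges(3.0, pulse, brightness)
    print(f"brightness {brightness}: {distance_from_charges(q1, q2, pulse):.3f} m")
```

The ratio of charges, rather than their absolute values, carries the distance, which is why the reflectivity of the object does not bias the result in this idealized model.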

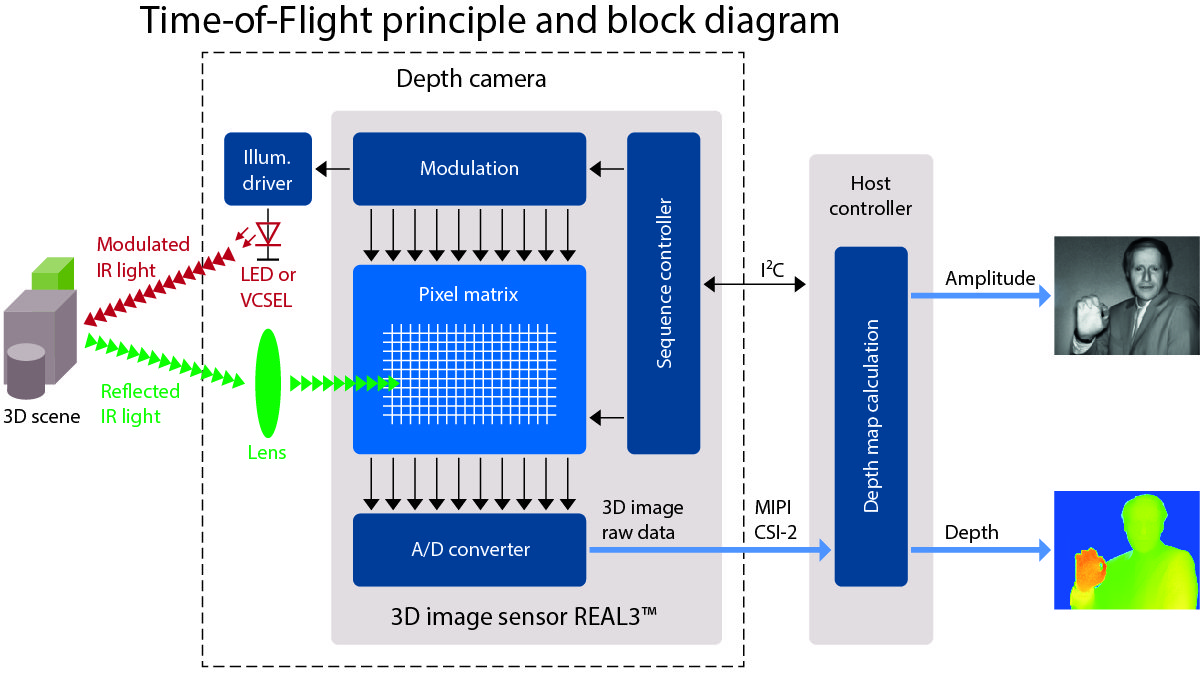

The result is the following camera design, with LED or, more rarely, laser (VCSEL) infrared illumination:

Source: a very good description of how ToF works on allaboutcircuits.com

The resulting picture has rather low resolution (after all, we need to place several sensors with different readout times next to each other), but potentially a high FPS. The problems are mainly at object boundaries (which is typical of all depth cameras), but there are none of the “shadows” typical of structured light:

Source: Basler AG video

In particular, this is the type of camera (ToF) that Google actively tested at one time in its Project Tango, which is well presented in this video. The idea was simple: combine data from a gyroscope, an accelerometer, an RGB camera and a depth camera to build a three-dimensional model of the scene in front of the smartphone:

Source: Google's Project Tango Is Now Sized for Smartphones

The project itself did not take off (in my opinion, because it was somewhat ahead of its time), but it laid important groundwork for a wave of interest in AR (augmented reality) and, accordingly, for the development of sensors that can work with it. All of its results have now been folded into Google's ARCore.

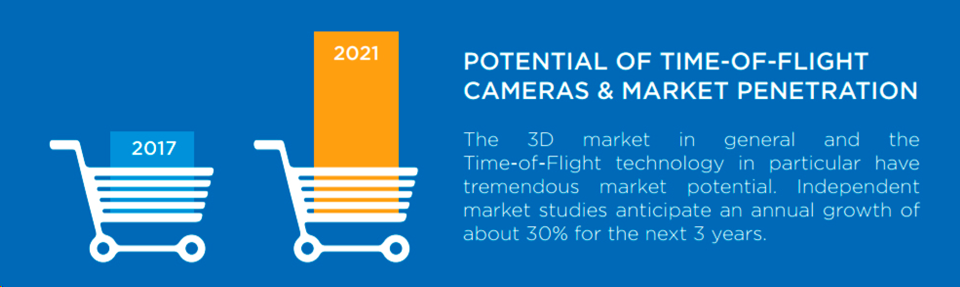

Overall, the ToF camera market is growing by about 30% every 3 years, which is solidly exponential growth; few markets grow this fast:

Source: Potential of Time-of-Flight Cameras & Market Penetration

A serious market driver today is the rapid (and also exponential) development of industrial robots, for which ToF cameras are an ideal solution. For example, if your robot packs boxes, then detecting with a conventional 2D camera that the cardboard is starting to jam is an extremely non-trivial task. For a ToF camera it is trivial both to “see” and to process. And very fast. As a result, we are seeing a boom in industrial ToF cameras:

Naturally, this also leads to products for the home that use depth cameras. Here, for example, is a security camera with a night-vision unit and a ToF depth camera from the German company PMD Technologies, which has been developing 3D cameras for more than 20 years:

Source: 3D Time-of-Flight-Depth Sensing

Remember the invisibility cloak under which Harry Potter was hiding?

Source: Harry Potter's Exact in Real Life

I am afraid the German camera will spot it in no time. And putting up a screen with a picture in front of such a camera will be hard as well (this is no distracted guard):

Source: a scene from the film “Mission: Impossible - Ghost Protocol”

It seems that some new Hogwarts magic will be needed to fool CCTV systems equipped with a ToF depth camera, which can shoot video like this in complete darkness:

Pretending to be a wall, hiding behind a screen, or otherwise keeping a combined ToF + RGB camera from detecting a foreign object becomes technically much harder.

Another mass-market peaceful application of depth cameras is gesture recognition. In the near future we can expect televisions, consoles and robot vacuum cleaners that respond not only to voice commands, like smart speakers, but also to a casual “put that away!” wave of the hand. Then the remote control (aka the lazy stick) for a smart TV will finally become unnecessary, and science fiction will come to life. As a result, what was science fiction in 2002 became experimental in 2013 and finally a commercial product in 2019 (and people will not even know that there is a depth camera inside).

Source: article, experiments and product

And the full range of applications is even wider, of course:

Source: Terabee depth sensor videos (by the way, what are those mice running across the floor in videos 2 and 3? See them? Just kidding, it is dust in the air: the price paid for the small size of the sensor and the proximity of the light source to the sensor)

By the way, in the famous cashierless Amazon Go stores there are also lots of cameras under the ceiling:

Source: Inside Amazon's surveillance-powered, no-checkout convenience store

Moreover, according to TechCrunch, the ordinary cameras are augmented by separate depth-sensing cameras (using a time-of-flight technique, as the author understood from Kumar), which are matte black. That is, the miracle of determining exactly which shelf the yogurt was taken from is made possible by mysterious matte black ToF cameras (whether they are in the photo is a good question):

Unfortunately, direct information is often hard to find. But there is indirect evidence. For example, there was a company called Softkinetic, which had been developing ToF cameras since 2007. Eight years later it was bought by Sony (which, by the way, is ready to conquer new markets under the Sony Depthsensing brand). And one of Softkinetic's top employees now works on Amazon Go. What a coincidence! In a couple of years, when the technology has been polished and the main patents filed, the details will probably be disclosed.

And, as usual, the Chinese have made a splash. The company Pico Zense, for example, presented a very impressive lineup of ToF cameras at CES 2019, including ones for outdoor use:

They promise a revolution everywhere. Freight wagons will be packed more densely thanks to automated loading, ATMs will become safer thanks to a depth camera in each one, robot navigation will become simpler and more accurate, people (and, most importantly, children!) will be counted much more accurately in a crowd, new fitness machines will appear that can check whether exercises are done correctly without an instructor, and so on and so forth. Naturally, cheap new-generation Chinese depth cameras are already available for all this magnificence. Take them and build!

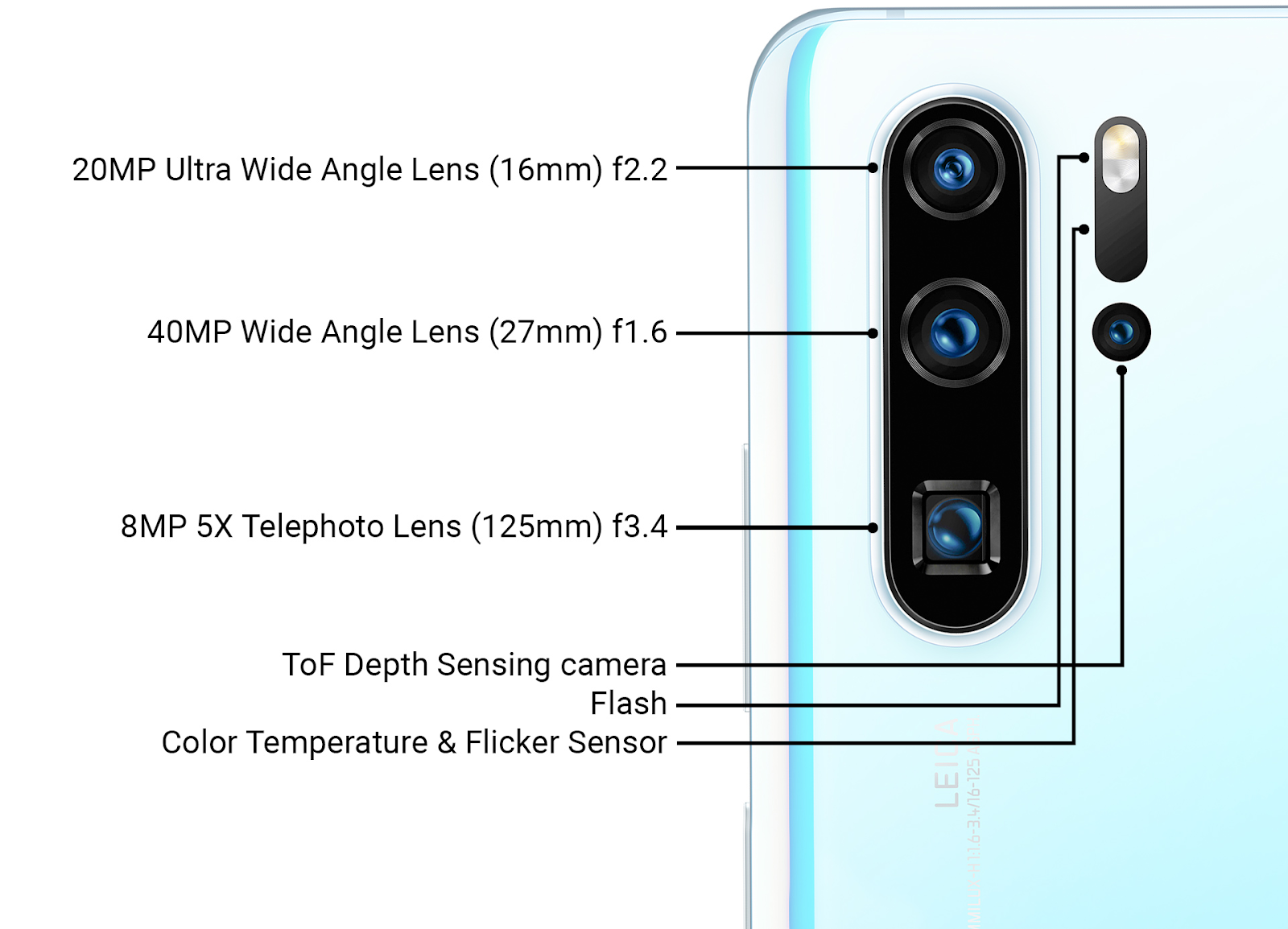

Interestingly, the latest production Huawei P30 Pro has a ToF sensor next to its main cameras. That is, the long-suffering Huawei is better than Apple at making front-facing structured light sensors and, it seems, has been more successful than Google (whose Project Tango was closed) at putting a ToF camera next to the main cameras:

Source: review of the Huawei technology from Ars Technica at the end of March 2019

Details of its use are, of course, not disclosed, but besides speeding up focusing (which matters with three main cameras with different lenses), this sensor can be used to improve the quality of background blur in photos (imitating a shallow DOF).

It is also obvious that the next generation of depth sensors next to the main cameras will be used in AR applications, raising AR accuracy from the current “fun, but often buggy” to something that works for the masses. And, obviously, in light of the Chinese successes, the big question is how willing Google will be to support revolutionary Chinese hardware in ARCore. Patent wars can significantly slow a technology's entry into the market. We will see this dramatic story unfold literally within the next two years.

Interim results

About 25 years ago, when the first automatic doors had just appeared, I personally watched quite respectable gentlemen periodically speed up in front of such doors. Will it open in time or not? It is big, heavy, and made of glass! I recently observed much the same thing during a tour of an automated factory in China by quite respectable professors. They lagged a little behind the group to see what would happen if they got in the way of a robot peacefully carrying parts and playing a quiet, pleasant tune. I confess I could not resist either... You know what, it stops! Sometimes smoothly. Sometimes dead in its tracks. The depth sensors are working!

Source: Inside Huawei Technology's New Campus

The hotel also had cleaning robots that looked like this:

They were teased even more than the robots at the factory. Not as harshly as in the inhuman-in-every-sense Bosstown Dynamics video, of course. But I personally watched people step into a robot's path: the robot tried to go around the person, the person shifted to block the way again... A sort of cat and mouse. In general, it seems that when self-driving cars appear on the roads, at first they will be cut off more often than usual... Eh, people, people... Hmm... However, we digress.

Summarizing the key points:

- Thanks to a different principle of operation, in a ToF camera the light source can be placed as close to the sensor as possible (even behind the same lens). In addition, many industrial models have LEDs arranged around the sensor. As a result, the “shadows” on the depth map are drastically reduced or disappear altogether.


- , ToF « » RGB , , ToF () ( Samsung, Google Pixel, Sony Xperia...).

- Sony , 2 8 (!!!) ToF (!), .. :

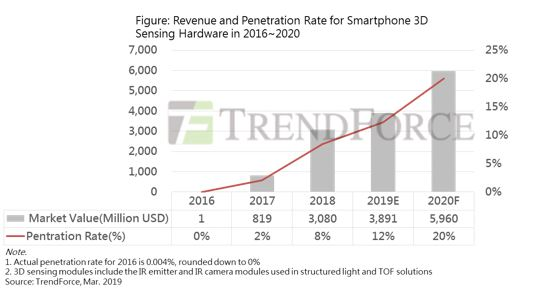

Source: Hexa-cam Sony phone gets camera specs revealed

[...] 20% (Structured Light + ToF). [...] 2017 Apple «30 [...]», 300 [...]:

Source: Limited Smartphone 3D Sensing Market Growth in 2019; Apple to be Key Promoter of Growth in 2020

Do you still doubt that a revolution is under way?

This was the first part! A general comparison will come in the second.

In the next installment, expect:

- Method 3, classic: depth from stereo;

- Method 4, newfangled: depth from plenoptics;

- Method 5, fast-growing: lidars, including Solid State Lidars;

- Some problems of processing video with depth;

- And finally, a brief comparison of all 5 methods and general conclusions.

Stay tuned! (If there is enough time, by the end of the year I will describe the new cameras, including tests of the new Kinect.)

Part 2

Acknowledgments

I would like to sincerely thank:

- the Computer Graphics Laboratory of VMK, Lomonosov Moscow State University, for its contribution to the development of computer graphics in Russia in general and to work with depth cameras in particular,

- Microsoft, Apple, Huawei and Amazon for excellent products based on depth cameras,

- Texel for the development of high-tech Russian products with depth cameras,

- personally Konstantin Kozhemyakov, who did a lot to make this article better and clearer,

- and, finally, many thanks to Roman Kazantsev, Yevgeny Lyapustin, Yegor Sklyarov, Maxim Fedyukov, Nikolay Oplachko and Ivan Molodetsky for a large number of practical comments and edits that made this text much better!

Source: https://habr.com/ru/post/457524/
